Abstract

Text-based question-answering (QA in short) is a popular application on multimedia environments. In this paper, we mainly focus on the multi-paragraphs QA systems, which can retrieve many candidate paragraphs to feed into the extraction module to locate the answers in the paragraphs. However, according to our observations, there are no real answer in many candidate paragraphs. To filter these paragraphs, we propose a multi-level fused sequence matching (MFM in short) model through deep network methods. Then we construct a distant supervision dataset based on Wikipedia and carry out several experiments on that. Also we use another popular sequence matching dataset to test the performance of our model. Experiments show that our MFM model can outperform recent models not only on the filtering candidates in multi-paragraphs QA task but also on the sequence matching task.

Similar content being viewed by others

Keywords

1 Introduction

Question-answering (QA in short) on text is a longtime hot topic in Natural Language Processing (NLP in short). We can simply categorize it into single-paragraph QA and multi-paragraphs QA. On the single-paragraph datasets such as Stanford Question Answering Dataset (SQuAD), top models can even exceed human performance using read comprehension (RC in short) models [4, 9, 13], in which every answer to the questions must be located in a given paragraph. So recently multi-paragraphs QA turns to be a popular research topic, where the datasets can be very large-scale and complex such as WikipediaFootnote 1. Such QA systems usually attempt to combine the search engine with RC models, such as DrQA [1], in which question-related documents can be retrieved back as candidates by the search engine and the candidates answers can be extracted by the RC models. Since the documents are too long for the RC models to extract answers, document usually will be split into paragraphs one by one.

However, according to our observations, feeding the retrieval candidate paragraphs directly to the RC model will lead to a sharp reduction on precision of answer. To validate that, we have carried out series experiments on the codes of Drqa-masterFootnote 2, for which the result is depicted in the Fig. 1. In doing so, we carefully analyze the cause of precision reduction. Actually the problem is that few parts of paragraphs may cover the answer and a smaller part of paragraphs can make sure RC model extracts the answer correctly. In other words, lots of paragraphs returned by search are noise for RC model, while all of them are considered to contain the correct answer. Consequently, they disturb the selection of correct answer and cause the precision reduction. So we aim to filter these question-unrelated candidate paragraphs for this QA task.

The number of candidate paragraphs is denoted as \(N_{cp}\), while the ones that can provide correct answer by the RC model is denoted as \(N_{cp \sim \{c\}}\). We can observe that \(N_{cp} \gg N_{cp \sim \{c\}}\). The percentage of candidate paragraphs with correct answers after retrieving is denoted as \(P_{cp\sim \{r\}}\) and the percentage of candidate paragraphs with correct answers after extracting is denoted as \(P_{cp \sim \{e\}}\). Also we can observe that \(P_{cp\sim \{r\}} \gg P_{cp \sim \{e\}}\)

Actually, using the traditional retrieval methods (ex. BM25 variants.) [5, 8, 11] of search engine, question-related documents can be ranked so as to filter those with lower scores. However, these methods mainly rely on statistical features of words rather than the semantic features of sentences or paragraphs. In contrast, neural network models are suitable for such task by deep learning method to capture the complex semantic features. And the main task is to compute the similarity between question and paragraphs, which is a classical task of text matching in NLP. Unfortunately, existing neural network models [2, 6, 10, 14,15,16] for text matching tasks (Ex. textual entailment) can’t be directly adapted to this candidates filtration task. Because these tasks such as textual entailment and answer selection usually process short sequences and the two sequences to match are of similar lengths. However, the candidates filtration task is to match long sequences of paragraphs with the short sequences of questions, which is difficult to fuse their semantic information.

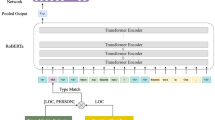

To address the problem, we proposes a multi-level fused sequence matching model for candidates filtering, which is referred to as MFM model. Compared with previous text matching model, the structure of MFM model is more complex which is more proper to fully capture semantic feature of paragraphs. This novel model is mainly consist of three parts: (i) multi-level fused encoding step for fusing word-embedding features, manual features and contextual features, (ii) alignment and comparison step which obtain the comparison features between paragraph and question through an attention mechanism, and (iii) aggregation and prediction step which aggregate all the features and make a final classification on the candidate paragraph. To validate our claim, we construct a distant supervised dataset for multi-paragraphs QA. Then we carry out several experiments on this dataset and public dataset on SNIL, which is a popular dataset for textual entailment task. Results show that our MFM model can outperform recent methods in precision scores on the two datasets.

The rest of this paper is organized as follows. Section 2 presents the overview and design details of our MFM model. Section 3 illustrates the experimental results. Section 4 discusses the related works. And finally Sect. 5 concludes the paper.

2 Model

In the multi-paragraphs QA system, search will return many paragraphs depend on question (recorded as \(Q= \{q_1, q_2, q_3,\ldots , q_j \ldots , q_m\}\)), while most candidate paragraphs are noise that will be feed into RC model to extract answers. So our MFM model aims to discriminate each paragraph (recorded as \(P= \{p_1, p_2, p_3,\ldots , p_i,\ldots , p_n\}\)) of them that is noise. Here, \(p_i\) and \(q_j\) are token(word) of sequence.

2.1 Multi-level Fused Encoding

Initial Encoding. Before precessing sequences with neural network, it is necessary to initially encode each token. Thus, we use the 300-dimensional GloVe [3] word embeddings as the representation of each token on word-level. Word embeddings can reflect the semantic relevance among words. Moreover, we append manual features to the embeddings for the tokens of sequence P, which have been proved to be effective on RC task [1]. Manual features of each token we used include part-of-speech (POS), name entity recognition (NER) tags, term frequency (TF) and a flag, which indicates whether the token appeared in the sequence Q (Fig. 2).

Multi-level Contextual Encoding. In order to obtain higher-level semantic information of each word in the context, we employ Bidirectional Long Short-Term Memory [7] (BiLSTM for short) to encoding each token from word-level embeddings. Considering that only one BiLSTM can not fully capture the contextual information of long sequence [4], we use multi-layer BiLSTM to obtain the contextual features for each token of sequence on multiple levels, which is formulated as Eqs. (1) and (2).

Here \(\varvec{emb}(.)\) is corresponding word embeddings while \(\varvec{f}(.)\) is the manual features. Thus \(\varvec{H}_0(.)\) is the initial encoding. \(\varvec{H}_k(.)\) is the \(k^{th}\) level contextual encoding for each token and \(\varvec{H}_{k+1}(.)\) depends on \(\varvec{H}_k(.)\) shown as Eq. (2). Compared with \(\varvec{H}_k(.)\), \(\varvec{H}_{k+1}(.)\) gathers more contextual information.

Fused Encoding. To obtain abundant features, we concatenate the initial word embeddings with each level contextual encoding as Eq.(3),

2.2 Attention Model Based Alignment and Comparison

Alignment. To compare the sequences of question and paragraph, we use the attention mechanism to align them [14, 16]. Taking into account the difference in length between P and Q, we employ a unidirectional attention to align question (Q) to paragraph (P). Before alignment, we measure similarity between token \(p_i\in P\) and \(q_j\in Q\) as Eqs. (4, 5) shown.

\(\varvec{W}\) and \(\varvec{b}\) are the learnable parameters, while \(\varvec{SimMatrix}\) is a \(m \times n\) sized matrix whose element \(s_{ij}\) means the similarity between \(p_i\) and \(q_j\).

\(\varvec{P}^{'}_k\) is the \(k^{th}\) level attentional representation of sequence Q, which is an alignment representation of sequence P.

Comparison. After alignment, we compare the features of the two sequences on corresponding level as follows:

For each level k, we use \(\varvec{sub}_k\) and \(\varvec{mul}_k\) as its comparison features.

2.3 Aggregation and Prediction

In the previous section, we obtained abundant information at different levels. In this section we aggregate the captured features and make a final prediction.

Multi-level Input. The features of \(k^{th}\) level can be treated as a 4-channel 2D feature map, whose channels contain \(\varvec{P}_k\), \(\varvec{P^{'}}_k\), \(\varvec{sub}_k\) and \(\varvec{mul}_k\). We stack up all the layers of feature maps and make up a 4K-channel 2D feature map which contains features (fusion features and comparison features) of all levels for the two sequences, which is formulated as Eqs. (10, 11). Here, K is the number of BiLSTM. Concat(., .) means stacked together on channel.

Convolution and Prediction. We aggregate all features by multi-layer 2D-CNN, which can effectively reduce the size of the feature map and capture the fine-grained features.

\(\varvec{CNN}(.)\) is a multi-layer CNN. After CNN processing, the FeatureMap is converted into a small sized map with x channels, and x is the number of convolutional kernels in CNN’s last layer. In each channel, the map is compressed into a vector by max pooling along rows which corresponds to the length of sequence. So, \(\varvec{v}\) is a x channels 1 dimensional image used to make prediction.

Through a full connection layer, we employ Softmax to predict the relation between two sequences. Flatten(.) means concatenate each row of the matrix into vector and \((\varvec{W}_p,\varvec{b}_p)\) is parameters to be trained.

3 Experiments and Evaluations

In this section, we evaluate our model on two different datasets: SNLI and a paragraphs filter dataset (PF) constructed from DrQA by distant supervision. SNLI is a traditional dataset on the task of textual entailment while the other dataset is for paragraphs filter of multi-paragraphs QA. Our work is mainly focused on the latter dataset, for which we add our work as a Filter to the DrQA.

3.1 Distant Supervised Dataset

In the DrQA, Retriever will search many paragraphs for each question, and Reader (the RC model) will extract the answer for each paragraph. For a given question, only few paragraphs can get the right answer, we give these paragraphs a right label, and the other paragraphs a wrong label shown as Table 1. We use questions in dev-set and train-set of SQuAD to construct dev-set and train-set of PF respectively.

Considering that wrong candidates are more than the right one, we replicate the right samples and the number of them will be equal to the wrong samples.

3.2 Baseline

Here, we will introduce two baselines for our work: DrQA-Reader [1] and CA Model [2]. The former is a RC model employed by DrQA and we make a slight change based on its network structure. The latter is a typical model for text matching task such as textual entailment. For SNLI, there are many other work, we just simply take the reported performance for the purpose of comparation.

DrQA-Reader. This model contains multi-level BiLSTM encoding and manual features. We only change the final prediction part, which merge the token features to obtain a single sequence feature by a self-attention mechanism for classification.

Here, \(\varvec{w}_{merge}\in \mathfrak {R}^{h}\), \(\varvec{b}_{merge}\in \mathfrak {R}^{m}\) are learnable parameters and \(\varvec{P}\in \mathfrak {R}^{h\times m}\) is the feature representation of paragraph (P in our work) while \(\varvec{p}_i\in \mathfrak {R}^{h}\) is the feature vector for \(i^{th}\) token of paragraph, where h is the size of feature vector and m is the length of paragraph. Finally, \(\varvec{v^{'}}\) will be used to make prediction as Eq. (14).

CA-Model. Wang et al. in [2] have proposed a generic Compare-Aggregate framework for text matching task such as textual entailment and answer selection. They apply a single BiLSTM to encode P and Q and then use unidirectional attention to compare them. Finally, only comparison features are aggregated by 1D CNN for prediction. We use the CA-model framework as benchmark on datasets SNLI and PF.

3.3 Result and Anlysis

We use accuracy as the evaluation metric for the text matching task, since there is only one correct label for each instance (shown as Table 2). But for the evaluation of multi-paragraphs QA pipeline, it will return many answers for each question. So we use recall for all questions which check whether the correct answer appears in returned top-n answers (shown as Table 3). To demonstrate each component’s property of our model, we performed ablation experiment (shown as Table 4).

We observe following from the results. Firstly, our model achieves the best performance on dataset PF and very competitive on the popular dataset SNLI. Secondly, our model as a Filter can improve the recall of pipeline of multi-paragraphs QA system, which indicates that the property of the pipeline of multi-paragraphs QA can be improved by removing amount of noise candidates. So our model is a generally for many text-matching cases and our work is effective for the multi-paragraphs QA systems.

4 Related Works

We review related work in two types of tasks for semantic information analysis of neural network.

4.1 Sequence Matching

Sequence matching task is very similar to our work on objective. The difference is the data situation. Mainstream sequence matching task such as textual entailment and answer selection usually faced with short sequence, which can be analyzed with involuted comparison features. [15] used fine-grained pair-wise comparison, and use CNN to capture the features of similarity matrix of two sequences. [6] tried to use soft-attention to compare two sequences and use 1D-CNN to capture features of sequence. Comparison and CNN works alternately. Soft-attention can mix different sequences’ information well, so it is widely used for TE [21] and AS [14, 22]. Based on previous works, [2] summed up a generic Compare-Aggregate framework for AS, TE and so on. And [10] used bidirectional attention to compare two sequences under Compare-Aggregate framework.

4.2 Machine Reading Comprehension

Reading comprehension (RC in short) task is similar to our work on data situation but the objective of task is different. On the task of RC, two sequences (question and paragraph) have different statuses because the prediction of answer is mainly implement on paragraph. Thus, [12] tackle cloze-type RC task (dataset CNN and DailyMail [23]) by encoding question into one feature vector and select answer in paragraph based on it. [13] tried to use bidirectional attention flow, however, in order to fuse all features into paragraph’s representation, it merge attentional features of question into one feature vector which will be affixed to paragraph’s each token vector. On account of information loss by merging question’s attentional features, [9] used two rounds of attentional alignment so as to avoid summarizing question.

5 Conclusion

In this work, we propose a multi-level fused sequence matching model (MFM model) to filter the noise candidates. Our model encode two sequences of question and paragraph on multi-levels and make fusion with initial encodings. Thus, it can fully capture contextual features for long sequences. Experimental results show that our model can get an outstanding performance compared with baseline on PF and be competitive on benchmark of SNLI.

References

Chen, D., Fisch, A., Weston, J., et al.: Reading wikipedia to answer open-domain questions, 1870–1879 (2017)

Wang, S., Jiang, J.: A compare-aggregate model for matching text sequences. In: Conference on ICLR 2017 (2017)

Pennington, J., Socher, R., Manning, C.: Glove: global vectors for word representation. In: Conference on Empirical Methods in Natural Language Processing, pp. 1532–1543 (2014)

Huang, H.Y., Zhu, C., Shen, Y., et al.: FusionNet: fusing via fully-aware attention with application to machine comprehension (2017)

Robertson, S., Zaragoza, H.: The probabilistic relevance framework: BM25 and beyond. Found. Trends\(\textregistered \) Inf. Retr. 3(4), 333–389 (2009)

Yin, W., Schütze, H., Xiang, B., et al.: ABCNN: attention-based convolutional neural network for modeling sentence Pairs. Comput. Sci. (2015)

Hochreiter, S., Schmidhuber, J.: Long short-term memory. In: Supervised Sequence Labelling with Recurrent Neural Networks, pp. 1735–1780. Springer, Heidelberg (1997)

Zaragoza, H., Craswell, N., Taylor, M.J., et al.: Microsoft Cambridge at TREC 13: web and hard tracks. In: TREC 2004 (2004)

Xiong, C., Zhong, V., Socher, R.: Dynamic coattention networks for question answering (2016)

Wang, Z., Hamza, W., Florian, R.: Bilateral multi-perspective matching for natural language sentences (2017)

Ponte, J.M., Croft, W.B.: A language modeling approach to information retrieval. In: Research and Development in Information Retrieval, pp. 275–281 (1998)

Kadlec, R., Schmid, M., Bajgar, O., et al.: Text understanding with the attention sum reader network, 908–918 (2016)

Seo, M., Kembhavi, A., Farhadi, A., et al.: Bidirectional attention flow for machine comprehension (2016)

Tan, M., Xiang, B., Zhou, B.: LSTM-based deep learning models for non-factoid answer selection. Comput. Sci. (2015)

He, H., Lin, J.: Pairwise word interaction modeling with deep neural networks for semantic similarity measurement. In: Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, pp. 937–948 (2016)

Yu, L., Hermann, K.M., Blunsom, P., et al.: Deep learning for answer sentence selection. Comput. Sci. (2014)

Bowman, S.R., Angeli, G., Potts, C., et al.: A large annotated corpus for learning natural language inference. Comput. Sci. (2015)

Feng, M., Xiang, B., Glass, M.R., et al.: Applying deep learning to answer selection: a study and an open task, 813–820 (2015)

Cheng, J., Dong, L., Lapata, M.: Long short-term memory-networks for machine reading (2016)

Wang, S., Jiang, J.: Learning natural language inference with LSTM (2015)

Rocktaschel, T., Grefenstette, E., Hermann, K.M., et al.: Reasoning about entailment with neural attention (2015)

Tan, M., Santos, C.D., Xiang, B., et al.: Improved representation learning for question answer matching. In: Meeting of the Association for Computational Linguistics, pp. 464–473 (2016)

Hermann, K.M., Kociský, T., Grefenstette, E., et al.: Teaching machines to read and comprehend, 1693–1701 (2015)

Chen, Q., Zhu, X., Ling, Z., et al.: Enhancing and combining sequential and tree LSTM for natural language inference, 1657–1668 (2016)

Parikh, A.P., Täckström, O., Das, D., et al.: A decomposable attention model for natural language inference, 2249–2255 (2016)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

Liu, Y. et al. (2018). MFM: A Multi-level Fused Sequence Matching Model for Candidates Filtering in Multi-paragraphs Question-Answering. In: Hong, R., Cheng, WH., Yamasaki, T., Wang, M., Ngo, CW. (eds) Advances in Multimedia Information Processing – PCM 2018. PCM 2018. Lecture Notes in Computer Science(), vol 11166. Springer, Cham. https://doi.org/10.1007/978-3-030-00764-5_41

Download citation

DOI: https://doi.org/10.1007/978-3-030-00764-5_41

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-00763-8

Online ISBN: 978-3-030-00764-5

eBook Packages: Computer ScienceComputer Science (R0)