Abstract

Anatomic models of kidneys can help surgeons plan and guide surgical procedures, and segmentation is a prerequisite for building them. We develop a convolutional neural network to segment multiple renal structures from arterial-phase CT images, including parenchyma, arteries, veins, collecting systems, and abnormal structures. To the best of our knowledge, this is the first work dedicated to jointly segmenting these five renal structures. We introduce two novel techniques. First, we generalize the sequential residual architecture to residual graphs. With this generalization, we convert a popular multi-scale architecture (U-Net) to a residual U-Net. Second, we address the unbalanced data problem, which commonly exists in medical image segmentation, by weighting pixels with multi-scale entropy. Our multi-scale entropy map combines information theory and scale analysis to capture the spatial complexity of a multi-class label map. The two techniques significantly improve segmentation accuracy. Trained on 400 CT scans and tested on another 100, our algorithm achieves median Dice indices of 0.96, 0.86, 0.80, 0.62, and 0.29 for renal parenchyma, arteries, veins, collecting systems, and abnormal structures, respectively.


1 Introduction

In 2017, kidney cancer accounted for an estimated 63,990 new cases and 14,400 deaths in the United States alone [1]. Laparoscopic partial nephrectomy has become increasingly popular because it preserves the healthy part of the kidney and is less invasive. Minimally invasive surgeries performed with da Vinci™ surgical systems have increased significantly over the past 20 years, with approximately 877,000 procedures in 2017.

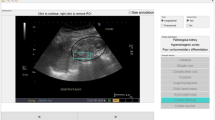

The da Vinci™ system not only offers surgeons dexterity and precision, but is also a great platform for image-guided surgery. The system has a stereo endoscope and a stereo viewer that make augmented reality possible. The endoscope is held by a robotic arm, which provides a stable view, and its position is tracked in real time through the robotic kinematics. The TilePro™ feature, through which external videos can be piped in, enables augmented reality without further integration.

Visualizing segmented renal structures (such as parenchyma, arteries, veins, ureters, and tumors) in 3D has many clear advantages over native CT images. Pre-operatively, it can help surgeons assess the 3D relationship of different structures and create the best plan. Intra-operatively, the model can provide real-time guidance. More specifically, there are three benefits: (1) It enables selective arterial clamping to improve the health of the preserved part of the kidney, compared to total clamping in complex procedures that need more time. (2) It enables awareness of critical structures (major blood vessels and collecting systems) close to a tumor and helps reduce surgical complications. (3) Overlaying tumors that are not visible from outside the kidney can help surgeons localize tumors quickly in the absence of tactile feedback.

Image-guided laparoscopic partial nephrectomy has been studied in many previous works [2, 3]. The reported works focus on registration and augmented reality. Efficient and low-cost segmentation of the renal structures is a prerequisite for any image guidance. Manual segmentation with interactive tools is time-consuming, which hinders the adoption of image guidance. In this work, we automate the segmentation of renal structures.

To assist surgical planning and guidance, we segment the following five structures: kidney parenchyma, renal arteries (including aorta), renal veins (including IVC), collecting systems (including renal calyces, renal pelvis and ureters), and abnormal structures (including tumors and cysts). We use arterial-phase CT images as the input because of their wide availability across renal scan protocols. Kidney parenchyma and arteries have higher contrast in this phase, while the other structures are not enhanced and are thus difficult to disambiguate from the background.

Previous works on kidney segmentation employed random forests [4, 5], multi-atlases [6], and deep learning [7, 8]. Some [4,5,6,7] focused on segmentation of the kidney body, while others [8] focused on blood vessels and collecting systems. Deep convolutional neural networks have revolutionized many image analysis tasks, including medical image segmentation. A fully convolutional network [9,10,11], whose output is a probabilistic map of the same size as the input, is an end-to-end approach that solves feature learning and classification jointly.

We develop a single convolutional neural network to segment all the aforementioned structures. Our network structure is inspired by the popular U-Net [9] and the ResNet [12]. Our work presents a number of contributions:

- To the best of our knowledge, this is the first work dedicated to jointly segmenting the kidney body and its internal structures.

- We generalize the sequential residual architecture [12] to directed acyclic graphs. With this generalization, we convert a popular multi-scale architecture, U-Net [9], to a residual U-Net.

- We propose an elegant approach to encode the importance of pixels, which addresses both the inter-class and the intra-class unbalanced data problems that are common in medical image segmentation.

- We show notable improvement made by the two new techniques on a dataset of 500 CT images.

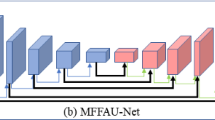

2 Residual U-Net

2.1 Residual Graphs

The residual architecture [12] has significantly improved deep neural networks’ performance in image recognition. The original residual network (ResNet) is a sequential accumulation of many (possibly nonlinear) functions, as shown in Fig. 1(a). It directly propagates the optimization gradient from the end to the beginning, and thus leads to a better training process.

Fig. 1. (a) An example of a sequential residual network. (b) An example of a directed acyclic graph. (c) The residual graph derived from (b). (d) The U-Net architecture. Downward arrows represent down-sampling or strided convolutions. Upward arrows represent up-sampling or deconvolution. (e) The Res. U-Net architecture. It consists of two threads. The first thread is scale-specific features: the green channels. It follows a similar architecture to the U-Net. The second thread is the residual architecture, including the red, the orange and the blue channels.

However, not all models are sequential, for example, the popular U-Net [9], as shown in Fig. 1(d). In the model, the output of filter \( f_{pre} \) takes two paths. One goes down, forwarding information from fine scales to coarse scales. The other merges at filter \( f_{post} \) with context information extracted from coarse scales. Such a structure is not sequential, but a directed acyclic graph with branching and merging.

We propose the following residual architecture for directed acyclic graphs, which is fully compatible with the original sequential one. Let \( G\, = \,\left\{ {V,\,\vec{E}} \right\} \) be a directed acyclic graph where \( V \) is its set of vertices and \( \vec{E} \) is its set of directed edges. Given a vertex \( a\, \in \,V \), let \( {\text{acs}}\left( a \right) \) denote the ancestors of \( a \), that is, the vertices in \( V \), excluding \( a \) itself, which can reach \( a \) via directed paths in \( \vec{E} \). The residual graph derived from \( G \) is composed of functions on vertices. The function \( f_{a} \) on a vertex \( a \) takes the summation of the outputs of the functions on \( {\text{acs}}\left( a \right) \) as its input, that is:
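One way to write this input rule is given below. The symbol \( x_{a} \) for the input of \( f_{a} \) is assumed notation introduced here for illustration, and the treatment of vertices without ancestors (they read the network input) is likewise an assumption:

\[ x_{a} \; = \sum_{b \, \in \, {\text{acs}}\left( a \right)} f_{b} \left( x_{b} \right) \]

Under this reading, a chain of vertices whose first vertex is the identity on the network input gives \( x_{k + 1} = x_{k} + f_{k} \left( x_{k} \right) \), which is the familiar sequential residual form.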

For instance, Fig. 1(c) shows the residual graph derived from the graph in Fig. 1(b). In Fig. 1(c), the input of \( f_{4} \) is the summation of \( f_{1} \) and \( f_{2} \); the input of \( f_{5} \) is the summation of \( f_{2} \) and \( f_{3} \); the input of \( f_{6} \) is the summation of \( f_{1} \), \( f_{2} \), …, \( f_{5} \).
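The ancestor-summation rule can be checked on a small example. The following is a minimal sketch, not the authors' implementation: the edge list `EDGES` is an assumption inferred from the inputs described for \( f_{4} \), \( f_{5} \) and \( f_{6} \), the functions are toy scalar maps, and feeding `source_input` to vertices without ancestors is also an assumption.

```python
from collections import defaultdict


def ancestors(edges):
    """Map every vertex to acs(v): the vertices that can reach v, v excluded."""
    parents = defaultdict(set)
    vertices = set()
    for src, dst in edges:
        parents[dst].add(src)
        vertices.update((src, dst))

    cache = {}

    def visit(v):
        if v not in cache:
            found = set()
            for p in parents[v]:
                found.add(p)
                found |= visit(p)
            cache[v] = found
        return cache[v]

    return {v: visit(v) for v in vertices}


def evaluate(edges, functions, source_input):
    """Evaluate a residual graph: each vertex reads the sum of its ancestors' outputs."""
    acs = ancestors(edges)
    inputs, outputs = {}, {}
    # An ancestor always has strictly fewer ancestors than its descendant,
    # so sorting by ancestor count yields a valid evaluation order.
    for v in sorted(acs, key=lambda u: len(acs[u])):
        if acs[v]:
            inputs[v] = sum(outputs[b] for b in acs[v])
        else:
            inputs[v] = source_input  # assumption: sources read the network input
        outputs[v] = functions[v](inputs[v])
    return inputs, outputs


# Edge set assumed for the graph of Fig. 1(b), inferred from the text.
EDGES = [(1, 4), (2, 4), (2, 5), (3, 5), (4, 6), (5, 6)]
# Toy scalar functions standing in for the (convolutional) vertex functions.
FUNCTIONS = {v: (lambda x: 2.0 * x) for v in range(1, 7)}

inputs, outputs = evaluate(EDGES, FUNCTIONS, source_input=1.0)
```

With this edge set, `ancestors(EDGES)` reproduces the ancestor sets stated in the text: {1, 2} for vertex 4, {2, 3} for vertex 5, and {1, ..., 5} for vertex 6.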

2.2 Multi-scale Residual Network

We combine the residual graph with the U-Net into a Res. U-Net, as shown in Fig. 1(e). Data flow from fine scales to coarse scales through two channels: the green which is scale-specific features and the red which is the accumulation of supervision features. The green and the red channels, concatenated together, are projected to a high-dimensional feature space through \( f_{preproj} \), and then compressed by \( f_{presup} \). The output of \( f_{presup} \) is added to the red channel to keep the accumulation updated. The green channel after \( f_{preproj} \) and the red channel after \( f_{presup} \), are forwarded to a coarser scale \( k\, + \,1 \), respectively through strided convolution and down-sampling. Data flow from coarse scales to fine scales through two channels, the green, and the blue, respectively through de-convolution and up-sampling. The blue is the accumulation of supervision features from the coarsest scale to the current scale. The blue channel is added to the red channel to keep the accumulation updated. The accumulated features, concatenated with green channels, are projected to a high-dimensional feature space through \( f_{postproj} \), and then compressed by \( f_{postsup} \). The outputs of \( f_{presup} \) and \( f_{postsup} \) are added to the blue channel to keep it as the accumulation. When the blue channel travels to the first scale, it is the accumulation of all scales.

The Res. U-Net consists of two threads. The first thread is scale-specific features: the green channels. It follows a similar architecture to the U-Net. It branches at \( f_{preproj} \) and then merges at \( f_{postproj} \). The second thread is the residual architecture, including the red, the orange and the blue channels. We consider the pair of \( f_{preproj} \) and \( f_{presup} \) and the pair of \( f_{postproj} \) and \( f_{postsup} \) as processing units in the residual architecture. \( f_{presup} \) or \( f_{postsup} \) produces the output of a processing unit. \( f_{preproj} \) or \( f_{postproj} \) produces intermediate features. The input to a processing unit always includes summation of \( f_{presup} \) or \( f_{postsup} \) from its ancestor processing units.
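The fine-to-coarse processing unit described above can be sketched with plain matrix products. This is only an illustration under stated assumptions: the per-voxel linear maps `w_proj` and `w_sup` stand in for the convolutional \( f_{preproj} \) and \( f_{presup} \), the ReLU nonlinearity and the channel counts are hypothetical, and the function name `pre_unit` is not from the paper.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def pre_unit(green, red, w_proj, w_sup):
    """One fine-to-coarse processing unit at a single scale.

    green:  (n_voxels, g) scale-specific features
    red:    (n_voxels, r) accumulated supervision features
    w_proj: (g + r, h)    stand-in for f_preproj (projection to h channels)
    w_sup:  (h, r)        stand-in for f_presup (compression back to r channels)
    """
    joint = np.concatenate([green, red], axis=1)  # concatenate green and red
    projected = relu(joint @ w_proj)              # output of f_preproj
    residual = projected @ w_sup                  # output of f_presup
    red_updated = red + residual                  # keep the accumulation updated
    # projected is forwarded as the green channel, red_updated as the red channel.
    return projected, red_updated


rng = np.random.default_rng(0)
green = rng.normal(size=(5, 3))
red = rng.normal(size=(5, 2))
w_proj = rng.normal(size=(5, 8))
w_sup = rng.normal(size=(8, 2))
projected, red_updated = pre_unit(green, red, w_proj, w_sup)
```

The point of the sketch is the additive update of the red channel: the unit never overwrites the accumulated features, it only adds its compressed output to them.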

Parameters of the Res. U-Net used in our experiment are in Table 1.

3 Multi-scale Categorical Entropy

Uniform weighting in model training may yield unsatisfactory segmentation. For example, it biases a model toward labelling pixels as a class that is overwhelmingly larger than the others [13]. Besides inter-class imbalance, uniform weighting is also problematic for pixels within a class. For example, it undesirably makes thin vessels less important than thick ones simply because the former occupy fewer pixels. Intuitively, we would like to have a pixel-wise weight map with the following properties: (1) edges are important because they define the boundary; (2) complicated structures, though possibly small, are important, for example, branching thin vessels; (3) the further away a point is from the foreground, the less important it is.

We propose using multi-scale entropy to weight pixels. Given a multi-channel probabilistic map \( y \) and a series of scale levels \( \sigma_{0} \) < … < \( \sigma_{n} \), the multi-scale entropy \( s \) is defined as:

where \( \kappa_{\sigma } \) is a Gaussian kernel of width \( \sigma \), and \( y_{{\sigma_{i} }} \left( p \right)\left[ c \right] \) is the \( c \)-th channel’s probability at a point \( p \) in \( y_{{\sigma_{i} }} \). Equation (1) generates a series of smoothed probabilistic maps \( y_{\sigma } \) from the original map \( y \). Equation (2) calculates the relative entropy between two smoothed probabilistic maps \( y_{{\sigma_{i} }} \) and \( y_{{\sigma_{i + 1} }} \). It captures how complex the label map is at a scale. Equation (3) smooths the relative entropy map to the resolution of \( \sigma_{i + 1} \). Equation (4) sums up the relative entropy at different scales. \( \sigma_{0} \), …, \( \sigma_{n} \) usually increase exponentially. Figure 2 shows an entropy map derived with \( \sigma = \) 1, 2, 4, 8, 16, 32, 64, 128, and 256. It emphasizes edges, complicated structures, and areas near the foreground.
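Based on the verbal description of Eqs. (1) to (4), the four steps can be written in the following form. This is a reconstruction under assumptions rather than a verbatim statement: the intermediate symbols \( r_{{\sigma_{i} }} \) and \( s_{{\sigma_{i} }} \), the use of the natural logarithm, and the direction of the relative entropy (from the finer map to the coarser one) are all assumed, and \( * \) denotes spatial convolution applied to each channel.

\[ y_{\sigma } = \kappa_{\sigma } * y \tag{1} \]

\[ r_{{\sigma_{i} }} \left( p \right) = \sum\limits_{c} {y_{{\sigma_{i} }} \left( p \right)\left[ c \right]\,\ln \frac{{y_{{\sigma_{i} }} \left( p \right)\left[ c \right]}}{{y_{{\sigma_{i + 1} }} \left( p \right)\left[ c \right]}}} \tag{2} \]

\[ s_{{\sigma_{i} }} = \kappa_{{\sigma_{i + 1} }} * r_{{\sigma_{i} }} \tag{3} \]

\[ s = \sum\limits_{i = 0}^{n - 1} {s_{{\sigma_{i} }} } \tag{4} \]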

Fig. 2. An example of a multi-scale entropy map. (a) A patch of a CT image. (b) Manual segmentation, with green for parenchyma, red for arteries, blue for veins, yellow for collecting systems and purple for masses. (c) The entropy map derived with smoothing kernel sizes 1, 2, 4, 8, 16, 32, 64, 128, and 256. It emphasizes edges, complicated structures, and areas near the foreground.
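The multi-scale entropy map can be prototyped in a few lines of NumPy. The sketch below follows the four steps described in the text (smooth, relative entropy between consecutive scales, smooth again, sum) under several assumptions that are not from the paper: the relative entropy is taken from the finer map to the coarser one, the Gaussian kernel is truncated at three standard deviations with edge padding, and the small constant `eps` guards the logarithm. The function names are hypothetical.

```python
import numpy as np


def gaussian_smooth(volume, sigma):
    """Separable Gaussian smoothing over all axes, with edge padding."""
    radius = max(1, int(3 * sigma))  # assumption: truncate at 3 sigma
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-0.5 * (x / sigma) ** 2)
    kernel /= kernel.sum()
    out = volume.astype(float)
    for axis in range(out.ndim):
        pad = [(0, 0)] * out.ndim
        pad[axis] = (radius, radius)
        padded = np.pad(out, pad, mode="edge")
        out = np.apply_along_axis(
            lambda line: np.convolve(line, kernel, mode="valid"), axis, padded
        )
    return out


def multiscale_entropy(labels, n_classes, sigmas, eps=1e-8):
    """Weight map from a label map: sum of smoothed relative entropies across scales."""
    # One-hot probabilistic map y with shape (n_classes, *labels.shape).
    y = np.stack([(labels == c).astype(float) for c in range(n_classes)])
    # Smoothed maps y_sigma, one per scale, smoothed channel by channel.
    smoothed = [np.stack([gaussian_smooth(ch, s) for ch in y]) for s in sigmas]
    total = np.zeros(labels.shape)
    for i in range(len(sigmas) - 1):
        fine, coarse = smoothed[i], smoothed[i + 1]
        # Relative entropy between consecutive scales at every point.
        rel = np.sum(fine * np.log((fine + eps) / (coarse + eps)), axis=0)
        # Smooth the relative entropy map to the coarser resolution and accumulate.
        total += gaussian_smooth(rel, sigmas[i + 1])
    return total


# Toy 2D label map: a foreground square on a background.
labels = np.zeros((48, 48), dtype=int)
labels[16:32, 16:32] = 1
weights = multiscale_entropy(labels, n_classes=2, sigmas=(1, 2, 4))
```

On this toy map the weight near the edge of the square is larger than the weight in a far corner of the background, and a label map with a single class yields a map of zeros, in line with the properties listed in the text.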

4 Experiments

4.1 Data

The dataset consists of 500 subjects’ arterial-phase renal CT images. The average spacing is 0.757 mm × 0.757 mm × 0.906 mm, with standard deviation 0.075 mm × 0.075 mm × 0.327 mm. The dataset is randomly divided into two disjoint subsets, one of 400 subjects for training and the other of 100 subjects for testing.

The renal parenchyma, arteries, veins, collecting system, and abnormal structures are manually labelled. Abnormal structures (including tumors, cysts, etc.) are jointly labelled into one class “mass”. In total there are five foreground classes.

4.2 Methods and Evaluation

The following methods are compared: (1) the U-Net trained with uniform weighting on pixels; (2) the Res. U-Net trained with uniform weighting on pixels; (3) the Res. U-Net trained with multi-scale entropy weighting on pixels. All methods use multi-class cross entropy as their loss function to handle multiple anatomical structures. For method (3), the multi-scale entropy map derived from the manual segmentation is used to weight each pixel’s contribution to the loss function. Each method is trained for 5000 iterations, each on a batch of \( b\, = \,12 \) patches. 3D patches of size 67 × 67 × 67 are randomly and evenly sampled from each class (including the background), and randomly rotated. The learning rate is initially 0.00025 * \( \sqrt {b - 1} \), and exponentially decays to 0.00001 * \( \sqrt {b - 1} \) at the last batch. The Adam optimizer [14] is employed to minimize the loss function. It takes about 24 h to train a model with a Quadro P6000 GPU.
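Two of the training details above lend themselves to a short sketch: the exponentially decaying learning rate and the pixel-weighted cross entropy. The code below is illustrative only. The exact decay formula (geometric interpolation over the batch index) and the normalization of the weighted loss by the sum of weights are assumptions, as are the function names.

```python
import math


def learning_rate(step, n_steps=5000, batch_size=12, lr_start=0.00025, lr_end=0.00001):
    """Exponential decay from lr_start*sqrt(b-1) at step 0 to lr_end*sqrt(b-1) at the last step."""
    scale = math.sqrt(batch_size - 1)
    frac = step / (n_steps - 1)  # 0 at the first batch, 1 at the last batch
    return scale * lr_start * (lr_end / lr_start) ** frac


def weighted_cross_entropy(probs, labels, weights, eps=1e-12):
    """Pixel-weighted multi-class cross entropy.

    probs[i][c]: predicted probability of class c at pixel i
    labels[i]:   true class index at pixel i
    weights[i]:  weight of pixel i (e.g. its multi-scale entropy)
    """
    total = sum(-w * math.log(p[t] + eps) for p, t, w in zip(probs, labels, weights))
    # Assumption: normalize by the total weight so uniform weights give the mean loss.
    return total / max(sum(weights), eps)


first = learning_rate(0)
last = learning_rate(4999)
loss_uniform = weighted_cross_entropy([[0.5, 0.5], [0.9, 0.1]], [0, 0], [1.0, 1.0])
loss_weighted = weighted_cross_entropy([[0.5, 0.5], [0.9, 0.1]], [0, 0], [1.0, 0.0])
```

With uniform weights the function reduces to the ordinary mean cross entropy of method (2); with a weight of zero on the second pixel only the first pixel contributes.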

The following indices are used to evaluate the methods.

- AUC: the area under the receiver operating characteristic curve. For each foreground class, a binary mask is derived by thresholding its probabilistic map. Precision and sensitivity, defined as follows, are calculated by comparing the binary mask with the manual segmentation:

  \[ {\text{precision}} = \frac{TP}{TP + FP},\qquad {\text{sensitivity}} = \frac{TP}{TP + FN} \]

  where \( TP \), \( FP \) and \( FN \) are the numbers of true-positive, false-positive and false-negative pixels. This procedure is applied to every image, and then the precision and the sensitivity are averaged over all images. To eliminate the arbitrariness of the threshold value, different thresholds are used, from 0.05 to 0.95 with a step size of 0.05. The averaged precision and sensitivity pairs at different threshold values form a curve. The larger the area under the curve (AUC), the better a model is. The AUC is calculated separately for each class.

- Median Dice indices. Given the multi-class probabilistic maps of an image, a mutually exclusive multi-class segmentation can be derived by labelling each pixel with the class holding the highest probability. The Dice index, defined as follows, is calculated for each class:

  \[ {\text{Dice}} = \frac{2\,TP}{2\,TP + FP + FN} \]

  This procedure is applied to every image and the median over all images is reported.
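The two evaluation indices can be sketched in plain Python on flattened images. This is a minimal illustration, not the evaluation code used for the reported numbers: the strict `>` comparison at the threshold, the value returned when a denominator is zero, and the trapezoidal rule over the sampled points are assumptions, and all function names are hypothetical.

```python
from statistics import median

THRESHOLDS = [0.05 * k for k in range(1, 20)]  # 0.05, 0.10, ..., 0.95


def counts(pred, truth):
    tp = sum(1 for p, t in zip(pred, truth) if p and t)
    fp = sum(1 for p, t in zip(pred, truth) if p and not t)
    fn = sum(1 for p, t in zip(pred, truth) if not p and t)
    return tp, fp, fn


def precision_sensitivity(pred, truth):
    tp, fp, fn = counts(pred, truth)
    precision = tp / (tp + fp) if tp + fp else 1.0    # assumption for empty masks
    sensitivity = tp / (tp + fn) if tp + fn else 1.0  # assumption for empty truth
    return precision, sensitivity


def dice(pred, truth):
    tp, fp, fn = counts(pred, truth)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 1.0


def pr_points(prob_images, truth_images):
    """Averaged (sensitivity, precision) pair at every threshold, for one class."""
    points = []
    for thr in THRESHOLDS:
        pairs = [
            precision_sensitivity([p > thr for p in prob], truth)
            for prob, truth in zip(prob_images, truth_images)
        ]
        mean_precision = sum(a for a, _ in pairs) / len(pairs)
        mean_sensitivity = sum(b for _, b in pairs) / len(pairs)
        points.append((mean_sensitivity, mean_precision))
    return points


def curve_area(points):
    """Trapezoidal area under a curve given as (x, y) points."""
    pts = sorted(points)
    return sum((x1 - x0) * (y0 + y1) / 2 for (x0, y0), (x1, y1) in zip(pts, pts[1:]))


def argmax_labels(prob_maps):
    """prob_maps[c][i] is the probability of class c at pixel i."""
    n_classes = len(prob_maps)
    return [max(range(n_classes), key=lambda c: prob_maps[c][i])
            for i in range(len(prob_maps[0]))]


example_dice = dice([1, 1, 0, 0], [1, 0, 1, 0])
example_labels = argmax_labels([[0.7, 0.2], [0.3, 0.8]])
example_median = median([0.5, 1.0, 0.8])
```

A per-class AUC in the sense of the text would then be `curve_area(pr_points(prob_images, truth_images))`, and the median Dice index is `median` applied to the per-image `dice` values of that class.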

4.3 Results

Table 2 shows the accuracy of the three methods, evaluated on the testing dataset. All the methods achieve excellent accuracy (AUC ≥ 0.97, and Dice ≥ 0.94) for parenchyma, and high accuracy (AUC from 0.84 to 0.91, and Dice from 0.81 to 0.86) for arteries. They show relatively large variance for veins and collecting systems. For veins, the AUC ranges from 0.58 to 0.82 and the Dice ranges from 0.62 to 0.80. For collecting systems, the AUC ranges from 0.52 to 0.66 and the Dice ranges from 0.54 to 0.62. None of the models performs well on masses, with AUC from 0.05 to 0.22 and Dice from 0.06 to 0.29.

For all the classes, the Res. U-Net with entropy weighting performs the best, the Res. U-Net with uniform weighting ranks second, and the U-Net with uniform weighting ranks last. The Res. U-Net improves the accuracy for veins from AUC = 0.58 and Dice = 0.62 to AUC = 0.77 and Dice = 0.75, by more than 0.12. The entropy weighting further improves the accuracy to AUC = 0.82 and Dice = 0.80, by 0.05. The Res. U-Net improves the accuracy for collecting systems from AUC = 0.53 and Dice = 0.54 to AUC = 0.61 and Dice = 0.58, by about 0.04. The entropy weighting further improves the accuracy to AUC = 0.66 and Dice = 0.62, by another 0.04. The same trend can also be found for masses.

Most previous works [4,5,6,7] focused on the kidney body, with the best reported Dice index equal to 0.96 [4]. Our method achieves the same level of accuracy. We also evaluated the deeply supervised U-Net in [8] for blood vessels and collecting systems. Its AUCs are 0.78 for arteries, 0.45 for veins, and 0.32 for collecting systems. Please note that the evaluation conducted in [8] only involved connected components overlapping the ground truth, whereas the evaluation in this paper does not involve such post-processing that depends on the ground truth. Figure 3 shows visual examples of results by the Res. U-Net weighted by multi-scale entropy. Their Dice indices rank 50th and 75th, respectively, among the 100 images, where a smaller rank number implies a better Dice index. On average, it takes 17.7 s to segment a 3D volume.

5 Conclusions

The residual neural network significantly improves performance by propagating optimization forces directly to different layers. We generalize it from its original sequential structure to directed acyclic graphs. The proposed Res. U-Net, derived from the popular U-Net with a branching and merging structure, conducts multi-scale processing with the residual architecture. It significantly improves segmentation of renal veins and collecting systems.

In addition to inter-class balance, intra-class pixels should also be weighted according to their importance. Our multi-scale entropy captures the spatial complexity of a multi-class label map. It jointly considers distance, edges and shapes. The Res. U-Net trained with multi-scale entropy considerably improves accuracy for renal veins and collecting systems.

References

1. Cancer Stat Facts: Kidney and Renal Pelvis Cancer. https://seer.cancer.gov/statfacts/html/kidrp.html

2. Hughes-Hallett, A., et al.: Augmented reality partial nephrectomy: examining the current status and future perspectives. Urology 83, 266–273 (2014)

3. Su, L., Vagvolgyi, B., Agarwal, R., Reiley, C., Taylor, R., Hager, G.: Augmented reality during robot-assisted laparoscopic partial nephrectomy: toward real-time 3D-CT to stereoscopic video registration. Urology 73, 896–900 (2009)

4. Cuingnet, R., Prevost, R., Lesage, D., Cohen, L.D., Mory, B., Ardon, R.: Automatic detection and segmentation of kidneys in 3D CT images using random forests. In: Ayache, N., Delingette, H., Golland, P., Mori, K. (eds.) MICCAI 2012. LNCS, vol. 7512, pp. 66–74. Springer, Heidelberg (2012). https://doi.org/10.1007/978-3-642-33454-2_9

5. Khalifa, F., Soliman, A., Dwyer, A., Gimelfarb, G., ElBaz, A.: A random forest-based framework for 3D kidney segmentation from dynamic contrast-enhanced CT images. In: IEEE International Conference on Image Processing (2016)

6. Yang, G., et al.: Automatic kidney segmentation in CT images based on multi-atlas image registration. In: Annual International Conference of the IEEE on Engineering in Medicine and Biology Society (2014)

7. Sharma, K., et al.: Automatic segmentation of kidneys using deep learning for total kidney volume quantification in autosomal dominant polycystic kidney disease. Sci. Rep. 7, 2049 (2017)

8. Taha, A., Lo, P., Li, J., Zhao, T.: Kid-Net: convolution networks for kidney vessels segmentation from CT-volumes. In: Medical Image Computing and Computer-Assisted Intervention (2018)

9. Ronneberger, O., Fischer, P., Brox, T.: U-Net: convolutional networks for biomedical image segmentation. In: Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F. (eds.) MICCAI 2015. LNCS, vol. 9351, pp. 234–241. Springer, Cham (2015). https://doi.org/10.1007/978-3-319-24574-4_28

10. Merkow, J., Marsden, A., Kriegman, D., Tu, Z.: Dense volume-to-volume vascular boundary detection. In: Ourselin, S., Joskowicz, L., Sabuncu, M.R., Unal, G., Wells, W. (eds.) MICCAI 2016. LNCS, vol. 9902, pp. 371–379. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46726-9_43

11. Milletari, F., Navab, N., Ahmadi, S.-A.: V-Net: fully convolutional neural networks for volumetric medical image segmentation. In: International Conference on 3D Vision (2016)

12. He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: IEEE Conference on Computer Vision and Pattern Recognition (2016)

13. Huang, C., Li, Y., Loy, C.C., Tang, X.: Learning deep representation for imbalanced classification. In: IEEE Conference on Computer Vision and Pattern Recognition (2016)

14. Kingma, D., Ba, J.: Adam: a method for stochastic optimization. In: International Conference for Learning Representations


© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

Li, J., Lo, P., Taha, A., Wu, H., Zhao, T. (2018). Segmentation of Renal Structures for Image-Guided Surgery. In: Frangi, A., Schnabel, J., Davatzikos, C., Alberola-López, C., Fichtinger, G. (eds) Medical Image Computing and Computer Assisted Intervention – MICCAI 2018. MICCAI 2018. Lecture Notes in Computer Science(), vol 11073. Springer, Cham. https://doi.org/10.1007/978-3-030-00937-3_52


DOI: https://doi.org/10.1007/978-3-030-00937-3_52


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-00936-6

Online ISBN: 978-3-030-00937-3

eBook Packages: Computer Science, Computer Science (R0)