Abstract

Image set-based face recognition is receiving growing research interest in pattern recognition and machine learning. The central challenge is how to formulate a computable and discriminative model from the given data sets. In this paper, we propose a new method, called Bilinear Regression Classifier (BLRC), to address image set-based face recognition. BLRC classifies a given test set by choosing the category that simultaneously maximizes the distance to the unrelated subspace and minimizes the distance to the related subspace. In particular, the unrelated subspace is used to characterize the distances between the query set and the unrelated image sets, while the related subspace is used to characterize the distances between the query set and the related sets. In our work, the Mahalanobis metric, rather than the Euclidean metric, is exploited to compute the subspace distance. The subspace coefficient vectors are obtained by solving an Elastic-Net regularized regression model. Extensive experiments on several benchmark datasets are conducted to evaluate the recognition performance of the new method. The results show that BLRC achieves accuracies competitive with several state-of-the-art methods.

C.-X. Ren—This work is supported in part by the Science and Technology Program of Shenzhen under Grant JCYJ20170818155415617, the National Natural Science Foundation of China under Grants 61572536, and the Science and Technology Program of GuangZhou under Grant 201804010248.

1 Introduction

Face recognition has traditionally been posed as the problem of identifying a face from a single image, and good performance usually relies on smartly designed classifiers. A number of classifiers have been proposed, such as the Nearest Neighbor (NN) classifier [4], the local Support Vector Machine (SVM) approach [12], the Sparse Representation-based Classifier (SRC) [16] and Linear Regression Classification (LRC) [11]. These classifiers use a single test sample for classification and assume that images are taken in controlled environments. As a result, their performance depends on the representation of individual test samples. However, facial appearance changes dramatically under variations in pose, illumination, expression, etc. Images captured under controlled conditions may therefore not suffice for reliable recognition under the more varied conditions that occur in real surveillance and video retrieval applications. Recently there has been growing interest in face recognition from image sets. Instead of a single query image, a set of images of the same unknown individual is supplied, and the rich information in the image set is expected to improve the recognition rate.

Image set classification algorithms include parametric methods [1, 8, 14] and non-parametric methods [2, 3, 5,6,7, 9, 13, 17]. Parametric methods work in three steps:

1. They represent each image set by a probability density function.
2. They measure the similarity between image sets (probability distributions) with divergence functions.
3. They assign the test image set to the category of the closest training image set.

Parametric methods face various difficulties, and their recognition performance is often unsatisfactory. In recent years, researchers have therefore focused on non-parametric, model-free methods, which make no assumptions about the distribution of image sets. Subspace-based algorithms are a typical example of such methods.

This paper briefly reviews dual linear regression classification (DLRC) and then proposes bilinear regression classification (BLRC) for image set retrieval. For BLRC, we first define the unrelated subspace. Then, we introduce two strategies for constructing the unrelated subspace. Next, we compute the related and unrelated distance metrics. Finally, we introduce a combined metric, which yields two new classifiers corresponding to the two construction strategies. Experimental results show that BLRC performs better than DLRC and several state-of-the-art classifiers on several benchmarks.

2 Dual Linear Regression Classification

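Let \(a\) and \(b\) be the height and width of an image. Let two sets of (down-scaled) face images be represented by

\[X=[x_1,x_2,\cdots ,x_m]\in \mathrm{I\!R}^{ab\times m},\qquad Y=[y_1,y_2,\cdots ,y_n]\in \mathrm{I\!R}^{ab\times n},\]

where \(x_i\ (i=1,2,\cdots , m)\) and \(y_j\ (j=1,2,\cdots , n)\) are column vectors of size \(ab\).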

The column vectors of \(X\) and those of \(Y\) each determine a subspace. A "virtual" face image is assumed to lie at the intersection of the two subspaces. That is, the "virtual" face image vector \(V\) can be written as a linear combination of the column vectors of each image set. To calculate the distance between the two image sets, our task is to find the "virtual" face \(V\) and the coefficient vectors \(\alpha = (\alpha _1,\alpha _2, \cdots , \alpha _m)^T\), \(\beta =( \beta _1,\beta _2, \cdots , \beta _n)^T\) such that

\[V=X\alpha =Y\beta .\]

Since all down-scaled images are standardized into unit vectors, we further require that

\[\sum _{i=1}^{m}\alpha _i=1,\qquad \sum _{j=1}^{n}\beta _j=1.\]

Letting \(\hat{x}_i=x_i-x_m\ (i=1,2, \cdots , m-1)\) and \(\hat{y}_j=y_j-y_n\ (j=1,2, \cdots , n-1)\), we have

\[x_m+\sum _{i=1}^{m-1}\alpha _i\hat{x}_i=y_n+\sum _{j=1}^{n-1}\beta _j\hat{y}_j,\]

where \(\hat{\alpha }=(\alpha _1,\alpha _2, \cdots , \alpha _{m-1})^T\) and \(\hat{\beta }=(\beta _1,\beta _2, \cdots , \beta _{n-1})^T\) collect the free coefficients. Assume that there is an approximate solution \(\gamma =(\alpha _1,\alpha _2, \cdots , \alpha _{m-1},\beta _1,\beta _2, \cdots , \beta _{n-1})^T\in \mathrm{I\!R}^{(m+n-2)\times 1}\) of the equation

\[y_n-x_m=\hat{XY}\gamma ,\]

where \(\hat{XY}=[\hat{x}_1,\hat{x}_2, \cdots , \hat{x}_{m-1},-{\hat{y}_1},-{\hat{y}_2}, \cdots , -{\hat{y}_{n-1}}]\).

After the regression coefficient vector \(\gamma \) is estimated, the "virtual" face image can be reconstructed from the image set \(X\) and from the image set \(Y\). Specifically, the "virtual" face image \(V_X\) reconstructed from the image set \(X\) is

\[V_X=x_m+\sum _{i=1}^{m-1}\alpha _i\hat{x}_i,\]

while the "virtual" face image \(V_Y\) reconstructed from the image set \(Y\) is

\[V_Y=y_n+\sum _{j=1}^{n-1}\beta _j\hat{y}_j.\]

The difference between the two reconstructed "virtual" face images is exactly the (negated) residual of the linear regression equation, \(V_X-V_Y=-(y_n-x_m-\hat{XY}\gamma )\). The difference between the image sets \(X\) and \(Y\) can be expressed through the difference between the two reconstructions. We therefore use the residual to measure the distance between the subspaces of \(X\) and \(Y\), namely

\[D(X,Y)=\Vert V_X-V_Y\Vert _2=\Vert y_n-x_m-\hat{XY}\gamma \Vert _2.\]

The smaller \(D(X,Y)\) is, the closer the two image sets are to each other.
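The DLRC distance above can be sketched in a few lines of NumPy. This is a minimal sketch that assumes \(\gamma \) is estimated by ordinary least squares; the text only says an approximate solution is obtained. The function name `dlrc_distance` and the `normalize` flag are our own illustrative choices, not the authors' code.

```python
import numpy as np


def dlrc_distance(X: np.ndarray, Y: np.ndarray, normalize: bool = True) -> float:
    """Residual-based DLRC-style distance between image sets X (d x m) and Y (d x n).

    Each column is one vectorised image. With normalize=True every column is
    scaled to unit length, as the text assumes for down-scaled images.
    Least-squares estimation of gamma is an assumption of this sketch.
    """
    if normalize:
        X = X / np.linalg.norm(X, axis=0, keepdims=True)
        Y = Y / np.linalg.norm(Y, axis=0, keepdims=True)
    x_m, y_n = X[:, -1], Y[:, -1]
    X_hat = X[:, :-1] - x_m[:, None]          # x_hat_i = x_i - x_m
    Y_hat = Y[:, :-1] - y_n[:, None]          # y_hat_j = y_j - y_n
    XY_hat = np.hstack([X_hat, -Y_hat])       # d x (m + n - 2)
    # Least-squares estimate of gamma; lstsq also copes with a rank-deficient XY_hat.
    gamma, *_ = np.linalg.lstsq(XY_hat, y_n - x_m, rcond=None)
    residual = y_n - x_m - XY_hat @ gamma     # equals V_Y - V_X
    return float(np.linalg.norm(residual))
```

Both coefficient vectors sum to one. The residual therefore measures the gap between the affine hulls of the two sets, and the distance is zero whenever those hulls intersect.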

3 Bilinear Regression Classification

Inspired by DLRC, this section proposes bilinear regression classification (BLRC); a simple flowchart is shown in Fig. 1. The section is organized as follows:

- Subsect. 3.1 presents the concept of the unrelated subspace.
- Subsect. 3.2 describes two strategies for constructing the unrelated subspace.
- Subsect. 3.3 computes the related and unrelated distance metrics.
- Subsect. 3.4 describes the final distance metric used for classification, called the combined distance metric.

3.1 Definition of Unrelated Image Set Subspace

Definition 1

Suppose that the training set contains image sets from \(C\) classes and the test set contains a total of \(M\) image sets. For a given test image set, suppose we need to calculate its distance to the \(c^{th}\) training image set, where \(c=1,2, \cdots , C\). The \(c^{th}\) training image set has \(N_c\) image samples. If a set \(U\) also contains \(N_c\) samples, all drawn from the \(C-1\) classes other than the \(c^{th}\) class, then \(U\) is called the unrelated image set subspace of that test image set.

According to Definition 1, we need to select \(N_c\) image samples from the remaining \(C-1\) classes, excluding the \(c^{th}\) class, to construct the unrelated image set subspace. The next subsection describes how to construct it.

3.2 Constructions of the Unrelated Subspace

The \(c^{th}\) image set \(X^c\) in the training set is represented as

\[X^c=[x_1^c,x_2^c,\cdots ,x_{N_c}^c]\in \mathrm{I\!R}^{ab\times N_c}.\]

That is, the \(c^{th}\) image set in the training set defines a subspace, which is represented by \(X^c\).

The subspace \(X\) determined by all images in the training set is

\[X=[X^1,X^2,\cdots ,X^C]\in \mathrm{I\!R}^{ab\times L},\]

in which \(L=\sum _{c=1}^C{N_c}\).

The overall mean of the training image set \(X\) is

\[X_{mean}=\frac{1}{L}\sum _{c=1}^{C}\sum _{i=1}^{N_c}x_i^c.\]

The mean of the \(c^{th}\) training image set is \(X_{mean}^c=\frac{1}{N_c}\sum _{i=1}^{N_c}{x_i^c}\). Images in class \(c\) are centralized as \(\hat{x}_i^c=x_i^c-X_{mean}^c\ (c=1,2, \cdots , C;\ i=1,2, \cdots , N_c)\), and the centralized training image set \(\hat{X}\) is

\[\hat{X}=[\hat{x}_1^1,\cdots ,\hat{x}_{N_1}^1,\cdots ,\hat{x}_1^C,\cdots ,\hat{x}_{N_C}^C]\in \mathrm{I\!R}^{ab\times L}.\]

Similarly, the image subspace determined by the test image set \(Y\) is represented by

\[Y=[y_1,y_2,\cdots ,y_n]\in \mathrm{I\!R}^{ab\times n}.\]

For the image set \(Y\), the mean is \(y_{mean}=\frac{1}{n}\sum _{i=1}^n {y_i}\). The images are centralized as \(\hat{y}_i=y_i-y_{mean}\ (i=1,2, \cdots , n)\), and the centralized test image set \(\hat{Y}\) is

\[\hat{Y}=[\hat{y}_1,\hat{y}_2,\cdots ,\hat{y}_n].\]
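The centralization steps above can be illustrated with a short helper. The helper `centralize` is hypothetical. It assumes each class is given as a NumPy array with one image per column and uses 0-based class labels, so it illustrates the notation rather than reproducing the authors' code.

```python
import numpy as np


def centralize(class_sets, Y):
    """Centre each training class on its own mean and the test set on y_mean.

    class_sets: list of arrays X^c with shape (d, N_c); Y: array with shape (d, n).
    Returns (X, X_hat, labels, class_means, Y_hat, y_mean).
    The overall mean X_mean, if needed, is simply X.mean(axis=1).
    """
    X = np.hstack(class_sets)                                  # d x L, L = sum of N_c
    labels = np.concatenate(
        [np.full(Xc.shape[1], c) for c, Xc in enumerate(class_sets)]
    )                                                          # class index per column
    class_means = [Xc.mean(axis=1) for Xc in class_sets]       # X_mean^c
    X_hat = np.hstack([Xc - m[:, None] for Xc, m in zip(class_sets, class_means)])
    y_mean = Y.mean(axis=1)
    Y_hat = Y - y_mean[:, None]                                # centralized test set
    return X, X_hat, labels, class_means, Y_hat, y_mean
```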

Strategy 1. When calculating the Manhattan distance between the test image set and the \(c^{th}\) image set, the distance between \(y_{mean}\) and a training sample \(x_i\) can be computed as:

The distance metric set \(D\) of the training image set \(X\) and \(y_{mean}\) is as follows:

First, we remove the elements corresponding to the \(c^{th}\) class from \(D\), giving \(\hat{D}\in \mathrm{I\!R}^{1\times (L-N_c)}\). Then we sort the elements of \(\hat{D}\) in ascending order. From \(X\) we select the \(N_c\) samples \(x_i^p\ (p\ne c)\) that correspond to the \(N_c\) smallest distances in \(\hat{D}\), and these samples constitute the unrelated subspace \(U_c\).
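Strategy 1 can be sketched as a nearest-sample selection. The metric is an assumption. We use the L1 (Manhattan) distance, following our reading of the Strategy 1 text, because the paper's distance equation is not reproduced here. The function `unrelated_subspace_s1` and its arguments are hypothetical.

```python
import numpy as np


def unrelated_subspace_s1(X, labels, y_mean, c):
    """Strategy 1 sketch: pick the N_c non-class-c samples closest to y_mean.

    X: (d, L) training matrix, labels: (L,) class index of each column,
    y_mean: (d,) centre of the test set, c: class under consideration.
    Uses the L1 (Manhattan) distance, which is an assumption of this sketch.
    """
    D = np.abs(X - y_mean[:, None]).sum(axis=0)        # one distance per training sample
    n_c = int(np.sum(labels == c))                     # size of class c
    candidates = np.flatnonzero(labels != c)           # drop class-c entries -> D_hat
    order = np.argsort(D[candidates], kind="stable")   # ascending order
    chosen = candidates[order[:n_c]]                   # N_c smallest distances
    return X[:, chosen], chosen                        # U_c and its column indices
```

The sketch assumes the other classes together contain at least \(N_c\) samples. Otherwise fewer than \(N_c\) columns are returned.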

The classifier based on strategy 1 will be called bilinear regression classification-I (BLRC-I).

Strategy 2. When calculating the distance between the test image set and the \(c^{th}\) training image set, we assume that the training image set \(X\) and the test image set \(Y\) determine a "virtual" face image space. Unlike Strategy 1, Strategy 2 does not directly calculate the distance between each image in the training image set \(X\) and the center \(y_{mean}\) of the test image set. Instead, it projects each training image onto the "virtual" face space and calculates the distance between that projection and \(y_{mean}\).

To obtain the joint coefficient vector of the two image sets \(\hat{X}\) and \(\hat{Y}\), the joint image set \(E\) and the test vector \(e\) are constructed as:

Suppose that \(\theta \in \mathrm{I\!R}^{(L+n)\times 1}\) is the joint coefficient vector of \(\hat{X}\) and \(\hat{Y}\), which can be calculated by solving the optimization problem

where \( \lambda _1>0, \lambda _2>0\) and \(\lambda _1+\lambda _2=1\).
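The elastic-net problem for \(\theta \) can be solved in several ways. This is a minimal sketch that assumes the common objective \(\Vert e-E\theta \Vert _2^2+\lambda _1\Vert \theta \Vert _1+\lambda _2\Vert \theta \Vert _2^2\). The construction of \(E\) and \(e\) is not reproduced in this text, and cyclic coordinate descent is our choice of solver, not necessarily the authors'. The helper name `elastic_net_cd` is hypothetical.

```python
import numpy as np


def elastic_net_cd(E, e, lam1, lam2, n_iter=500):
    """Minimise ||e - E theta||_2^2 + lam1 ||theta||_1 + lam2 ||theta||_2^2
    by cyclic coordinate descent (assumed form of the elastic-net problem).
    """
    theta = np.zeros(E.shape[1])
    col_sq = (E ** 2).sum(axis=0)             # ||E_j||^2 for each column
    r = np.asarray(e, dtype=float).copy()     # residual e - E theta, with theta = 0
    for _ in range(n_iter):
        for j in range(E.shape[1]):
            r += E[:, j] * theta[j]           # residual without coordinate j
            rho = E[:, j] @ r
            # Exact 1-D minimiser: soft-threshold by lam1/2, then shrink by lam2.
            theta[j] = np.sign(rho) * max(abs(rho) - lam1 / 2.0, 0.0) / (col_sq[j] + lam2)
            r -= E[:, j] * theta[j]           # put the updated coordinate back
    return theta
```

Under the paper's constraint one would call it with, e.g., `lam1 = lam2 = 0.5`. The sketch itself does not enforce \(\lambda _1+\lambda _2=1\), and with `lam1 = 0` it reduces to ridge regression.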

After the regression coefficient vector \(\hat{\theta }\) is obtained, the Mahalanobis distance between the projection of each image in the training image set \(X\) onto the "virtual" face space and the center of the test image set can be expressed by the following equation:

The distance metric set \(D\) is formulated by

First, we remove the elements corresponding to the \(c^{th}\) class from \(D\), giving \(\hat{D}\in \mathrm{I\!R}^{1\times (L-N_c)}\). Then we sort the elements of \(\hat{D}\) in ascending order. From \(X\) we select the \(N_c\) samples \(x_i^p\ (p\ne c)\) that correspond to the \(N_c\) smallest distances in \(\hat{D}\), and these samples constitute the unrelated subspace \(U_c\).

The classifier based on strategy 2 will be called bilinear regression classification-II (BLRC-II).
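The Mahalanobis distance used in Strategy 2 can be sketched as below. This text does not say how the projections onto the "virtual" face space are formed or which covariance matrix is used. `P` (the projected training images) and `Sigma` are therefore hypothetical inputs, and the small ridge `eps` is our own assumption to keep the covariance invertible.

```python
import numpy as np


def mahalanobis_distances(P, center, Sigma, eps=1e-6):
    """Mahalanobis distance from each column of P (d x L) to `center` (d,).

    Sigma is a (d x d) covariance estimate. eps * I is added because image
    covariances estimated from few samples are usually singular (assumption).
    """
    diff = P - center[:, None]
    S = Sigma + eps * np.eye(Sigma.shape[0])
    sol = np.linalg.solve(S, diff)                     # S^{-1} (p_i - center) per column
    return np.sqrt(np.einsum("ij,ij->j", diff, sol))  # column-wise quadratic forms
```

With `Sigma` equal to the identity (and `eps=0`) the function reduces to the Euclidean distance. This makes explicit the contrast with the Euclidean metric drawn in the abstract.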

3.3 Related and Unrelated Distance Metric

Related Distance Metric. In Subsect. 3.2, we obtained the class mean \(X_{mean}^c\) for each class in the training set. After centralization, the training image set of class \(c\) becomes

\[\hat{X}_c=[\hat{x}_1^c,\hat{x}_2^c,\cdots ,\hat{x}_{N_c}^c].\]

Now we need to calculate the distance between the test image set \(\hat{Y}\) and the \(c^{th}\) image set \(\hat{X}_c\) in the training set. To obtain the joint regression coefficients of the two image sets, the joint image set \(S_r^c\) and the test vector \(s_r^c\) are constructed as:

and

Assume that \(\gamma ^c\in \mathrm{I\!R}^{(N_c+n)\times 1}\) is the joint regression coefficient vector of \(\hat{X}_c\) and \(\hat{Y}\). From the regression equation \(s_r^c=S_r^c \gamma ^c\), the solution for \(\gamma ^c\) is

Then, the reconstructed “virtual” face image \(r_1\) obtained from the \(c^{th}\) training image set \(\hat{X}_c\) is

The reconstructed “virtual” face image \(r_2\) obtained from the test image set \(Y\) is

Finally, the distance between \(r_1\) and \(r_2\) can be used to represent the distance between the test image set and the \(c^{th}\) image set in the training set, which is expressed by

That is, the residual of the linear regression equation \(s_r^c=S_r^c\gamma ^c\) can be used to represent the distance between the test image set and the \(c^{th}\) image set in the training set.
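The related distance can be sketched as follows. By analogy with DLRC, the sketch assumes the construction \(S_r^c=[\hat{X}_c,-\hat{Y}]\) and \(s_r^c=y_{mean}-X_{mean}^c\), which matches \(\gamma ^c\in \mathrm{I\!R}^{(N_c+n)\times 1}\), together with a least-squares solution for \(\gamma ^c\). The paper's own definitions are not shown here. The name `related_distance` is hypothetical.

```python
import numpy as np


def related_distance(Xc, Y):
    """Residual distance between a training set Xc (d x N_c) and a test set Y (d x n).

    Assumed construction: S = [Xc_hat, -Y_hat], s = y_mean - Xc_mean, so that
    Xc_mean + Xc_hat a = y_mean + Y_hat b  is equivalent to  s = S [a; b].
    """
    xc_mean, y_mean = Xc.mean(axis=1), Y.mean(axis=1)
    Xc_hat = Xc - xc_mean[:, None]
    Y_hat = Y - y_mean[:, None]
    S = np.hstack([Xc_hat, -Y_hat])                    # d x (N_c + n)
    s = y_mean - xc_mean
    gamma, *_ = np.linalg.lstsq(S, s, rcond=None)      # least-squares gamma^c
    a, b = gamma[: Xc.shape[1]], gamma[Xc.shape[1]:]
    r1 = xc_mean + Xc_hat @ a                          # reconstruction from the training set
    r2 = y_mean + Y_hat @ b                            # reconstruction from the test set
    return float(np.linalg.norm(r1 - r2))              # equals ||s - S gamma||
```

Calling the same function with the unrelated subspace \(U_c\) in place of the class-\(c\) set gives the unrelated distance \(d_u^c\) under the same assumptions. The centred columns sum to zero, so \(S_r^c\) is rank deficient. `lstsq` then returns the minimum-norm solution, and the residual is unaffected.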

Unrelated Distance Metric. The unrelated image set subspace \(U_c\) of the test image set was obtained in Subsect. 3.2. The mean vector of \(U_c\) is

After centralization, the unrelated image set subspace \(U_c\) can be converted to

Now we need to calculate the distance between the test image set \(\hat{Y}\) and the unrelated image set subspace \(U_c\). To obtain the joint regression coefficients of the two image sets, the joint image set \(S_u^c\) and the test vector \(s_u^c\) are constructed as

and

Assume that \(\delta ^c\in \mathrm{I\!R}^{(N_c+n)\times 1}\) is the joint regression coefficient vector of \(\hat{U}_c\) and \(\hat{Y}\). From the regression equation \(s_u^c=S_u^c \delta ^c\), the solution for \(\delta ^c\) is

Then, the reconstructed “virtual” face image \(r_1\) obtained from the unrelated image set subspace \(\hat{U}_c\) is

The reconstructed “virtual” face image \(r_2\) obtained from the test image set \(Y\) is

Finally, the distance between \(r_1\) and \(r_2\) can be used to represent the distance between the test image set and the unrelated image set subspace, which is expressed by

That is, the residual of the linear regression equation \(s_u^c=S_u^c\delta ^c\) can be used to represent the distance between the test image set and the unrelated image set subspace.

3.4 Combined Distance Metric

After obtaining the related distance metric \(d_r^c\) and the unrelated distance metric \(d_u^c\), we construct a discriminative criterion by combining the two in a suitable manner. If the test image set belongs to category \(c\), we expect the test image set \(\hat{Y}\) to be close to the \(c^{th}\) image set \(\hat{X}_c\), that is, \(d_r^c\) should be as small as possible. On the other hand, we expect \(\hat{Y}\) to be far from the unrelated image set \(\hat{U}_c\), that is, \(d_u^c\) should be as large as possible. We therefore propose a new metric \(d_p^c\) as

The smaller the value of \(d_p^c\), the more similar the test image set is to the \(c^{th}\) image set. In other words, our face image set recognition criterion assigns the test image set to the category \(c\) for which \(d_p^c\) is minimal, i.e.,

\[c^{*}=\arg \min _{c=1,\cdots ,C}\ d_p^c.\]
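The decision rule can be sketched once \(d_r^c\) and \(d_u^c\) are available for every class. The combination below, the ratio \(d_r^c/d_u^c\), is a hypothetical choice that follows the stated intuition of a small related distance and a large unrelated distance. The exact form of \(d_p^c\) is not reproduced in this text, and `blrc_decide` is an illustrative name.

```python
import numpy as np


def blrc_decide(d_r, d_u, eps=1e-12):
    """Pick the class minimising a combined metric d_p^c.

    HYPOTHETICAL combination: d_p^c = d_r^c / d_u^c, which is small when the
    related distance is small and the unrelated distance is large.
    """
    d_r = np.asarray(d_r, dtype=float)
    d_u = np.asarray(d_u, dtype=float)
    d_p = d_r / (d_u + eps)            # eps guards against division by zero
    return int(np.argmin(d_p)), d_p    # c* = argmin_c d_p^c, plus all scores
```

Consider `blrc_decide([0.5, 0.4, 0.9], [2.0, 0.5, 3.0])`. It returns class 0: class 1 has the smallest related distance but loses because its unrelated distance is also small.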

4 Experimental Results

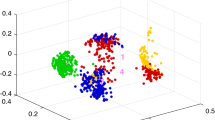

This section reports experimental results that evaluate the two proposed classifiers, BLRC-I and BLRC-II, on several benchmark datasets:

- image-based face recognition on the LFW face database [18];
- image-based face recognition on the AR face database [10];
- video-based face recognition on the Honda/UCSD face database [8].

4.1 Experiments on LFW

The LFW face database was captured in unconstrained environments. Its face images therefore exhibit large variations in pose, age, race, facial expression, lighting, occlusion, background, etc. We use the aligned version of the database, LFW-a, to evaluate recognition performance.

LFW-a contains more than 5,000 subjects, and the images of each subject show the same individual in different poses. All images in LFW-a are of size \(250\times 250\). We manually crop them to \(90\times 78\) by removing 88 pixel margins from the top, 72 from the bottom, and 86 from both the left and right sides. A subset of LFW containing 62 persons, each with more than 20 face images, is used to evaluate the algorithms. Our experimental setting is identical to that in [3]. The first 10 images of each subject form the training set, and the last 10 images are used as probe images.
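The LFW-a crop is simple slicing arithmetic: \(250-88-72=90\) rows and \(250-2\cdot 86=78\) columns. Below is a minimal sketch with a hypothetical helper name. The subsequent down-scaling to \(10\times 10\) or \(15\times 10\) is omitted because the interpolation method is not stated.

```python
import numpy as np


def crop_lfw_a(img: np.ndarray) -> np.ndarray:
    """Crop a 250 x 250 LFW-a image to 90 x 78 by removing fixed margins
    (88 px top, 72 px bottom, 86 px on the left and on the right)."""
    if img.shape[:2] != (250, 250):
        raise ValueError("expected a 250 x 250 LFW-a image")
    top, bottom, side = 88, 72, 86
    return img[top:250 - bottom, side:250 - side]   # rows 88..177, cols 86..163
```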

The proposed classifiers are compared with the following methods:

- sparse approximated nearest points (SANP) [5, 6];
- affine hull based image set distance (AHISD) [2];
- convex hull based image set distance (CHISD) [2];
- manifold discriminant analysis (MDA) [13];
- dual linear regression classification (DLRC) [3];
- pairwise linear regression classification (PLRC) [19].

As in [3], all methods use images down-scaled to \(10\times 10\) and \(15\times 10\). The classification results of all methods are reported in Table 1. For \(10\times 10\) images, BLRC-I matches MDA and PLRC-I with a recognition rate of 93.55\(\%\), which exceeds the other compared classifiers. BLRC-II reaches 98.39\(\%\), the best rate among all compared methods. For \(15\times 10\) images, BLRC-I reaches 96.77\(\%\) and BLRC-II reaches 98.39\(\%\). As Table 1 shows, BLRC-II outperforms the other classifiers.

4.2 Experiments on AR

In this section, we study the performance of the proposed classifiers on the well-known AR database, which contains over 4000 face images of 126 subjects (70 men and 56 women). The face images of each individual cover different expressions, lighting conditions, and occlusions by sunglasses and scarves. We use the cropped AR database, which includes 2600 face images of 100 individuals. First, we manually crop the images to a size of \(90\times 70\) by removing 38 pixel margins from the top, 39 from the bottom, 24 from the left and 25 from the right. Then we down-scale the cropped images to a resolution of \(40\times 40\). In the experiments, the first 13 images of each subject form the training image set, and the remaining 13 images form the test image set.

For this database, the proposed classifiers are compared with the following state-of-the-art approaches: SANP [5, 6], AHISD [2], CHISD [2], DLRC [3] and PLRC [19]. The recognition rates of the different classifiers are presented in Table 2. BLRC-I and BLRC-II both reach a recognition accuracy of 97.98\(\%\), a clear improvement over the other algorithms.

4.3 Honda/UCSD Face Database

The Honda/UCSD dataset contains 59 video clips of 20 subjects [8], and all but one subject have at least two videos. Twenty videos are used for training and the remaining 39 for testing. Video lengths range from 291 to 1168 frames. To keep the results comparable with previous work [6], we use the same face images.

This dataset has been used extensively for face recognition, and accuracies have reached or come close to 100\(\%\). Researchers have therefore turned to experimental settings that use only a small number of frames. We carry out the experiment using the first 50 frames of each video, taken from the data shared by [5]. For video clips with fewer than 50 frames, all frames are used. The following methods are chosen for comparison: DCC [7], MMD [15], MDA [13], AHISD [2], CHISD [2], MSM [17], SANP [5, 6], DLRC [3] and PLRC [19]. Table 3 lists the recognition rates of these classifiers on this database. The recognition rates of BLRC-I, AHISD, RNP, DLRC and PLRC-I all equal 87.18\(\%\), which is much better than those of DCC and MMD. The BLRC-II classifier obtains the highest accuracy on this database, 92.31\(\%\), clearly superior to the other recognition algorithms.

5 Conclusion

In this paper, a bilinear regression classification (BLRC) method is proposed for face image set recognition. Compared with DLRC, BLRC additionally exploits an unrelated subspace for classification. Two classifiers are proposed, based on two different ways of constructing the unrelated subspace. To validate their performance, experiments were conducted on three databases for face image set classification. The experimental results confirm the effectiveness of both proposed classifiers.

References

[1] Arandjelovic, O., Shakhnarovich, G., Fisher, J., Cipolla, R., Darrell, T.: Face recognition with image sets using manifold density divergence. In: IEEE Computer Society Conference on Computer Vision and Pattern Recognition, pp. 581–588 (2005)

[2] Cevikalp, H., Triggs, B.: Face recognition based on image sets. In: Computer Vision and Pattern Recognition, pp. 2567–2573 (2010)

[3] Chen, L.: Dual linear regression based classification for face cluster recognition. In: Computer Vision and Pattern Recognition, pp. 2673–2680 (2014)

[4] Cover, T., Hart, P.: Nearest neighbor pattern classification. IEEE Trans. Inf. Theory 13(1), 21–27 (1967)

[5] Hu, Y., Mian, A.S., Owens, R.: Sparse approximated nearest points for image set classification, vol. 42, no. 7, pp. 121–128 (2011)

[6] Hu, Y., Mian, A.S., Owens, R.: Face recognition using sparse approximated nearest points between image sets. IEEE Trans. Pattern Anal. Mach. Intell. 34(10), 1992–2004 (2012)

[7] Kim, T.K., Kittler, J., Cipolla, R.: Discriminative learning and recognition of image set classes using canonical correlations. IEEE Trans. Pattern Anal. Mach. Intell. 29(6), 1005–1018 (2007)

[8] Lee, K.C., Ho, J., Yang, M.H., Kriegman, D.: Video-based face recognition using probabilistic appearance manifolds. In: IEEE Conference on Computer Vision and Pattern Recognition, pp. 313–320 (2003)

[9] Mahmood, A., Mian, A., Owens, R.: Semi-supervised spectral clustering for image set classification. In: Computer Vision and Pattern Recognition, pp. 121–128 (2014)

[10] Martínez, A.M., Kak, A.C.: PCA versus LDA. IEEE Trans. Pattern Anal. Mach. Intell. 23(2), 228–233 (2001)

[11] Naseem, I., Togneri, R., Bennamoun, M.: Linear regression for face recognition. IEEE Trans. Pattern Anal. Mach. Intell. 32(11), 2106–2112 (2010)

[12] Schüldt, C., Laptev, I., Caputo, B.: Recognizing human actions: a local SVM approach. In: International Conference on Pattern Recognition, pp. 32–36 (2004)

[13] Wang, R., Chen, X.: Manifold discriminant analysis. In: IEEE Conference on Computer Vision and Pattern Recognition, pp. 429–436 (2009)

[14] Wang, R., Guo, H., Davis, L.S., Dai, Q.: Covariance discriminative learning: a natural and efficient approach to image set classification. In: IEEE Conference on Computer Vision and Pattern Recognition, pp. 2496–2503 (2012)

[15] Wang, R., Shan, S., Chen, X., Gao, W.: Manifold-manifold distance with application to face recognition based on image set. In: IEEE Conference on Computer Vision and Pattern Recognition, pp. 1–8 (2008)

[16] Wright, J., Ganesh, A., Zhou, Z., Wagner, A., Ma, Y.: Demo: robust face recognition via sparse representation. In: IEEE International Conference on Automatic Face and Gesture Recognition, pp. 1–2 (2009)

[17] Yamaguchi, O., Fukui, K., Maeda, K.: Face recognition using temporal image sequence. In: 1998 Proceedings of IEEE International Conference on Automatic Face and Gesture Recognition, pp. 318–323 (1998)

[18] Zhu, P., Zhang, L., Hu, Q., Shiu, S.C.K.: Multi-scale patch based collaborative representation for face recognition with margin distribution optimization. In: European Conference on Computer Vision, pp. 822–835 (2012)

[19] Feng, Q., Zhou, Y., Lan, R.: Pairwise linear regression classification for image set retrieval. In: IEEE Conference on Computer Vision and Pattern Recognition, pp. 4865–4872 (2016)

Copyright information

© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

Hua, WW., Ren, CX. (2018). Face Image Set Recognition Based on Bilinear Regression. In: Lai, JH., et al. Pattern Recognition and Computer Vision. PRCV 2018. Lecture Notes in Computer Science(), vol 11258. Springer, Cham. https://doi.org/10.1007/978-3-030-03338-5_20

DOI: https://doi.org/10.1007/978-3-030-03338-5_20

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-03337-8

Online ISBN: 978-3-030-03338-5

eBook Packages: Computer Science, Computer Science (R0)