Abstract

We introduce a novel embedded algorithm for feature selection, using Support Vector Machine (SVM) with kernel functions. Our method, called Kernel Parameter Descent SVM (KPD-SVM), is taking parameters of kernel functions as variables to optimize the target functions in SVM model training. KPD-SVM use sequential minimal optimization, which breaks the large quadratic optimization problem into some smaller possible optimization problem, avoids inner loop on time-consuming numerical computation. Additionally, KPD-SVM optimize the shape of RBF kernel to eliminate features which have low relevance for the class label. Through kernel selection and execution of improved algorithm in each case, we simultaneously find the optimal solution of selected features in the modeling process. We compare our method with algorithms like filter method (Fisher Criterion Score) or wrapper method (Recursive Feature Elimination SVM) to demonstrate its effectiveness and efficiency.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Feature Selection is a vital issue in machine learning. It is common to apply feature selection methods to classification problems, especially when those original data sets have redundant features [1].

According to [2], there are three main directions for feature selection: filter, wrapper, and embedded methods.

Filter takes statistical analysis to filter out poorly informative features, it is usually done before the samples taken into a classifier. Relief [3] is a typical filter method which is statistically relevant to the target concept and feeds features into the classifier.

Wrapper approach searches the whole set of samples to score feature subset, therefore it naturally entails training and implementation of learning algorithms during the procedure of feature selection, wrappers use different classifier such as naive Bayes [4], neural networks [5] and nearest neighbor [6]. The random forests based wrapper approaches [7, 8] are widely used to identify important features from feature subset.

In embedded method, feature selection is embedded into the classifier [9], feature is selected by the internal function of an algorithm such as least absolute shrinkage and selection operator (LASSO) [10] and decision tree [11].

Above methods have their limitation, wrapper algorithms are complex in computation, but usually obtain more accurate results than filter methods [12], the problem of a wrapper is high computational cost because it involves repeated training. The robustness of above methods in high dimension data set is a crucial problem. Therefore some features select approaches constructed by combining multiple classifiers, their robust more than the approaches with a single classifier [13]. In addition, support vector machines (SVM) have been proposed as a wrapper classifier for feature selection [14].

Although standard implementation of SVM shows good performance in classification prediction, it cannot rank each features’ importance for feature elimination. Thus we introduce a novel approach which selects features according to the descent path of kernel parameters, indirectly figuring out the importance of each features as well as optimizing the model predicting ability. The method we called Kernel Parameter Descent Support Vector Machine (KPD-SVM), the approach not only optimizes the parameter of SVM, but also obtains a subset of features for specific objective. KPD-SVM will be talked in detail and be compared with other characteristic approaches of feature selection in SVM.

2 Related Works

2.1 Support Vector Machine

In this section, we will simply review the development of SVM method.

Support Vector Machine (SVM) is a strictly math-based machine learning model, raised by Vapnik [15]. The principle of SVM classifier is obvious. It tries to find out the optimal hyperplane for the optimization problem with “soft margin” as follows:

Here we denote \(\xi _{i}\) as slack variable. The training data can be transformed into higher dimensional space through kernel function \(x\rightarrow \phi (x)\). So the decision function can be rewritten as:

Since the scalar products \(\langle \phi (x),\phi (y) \rangle \) are the only value to be calculated, kernel function

is used to solve them. As result the optimization problem can be rewritten as:

2.2 Feature Selection in SVMs

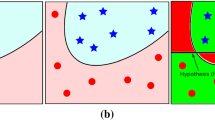

Typically, there are three methods in SVM based feature selection process, Filter, Wrapper and Embedded [1]. Here we review each of them briefly and stress one representative algorithm of each method, for experimental comparison in next section.

-

Filter Method: Among all the measurement in Filter method, Fisher Criterion Score (F-Score) is one of the most common indicator to use. It computes the significance of each feature independently of the other feature by comparing that feature’s correlation to the output labels. The respective score F(j) of feature j is given by:

$$\begin{aligned} F(j)=\Bigg | \frac{\mu _{j}^{+}+\mu _{j}^{-}}{(\sigma _{j}^{+})^{2}+(\sigma _{j}^{-})^{2}}\Bigg | \end{aligned}$$(5)Where \(\mu _{j}^{+}\) (\(\mu _{j}^{-}\))is the mean value for the jth feature in positive(negative) class. And \(\sigma _{j}^{+}\)(\(\sigma _{j}^{-}\)) is the standard deviation. When the F(j) is large, it means jth feature has much more information to discriminate itself from other features, which suggests it ranks top of the feature list and would be more likely not to be eliminate and vice versa. The disadvantage of filter method is time consuming and skillful because you need to choose a suitable measurement method.

-

Wrapper Method: One representative wrapper method is Recursive Feature Elimination SVM (RFE-SVM), which is raised by Guyon [16]. RFE-SVM aims to find out the r-feature subset among the original n-feature set through backward greedy algorithm, which build model by the whole feature at the beginning then cut off one feature according the ranking order. The disadvantage of Wrapper method is that it is more time consuming than filter method because it need to train models on different feature subsets.

-

Embedded Method: The last method for feature selection is embedded method. The most different novelty between embedded and others is that it conducts the selection in the process of model training. One common embedded method is to add a penalty item to the target function which limits the model complexity [17]. Compared with filter method and wrapper method, we choose embedded method in our model because it is less time consuming.

3 The Proposed Method: KPD-SVM

The principle of proposed method aims to improve the classification performance as well as to eliminate less important features by optimizing parameter/parameters in kernel function. This method use penalty item like \(L0-norm\) or \(L1-norm\) of the parameter to punish the large number of feature we consider in modeling which is more likely to cause over-fitting problems. Through gradient descent algorithm, we can find out the best solution (which means the best classification performance) of the vector of kernel parameters. During this iteration process, we set the parameters whose values are lower than a small criterion as 0. Thus we can deal with the feature selection task.

3.1 Kernel Function

Among the kernel function SVM commonly uses, we pay attention to the following mostly-used kernels:

-

Gaussian Kernel function we write the kernel function in the form of the summation in each feature:

$$\begin{aligned} K(x,y)=exp \bigg (- \sum ^{d}_{j=1}\frac{(x_{j}-y_{j})^{2}}{2\sigma ^{2}_{j}}\bigg ) \end{aligned}$$(6)where \(\sigma =[\sigma _{1},\sigma _{2},\sigma _{3}\ldots ,\sigma _{n}]\) indicates the width of the kernel and determines the kernel shape. d is the number of features. For better demonstration, we denote:

$$\begin{aligned} \gamma =[\frac{1}{2\sigma _{1}^{2}},\frac{1}{2\sigma _{2}^{2}},\frac{1}{2\sigma _{3}^{2}},\ldots ,\frac{1}{2\sigma _{d}^{2}}] \end{aligned}$$(7)which leads to

$$\begin{aligned} K(x,y)=exp \bigg (- \sum ^{d}_{j=1}\gamma _{j} (x_{j}-y_{j})^{2}\bigg ) \end{aligned}$$(8) -

Exponential kernel (Laplace) Similar with Gaussian kernel, it is shown as:

$$\begin{aligned} K(x,y)=exp \bigg (- \sum ^{d}_{j=1}\gamma _{j} (x_{j}-y_{j})\bigg ) \end{aligned}$$(9) -

Polynomial kernel its function as:

$$\begin{aligned} K(x,y)=(\alpha x^{T}y+c)^{D} \end{aligned}$$(10)Here we fix D and let \(c=1\) in our proposed method, hence we only need to consider the vector of \(\alpha \):

$$\begin{aligned} K(x,y)=((\sum _{j=1}^{d}\alpha _{j} x_{j}y_{j})+1)^{D} \end{aligned}$$(11)

3.2 Target Function in KPD-SVM

According the previous definition, the set of Lagrange multipliers \(\alpha \) is considered, and adding the new parameter \(\gamma \) in kernel function and penalty item of model complexity, therefore the optimization problem \(\min _{\mathbf {w} ,b} \frac{1}{2} \Vert \mathbf {w}\Vert ^{2}\) is minimized with a penalty function and some constrains.Our target function G is as follows:

where the \(\varPsi (\alpha )\) are transformed from the target optimization function (4) of the standard SVM:

and \(\varPhi (\alpha ,\gamma )\) is penalized function, the first item of Eq. (14) is transformed from the second item of Eq. (13), the second item of Eq. (14) is penalized item:

where \(\gamma _{j}\) need to be non-negative and we use \(L0-norm\) as \(f(\gamma )\), which is approximately equal to [9]:

\(C_2\) is the strength of the penalty of the complexity of our model which is different from C for penalty of training error(slack variable \(\xi \)). Also \(L0-norm\) can be replaced with \(L1-norm\) or \(L2-norm\) in our target function.

Because this optimization problem is not convex [17], it may be hard to search the globally optimal solution. So that we propose an algorithm to search a locally optimal solution. Then we use a method to solve this optimization problem in two step [17]:

[Step 1] Given a set of fixed kernel parameter \( \gamma \), calculate the value of \(\alpha \) in optimal function \(\min _\alpha \varPsi (\alpha )\), here sequential minimal optimization(SMO) [18] is a method to solve the SVM QP problem.

For convenience, all quantities that refer to the first multiplier will have a subscript 1, while the other refers to the second multiplier \(\alpha _2\). Without loss of generality, the second multiplier \(\alpha _2\) will be computed firstly. The following bounds W, H apply to \(\alpha _2\) while the target \(y_1\) does not equal the target \(y_2\):

If the target \(y_1=y_2\), the bounds apply to \(\alpha _2\) is shown as:

The second derivative of the objective function \(\min _\alpha \varPsi (\alpha )\) along the diagonal line can be conducted as:

Under the normal condition, the objective function is positive definite, there will be a minimum along the direction of the linear constraint, and \(\eta \) is greater than 0. The new minimum is computed along the direction of the constraint as follow:

where \(E_i=u_i-y_i, i=1,2\) is the error on the i-th training example, as a next step, the constrained minimum is clipped by the bound W, H. Let \(s=y_1y_2\). The optimal \(\alpha _1\) is computed by the optimized and clipped \(\alpha _2\):

Under unusual condition, \(\eta \) will not be positive, which can cause the objective function to become indefinite.

[Step 2] Find out the best \( \gamma \) for given fixed \(\alpha \) in step 1, solve the objective function \(\min _{\alpha ,\gamma }\varPhi (\alpha ,\gamma )\) using gradient descent algorithm. And if the renewed \( \gamma _{j}\) is below the criterion we set, eliminate the feature j and loop for next iteration until reaching the stop criterion. For given j the gradient of \(F(\gamma ^{*}_{j})\) is:

Gaussian

Polynomial

To avoid misunderstandings of \(\gamma \) in polynomial kernel and target function, we set \(\gamma ^{poly}\) in polynomial kernel. Exponential Kernel (Laplace)

The algorithm adjust the kernel components using gradient descent procedure, specially to parameter \(\gamma \), which is set to be small to avoid negative at the first iterations.

3.3 Detailed Process of Proposed Algorithm

The pseudo code is shown as below:

where

and

In the algorithm, we may consider the following vital step, some details are given as follows:

Kernel Selection, Use the whole features to train model with different kernels (eg. Gaussian, Polynomial) and different parameter (\(\gamma , D, c\)). Calculate the average accuracy of each model with different kernels by cross validations. Then select the kernel with the best performance which is the most appropriate kernel of this data set.

Set Original Value, At the start of algorithm, we give the initial value of \(\alpha , \gamma \), and some parameter for update.

Calculate \(\alpha \), Based on standard SVM training process and may take SMO algorithm [18] to quickly and efficiently find out the answer \(\alpha ^{*}\).

Update \(\sigma \) and \(\gamma \), Apply gradient descent algorithm to renew \(\sigma _{i}\) or \(\gamma _{i}^{*}\), the lines 10–13 of the algorithm shows the iteration process, one by one for fixed the optimal \(\alpha \).

Step size of gradient descent, We set \(\theta \) as the step size of gradient descend in each iteration.

Elimination criterion, \(\varepsilon \) is the eliminate threshold which means we eliminate the feature j by setting \(\gamma ^{*}_{j}=0\) if value \(\gamma ^{*}_{j}\) is below \(\varepsilon \).

Stop criterion, For the stop criterion, we set a relative stop criterion \(\zeta _{relative}\) and an absolute stop criterion \(\zeta _{absolute}\) in order to balance the time of iterations and the performance of the model. \(\zeta _{relative}\) is defined as the ratio \(\frac{\parallel (\gamma ^{*})^{[t+1]}-(\gamma ^{*})^{[t]}\parallel _{1}}{\parallel (\gamma ^{*})^{[t]}\parallel _{1}}\) and \(\zeta _{absolute}\) is set as \(\parallel (\gamma ^{*})^{[t]}\parallel _{1}\).

3.4 Discussion of Parameter

Our discussion mainly concentrates on one issue: Selection of parameter values in proposed method. Basically, the proposed method outperforms in its process of feature selection and modeling. However, there are some parameters we need to tune for the optimal solution of classification. In [17], it has already concluded that \(\beta \), \(\varepsilon \) and \(\gamma ^{[0]}\) have less influence in the final solution. In terms of the penalty for slack variables, C, we use Leave-One-Out Cross-Validation to find the best value of C in each case.

Complexity Penalty \(C_{2}\): \(C_{2}\) is the coefficient of penalty item on the number of feature or model complexity. A large \(C_{2}\) means a strict limitation to build greatly complicated model. We choose \(C_{2}\) according to the balance of prediction performances and model complexity.

Step Size \(\theta \): \(\theta \) represents the step size of gradient descend in each iteration.

We want to use an automatically adjusted step size in some cases. Hence,we denote \(\theta _{auto}\) as \(\frac{\varepsilon }{median\{\varDelta _{j} F(\gamma ^{*})\}}\), \(j=1,\ldots ,d\). And we may take \(\theta = \min \{\theta _{original},\theta _{auto}\}\) as step size in each iteration.

Stop Criterion \(\zeta _{absolute},\zeta _{relative}\): With the increasing number of iterations, the \(1-norm\) difference of kernel parameter in t and \(t+1\) iteration goes to convergence, which shows the algorithm can find out the best kernel parameter in certain countable iterations.

4 Experiments

In this section, we apply the proposed method to do experiments in some real-world dataset. Also we will compare our method with F-score and RFE-SVM, which represents the filter and wrapper algorithm in feature selection. The measurements we make comparison are as follows: First, model prediction performance. Second, the number of features in the optimal solution.

4.1 Data Set

The data sets we selected are from UCI Machine Learning Database. Detailed information of each data set is shown as follows:

-

Sonar: This is the data set used by Gorman and Sejnowski in their study of the classification of sonar signals. The data set contains 111 patterns obtained by bouncing sonar signals off a metal cylinder at various angles and under various conditions. And it contains 97 patterns obtained from rocks under similar conditions. The label associated with each record contains the letter “R” if the object is a rock and “M” if it is a mine (metal cylinder).

-

WBC: The Wisconsin Breast Cancer data set has 569 observations and 30 features. All feature values are recoded with four significant digits. In addition, people who are diagnosed are labeled as \(M\quad (malignant\quad tumor)\) and the other are marked as \(B\quad (benign\quad tumor)\).

We basically consider the following three kernel functions: Gaussian, Polynomial, Laplace (Exponential). Then the values of parameters in each kernel function we used are as follows:

-

\(\sigma _{Gaussian} = (0.0001,0.0005,0.001,0.005,0.01,0.05, 0.1,0.5,1,10,50,100,500,1000)\)

-

\(D = (2,3,4,5,6,7,8,9,10,11,12,13,14,15)\)

-

\(\sigma _{Laplace}= (0.0001,0.0005,0.001,0.005,0.01,0.05, 0.1,0.5,1,10,50,100,500,1000)\)

4.2 Case: Sonar

Basic information of this data set is shown in Table 1.

First we carry out kernel selection. Fig. 1 shows the average performances of each kernel function applied in Sonar. Thus we choose Polynomial Kernel in this case.

Figure 2 shows the performance of proposed method KPD-SVM compared with F-Score and RFE-SVM. The optimal feature subset are selected by each method, and the number of these subsets are shown below: Filter(F1-Scores):24, Wrapper(RFE-SVM):18-20, Embedded(KPD-SVM):20.

In conclusion, KPD-SVM outperforms F-Score and RFE-SVM in this Sonar case.

4.3 Case: WBC

Basic information of this data set is shown in Table 2.

First we carry out kernel selection. In WBC we choose Polynomial Kernel in this case. Figure 3 shows the average performances of each kernel function applied in WBC.

The performance of proposed method KPD-SVM compared with F-Score and RFE-SVM shown in Fig. 4. The optimal feature subset are selected by each method, and the number of these subsets are shown below: Filter(F1-Scores):26, Wrapper(RFE-SVM):19, Embedded(KPD-SVM):15.

In conclusion, considering the model prediction accuracy and the model complexity (the number of features), we can say KPD-SVM outperforms in this WBC case.

5 Conclusion

In this paper, we have presented a novel method called Kernel Parameter Descent Support Vector Machine (KPD-SVM) for feature selection using kernel functions. Our embedded method can generalize a well-trained SVM classifier as well as a good solution for feature selecting. In addition, our KPD-SVM method outperforms other methods, like filter method (F-Score) and wrapper method (RFE-SVM). Besides, compared with former embedded algorithm by optimizing kernel parameters [1,2,3,4], our method has novelties in stop criterion and step size settings in executions, which performs better in time consuming.

References

Chandrashekar, G., Sahin, F.: A Survey on Feature Selection Methods. Pergamon Press, Inc., Oxford (2014)

Cheriet, M., Kharma, N., Liu, C.L., et al.: Character Recognition Systems: A Guide for Students and Practitioners. Scitech Book News (2007)

Kira, K., Rendell, L.A.: A practical approach to feature selection. In: International Workshop on Machine Learning, pp. 249–256. Morgan Kaufmann Publishers Inc., Burlington (1992)

Kohavi, R., John, G.H.: Wrappers for feature subset selection. Artif. Intell. 97(1–2), 273–324 (1997)

Sesmero, M.P., Alonso-Weber, J.M., Ledezma, A., et al.: A new artificial neural network ensemble based on feature selection and class recoding. Neural Comput. Appl. 21(4), 771–783 (2012)

Yang, J., Yao, D., Zhan, X., Zhan, X.: Predicting disease risks using feature selection based on random forest and support vector machine. In: Basu, M., Pan, Y., Wang, J. (eds.) ISBRA 2014. LNCS, vol. 8492, pp. 1–11. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-08171-7_1

Anaissi, A., Kennedy, P.J., Goyal, M., et al.: A balanced iterative random forest for gene selection from microarray data. Bmc Bioinform. 14(1), 1–10 (2013)

Swan, A.L., Mobasheri, A., Allaway, D., et al.: Application of machine learning to proteomics data: classification and biomarker identification in postgenomics biology. Omics J. Integr. Biol. 17(12), 595–610 (2013)

Blum, A.L., Langley, P.: Selection of relevant features and examples in machine learning. Artif. Intell. 97(1–2), 245–271 (1997)

Tibshirani, R.: Regression shrinkage and selection via the lasso: a retrospective. J. R. Stat. Soc. 73, 273–282 (2011)

Chan, H.P., Kim, S.B.: Sequential random k-nearest neighbor feature selection for high-dimensional data. Expert. Syst. Appl. 42(5), 2336–2342 (2015)

Maldonado, S., Weber, R.: A wrapper method for feature selection using support vector machines. Inf. Sci. 179(13), 2208–2217 (2009)

Tuv, E., Borisov, A., Runger, G., et al.: Feature selection with ensembles, artificial variables, and redundancy elimination. J. Mach. Learn. Res. 10(3), 1341–1366 (2009)

Chen, P., Zhang, D.: Constructing support vector machines ensemble classification method for imbalanced datasets based on fuzzy integral. In: Ali, M., Pan, J.-S., Chen, S.-M., Horng, M.-F. (eds.) IEA/AIE 2014. LNCS (LNAI), vol. 8481, pp. 70–76. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-07455-9_8

Vapnik, V.N., Vapnik, V.: Statistical Learning Theory, vol. 1. Wiley, New York (1998)

Guyon, I., Gunn, S., Nikravesh, M., et al.: Feature Extraction: Foundations and Applications. Studies in Fuzziness and Soft Computing. Springer, New York (2006). https://doi.org/10.1007/978-3-540-35488-8

Maldonado, S., Weber, R., Basak, J.: Simultaneous feature selection and classification using kernel-penalized support vector machines. Elsevier Science Inc. (2011)

Platt, J.C.: Sequential minimal optimization: a fast algorithm for training support vector machines. In: Advances in Kernel Methods-Support Vector Learning, pp. 212–223 (1998)

Acknowledgements

We would like to acknowledge Professor Chih-Jen Lin from National Taiwan University for his research on Support Vector Machine and his work on software LIBSVM.

This work was supported by the Guangdong Provincial Government of China through the “Computational Science Innovative Research Team” program and Guangdong Province Key Laboratory of Computational Science at the Sun Yat-sen University, and the National Science Foundation of China (11471012).

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

Zhu, H., Bi, N., Tan, J., Fan, D. (2018). An Embedded Method for Feature Selection Using Kernel Parameter Descent Support Vector Machine. In: Lai, JH., et al. Pattern Recognition and Computer Vision. PRCV 2018. Lecture Notes in Computer Science(), vol 11258. Springer, Cham. https://doi.org/10.1007/978-3-030-03338-5_30

Download citation

DOI: https://doi.org/10.1007/978-3-030-03338-5_30

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-03337-8

Online ISBN: 978-3-030-03338-5

eBook Packages: Computer ScienceComputer Science (R0)