Abstract

Infrared small target detection plays an important role in infrared monitoring and early warning systems. This paper proposes a local adaptive contrast measure for robust infrared small target detection using gray and variance difference. First, a size-adaptive gray-level target enhancement process is performed. Then, an improved multiscale variance difference method is proposed for target enhancement and cloud clutter removal. To demonstrate the effectiveness of the proposed approach, a test dataset consisting of two infrared image sequences with different backgrounds was collected. Experiments on the test dataset demonstrate that the proposed infrared small target detection method can achieve better detection performance than the state-of-the-art approaches.

Supported by the National Natural Science Foundation of China (Nos. 61602499 and 61471371), and the National Postdoctoral Program for Innovative Talents (No. BX201600172).


1 Introduction

Detecting infrared (IR) small targets is challenging for several reasons. First, an infrared small target occupies only a few pixels in an image because the detection distance is long [3, 13]. Second, the target has a point spread characteristic due to reflection, refraction, and sensor aperture diffraction [23, 24]. Third, the intensity and shape of a small IR target can vary with season, weather, and time of day [8, 12]. In addition, the ocean and cirrus clouds can produce sunlight reflections. Moreover, broken clouds and cloud edges are frequent causes of false alarms in infrared small target detection [9, 21].

Recently, findings on the Human Visual System (HVS) have been widely used to improve the performance of small IR target detection. According to the HVS attention mechanism, the local contrast between a target and its surrounding background is more important than the absolute intensity of the visual signal in an attention system [19]. In the literature, several local contrast measures have been proposed to imitate the HVS selective attention mechanism [2, 4, 6, 7, 11, 16, 17, 18, 22]. These measures have shown great potential in infrared small target detection. For example, Chen et al. [2] proposed a Local Contrast Map (LCM) for local target enhancement and background clutter suppression. Han et al. [11] proposed an improved LCM (ILCM) to enhance the detection rate. Wei et al. [22] proposed a Multiscale Patch-based Contrast Measure (MPCM) for small target enhancement and background clutter suppression. Although it can simultaneously detect bright and dark targets in IR images, some discrete points still remain in heavy clutter. Deng et al. [4] introduced a Novel Weighted Image Entropy (NWIE) measure using multiscale gray-level difference and local information entropy, which focuses on the suppression of cloud edges. Nasiri et al. [16] recently proposed a performance-leading Variance Difference (VARD) based method. However, its detection performance is still limited by its fixed-size sliding window.

Inspired by the multiscale gray difference used in [4, 20], we propose a joint filter using Multiscale Gray and Variance Difference (MGVD) to improve VARD. The two major contributions of this work can be summarized as follows.

(1) A maximum contrast measure is used to extract the maximum cross-scale gray difference. Meanwhile, the optimal size map of the internal window is obtained for subsequent use.

(2) A revised multiscale variance difference measure is designed to alleviate the impact of the background fluctuation and optimize the calculation of variance in each internal window.

The rest of this paper is organized as follows. In Sect. 2, we review the VARD method. In Sect. 3, we describe our proposed MGVD joint filter. In Sect. 4, several experiments are conducted to test our method. The paper is concluded in Sect. 5.

2 The VARD Method

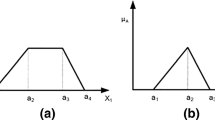

Local contrast has been widely used in HVS-inspired IR small target detection [2, 16, 18]. Nasiri et al. [16] recently proposed the VARD method for small target detection. In VARD, a fixed-size sliding window containing three nested windows is first extracted from an IR image, as shown in the right part of Fig. 1. The sizes of the internal, middle, and external windows are set to \(7\times 7\), \(11\times 11\), and \(15\times 15\), respectively.

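Given a target with Gaussian shape in an IR image, the target is usually brighter than its surroundings. Therefore, when a target exists in an internal window, the mean intensity of the internal window is higher than that of the middle window. This gray difference is computed as

\[P_{rem}(x_{0},y_{0}) = M_{in}(x_{0},y_{0}) - M_{mid}(x_{0},y_{0}), \tag{1}\]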

where \(M_{in}\), \(M_{mid}\), and \(P_{rem}\) represent the mean value of the internal window, the mean value of the middle window, and their difference, respectively, and \((x_{0},y_{0})\) represents the central pixel under investigation.
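The gray-difference term described above (mean of the internal window minus mean of the middle window) can be illustrated with a minimal sketch. The function names, the toy image, and the border handling (out-of-image pixels are ignored) are assumptions made for illustration, and the middle-window mean is assumed to be taken over the full \(11\times 11\) window; the source does not specify these details.

```python
def window_mean(img, cx, cy, size):
    """Mean gray value of the size x size window centred at (cx, cy).

    Pixels falling outside the image are ignored (an assumption; the
    source does not say how image borders are handled).
    """
    half = size // 2
    rows, cols = len(img), len(img[0])
    vals = [img[y][x]
            for y in range(max(0, cy - half), min(rows, cy + half + 1))
            for x in range(max(0, cx - half), min(cols, cx + half + 1))]
    return sum(vals) / len(vals)


def gray_difference(img, cx, cy, d_in=7, d_mid=11):
    """P_rem = M_in - M_mid at the centre pixel (cx, cy)."""
    return window_mean(img, cx, cy, d_in) - window_mean(img, cx, cy, d_mid)


# Toy 21x21 image: flat background of 10 with a bright 3x3 target in the centre.
img = [[10.0] * 21 for _ in range(21)]
for y in range(9, 12):
    for x in range(9, 12):
        img[y][x] = 100.0

p_target = gray_difference(img, 10, 10)    # window centred on the target
p_background = gray_difference(img, 3, 3)  # window over flat background only
```

On this toy image the response is positive at the target centre and zero over the flat background, which is the behaviour the paragraph above relies on.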

Note that some areas in an IR image with strongly evolving clouds resemble target regions. Therefore, the variance difference between the internal and external windows around the investigated image patch is calculated as follows.

where \(V_{in}\) and \(V_{e}\) represent the variance of the internal and external windows, respectively. \(D_{in}\) is the size of the internal window, \(D_{in}^{2}-1\) is the number of neighbors of an image patch.

In fact, the size of a small target can range from \(2\times 2\) to about \(9\times 9\) pixels. In the ideal case, the size of the internal window should be the same as the target size. To deal with this problem, ILCM [11], MPCM [22], and NWIE [4] define several multiscale sliding windows to match the size of a real target. The VARD method achieves state-of-the-art detection performance and efficiency. However, the intensity and variance estimation of the internal window defined in Eqs. 1 and 2 is inaccurate because the internal window has a fixed size. Besides, the number of neighboring image patches used for the calculation of variance difference (Eq. 3) is insufficient: it only considers the situations in which a sliding window enters a target region, but does not consider the situations in which a sliding window leaves a target region, as shown in Fig. 2. These factors decrease the accuracy of the local gray and variance differences, and ultimately affect the detection rate and false alarm rate of the algorithm. Therefore, MGVD is proposed to improve VARD.

3 MGVD-based Small Target Detection

In this section, we introduce a new multiscale IR small target detection method to improve VARD.

3.1 Multiscale Gray Difference

As demonstrated in the literature [4, 14, 15], an IR small target has a signature that is discontinuous with its neighborhood (as shown in Fig. 1). In this paper, a multiscale gray difference is used to measure the dissimilarity of a target region from its surrounding areas. For an image I, the kth gray difference at point (x, y) can be formulated as:

where \(k=1, 2, \ldots , K\) corresponds to the variable sizes of the internal window, \(3\times 3\), \(5\times 5\), \(\ldots \), \((2K+1)\times (2K+1)\). The set \(\varOmega _{k}\) denotes the pixels contained in the internal window, and the set \(\varOmega _{max}\) denotes the pixels contained in the maximal neighboring area (corresponding to the middle window). I(x, y) and I(p, q) represent the gray values at points in \(\varOmega _{k}\) and \(\varOmega _{max}\), respectively, and \(N_{\varOmega _{k}}\) and \(N_{\varOmega _{max}}\) are the numbers of pixels in the sets \(\varOmega _{k}\) and \(\varOmega _{max}\). K is the number of variable internal windows.

Using different sizes of the internal window, we obtain a set of corresponding gray differences \(D_{k}(x,y)\). Then, the maximum difference measure \(D_{max}(x,y)\) at point (x, y) is

\[D_{max}(x,y) = \max _{k=1,2,\ldots ,K} D_{k}(x,y). \tag{5}\]

Consequently, we can obtain the maximum contrast map (i.e., \(D_{max}\)) between the internal window and the middle window.
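The cross-scale maximum and the associated internal window size can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: \(D_{k}\) is taken here as the absolute difference between the mean over \(\varOmega _{k}\) and the mean over \(\varOmega _{max}\) (the source may use a different form, for example a squared difference), the middle window is assumed to be \(11\times 11\), and the names `window_mean`, `max_gray_difference`, `num_scales`, and `mid_size` are hypothetical.

```python
def window_mean(img, cx, cy, size):
    """Mean gray value of the size x size window centred at (cx, cy),
    ignoring pixels outside the image (border handling is an assumption)."""
    half = size // 2
    rows, cols = len(img), len(img[0])
    vals = [img[y][x]
            for y in range(max(0, cy - half), min(rows, cy + half + 1))
            for x in range(max(0, cx - half), min(cols, cx + half + 1))]
    return sum(vals) / len(vals)


def max_gray_difference(img, cx, cy, num_scales=4, mid_size=11):
    """Return (D_max, best internal window size) at pixel (cx, cy).

    Assumed form: D_k = |mean(Omega_k) - mean(Omega_max)|, with internal
    windows of size 3x3, 5x5, ..., (2K+1)x(2K+1).
    """
    m_max = window_mean(img, cx, cy, mid_size)
    best_d, best_size = -1.0, None
    for k in range(1, num_scales + 1):
        size = 2 * k + 1
        d_k = abs(window_mean(img, cx, cy, size) - m_max)
        if d_k > best_d:  # keep the first scale that attains the maximum
            best_d, best_size = d_k, size
    return best_d, best_size


# Toy 21x21 image: flat background of 10 with a bright 3x3 target in the centre.
img = [[10.0] * 21 for _ in range(21)]
for y in range(9, 12):
    for x in range(9, 12):
        img[y][x] = 100.0

d_max, d_in_star = max_gray_difference(img, 10, 10)
```

For the \(3\times 3\) toy target the maximum is reached at the \(3\times 3\) internal window, so the selected size matches the target size. Evaluating the function at every pixel would yield both the maximum contrast map and the size map of the internal window.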

3.2 Multiscale Variance Difference

Some areas in an IR image with strongly evolving clouds resemble target regions, so gray difference alone is insufficient to extract a target. In addition, the grayscale value in the middle window may be affected by the target. We therefore further consider the variance difference between the internal window and the external windows of its neighboring image patches.

Different from VARD, we increase the number of neighboring image patches for the calculation of variance difference, as illustrated in Fig. 2. In our method, the size of the internal window is set to \(D_{in}^{*}(x,y)\), which corresponds to the maximum difference measure in Eq. 5. The number of neighboring image patches is \(D_{2}=2D_{in}^{*}-1\). Finally, the multiscale variance difference can be calculated as:

where \(V_{in}^{'}\) represents the variance of the internal window, \(V_{e_{j}}\) represents the variance of the external window in neighboring image patches, \(V\!ARD^{'}\) represents our revised variance difference for a single image patch. Consequently, the multiscale variance difference is calculated as:

where \(\odot \) means the Hadamard product.

3.3 MGVD-based Small Target Detection

The proposed algorithm has five major steps, and a flow chart is shown in Fig. 3. First, image patches with three windows are extracted from an IR image. Second, the maximum contrast measure between the internal window and the middle window is calculated on each image patch. Third, the variance difference is calculated between the internal window and its surrounding background in the external windows. Fourth, the multiscale gray difference map is multiplied with the multiscale variance difference map. Finally, we use the same adaptive-threshold segmentation method as [1, 2, 10] to extract candidate targets. The threshold is computed according to

\[T = \mu + k\sigma , \tag{9}\]

where \(\mu \) and \(\sigma \) are the mean and standard deviation of the final enhanced map, respectively. In our experience, k ranges from 2 to 15.
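The last two steps (element-wise multiplication of the two maps and adaptive-threshold segmentation) can be sketched as below. The sketch assumes the commonly used threshold form \(T=\mu +k\sigma \) and the population standard deviation; the function names and the toy maps are hypothetical and chosen only for illustration.

```python
from statistics import mean, pstdev


def hadamard(a, b):
    """Element-wise (Hadamard) product of two equally sized 2-D maps."""
    return [[p * q for p, q in zip(row_a, row_b)] for row_a, row_b in zip(a, b)]


def adaptive_threshold(enhanced, k=4.0):
    """T = mu + k * sigma over all pixels of the enhanced map
    (population standard deviation is an assumption)."""
    flat = [v for row in enhanced for v in row]
    return mean(flat) + k * pstdev(flat)


def segment(enhanced, k=4.0):
    """Binary mask of candidate target pixels: True where the value exceeds T."""
    t = adaptive_threshold(enhanced, k)
    return [[v > t for v in row] for row in enhanced]


# Toy 5x5 maps: the target at the centre responds in both maps,
# while a clutter point at (0, 0) responds in the variance map only.
gray_map = [[1.0] * 5 for _ in range(5)]
var_map = [[1.0] * 5 for _ in range(5)]
gray_map[2][2] = 9.0
var_map[2][2] = 8.0
var_map[0][0] = 6.0

enhanced = hadamard(gray_map, var_map)
mask = segment(enhanced, k=4.0)
```

Because the product is large only where both maps respond, the clutter point is suppressed and only the centre pixel survives the threshold in this toy case.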

4 Experimental Results and Analysis

To test the performance of our proposed method, qualitative and quantitative experiments are presented in this section.

4.1 Experimental Setup

To demonstrate the effectiveness of our proposed method, two real IR image sequences with heavy clutter are tested. Example images are shown in Fig. 4 and the details of these two real sequences are summarized in Table 1.

Five recent HVS-based single-frame target detection methods are used as baselines: Average Gray Absolute Difference Maximum Map (AGADM) [20], LCM [2], NLCM [18], NWIE [4], and VARD [16]. LCM is a traditional HVS-based local contrast method; AGADM and NWIE are two multiscale gray difference based methods; NLCM and VARD are two joint target detection methods using both grayscale and variance.

Three evaluation criteria are used to measure the target enhancement and background suppression performance: Signal to Clutter Ratio Gain (SCRG), Background Suppression Factor (BSF), and Receiver Operating Characteristic (ROC) curves [4, 18, 25]. SCRG and BSF are defined as:

\[SCRG = \frac{S_{out}/C_{out}}{S_{in}/C_{in}}, \qquad BSF = \frac{C_{in}}{C_{out}},\]

where \(S_{in}\) and \(S_{out}\), \(C_{in}\) and \(C_{out}\) are the amplitude of target signal and the standard deviations of clutter in the input and output images, respectively.
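As a worked illustration, the two scores can be computed from the four quantities named above. The sketch assumes the commonly used definitions \(SCRG=(S_{out}/C_{out})/(S_{in}/C_{in})\) and \(BSF=C_{in}/C_{out}\); how the signal amplitude and clutter standard deviation are measured from an image is not specified here, so they are passed in directly, and the numeric values are made up for the example.

```python
def scrg(s_in, c_in, s_out, c_out):
    """Signal to Clutter Ratio Gain: output SCR divided by input SCR."""
    return (s_out / c_out) / (s_in / c_in)


def bsf(c_in, c_out):
    """Background Suppression Factor: input clutter std over output clutter std."""
    return c_in / c_out


# Hypothetical measurements before and after filtering.
s_in, c_in = 20.0, 10.0    # target amplitude and clutter std in the input image
s_out, c_out = 60.0, 2.0   # the same quantities in the filtered output

gain = scrg(s_in, c_in, s_out, c_out)   # (60 / 2) / (20 / 10)
suppression = bsf(c_in, c_out)          # 10 / 2
```

Higher values of both scores indicate stronger target enhancement and stronger background suppression, respectively.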

A ROC curve represents the relationship between the probability of detection and the false alarm rate. Specifically, for a given threshold T in Eq. 9, the probability of detection \(P_{d}\) and the false alarm rate \(P_{f}\) [3, 5, 16] can be calculated as:

\[P_{d} = \frac{n_{t}}{n_{c}}, \qquad P_{f} = \frac{n_{f}}{n},\]

where \(n_{t}\), \(n_{c}\), \(n_{f}\) and n represent the number of detected true pixels, ground-truth target pixels, false alarm pixels and the total number of image pixels, respectively.
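The pixel counts above translate directly into code. The following sketch assumes \(P_{d}=n_{t}/n_{c}\) and \(P_{f}=n_{f}/n\), computed pixel-wise from a binary detection mask and a binary ground-truth mask; the function name and the toy masks are hypothetical.

```python
def detection_rates(detected, truth):
    """Return (P_d, P_f) from two binary masks of identical shape.

    n_t: detected pixels that are true target pixels
    n_c: ground-truth target pixels
    n_f: detected pixels that are not target pixels (false alarms)
    n:   total number of image pixels
    """
    n_t = n_c = n_f = n = 0
    for det_row, gt_row in zip(detected, truth):
        for d, t in zip(det_row, gt_row):
            n += 1
            if t:
                n_c += 1
            if d and t:
                n_t += 1
            if d and not t:
                n_f += 1
    return n_t / n_c, n_f / n


# Toy 4x4 example: a 2x2 target, three of its pixels detected, one false alarm.
truth = [[0, 0, 0, 0],
         [0, 1, 1, 0],
         [0, 1, 1, 0],
         [0, 0, 0, 0]]
detected = [[1, 0, 0, 0],
            [0, 1, 1, 0],
            [0, 1, 0, 0],
            [0, 0, 0, 0]]

p_d, p_f = detection_rates(detected, truth)
```

Repeating this computation while sweeping the threshold parameter k gives the set of \((P_{f}, P_{d})\) points that make up a ROC curve.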

4.2 Qualitative Results

The target enhancement and detection results achieved by different methods on the two sequences are shown in Figs. 5 and 6. It can be seen that the images processed by our method have less clutter and residual noise under different clutter backgrounds than those processed by the baseline methods. This is attributed to the adaptive calculation of the grayscale maximum contrast measure and variance difference in each image patch. The AGADM and LCM methods are inferior to the other four methods in background suppression. Although the NLCM and NWIE methods can preserve the target to a certain extent, several strong cloud edges remain in the filtered results of the two image sequences. Since the size of the sliding window in VARD is fixed, targets with various sizes cannot be optimally enhanced. Therefore, they are missed in some frames in Sequence 1. Besides, cloud edges are also enhanced and still remain in the strongly evolving background in Sequence 2 after filtering by VARD.

In summary, the above qualitative results demonstrate that the proposed method obtains the best target enhancement and background suppression performance. However, our MGVD method still has a few deficiencies. For example, when a target is so far away from the imaging system that it only occupies 2–3 pixels in an image, the temporal cues in multiple frames should be used to extract targets.

4.3 Quantitative Results

The average SCRG and BSF results obtained by our method and the baseline methods are shown in Fig. 7 and Table 2. The SCRGs and BSFs achieved by the AGADM and LCM methods are relatively low. The VARD method is the second best method in BSF. In contrast, our proposed method removes isolated clutter residuals and preserves the target missed by VARD in strong cloud edges (as shown in Sequence 1). Consequently, our MGVD method obtains the highest scores in both SCRG and BSF, with remarkable background suppression performance being achieved. It is clear that the performance of the NLCM and NWIE methods is poor for the removal of strongly evolving cloud edges, especially in Sequence 2.

ROC curves are used to further compare our proposed method to the baseline methods. As illustrated in Fig. 8, it can be seen that the ROC curves of our method on the two real image sequences are close to the upper left corner. That is, our method outperforms other baseline methods in terms of \(P_{d}\) and \(P_{f}\). On Sequence 1, when the false alarm rate is \(2\times 10^{-5}\), our proposed method and VARD can achieve a detection rate of 90%. When the false alarm rate is \(1\times 10^{-4}\), all the methods can obtain a detection rate over 90%, except for LCM. On Sequence 2, when the false alarm rate is \(1\times 10^{-5}\), only our proposed method can obtain a detection rate of \(90\%\).

4.4 Computational Efficiency

All the methods were implemented in Matlab 2014a on a PC with a 2.7 GHz CPU and 4.0 GB RAM. We ran our method on the two real IR image sequences. The run times on the two sequences are 5.73 s and 17.20 s, respectively. Since our method uses a sliding window to check all possible locations in an image, it is not very efficient. Its efficiency should be further improved in future work.

5 Conclusion

This paper presented a joint filter for small target detection using multiscale gray and variance difference. The maximum gray difference is first extracted by an absolute gray difference method. The optimal size of the internal window is then used to calculate the variance of the internal window. Finally, the neighboring image patches are expanded for the estimation of variance difference. Experiments show that the proposed method achieves promising target enhancement and background suppression performance on complicated real IR images.

References

1. Bai, X., Bi, Y.: Derivative entropy-based contrast measure for infrared small-target detection. IEEE Trans. Geosci. Remote Sens. 56(99), 2452–2466 (2018)

2. Chen, C.P., Li, H., Wei, Y., Xia, T., Tang, Y.Y.: A local contrast method for small infrared target detection. IEEE Trans. Geosci. Remote Sens. 52(1), 574–581 (2014)

3. Dai, Y., Wu, Y.: Reweighted infrared patch-tensor model with both nonlocal and local priors for single-frame small target detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 10(8), 3752–3767 (2017)

4. Deng, H., Sun, X., Liu, M., Ye, C., Zhou, X.: Infrared small-target detection using multiscale gray difference weighted image entropy. IEEE Trans. Aerosp. Electron. Syst. 52(1), 60–72 (2016)

5. Deng, H., Sun, X., Liu, M., Ye, C., Zhou, X.: Small infrared target detection based on weighted local difference measure. IEEE Trans. Geosci. Remote Sens. 54(7), 4204–4214 (2016)

6. Deng, H., Sun, X., Liu, M., Ye, C., Zhou, X.: Entropy-based window selection for detecting dim and small infrared targets. Pattern Recogn. 61, 66–77 (2017)

7. Dong, L., Wang, B., Zhao, M., Xu, W.: Robust infrared maritime target detection based on visual attention and spatiotemporal filtering. IEEE Trans. Geosci. Remote Sens. 55(5), 3037–3050 (2017)

8. Fan, Z., Bi, D., Xiong, L., Ma, S., He, L., Ding, W.: Dim infrared image enhancement based on convolutional neural network. Neurocomputing 272, 396–404 (2018)

9. Gao, C., Wang, L., Xiao, Y., Zhao, Q., Meng, D.: Infrared small-dim target detection based on Markov random field guided noise modeling. Pattern Recogn. 76, 463–475 (2018)

10. Gao, J., Lin, Z., Guo, Y., An, W.: TVPCF: a spatial and temporal filter for small target detection in IR images. In: Digital Image Computing: Techniques and Applications (DICTA), pp. 1–7 (2017)

11. Han, J., Ma, Y., Zhou, B., Fan, F., Liang, K., Fang, Y.: A robust infrared small target detection algorithm based on human visual system. IEEE Geosci. Remote Sens. Lett. 11(12), 2168–2172 (2014)

12. Kim, S.: Infrared variation reduction by simultaneous background suppression and target contrast enhancement for deep convolutional neural network-based automatic target recognition. Opt. Eng. 56(6), 063108 (2017)

13. Li, Y., Zhang, Y.: Robust infrared small target detection using local steering kernel reconstruction. Pattern Recogn. 77, 113–125 (2018)

14. Liu, D., Li, Z., Liu, B., Chen, W., Liu, T., Cao, L.: Infrared small target detection in heavy sky scene clutter based on sparse representation. Infrared Phys. Technol. 85, 13–31 (2017)

15. Liu, R., Wang, J., Yang, H., Gong, C., Zhou, Y., Liu, L., Zhang, Z., Shen, S.: Tensor Fukunaga-Koontz transform for small target detection in infrared images. Infrared Phys. Technol. 78, 147–155 (2016)

16. Nasiri, M., Chehresa, S.: Infrared small target enhancement based on variance difference. Infrared Phys. Technol. 82, 107–119 (2017)

17. Nie, J., Qu, S., Wei, Y., Zhang, L., Deng, L.: An infrared small target detection method based on multiscale local homogeneity measure. Infrared Phys. Technol. 90, 186–194 (2018)

18. Qin, Y., Li, B.: Effective infrared small target detection utilizing a novel local contrast method. IEEE Geosci. Remote Sens. Lett. 13(12), 1890–1894 (2016)

19. Shi, Y., Wei, Y., Yao, H., Pan, D., Xiao, G.: High-boost-based multiscale local contrast measure for infrared small target detection. IEEE Geosci. Remote Sens. Lett. 15(99), 1–5 (2018)

20. Wang, G., Zhang, T., Wei, L., Sang, N.: Efficient method for multiscale small target detection from a natural scene. Opt. Eng. 35(3), 761–769 (1996)

21. Wang, X., Peng, Z., Kong, D., He, Y.: Infrared dim and small target detection based on stable multisubspace learning in heterogeneous scene. IEEE Trans. Geosci. Remote Sens. 55(10), 5481–5493 (2017)

22. Wei, Y., You, X., Li, H.: Multiscale patch-based contrast measure for small infrared target detection. Pattern Recogn. 58, 216–226 (2016)

23. Xin, Y.H., Zhou, J., Chen, Y.S.: Dual multi-scale filter with SSS and GW for infrared small target detection. Infrared Phys. Technol. 81, 97–108 (2017)

24. Zhang, H., Bai, J., Li, Z., Liu, Y., Liu, K.: Scale invariant SURF detector and automatic clustering segmentation for infrared small targets detection. Infrared Phys. Technol. 83, 7–16 (2017)

25. Zhang, X., Ding, Q., Luo, H., Hui, B., Chang, Z., Zhang, J.: Infrared small target detection based on directional zero-crossing measure. Infrared Phys. Technol. 87, 113–123 (2017)


Copyright information

© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

Gao, J., Guo, Y., Lin, Z., An, W. (2018). Infrared Small Target Detection Using Multiscale Gray and Variance Difference. In: Lai, JH., et al. Pattern Recognition and Computer Vision. PRCV 2018. Lecture Notes in Computer Science(), vol 11259. Springer, Cham. https://doi.org/10.1007/978-3-030-03341-5_5


DOI: https://doi.org/10.1007/978-3-030-03341-5_5


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-03340-8

Online ISBN: 978-3-030-03341-5

eBook Packages: Computer Science, Computer Science (R0)