Abstract

In recent years, the focus of machine learning has been shifting to the paradigm of transfer learning where the data distribution in the target domain differs from that in the source domain. This is a prevalent setting in real-world classification problems and numerous well-established theoretical results in the classical supervised learning paradigm will break down under this setting. In addition, the increasing privacy protection awareness restricts access to source domain samples and poses new challenges for the development of privacy-preserving transfer learning algorithms. In this paper, we propose a novel differentially private multiple-source hypothesis transfer learning method for logistic regression. The target learner operates on differentially private hypotheses and importance weighting information from the sources to construct informative Gaussian priors for its logistic regression model. By leveraging a publicly available auxiliary data set, the importance weighting information can be used to determine the relationship between the source domain and the target domain without leaking source data privacy. Our approach provides a robust performance boost even when high quality labeled samples are extremely scarce in the target data set. The extensive experiments on two real-world data sets confirm the performance improvement of our approach over several baselines. Data related to this paper is available at: http://qwone.com/~jason/20Newsgroups/ and https://www.cs.jhu.edu/~mdredze/datasets/sentiment/index2.html.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

In the era of big data, an abundant supply of high quality labeled data is crucial for the success of modern machine learning. But it is both impractical and unnecessary for a single entity, whether it is a tech giant or a powerful government agency, to collect the massive amounts of data single-handedly and perform the analysis in isolation. Collaboration among data centers or crowd-sourced data sets can be truly beneficial in numerous data-driven research areas and industries nowadays. As modern data sets get bigger and more complex, the traditional practice of centralizing data from multiple data owners turns out to be extremely inefficient. Increasing concerns over data privacy also create barriers to data sharing, making it difficult to coordinate large-scale collaborative studies especially when sensitive human subject data (e.g., medical records, financial information) are involved. Instead of moving raw data, a more efficient approach is to exchange the intermediate computation results obtained from distributed training data sets (e.g., gradients [15], likelihood values in MLE [2]) during the machine learning process. However, even the exchange of intermediate results or summary statistics is potentially privacy-breaching as revealed by recent evidence. There is a large body of research work addressing this problem in a rigorous privacy-preserving manner, especially under the notion of differential privacy [23, 24].

In this paper, we consider the differentially private distributed machine learning problem under a more realistic assumption – the multiple training data sets are drawn from different distributions and the learned machine learning hypothesis (i.e. classifier or predictive model) will be tested and employed on a data set drawn from another different yet related distribution. In this setting, the training sets are referred to as the “source domains” and the test set is referred to as the “target domain”. High quality labeled data in the target domain is usually costly, if not impossible, to collect, making it necessary and desirable to take advantage of the sources in order to build a reliable hypothesis on the target. The task of “transferring” source knowledge to improve target “learning” is known as transfer learning, one of the fundamental problems in statistical learning that is attracting increasing attention from both academia and industry. This phenomenon is prevalent in real-world applications and solving the problem is important because numerous solid theoretical justification, especially the generalization capability, in traditional supervised learning paradigm will break down under transfer learning. Plenty of research work has been done in this area, as surveyed in [16]. Meanwhile, the unprecedented emphasis on data privacy today brings up a natural question for the researchers:

How to improve the learning of the hypothesis on the target using knowledge of the sources while protecting the data privacy of the sources?

To our best knowledge, limited research work exists on differentially private multiple-source transfer learning. Two challenges emerge when addressing this problem – what to transfer and how to ensure differential privacy. In the transfer learning literature, there are four major approaches – instance re-weighting [6], feature representation transfer [20], hypothesis transfer [10] and relational knowledge transfer [14]. In particular, we focus on hypothesis transfer learning, where hypotheses trained on the source domains are utilized to improve the learning of target hypothesis and no access to source data is allowed. To better incorporate the source hypotheses, we also adapt an importance weighting mechanism in [9] to measure the relationship between sources and target by leveraging a public auxiliary unlabeled data set. Based on the source-target relationship, we can determine how much weight to assign to each source hypothesis in the process of constructing informative Gaussian priors for the parameters of the target hypothesis. Furthermore, the enforcement of differential privacy requires an additional layer of privacy protection because unperturbed source domain knowledge may incur privacy leakage risk. In the following sections, we explicitly apply our methodology to binary logistic regression. Our approach will provide end-to-end differential privacy guarantee by perturbing the importance weighting information and the source hypotheses before transferring them to the target. Extensive empirical evaluations on real-world data demonstrate its utility and significant performance lift over several important baselines.

The main contributions of this work can be summarized as follows.

-

We propose a novel multiple-source differentially private hypothesis transfer learning algorithm and focus specifically on its application in binary logistic regression. It addresses the notorious problem of insufficient labeled target data by making use of unlabeled data and provides rigorous differential privacy guarantee.

-

Compared with previous work, only one round of direct communication between sources and target is required in our computationally efficient one-shot model aggregation, therefore overcoming some known drawbacks (e.g. avoiding the complicated iterative hypothesis training process and privacy budget composition in [7, 25], eliminating the need for a trusted third party in [8]).

-

The negative impact of unrelated source domains (i.e. negative transfer) is alleviated as the weight assigned to each source hypothesis will be determined by its relationship with the target.

-

The non-private version of our method achieves performance comparable to that of the target hypothesis trained with an abundant supply of labeled data. It provides a new perspective for multiple-source transfer learning when privacy is not a concern.

The rest of the paper is organized as follows: background and related work are introduced in Sect. 2. We then present the methods and algorithms in Sect. 3. It is followed by empirical evaluation of the method on two real-world data sets in Sect. 4. Lastly, we conclude the work in Sect. 5.

2 Background and Related Work

The privacy notion we use is differential privacy, which is a mathematically rigorous definition of data privacy [4].

Definition 1

(Differential Privacy). A randomized algorithm \(\varvec{M}\) (with output space \(\varOmega \) and well-defined probability density \(\mathcal {D}\)) is \((\epsilon ,\delta )\)-differentially private if for all adjacent data sets \(\varvec{S}, \varvec{S}'\) that differ in a single data point \(\varvec{s}\) and for all measurable sets \(\omega \in \varOmega \):

Differential privacy essentially implies that the existence of any particular data point \(\varvec{s}\) in a private data set \(\varvec{S}\) cannot be determined by analyzing the output of a differentially private algorithm \(\varvec{M}\) applied on \(\varvec{S}\). The parameter \(\epsilon \) is typically known as the privacy budget which quantifies the privacy loss whenever an algorithm is applied on the private data. A differentially private algorithm with smaller \(\epsilon \) means that it is more private. The other privacy parameter \(\delta \) represents the probability of the algorithm failing to satisfy differential privacy and is usually set to zero.

Differential privacy can be applied to address the problem of private machine learning. The final outputs or the intermediate computation results of machine learning algorithms can potentially reveal sensitive information of individuals who contribute data to the training set. A popular approach to achieving differential privacy is output perturbation, derived from the sensitivity method in [4]. It works by adding carefully calibrated noises to the parameters of the learned hypothesis before releasing it. In particular, Chaudhuri et al. [3] adapted the sensitivity method into a differentially private regularized empirical risk minimization (ERM) algorithm of which logistic regression is a special case. They proved that adding Laplace noise with scale inversely proportional to the privacy parameter \(\epsilon \) and the regularization parameter \(\lambda \) to the learned hypothesis provides \((\epsilon , 0)\)-differential privacy.

More recently, research efforts have been focused on distributed privacy-preserving machine learning where private data sets are collected by multiple parties. One line of research involves exchanging differentially private information (e.g. gradients) among multiple parties during the iterative hypothesis training process [23, 24]. An alternative line of work focuses on privacy-preserving model aggregation techniques. Pathak et al. [19] addressed the hypothesis ensemble problem by simple parameter averaging and publishing the aggregated hypothesis in a differentially private manner. Papernot et al. [18] proposed a different perspective – Private Aggregation of Teacher Ensembles (PATE), where private data set is split into disjoint subsets and different “teacher” hypotheses are trained on all subsets. The private teacher ensemble is used to produce labels on auxiliary unlabeled data by noisy voting and a“student” hypothesis is trained on the auxiliary data and released. Hamm et al. [8] explored a similar strategy of transferring local hypotheses and focused on convex loss functions. Their work involves a “trusted entity” who collects unperturbed local hypotheses trained on multiple private data sets and uses them to generate“soft” labels on an auxiliary unlabeled data set. A global hypothesis is trained on the soft-labeled auxiliary data and output perturbation is used to ensure its differential privacy.

In the limited literature of differentially private transfer learning, the most recent and relevant work to ours are [7, 25] which focus on multi-task learning , one of the variants of transfer learning. Gupta et al. [7] proposed a differentially private mutli-task learning algorithm using noisy task relationship matrix and developed a novel attribute-wise noise addition scheme. Xie et al. [25] introduced a privacy-preserving proximal gradient algorithm to asynchronously update the hypothesis parameters of the learning tasks. Both work are iterative solutions and require multiple rounds of information exchange between tasks. However, one of the key drawbacks of iterative differentially private methods is that privacy risks accumulate with each iteration. The composition theorem of differential privacy [5] provides a framework to quantify the privacy budget consumption. Given a certain level of total privacy budget, there is a limit on how many iterations can be performed on a specific private data set, which severely affects the utility-privacy trade-off of iterative solutions.

3 The Proposed Methods

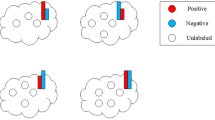

Let us assume that there are K sources in the transfer learning system (as shown in Fig. 1), indexed as \(k = 1, ...,K\). For the k-th source, we denote the labeled samples as \(\varvec{S}^k_l = \{(\varvec{x}_i^k, y_i^k): 1 \le i \le n^k_l\}\) and the unlabeled samples as \(\varvec{S}^k_{ul} = \{\varvec{x}_j^k: 1 \le j \le n^k_{ul}\}\), where  are the feature vectors, \(y^k_i\) is the corresponding label, and \(n^k_l, n^k_{ul}\) are the sizes of \(\varvec{S}^k_l\) and \(\varvec{S}^k_{ul}\) respectively. The samples in the k-th source are drawn i.i.d. according to a probability distribution \(\mathcal {D}^k\). There is also a target data set \(\varvec{T}\) with abundant unlabeled samples and very few labeled samples drawn i.i.d. from a different yet related distribution \(\mathcal {D}^T\). Following the setting of [12], \(\mathcal {D}^T\) is assumed to be a mixture of the source distributions \(\mathcal {D}^k\)’s. A public data set \(\varvec{P}\) of size \(n^P\) (not necessarily labeled) is accessible to both the sources and the target serving as an information intermediary.

are the feature vectors, \(y^k_i\) is the corresponding label, and \(n^k_l, n^k_{ul}\) are the sizes of \(\varvec{S}^k_l\) and \(\varvec{S}^k_{ul}\) respectively. The samples in the k-th source are drawn i.i.d. according to a probability distribution \(\mathcal {D}^k\). There is also a target data set \(\varvec{T}\) with abundant unlabeled samples and very few labeled samples drawn i.i.d. from a different yet related distribution \(\mathcal {D}^T\). Following the setting of [12], \(\mathcal {D}^T\) is assumed to be a mixture of the source distributions \(\mathcal {D}^k\)’s. A public data set \(\varvec{P}\) of size \(n^P\) (not necessarily labeled) is accessible to both the sources and the target serving as an information intermediary.

We present the full details of our method (Algorithm 1) here. Two pieces of knowledge need to be transferred to the target from each source in a differentially private manner. The first piece is the hypothesis locally trained on the labeled source samples \(\varvec{S}^k_l\). In order to protect the data privacy, we apply the output perturbation method in [3] to perturb the source hypotheses into \(\varvec{\theta }^k_{priv}\) \((k = 1, ..., K)\) before transferring them to the target (Step 1 in Algorithm 1).

The second piece of knowledge is the differentially private “importance weights” vector with respect to samples in the public data set \(\varvec{P}\), which is used to measure the relationship between source distribution and target distribution. It is a very difficult task to estimate high-dimensional probability densities and releasing the unperturbed distribution or the histogram can be privacy-breaching in itself. No existing work addresses the differentially private source-target relationship problem explicitly. We leverage a public data set \(\varvec{P}\) and propose a novel method based on the importance weighting mechanism in [9] (see Algorithm 2) which was originally developed for the purpose of differentially private data publishing. We take advantage of the “importance weights” vector and successfully quantify the source-target relationship without violating the data privacy of sources. To be more specific, every source k will compute the differentially private “importance weight” for each sample in \(\varvec{P}\) using its unlabeled samples \(\varvec{S}^k_{ul}\) (Step 2 in Algorithm 1). The “importance weight” of a data point in \(\varvec{P}\) is large if it is similar to the samples in \(\varvec{S}^k_{ul}\), and small otherwise. The “importance weights” vector \(\varvec{w}^k\) is therefore an \(n^P\)-dimensional vector with non-negative entries that add up to \(n^P\). By making a multiplicative adjustment to the public data set \(\varvec{P}\) using \(\varvec{w}^k\), \(\varvec{P}\) will look similar to \(\varvec{S}^k_{ul}\) in proportion. In other words, \(\varvec{w}^k\) is the “recipe” of transforming \(\varvec{P}\) into a data set drawn from \(\mathcal {D}^k\).

After receiving the “importance weights” vectors \(\varvec{w}^k\)’s from sources, the target will also compute its own non-private“importance weight” vector \(\varvec{w}^T\) (Step 3 in Algorithm 1). These “recipes” bear information about \(\mathcal {D}^k\)’s and \(\mathcal {D}^T\) and are crucial for determining how related each source is to the target. As an intuitive example, the recipe of making regular grape juice out of grapes and water is similar to the recipe of making light grape juice, but very different from that of making wine. By simply comparing the recipes, regular juice can conclude that it is alike light juice but not wine. Given \(\varvec{w}^k\)’s and \(\varvec{w}^T\), the target can further compute the “hypothesis weight” to assign to each source hypothesis by solving an optimization problem that minimizes the divergence between target“importance weights” vector and a linear combination of source “importance weights” vectors (Step 4 in Algorithm 1). The “hypothesis weights” vector \(\varvec{w}_H\) is a probability vector of dimension K. The implied assumption here is that hypotheses trained on sources similar to the target should be assigned higher weights in the model aggregation process, whereas the impact of hypotheses trained on source domains unrelated to the target should be reduced. Finally, the target will construct an informative Bayesian prior for its logistic regression model using \(\varvec{\theta }^k_{priv}\)’s and \(\varvec{w}_H\) based on the method in [13] (Step 5–6 in Algorithm 1).

To ensure differential privacy, both pieces of source knowledge transferred to the target need to be perturbed carefully. Note that the private hypothesis is trained on labeled source samples and the private “importance weights” vector is trained on the disjoint unlabeled source samples. Therefore no composition or splitting of privacy budget is involved. The privacy analysis is presented in Theorem 2 below.

Theorem 2

Algorithm 1 is \((\epsilon , 0)\)-differentially private with respect to both the labeled and the unlabeled samples in each source.

Proof

We sketch the proof here for brevity. First, the perturbed source hypotheses \(\varvec{\theta }^k_{priv}\)’s are proved to be differentially private with respect to the labeled source samples by [3]. Additionally, the “importance weights” vector \(\varvec{w}^k\) is \((\epsilon ,0)\)-differentially private with respect to the unlabeled source samples by [9]. Therefore, the final target hypothesis \(\varvec{\theta }^T\) built upon \(\varvec{\theta }^k_{priv}\)’s and \(\varvec{w}^k\)’s is also \((\epsilon ,0)\)-differentially private with respect to the sources by the post-processing guarantee of differential privacy [5].

4 Experiments

In this section we empirically evaluate the effectiveness of our differentially private hypothesis transfer learning method (DPHTL) using publicly available real-world data sets. The experiment results show that the hypothesis obtained by our method provides a performance boost over the hypothesis trained on the limited labeled target data while guaranteeing differential privacy of sources and is also better than the hypotheses obtained by several baselines under various level of privacy requirements.

The first data set is the text classification data set 20NewsGroup (20NG)Footnote 1 [11], a popular benchmark data set for transfer learning and domain adaptation [17, 21]. It is a collection of approximately 20,000 newsgroup documents with stop words removed and partitioned evenly across 20 different topics. Some topics can be further grouped into a broader subject, for example, computer-related, recreation-related. To construct a binary classification problem, we randomly paired each of the 5 computer-related topics with 2 non-computer-related topics in each source domain. Our purpose is to build a logistic regression model to classify each document as either computer-related or non-computer-related. Specifically, there are 5 source domains with documents sampled from different topic groups as listed in Table 1. The target data set and the public data set are assumed to be mixtures of the source domains.

The second data set is the Amazon review sentiment data (AMAZON)Footnote 2, a famous benchmark multi-domain sentiment data set collected by Blitzer et al [1]. It contains product reviews from 4 different types – Book, DVD, Kitchen and Electronics. Reviews with star rating \(> 3\) are labeled positive, and those with star rating \(< 3\) are labeled negative. Each source domain contains reviews from one type and the target domain and public data set are mixtures of the source domains. After initial preprocessing, each product review is represented by its count of unigrams and bigrams in the document and the amounts of positive reviews and negative reviews are balanced. Note that among the four product types, DVD and Book are similar domains sharing many common sentiment words, whereas Kitchen and Electronics are more correlated with each other because most of kitchen appliances belong to electronics.

4.1 Data Preprocessing

For the 20NG data set, we removed the “headers”,“footers” and “quotes”. When building the vocabulary for each source domain, we ignored terms that have a document frequency strictly higher than 0.5 or strictly lower than 0.005. The total number of terms in the combination of source vocabularies is 5,588. To simulate the transfer learning setup, we sampled 1,000 documents from each source domain as our unlabeled source data set and another 800 documents with labels from each source domain as the labeled source data set. The public data set is an even mixture of the 5 sources with 1,500 documents in total. The target data set is also a mixture of all the source domains with insufficient labels. The final logistic regression model will be tested on a hold-out data set of size 320 sampled from the target domain. For the AMAZON data set, we selected the 1,500 most-frequently used unigrams/bigrams from each source domain and combined them to construct the feature set used in the logistic regression model. The total number of features is 2,969. We sampled 1,000 unlabeled reviews from each source domain as the unlabeled source data sets and another 1,000 labeled reviews as the labeled source data sets. The public data set is an even mixture of the 4 product types with 2,000 reviews in total. The counts of features were also transformed to a normalized tf-idf representation. The size of the target data set is 1,500 and the performance will be evaluated on a hold-out test set of size 600 sampled from the target domain. For both data sets, validation sets were reserved for the grid search of regularization parameters. We explored the performance of our method (DPHTL) across varying mixtures of sources, varying percentage of target labels and varying privacy requirements.

4.2 Differentially Private Importance Weighting

For the first set of experiments, we study the utility of the differentially private importance weighting mechanism (Step 2–4 in Algorithm 1) on both data sets. As shown by the results, our method can indeed reveal the proportion of each source domain in the target domain even under stringent privacy requirements. We set the regularization parameter \(\lambda _{IW}\) to be 0.001 after grid search among several candidates and plot the mean squared error (MSE) between the original proportion vector and the “hypothesis weights” vector determined by our method across varying privacy requirements \(\epsilon \). The experiments were repeated 20 times with different samples and the the error bars in the figures represent the standard errors.

The results (see Figs. 2 and 3) clearly show that the “hypothesis weights” vector is a good privacy-preserving estimation of the proportion of source domains in the target domain for both 20NG and AMAZON. As the privacy requirement is relaxed (\(\epsilon \) increases), the MSE will approach zero, reflecting the fact that the weight assigned to each source hypothesis is directly affected by the relationship between the source and the target. It is crucial in avoiding negative transfer as brute-force transfer may actually hurt performance if the sources are too dissimilar to the target [22].

4.3 Differentially Private Hypothesis Transfer Learning

For the second set of experiments, we investigate the privacy-utility trade-off of our DPHTL method and compare its performance with those of several baselines. The first baseline SOURCE is the best performer on the target test set among all the differentially private source hypotheses. The second baseline AVERAGE is the posterior Bayesian logistic regression model which assigns equal weights to all the source hypotheses in constructing the Gaussian prior. The third baseline is referred to as SOFT, which represents building the logistic regression model using soft labels. It is an adaptation of the work proposed by Hamm et al. in [8] under our transfer learning setting. More specifically, all the unlabeled samples in the target will be soft-labeled by the fraction \(\alpha (\varvec{x})\) of positive votes from all the differentially private source hypotheses (see Algorithm 2 in [8]). For a certain target sample \(\varvec{x}\),

The minimizer of the loss function of the regularized logistic regression with labeled target samples and soft-labeled target samples will be output as the final hypothesis for the SOFT baseline. Similar to our method, Hamm et al. also explored the idea of leveraging auxiliary unlabeled data and proposed a hypothesis ensemble approach. However, there are two key discrepancies. Firstly, no trusted third party is required in our setting so the local hypotheses are perturbed before being transferred to the target. Secondly, the algorithms in [8] were not designed for transfer learning and by assumption the samples are drawn from a distribution common to all parties. Therefore, no mechanism is in place to prevent negative transfer.

In addition, we compared with the non-private hypotheses WEAK and TARGET trained on the target. The hypothesis WEAK is trained using the limited labeled samples in the target data set. The hypothesis TARGET is trained using the true labels of all the samples (both labeled and unlabeled) in the target data set and can be considered as the best possible performer since it has access to all the true labels and ignores privacy. The test accuracy of the obtained hypotheses is plotted as a function of the percentage of labeled samples in the target set. The experiments were repeated 20 times and the error bars are standard errors. In order to save space, we illustrate the utility-privacy trade-off by figures for one mixture only.

We set the logistic regression regularization parameter at \(\lambda _{LR} = 0.003\) for 20NG and \(\lambda _{LR} = 0.005\) for AMAZON after preliminary grid search on the validation set. Figures 4 and 5 show the test accuracy for all the methods across increasing privacy parameter \(\epsilon \). DPHTL and AVERAGE start to emerge as better performers at an intermediate privacy level for both 20NG and AMAZON. When the privacy requirement is further relaxed, DPHTL becomes the best performer by a large margin at all range of labeled target sample percentage. Moreover, DPHTL is the only method performing as well as TARGET when privacy is not a concern. In comparison, the utilities of AVERAGE and SOFT are hindered by unrelated source domains. More test results are presented in Table 2 when the percentage of labeled target samples is fixed at 5%. In general, DPHTL gains a performance improvement over other baselines by taking advantage of the “hypotheses weights” while preserving the privacy of sources. Besides, it presents a promising solution to the notorious situation where very few labeled data is available at the target domain.

5 Conclusion

This paper proposes a multiple-source hypothesis transfer learning system that protects the differential privacy of sources. By leveraging the relatively abundant supply of unlabeled samples and an auxiliary public data set, we derive the relationship between sources and target in a privacy-preserving manner. Our hypothesis ensemble approach incorporates this relationship information to avoid negative transfer when constructing the Gaussian prior for the target logistic regression model. Moreover, our approach provides a promising and effective solution when the labeled target samples are scarce. Experimental results on benchmark data sets confirm our performance improvement over several baselines from recent work.

References

Blitzer, J., Dredze, M., Pereira, F.: Biographies, bollywood, boom-boxes and blenders: domain adaptation for sentiment classification. In: Proceedings of the 45th Annual Meeting of the Association of Computational Linguistics, pp. 440–447 (2007)

Boker, S.M., et al.: Maintained individual data distributed likelihood estimation (middle). Multivar. Behav. Res. 50(6), 706–720 (2015)

Chaudhuri, K., Monteleoni, C., Sarwate, A.D.: Differentially private empirical risk minimization. J. Mach. Learn. Res. 12, 1069–1109 (2011)

Dwork, C., McSherry, F., Nissim, K., Smith, A.: Calibrating noise to sensitivity in private data analysis. In: Halevi, S., Rabin, T. (eds.) TCC 2006. LNCS, vol. 3876, pp. 265–284. Springer, Heidelberg (2006). https://doi.org/10.1007/11681878_14

Dwork, C., Roth, A.: The algorithmic foundations of differential privacy. Found. Trends Theoret. Comput. Sci. 9(3–4), 211–407 (2014)

Garcke, J., Vanck, T.: Importance weighted inductive transfer learning for regression. In: Calders, T., Esposito, F., Hüllermeier, E., Meo, R. (eds.) ECML PKDD 2014. LNCS (LNAI), vol. 8724, pp. 466–481. Springer, Heidelberg (2014). https://doi.org/10.1007/978-3-662-44848-9_30

Gupta, S.K., Rana, S., Venkatesh, S.: Differentially private multi-task learning. In: Chau, M., Wang, G.A., Chen, H. (eds.) PAISI 2016. LNCS, vol. 9650, pp. 101–113. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-31863-9_8

Hamm, J., Cao, Y., Belkin, M.: Learning privately from multiparty data. In: International Conference on Machine Learning, pp. 555–563 (2016)

Ji, Z., Elkan, C.: Differential privacy based on importance weighting. Mach. Learn. 93(1), 163–183 (2013)

Kuzborskij, I., Orabona, F.: Stability and hypothesis transfer learning. In: Proceedings of The 30th International Conference on Machine Learning, pp. 942–950. ACM (2013)

Lang, K.: Newsweeder: learning to filter netnews. In: Machine Learning Proceedings 1995, pp. 331–339. Elsevier (1995)

Mansour, Y., Mohri, M., Rostamizadeh, A.: Domain adaptation with multiple sources. In: Advances in Neural Information Processing Systems, pp. 1041–1048 (2009)

Marx, Z., Rosenstein, M.T., Dietterich, T.G., Kaelbling, L.P.: Two algorithms for transfer learning. Inductive transfer: 10 years later (2008)

Mihalkova, L., Mooney, R.J.: Transfer learning by mapping with minimal target data. In: Proceedings of the Association for the Advancement of Artificial Intelligence (AAAI) Workshop on Transfer Learning for Complex Tasks (2008)

Nedic, A., Ozdaglar, A.: Distributed subgradient methods for multi-agent optimization. IEEE Trans. Autom. Control 54(1), 48–61 (2009)

Pan, S.J., Yang, Q.: A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 22(10), 1345–1359 (2010)

Pan, W., Zhong, E., Yang, Q.: Transfer learning for text mining. In: Aggarwal, C., Zhai, C. (eds.) Mining Text Data, pp. 223–257. Springer, Boston (2012)

Papernot, N., Song, S., Mironov, I., Raghunathan, A., Talwar, K., Erlingsson, U.: Scalable private learning with PATE. In: International Conference on Learning Representations (2018). https://openreview.net/forum?id=rkZB1XbRZ

Pathak, M., Rane, S., Raj, B.: Multiparty differential privacy via aggregation of locally trained classifiers. In: Advances in Neural Information Processing Systems, pp. 1876–1884 (2010)

Raina, R., Battle, A., Lee, H., Packer, B., Ng, A.Y.: Self-taught learning: transfer learning from unlabeled data. In: Proceedings of the 24th International Conference on Machine Learning, pp. 759–766. ACM (2007)

Raina, R., Ng, A.Y., Koller, D.: Constructing informative priors using transfer learning. In: Proceedings of the 23rd International Conference on Machine Learning, pp. 713–720. ACM (2006)

Rosenstein, M.T., Marx, Z., Kaelbling, L.P., Dietterich, T.G.: To transfer or not to transfer. In: NIPS 2005 Workshop on Transfer Learning, vol. 898, pp. 1–4 (2005)

Shokri, R., Shmatikov, V.: Privacy-preserving deep learning. In: Proceedings of the 22nd ACM SIGSAC Conference on Computer and Communications Security, pp. 1310–1321. ACM (2015)

Wang, Y., et al.: Privacy preserving distributed deep learning and its application in credit card fraud detection. In: 2018 17th IEEE International Conference on Trust, Security and Privacy In Computing And Communications/12th IEEE International Conference on Big Data Science and Engineering (TrustCom/BigDataSE), pp. 1070–1078. IEEE (2018)

Xie, L., Baytas, I.M., Lin, K., Zhou, J.: Privacy-preserving distributed multi-task learning with asynchronous updates. In: Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp. 1195–1204. ACM (2017)

Acknowledgements

We would like to thank the anonymous reviewers for their helpful comments. This work is partially supported by a grant from the Army Research Laboratory W911NF-17-2-0110. Quanquan Gu is partly supported by the National Science Foundation SaTC CNS-1717206. The views and conclusions contained in this paper are those of the authors and should not be interpreted as representing any funding agencies.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Wang, Y., Gu, Q., Brown, D. (2019). Differentially Private Hypothesis Transfer Learning. In: Berlingerio, M., Bonchi, F., Gärtner, T., Hurley, N., Ifrim, G. (eds) Machine Learning and Knowledge Discovery in Databases. ECML PKDD 2018. Lecture Notes in Computer Science(), vol 11052. Springer, Cham. https://doi.org/10.1007/978-3-030-10928-8_48

Download citation

DOI: https://doi.org/10.1007/978-3-030-10928-8_48

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-10927-1

Online ISBN: 978-3-030-10928-8

eBook Packages: Computer ScienceComputer Science (R0)