Abstract

Focal Cortical Dysplasia (FCD) is one of the most common abnormalities that disrupt brain activity, and it can cause pharmacoresistant epilepsy. Patients with this pathology can be treated surgically by removing the lesioned zone of the brain. However, locating these lesions depends on the specialist's expertise. Suitable support for FCD analysis is therefore required to reduce the subjectivity of the localization, particularly in imbalanced scenarios, e.g., when only a few pathological regions are available. In this work, we propose a new image processing approach to support FCD localization using a minimal redundancy maximal relevance-based feature selection stage that relies on a mutual information cost function to deal with imbalance problems. Our proposal finds a feature space through sequential searching, aiming to highlight significant relationships between FCD labels and structural parameters from magnetic resonance brain images. The achieved results show an improvement in classification statistics compared with state-of-the-art works.

1 Introduction

Focal Cortical Dysplasias (FCDs) are among the most common abnormalities that disrupt the electrical functioning of the brain, which is related to epilepsy. FCD can be caused by genetic factors, lack of oxygen during brain development, and parasites, among others [7]. Patients with pharmacoresistant epilepsy can be treated surgically. Nevertheless, the location of the FCDs must be identified with high precision. Currently, invasive electrophysiological tests are performed to locate these abnormalities. However, because these tests are invasive, they are uncomfortable and even dangerous for the patient. It is therefore necessary to develop non-invasive tools for locating FCD based on medical images [10]. Commonly, to localize FCDs, a radiologist (or a group of specialists) performs a visual analysis of magnetic resonance images (MRIs), assessing a set of radiological features related to FCD [10]. Moreover, the assessment of these features depends almost entirely on the radiologist's expertise, which makes the localization of an FCD a subjective process [1]. Therefore, systems that can locate FCD automatically are highly important for avoiding possible errors when the specialist locates these abnormalities.

Most of the systems developed to locate FCD automatically treat the task as a binary classification problem. The authors in [1] characterize MRI images by establishing structural markers of FCD such as cortical thickness, intensity contrast at the gray-white matter boundary, and fluid attenuation inversion recovery (FLAIR) signal intensity. In addition, they create a “doughnut” method, which calculates the difference between an area of cortex and its surrounding annulus at each vertex (a point of the brain surface). Intrinsic curvature and local cortical deformation (LCD) are also computed to make the FCD localization more robust to scale and cortical shape. Finally, these features are used to train a neural network (NN) that classifies cortical vertices into lesional and non-lesional. Nevertheless, a remarkable issue in this type of approach is that it does not take into account the imbalance between lesional and non-lesional examples. Imbalanced data can compromise the performance of most standard learning algorithms, which assume balanced classes or equal misclassification costs, yielding results biased towards the majority class (non-lesional) [4]. To address this drawback, the authors in [2] used a random bagging approach to build a set of “base-level” classifiers, each trained using logistic regression. Alternatively, the approach in [5] randomly selects non-lesional vertices in order to balance the number of samples in both classes. However, the retained non-lesional examples may be redundant for discriminating both classes, leading to spurious results. Recently, [6] proposed a technique for assessing the predictive performance using a cluster-based under-sampling NN with bagging, as an alternative to address the class imbalance in FCD classification.

Although state-of-the-art works tend to deal with the imbalance problem in FCD through sub-sampling-based techniques, the contribution of each input feature is not revealed. Knowing these contributions can help to enhance the localization performance while favoring the classifier training stage and the data interpretability.

In this work, we present an image processing approach to support FCD localization based on a sequential search feature selection strategy that employs a minimal redundancy maximal relevance (mRMR) criterion [8] as cost function. mRMR relies on a mutual information measure to reveal discriminative input features concerning the target labels (lesional and non-lesional vertices). Thus, mRMR relaxes the imbalance issue in FCD by leveraging a probability functional representation within a supervised feature selection technique. We build an input feature set of structural markers from MRIs, and then an ensemble classifier based on the RUSBoost algorithm, with a decision tree as base learner, is trained on the features chosen by a sequential searching scheme driven by the mRMR function. Results attained on a publicly available dataset for FCD localization, comprising 22 patients with confirmed FCD, show that our methodology, named mRMR sequential search (mRMR-SS), achieves better FCD localization results by finding a suitable set of features that best explains the target labels.

The remainder of this paper is organized as follows: Sect. 2 explains the introduced feature selection approach based on mRMR, and its paragraph “Sequential Search Based on mRMR Criterion” explains the RUSBoost algorithm for ensemble classification. Section 3 presents the experimental setup. Section 4 contains the results and discussion, and Sect. 5 the concluding remarks.

2 Feature Selection Based on mRMR

Let \(\left\{ {\mathbf {x}}_i,y_i\right\} _{i=1}^N\) be a set holding N vertex examples \({\mathbf {x}}_i\in {\mathbb {R}^P}\). The full input matrix is defined as \({\mathbf {X}}=\left\{ \varvec{\zeta }_1,\ldots ,\varvec{\zeta }_P\right\} \), where \(\varvec{\zeta }_j\in {\mathbb {R}^N}\) (\(j\in {\{1,\ldots ,P\}}\)) is the j-th column (feature) vector of \({\mathbf {X}}\), and the output labels \(y_i\in \left\{ 1,0\right\} \) correspond to lesional and non-lesional vertices, respectively. Because of the high imbalance present in the data, we introduce an mRMR-based feature selection that can deal with it and supports the FCD localization.

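mRMR-Based Cost Function: To select features under the imbalance present in FCD data, we employ the criterion proposed by Peng et al. [8], called minimal-redundancy-maximal-relevance (mRMR), which combines the Max-Relevance (d) and min-Redundancy (r) criteria. mRMR finds a set \(\tilde{{\mathbf {X}}} \!\,\in \!\,\mathbb {R}^{N\times L}\) with \(L\le P\), holding the L most relevant features out of the P available ones, which jointly achieve the highest explanation for the target class \({\mathbf {y}}\). mRMR is obtained by maximizing \(\varPhi \left( \text {d},\text {r}\right) \), which in the formulation of [8] is defined as:

\[\varPhi \left( \text {d},\text {r}\right) = \text {d}-\text {r},\qquad \text {d}=\frac{1}{|\tilde{{\mathbf {X}}}|}\sum _{\varvec{\zeta }_j\in \tilde{{\mathbf {X}}}}{\text {I}}(\varvec{\zeta }_j;{\mathbf {y}}),\qquad \text {r}=\frac{1}{|\tilde{{\mathbf {X}}}|^2}\sum _{\varvec{\zeta }_j,\varvec{\zeta }_k\in \tilde{{\mathbf {X}}}}{\text {I}}(\varvec{\zeta }_j;\varvec{\zeta }_k),\]

where d is the Max-Relevance term, r is the min-Redundancy term, \(|\tilde{{\mathbf {X}}}|\) represents the cardinality of \(\tilde{{\mathbf {X}}}\), and \({\text {I}}(\cdot ; \cdot )\) is the mutual information, defined for two random variables a and b with joint density \(p(a,b)\) and marginals \(p(a)\) and \(p(b)\) as follows:

\[{\text {I}}(a;b)=\iint p(a,b)\log \frac{p(a,b)}{p(a)\,p(b)}\,da\,db.\]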
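For discrete or pre-binned variables, the mutual information \({\text {I}}(\cdot ; \cdot )\) reduces to a sum over joint relative frequencies. The following minimal Python sketch illustrates that case; the function name `mutual_information` and the toy vectors are hypothetical, and the estimator actually used in this work (binning or density estimation) is not specified in the text.

```python
from collections import Counter
from math import log

def mutual_information(a, b):
    """Plug-in estimate of I(a; b) in nats for two equal-length discrete sequences."""
    n = len(a)
    joint = Counter(zip(a, b))   # joint counts of (a_i, b_i)
    pa = Counter(a)              # marginal counts of a
    pb = Counter(b)              # marginal counts of b
    mi = 0.0
    for (u, v), c in joint.items():
        # p(u, v) * log(p(u, v) / (p(u) p(v))), with probabilities as relative counts
        mi += (c / n) * log(c * n / (pa[u] * pb[v]))
    return mi

# Hypothetical imbalanced labels: 2 lesional (1) out of 10 vertices.
y = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
informative = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]  # copies the label
constant = [0] * 10                           # carries no information

print(mutual_information(informative, y))
print(mutual_information(constant, y))
```

In this toy case the informative feature attains the entropy of the labels, while the constant feature scores zero, even though the classes are imbalanced.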

It is important to remark that mutual information measures the dependence between two random variables. Because it is computed from probability distributions rather than from raw sample counts, it mitigates the effect of the imbalance and can find the features that best explain the class vector. To find the best feature subset, the enhanced sequential searches are defined by the following algorithm:

Sequential Search Algorithm

Given the set \({\mathbf {X}}=\left\{ \varvec{\zeta }_1,\ldots ,\varvec{\zeta }_P\right\} \), find a solution set \(\tilde{{\mathbf {X}}}=\left\{ \varvec{\zeta }_1,\ldots ,\varvec{\zeta }_L\right\} \), where \(L\le {P}\), that maximizes an objective function \(J(\cdot )\). BE denotes backward elimination and FS forward selection.

Do While (\(e\le P\))

1. (BE) Start with the full set \({\mathbf {X}}_0={\mathbf {X}}\)

   (FS) Start with the empty set \({\mathbf {X}}_0=\emptyset \)

2. (BE) Remove the worst feature \(\varvec{\zeta }'=\arg \max _{\varvec{\zeta }_j\in {{\mathbf {X}}_e}}{J({\mathbf {X}}_e-\varvec{\zeta }_j)}\)

   (FS) Select the next best feature \(\varvec{\zeta }'=\arg \max _{\varvec{\zeta }_j\notin {{\mathbf {X}}_e}}{J({\mathbf {X}}_e+\varvec{\zeta }_j)}\)

3. (BE) Update \({\mathbf {X}}_{e+1}={\mathbf {X}}_e-\varvec{\zeta }',e=e+1\)

   (FS) Update \({\mathbf {X}}_{e+1}={\mathbf {X}}_e+\varvec{\zeta }',e=e+1\)

4. (BE/FS) If \(J_e>J_{e-1}\), repeat from step (2). Otherwise, stop the algorithm.

The objective function J in this case is the mRMR criterion described in the paragraph “mRMR-Based Cost Function” of Sect. 2.
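The sequential search can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: features are assumed to be discrete (pre-binned), the score is the difference form of mRMR from [8] (mean relevance minus mean pairwise redundancy, self-pairs included), and the names `mi`, `mrmr`, `sequential_search` and the tolerance `tol` are hypothetical.

```python
from collections import Counter
from math import log

def mi(a, b):
    """Plug-in mutual information (nats) between two discrete sequences."""
    n = len(a)
    joint, pa, pb = Counter(zip(a, b)), Counter(a), Counter(b)
    return sum(c / n * log(c * n / (pa[u] * pb[v])) for (u, v), c in joint.items())

def mrmr(features, subset, y):
    """J = relevance - redundancy for the feature indices in `subset`."""
    if not subset:
        return float("-inf")
    k = len(subset)
    d = sum(mi(features[j], y) for j in subset) / k
    r = sum(mi(features[j], features[l]) for j in subset for l in subset) / k ** 2
    return d - r

def sequential_search(features, y, mode="FS", tol=1e-12):
    """Greedy forward selection (FS) or backward elimination (BE) on the mRMR score."""
    all_idx = list(range(len(features)))
    current = [] if mode == "FS" else all_idx[:]
    best = mrmr(features, current, y)
    while True:
        if mode == "FS":   # try adding each feature not yet selected
            candidates = [current + [j] for j in all_idx if j not in current]
        else:              # try removing each feature still selected
            candidates = [[j for j in current if j != drop] for drop in current]
        candidates = [c for c in candidates if c]  # never evaluate the empty set
        if not candidates:
            break
        trial = max(candidates, key=lambda c: mrmr(features, c, y))
        score = mrmr(features, trial, y)
        if score <= best + tol:  # step 4: stop when J no longer improves
            break
        current, best = trial, score
    return sorted(current), best

# Hypothetical toy data: feature 0 copies the label, 1 duplicates 0, 2 is unrelated.
y = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
features = [list(y), list(y), [0, 1] * 5]
selected, score = sequential_search(features, y, mode="FS")
print(selected, score)
```

On this toy input the forward search keeps only the first feature: the duplicate adds redundancy without adding relevance, and the unrelated feature lowers the score.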

Sequential Search Based on mRMR Criterion: Given the unbalanced training data \(\left\{ \tilde{{\mathbf {X}}}, {\mathbf {y}}\right\} \), the RUSBoost algorithm proposed by Seiffert et al. [9] combines two components, random under-sampling and adaptive boosting (AdaBoost), both used for imbalanced classification. First, data sampling techniques attempt to alleviate the problem of class imbalance by adjusting the class distribution of the training data set. This can be accomplished by removing examples from the majority class at random until a desired class distribution is achieved [9]. Second, the main idea of boosting is to iteratively create an ensemble of weak hypotheses, which are combined to predict the class of unlabeled examples. Initially, all examples in the training dataset are assigned equal weights. During the iterations of AdaBoost, a weak hypothesis is formed by the base learner. The error associated with the hypothesis is calculated, and the weight of each example is adjusted such that misclassified examples have their weights increased while correctly classified examples have their weights decreased. Therefore, subsequent iterations of boosting will generate hypotheses that are more likely to correctly classify the previously mislabeled examples. After all iterations are completed, a weighted vote of all hypotheses is used to assign a class to the unlabeled examples. Since boosting assigns higher weights to misclassified examples, and minority class examples are those most likely to be misclassified, minority class examples receive higher weights during the boosting process, which makes it similar in many ways to cost-sensitive classification [3].
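The interplay between under-sampling and re-weighting can be sketched as follows. This is a simplified illustration, not the algorithm of [9] verbatim: it uses decision stumps as weak learners and the binary discrete AdaBoost weight update, whereas the original RUSBoost builds on AdaBoost.M2. All names (`fit_stump`, `rusboost`, `minority_share`) and the toy data are hypothetical.

```python
import math
import random

def fit_stump(X, y, w):
    """Weighted decision stump: (feature, threshold, polarity) with the lowest error."""
    best = None
    for j in range(len(X[0])):
        for t in sorted({row[j] for row in X}):
            for pol in (1, -1):
                pred = [1 if pol * (row[j] - t) >= 0 else 0 for row in X]
                err = sum(wi for wi, p, yi in zip(w, pred, y) if p != yi)
                if best is None or err < best[0]:
                    best = (err, j, t, pol)
    return best[1:]

def stump_predict(stump, row):
    j, t, pol = stump
    return 1 if pol * (row[j] - t) >= 0 else 0

def rusboost(X, y, rounds=10, minority_share=0.5, seed=0):
    rng = random.Random(seed)
    n = len(y)
    w = [1.0 / n] * n  # all examples start with equal weights
    minority = [i for i in range(n) if y[i] == 1]
    majority = [i for i in range(n) if y[i] == 0]
    # Majority examples kept so that the minority makes up `minority_share` of the sample.
    keep = max(1, round(len(minority) * (1 - minority_share) / minority_share))
    keep = min(keep, len(majority))
    ensemble = []
    for _ in range(rounds):
        idx = minority + rng.sample(majority, keep)  # random under-sampling
        total = sum(w[i] for i in idx)
        stump = fit_stump([X[i] for i in idx], [y[i] for i in idx],
                          [w[i] / total for i in idx])
        pred = [stump_predict(stump, row) for row in X]
        err = sum(wi for wi, p, yi in zip(w, pred, y) if p != yi)
        err = min(max(err, 1e-10), 1 - 1e-10)  # keep the logarithm finite
        alpha = 0.5 * math.log((1 - err) / err)
        ensemble.append((alpha, stump))
        # Increase weights of misclassified examples, decrease the others, renormalize.
        w = [wi * math.exp(alpha if p != yi else -alpha) for wi, p, yi in zip(w, pred, y)]
        s = sum(w)
        w = [wi / s for wi in w]
    return ensemble

def predict(ensemble, row):
    """Weighted vote of all weak hypotheses."""
    vote = sum(a * (1 if stump_predict(s, row) == 1 else -1) for a, s in ensemble)
    return 1 if vote > 0 else 0

# Hypothetical 1-D data: 20 non-lesional values below 2, 3 lesional values at 5 or above.
X = [[i / 10] for i in range(20)] + [[5.0], [5.5], [6.0]]
y = [0] * 20 + [1] * 3
model = rusboost(X, y, rounds=5)
print(predict(model, [5.2]), predict(model, [0.3]))
```

Here `minority_share` plays the role of the parameter M tuned later in the experiments, and `rounds` the role of T.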

3 Experimental Setup

The aim of our work is to build an automatic framework to locate FCDs in MRI studies that takes into account the problem of class imbalance (see Fig. 1). The scheme of our proposal begins with the unbalanced data, namely the characterization proposed by Adler et al. [1] over the MRI images of 22 patients (more information below). Because of the large number of samples, a feature selection stage is necessary: each of the 28 features in this dataset has about 3 million samples, so removing even one feature spares the algorithm about 3 million values, which may considerably reduce the computational cost. Finally, the classification stage focuses on dealing with the imbalance in the data, which has a ratio of about 1:49 between lesional and non-lesional vertices.

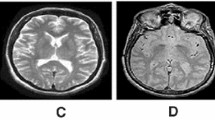

Dataset: This proposal is tested on a public FCD dataset (see Note 1) used by Adler et al. [1]. The dataset was originally generated from 27 patients with radiologically diagnosed FCD (mean age = \(11.57\pm 3.97\), range = \(3.79-16.21\) years, 10 females) who underwent 3D T1 and FLAIR imaging following permission by the Great Ormond Street Hospital ethical review board. FreeSurfer Software v5.3 was used to generate the structural features, and 5 patients were excluded due to severe motion artifacts. For the 22 included patients, the lesion masks were created manually on axial slices of the volumetric scan. Lesions were identified by combining information from T1 and FLAIR images, previous radiological reports, and reports from multi-disciplinary team meetings, with oversight from a consultant pediatric neurologist. The identified lesions were then registered onto the cortical surface reconstruction with FreeSurfer. For this dataset, \({\mathbf {X}}\in {\mathbb {R}^{N\times {P}}}\) with \(P=28\) and \(N=3307529\), and \({\mathbf {y}} =\left\{ y_1, \dots , y_N\right\} \) is the label vector. The class distribution is \(97.71\%\) for non-lesional vertices (0) and the remaining \(2.29\%\) for lesional vertices (1).

The following features were generated from the MRIs reconstructed with FreeSurfer: the cortical thickness, defined as the mean minimum distance between each vertex on the pial and white matter surfaces; the grey-white matter intensity contrast, calculated as the ratio of the grey matter to the white matter signal intensity; the FLAIR intensity, sampled at the grey-white matter boundary, at 25%, 50% and 75% depths of the cortical thickness, and at \(-0.5\) mm and \(-1\) mm below the grey-white matter boundary; the mean curvature, measured at the grey-white matter boundary as the inverse of the radius of an inscribed circle, which is equal to the mean of the principal curvatures; and, finally, the sulcal depth, computed as the dot product of the movement vectors of the cortical surface during inflation.

mRMR-SS Training: To increase the predictive performance of the ensemble classifier based on the RUSBoost technique, a feature selection method based on mutual information and the common sequential searches was performed, which gives more stability to the classifier when identifying FCDs with unbalanced data. The relevant features were selected by applying a threshold to the normalized explanation score of the features, keeping those features that jointly reach the set threshold. The weak learner used for this ensemble was a decision tree, and the following statistics were computed to compare the estimated performance of the method class by class: sensitivity, which quantifies the ability to avoid false negatives; specificity, which quantifies the ability to avoid false positives; and G-mean, which is the square root of the product of the class-wise sensitivities (sensitivity and specificity in the two-class case). A hold-out validation was implemented: the data was split into 70% for training and 30% for validation. The 70% training portion was split again into 70%–30% (train-test) 10 times. Then, a nested cross-validation was employed for each of the 10 repetitions to determine the optimal parameters of the classifier: the percentage of total instances represented by the minority class (M), the number of iterations (T), and the learning rate (Lr). The grid for this nested cross-validation ranges from 10% to 100% for M, from 100 to 1000 iterations for T, and from 0.1 to 1 for the learning rate of the ensemble. Once all the results were obtained, the best parameter configuration was selected, and the initial 30% of the data held out for validation was used to test the trained model.
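The three statistics follow directly from the confusion matrix. The minimal Python sketch below shows the computation and why G-mean is preferred over accuracy under imbalance; the function name `imbalance_metrics` and the toy predictions are hypothetical, and the function assumes that both classes occur in `y_true`.

```python
from math import sqrt

def imbalance_metrics(y_true, y_pred):
    """Return (sensitivity, specificity, G-mean) for binary labels, 1 = lesional."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    sensitivity = tp / (tp + fn)  # fraction of lesional vertices that are detected
    specificity = tn / (tn + fp)  # fraction of non-lesional vertices that are rejected
    return sensitivity, specificity, sqrt(sensitivity * specificity)

# Hypothetical example: 98 non-lesional and 2 lesional vertices.
y_true = [0] * 98 + [1] * 2
always_healthy = [0] * 100  # a classifier biased towards the majority class
print(imbalance_metrics(y_true, always_healthy))
```

A classifier that labels every vertex as non-lesional is 98% accurate on this toy set, yet its sensitivity and G-mean are both zero, which is the bias the G-mean is meant to expose.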

Comparison Methods: We compare our results with methodologies that address the class imbalance in the automatic detection of FCD: cluster-based under-sampling (CBUS) and CBUS with bagging [6], without under-sampling (WUS) [1], random under-sampling (RUS) [5], and the bagging approach [2] on five random samples of the majority class, each of the same size as the minority class. As in [6], we test all these schemes with neural network (NN) classifiers, trained using surface-based measures from the samples selected by each method. A single-hidden-layer neural network is chosen as the classifier because it can be rapidly trained on large datasets [1]. The number of hidden neurons in the network is determined through a principal component analysis applied to the input features, using the number of components that explain over 99% of the variance. In our case, we fit an ensemble over a grid of ten values for each parameter, and only the optimal parameter configuration is reported.
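The size of this parameter search can be made explicit with a short Python sketch. The ranges for M, T and Lr are those given in the training description; the even spacing of the ten values per parameter is an assumption made here for illustration, and the variable names are hypothetical.

```python
from itertools import product

# Ten values per parameter; even spacing is assumed, only the ranges come from the text.
M_grid = [10 * k for k in range(1, 11)]              # minority class share in %, 10..100
T_grid = [100 * k for k in range(1, 11)]             # boosting iterations, 100..1000
Lr_grid = [round(0.1 * k, 1) for k in range(1, 11)]  # learning rate, 0.1..1.0

grid = list(product(M_grid, T_grid, Lr_grid))
print(len(grid))  # number of configurations evaluated per repetition
```

Under this assumption each repetition of the nested cross-validation evaluates every (M, T, Lr) triple in `grid`, which motivates reporting only the best configuration.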

4 Results and Discussion

Figure 2 shows, for every feature, the explanation score calculated using the sequential search based on the mRMR criterion between the features and the target class. Although these scores are reported per feature, the nature of the FS and BE searches means that the score of each subsequent feature depends on the previously selected ones. The number of relevant features for both searches was established by computing the cumulative explanation score and cutting off when it decreases with respect to the previous stage. In this case, 15 features were selected for FS and 14 for BE. The top-ranked features of both searches are nearly the same, with 13 features in common, which indicates that our feature selection stage is stable. It is important to note that the best features in both cases correspond to the asymmetry between both hemispheres of the brain and to the contrast between gray and white matter in the FLAIR images.

As mentioned in Sect. 3, the RUSBoost ensemble has three free parameters to be tuned. Using the nested cross-validation scheme, we determined the best set of parameters for the classification problem, obtaining \(T=100\) iterations, \(Lr=0.1\) and \(M=60\%\); the results shown in Table 1 were obtained using this parameter configuration. Table 1 also shows the results obtained for the state-of-the-art methods: without under-sampling (WUS), random under-sampling (RUS), random bagging, cluster-based under-sampling (CBUS), and the combination of CBUS and bagging. All these under-sampling methods were used with a neural network classifier. Without under-sampling, the classifier achieves a high specificity (99.7%); nevertheless, the sensitivity is low (49.8%). This happens because the classifier is biased towards the healthy class due to the high class imbalance. When the input data is randomly under-sampled, the sensitivity increases, but the specificity decreases. However, the G-mean value, which encompasses sensitivity and specificity information, increases significantly. The bagging approach improves the G-mean value with respect to the two previous methods. Besides the implemented state-of-the-art methods, the RUSBoost classifier trained on the full feature set (no feature selection, NFS) shows equivalent results. It is important to note that, after applying our mRMR-SS stage, the ensemble with the same parameters and the same base learner significantly improves the FCD localization in terms of G-mean, specificity and sensitivity.

The results show higher performance and stability for the RUSBoost classifier than for the NN. In addition, the features selected by mRMR-SS exhibit more stability than the full set of features. The RUSBoost technique proves to be a solid alternative for the classification of unbalanced data such as FCD datasets. Although the results with all the features are already better than those of the other methods, the mRMR-SS feature selection strategy provides more stability to the classifier by selecting a set of features that satisfactorily explains the target labels, even when dealing with imbalanced data, in this case FCD data.

5 Conclusion and Future Work

We proposed a classification approach to locate FCD by using an mRMR sequential search for feature selection, which avoids results biased by the imbalance issue and provides stability to the classification stage, efficiently complementing the RUSBoost ensemble classifier for this task. The results show high performance in FCD localization in terms of G-mean, specificity and sensitivity. Thus, we conclude that this approach proves to be more stable and achieves better results than others proposed in the literature by applying an efficient feature selection stage. Also, mRMR-SS proved to be a solid and suitable feature selection strategy for selecting the features that best explain a target label, because it relies on probability distributions, which mitigate the imbalance, instead of on variability measures, which may be compromised by the number of examples and bias the strategy towards the majority class. As future work, we propose to reduce the computational cost by implementing this methodology in parallel. Another proposal is to implement the COBRA strategy for feature selection proposed by Naghibi et al. [11], and to complement this feature selection stage with a subsequent feature extraction stage, which also relies on mutual information measures. In terms of classification, we propose to test other weak learners, which may yield results similar to those of the decision tree but improve the computational time, or which may have fewer free parameters to be tuned.

Notes

1. Available online at https://doi.org/10.17863/CAM.6923.

References

1. Adler, S., Wagstyl, K., Gunny, R., et al.: Novel surface features for automated detection of focal cortical dysplasias in paediatric epilepsy. NeuroImage Clin. 14, 18–27 (2017)

2. Ahmed, B., et al.: Cortical feature analysis and machine learning improves detection of “MRI-negative” focal cortical dysplasia. Epilepsy Behav. 48, 21–28 (2015)

3. Elkan, C.: The foundations of cost-sensitive learning. In: International Joint Conference on Artificial Intelligence, vol. 17, pp. 973–978. Lawrence Erlbaum Associates Ltd. (2001)

4. He, H.: Imbalanced learning. In: Self-Adaptive Systems for Machine Intelligence, pp. 44–107 (2013)

5. Hong, S.-J., Kim, H., Schrader, D., Bernasconi, N., Bernhardt, B.C., Bernasconi, A.: Automated detection of cortical dysplasia type II in MRI-negative epilepsy. Neurology 83(1), 48–55 (2014)

6. Hoyos-Osorio, K., Álvarez, A.M., Orozco, Á.A., Rios, J.I., Daza-Santacoloma, G.: Clustering-based undersampling to support automatic detection of focal cortical dysplasias. In: Mendoza, M., Velastín, S. (eds.) CIARP 2017. LNCS, vol. 10657, pp. 298–305. Springer, Cham (2018). https://doi.org/10.1007/978-3-319-75193-1_36

7. Najm, I.M., Tassi, L., Sarnat, H.B., Holthausen, H., Russo, G.L.: Epilepsies associated with Focal Cortical Dysplasias (FCDs). Acta Neuropathol. 128(1), 5–19 (2014)

8. Peng, H., Long, F., Ding, C.: Feature selection based on mutual information criteria of max-dependency, max-relevance, and min-redundancy. IEEE Trans. Pattern Anal. Mach. Intell. 27(8), 1226–1238 (2005)

9. Seiffert, C., Khoshgoftaar, T.M., Van Hulse, J., Napolitano, A.: RUSBoost: a hybrid approach to alleviating class imbalance. IEEE Trans. Syst. Man Cybern. Part A Syst. Hum. 40(1), 185–197 (2010)

10. Wiwattanadittakul, N., et al.: Location, size of focal cortical dysplasia, and age of seizure onset in children who underwent epilepsy surgery. In: EPILEPSIA, NJ, USA, vol. 58 (2017)

11. Naghibi, T., Hoffmann, S., Pfister, B.: A semidefinite programming based search strategy for feature selection with mutual information measure. IEEE Trans. Pattern Anal. Mach. Intell. 37(8), 1529–1541 (2015)

Acknowledgments

This work was supported by grants provided by the project “Desarrollo de un sistema de apoyo al diagnóstico no invasivo de pacientes con epilepsia farmacoresistente asociada a displasias corticales cerebrales: método costo efectivo basado en procesamiento de imágenes de resonancia magnética” with code 111074455778, funded by COLCIENCIAS. J. Castañeda is partially funded by the project “Metodología para la segmentación automática de la corteza cerebral sobre imágenes MRI con base en características volumétricas usadas en técnicas de renderizado tridimensional por funciones de transferencia” of the Vicerrectoría de Investigación and by the Maestría en Ingeniería Eléctrica program, both from the Universidad Tecnológica de Pereira.

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Castañeda-Gonzalez, J., Alvarez-Meza, A., Orozco-Gutierrez, A. (2019). An Enhanced Sequential Search Feature Selection Based on mRMR to Support FCD Localization. In: Vera-Rodriguez, R., Fierrez, J., Morales, A. (eds) Progress in Pattern Recognition, Image Analysis, Computer Vision, and Applications. CIARP 2018. Lecture Notes in Computer Science(), vol 11401. Springer, Cham. https://doi.org/10.1007/978-3-030-13469-3_57

DOI: https://doi.org/10.1007/978-3-030-13469-3_57

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-13468-6

Online ISBN: 978-3-030-13469-3

eBook Packages: Computer Science (R0)