Abstract

Burns cause many deaths each year. Despite advances in burn care, accurate diagnosis and treatment of burn patients remain a major challenge. Automated methods that give an early assessment of the total body surface area (TBSA) burnt and/or the burn depth can be extremely helpful for better burn diagnosis. Researchers are considering the use of visual images of burn patients to develop such automated diagnosis methods. Skin architecture varies across different parts of the body, and so does the impact of burns on different body parts. It is therefore likely that automatic burn diagnosis methods based on body-part-specific visual images would be more effective than methods based on generic visual images. Considering this, we explore the problem of classifying the body part shown in a burn image. To the best of our knowledge, ours is the first attempt to classify burnt body part images. In this work, we consider four burnt body parts: face, hand, back, and inner forearm, and we present the effectiveness of independent and dependent deep learning models (using ResNet-50) in classifying images of these burnt body parts.

1 Introduction

Burns are among the most severe global health issues. According to the WHO (2018), an estimated 180,000 people lose their lives to burns each year [1], and many more are left permanently disabled. In India alone, more than 1 million people are moderately or severely burnt every year [1, 2]. Around 70% of burn injuries occur in the most productive age group (25 ± 10 years), and most patients belong to poor socioeconomic strata. Moreover, the life of a burn survivor is never the same afterwards, whether socially, economically, mentally or physically. Timely burn diagnosis and adequate first aid can limit burn severity and significantly reduce the number of burn deaths. However, there are several challenges, such as the limited number of expert dermatologists and burn centres, lack of awareness about first aid and prevention strategies, the use of manual methods in diagnosis, and heavy treatment costs. Despite advances in burn care, accurate diagnosis and treatment of burn patients remain a major challenge. Automated methods that give an early assessment of the total body surface area (TBSA) burnt and/or the burn depth can be extremely helpful for better burn diagnosis [2,3,4,5]. Laser Doppler imaging (LDI) has been found to be the most reliable technique for burn depth assessment, but high costs, delays and limited portability limit its real-world usage [4, 6, 7]. Given the wide availability of smartphones with good-resolution cameras and advances in data science, automated burn diagnosis methods based on visual images can be very useful.

A group of researchers from the University of Seville, Spain, has been actively contributing to burn depth assessment from color images for around the last two decades [3, 8,9,10]. More recently, they presented a psychophysical experiment and multidimensional scaling analysis to determine the physical characteristics that physicians use to diagnose burn depth [3]. In that work, they used a k-nearest neighbor classifier on a dataset of 74 images and achieved an accuracy of 66.2% in classifying the burn images into three burn depths. Earlier, Wantanajittikul et al. described a support vector machine (SVM) based approach for burn degree assessment [11], but they also considered very few images. Recently, Badea et al. [5] proposed an ensemble method built upon fusing decisions from standard classifiers (SVM and Random Forest) and the CNN architecture ResNet [12] for burn severity assessment. For feature extraction, they primarily used the Histogram of Topographical features (HoT). Their proposed system was able to identify light/serious burns with an average precision of 65%.

Skin architecture varies across different parts of the body [13, 14], and so the impact of burns on the skin of different body parts would also differ. Therefore, it is likely that automatic burn diagnosis methods based on body-part-specific visual images would be more effective than methods based on generic visual images. Considering this, we explore the problem of classifying the body part shown in a burn image. To the best of our knowledge, ours is the first attempt to classify burnt body part images. In this work, we consider four burnt body parts: face, hand, back, and inner forearm, and we aim to identify the body part in a given input burn image. We then discuss the performance of independent and dependent deep learning models, both based on the ResNet50 architecture, in classifying images of these burnt body parts.

2 Data Collection

Using the Google search engine, we gathered burn images of different body parts [15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39]. Of the 109 burn images we collected, 30 are face images, 35 are hand images, 23 are back images, and the remaining 21 are inner forearm images. The resolution of these images is typically in the range of (350 − 450) × (300 − 400) pixels. Figures 1, 2 and 3 show some sample burn images of the back, hand and inner forearm.

In one of our models, we also used a dataset of 4981 non-burnt skin images of the same four body parts: back, face, hand and inner forearm. These images were taken from available datasets [40,41,42,43].

3 Methodology

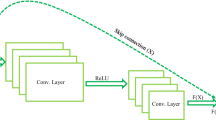

We use the ResNet50 deep learning architecture (shown in Fig. 4) for feature extraction [12]. We freeze all the layers of the ResNet-50 model and replace its output layer with a dense layer of 4 nodes, one per class. Average pooling is used for feature extraction. The model uses the softmax activation function, the Adam optimizer and the categorical cross-entropy loss function. We used two variants of this model to classify the body part in an input burn image: M1 (dependent) and M2 (independent, motivated by the limited number of burn images).

Fig. 4. Block diagram of the ResNet50 architecture used [44]
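To make the role of the 4-node output layer more concrete, the following is a minimal plain-Python sketch of the softmax activation and the categorical cross-entropy loss for a single image. It is not the authors' implementation: the class order, the function names and the example logits are assumptions chosen purely for illustration.

```python
import math

# Class order is an assumption for this sketch; the paper does not specify one.
CLASSES = ["back", "face", "hand", "inner_forearm"]


def softmax(logits):
    """Convert raw scores from the 4-node dense layer into probabilities."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def categorical_cross_entropy(true_index, probs, eps=1e-12):
    """Loss for one image whose one-hot label has a 1 at true_index."""
    return -math.log(max(probs[true_index], eps))


# Hypothetical logits for a single image (not taken from the paper).
logits = [2.0, 0.5, 0.1, -1.0]
probs = softmax(logits)
predicted = CLASSES[probs.index(max(probs))]

# The loss is small when the true class gets high probability, large otherwise.
loss_if_back = categorical_cross_entropy(CLASSES.index("back"), probs)
loss_if_hand = categorical_cross_entropy(CLASSES.index("hand"), probs)
```

With a one-hot label, the cross-entropy reduces to the negative log-probability assigned to the true class, which is why only `probs[true_index]` appears in the loss.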

For the dependent model M1, we trained and evaluated on the burn images themselves using leave-one-out cross validation (LOOCV). Given the limited availability of burn images, we also explored an independent deep learning model and its effectiveness for this classification problem. In the independent model M2, we used a total of 4981 non-burnt skin images of the four body parts (face images: 2000 [40], hand images: 2000 [41], back: 81 [42], and inner forearm: 800 images [43]) for training, a total of 1798 non-burnt skin images for validation [40,41,42,43], and the same 109 burnt body part images for testing. To account for class imbalance in training, we normalized the class weights to be inversely proportional to the number of images in each class.
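The class weighting described above can be sketched as follows. This is an illustrative sketch rather than the authors' code: the function name is hypothetical, and normalizing the weights so that they sum to 1 is an assumption, since the text does not state which normalization was used. The per-class counts are the ones listed in the paragraph above.

```python
def inverse_frequency_weights(class_counts):
    """Return weights proportional to 1 / count, normalized to sum to 1.

    The sum-to-1 normalization is an assumption made for this sketch.
    """
    inverse = {name: 1.0 / count for name, count in class_counts.items()}
    total = sum(inverse.values())
    return {name: value / total for name, value in inverse.items()}


# Per-class training image counts as listed for model M2.
train_counts = {"face": 2000, "hand": 2000, "back": 81, "inner_forearm": 800}
class_weights = inverse_frequency_weights(train_counts)

for name, weight in sorted(class_weights.items(), key=lambda item: -item[1]):
    print(f"{name:14s} {weight:.4f}")
```

Under this scheme the smallest class (back) receives the largest weight, so each of its images contributes more to the training loss than an image from the larger face or hand classes.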

4 Experimental Results

For the 109 burnt body part images, we obtained overall classification accuracies of 90.83% and 93.58% using the dependent (M1) and independent (M2) ResNet-50 models, respectively. M1 used LOOCV, so training was performed 109 times. It correctly classified 22 back, 27 face, 31 hand and 19 inner forearm burn images.
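The LOOCV procedure used for M1 can be sketched in plain Python as below. This is a minimal illustration under stated assumptions: the function names are hypothetical, and a toy nearest-neighbour rule on scalar features stands in for the ResNet-50 model, which would be retrained once per fold in the actual experiment.

```python
def leave_one_out_splits(n_samples):
    """Yield (train_indices, held_out_index) once for every sample."""
    for held_out in range(n_samples):
        train = [i for i in range(n_samples) if i != held_out]
        yield train, held_out


def loocv_accuracy(features, labels, fit_predict):
    """Fraction of samples classified correctly when each is held out in turn."""
    correct = 0
    for train_idx, test_idx in leave_one_out_splits(len(features)):
        train_x = [features[i] for i in train_idx]
        train_y = [labels[i] for i in train_idx]
        if fit_predict(train_x, train_y, features[test_idx]) == labels[test_idx]:
            correct += 1
    return correct / len(features)


def nearest_neighbour(train_x, train_y, x):
    """Toy stand-in classifier: label of the closest scalar training feature."""
    best = min(range(len(train_x)), key=lambda i: abs(train_x[i] - x))
    return train_y[best]


# Hypothetical toy data, used only to exercise the procedure.
toy_features = [0.0, 0.1, 0.2, 5.0, 5.1, 5.2]
toy_labels = ["a", "a", "a", "b", "b", "b"]
toy_accuracy = loocv_accuracy(toy_features, toy_labels, nearest_neighbour)

# With 109 images there are 109 folds, each training on the other 108.
n_folds = sum(1 for _ in leave_one_out_splits(109))
```

Each image is tested exactly once by a model that never saw it during training, which is what makes LOOCV attractive when only 109 labelled images are available.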

In comparison, model M2, which was trained without any burn images, misclassified 2 back and 3 inner forearm burn images, but it performed better on the other body parts: 29 face and 34 hand burn images were classified correctly (Tables 2 and 3). Table 1 presents the classification accuracy of the two models. When the top 2 estimated classes for each test image are considered, both models were found to be more than 99% accurate.
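The reported overall accuracies can be reproduced from the per-class counts with a few lines of arithmetic, sketched below. The inner forearm total (21) is derived as 109 − 30 − 35 − 23, and the M2 counts for back and inner forearm are derived from the stated numbers of misclassified images. The function names and the example probability vector for the top-2 check are illustrative assumptions.

```python
# Number of burn images per body part (inner forearm derived: 109 - 30 - 35 - 23).
totals = {"back": 23, "face": 30, "hand": 35, "inner_forearm": 21}

# Correctly classified images per body part.
m1_correct = {"back": 22, "face": 27, "hand": 31, "inner_forearm": 19}
m2_correct = {"back": 23 - 2, "face": 29, "hand": 34, "inner_forearm": 21 - 3}


def overall_accuracy(correct, totals):
    """Overall accuracy in percent across all classes."""
    return 100.0 * sum(correct.values()) / sum(totals.values())


def per_class_accuracy(correct, totals):
    """Accuracy in percent for each class separately."""
    return {name: 100.0 * correct[name] / totals[name] for name in totals}


def top_k_hit(probs, true_index, k=2):
    """True if the true class is among the k highest-probability classes."""
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    return true_index in ranked[:k]


m1_overall = round(overall_accuracy(m1_correct, totals), 2)  # 90.83 (99 of 109)
m2_overall = round(overall_accuracy(m2_correct, totals), 2)  # 93.58 (102 of 109)

# Hypothetical softmax output for one image whose true class has index 2.
example_probs = [0.1, 0.5, 0.3, 0.1]
hit = top_k_hit(example_probs, true_index=2, k=2)  # counted as correct under top-2
```

The top-2 criterion counts a prediction as correct when the true body part is either the most likely or the second most likely class, which is why it yields a higher figure than the top-1 accuracies.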

5 Conclusions and Future Work

We discussed a new problem, the classification of burnt body part images, which can help in the development of better burn diagnosis methods, and we presented the effectiveness of some deep learning models in addressing it under the constraint of limited availability of burn images. It is encouraging and interesting to note that a ResNet50-based model trained only on non-burnt images was able to classify burn images of 4 different body parts (face, back, hand, and inner forearm) with more than 93% accuracy. Future work could explore the robustness and effectiveness of the proposed method using a much larger number of burn images and more classes, by including other body parts such as feet, stomach or chest.

References

Burns (2018). http://www.who.int/mediacentre/factsheets/fs365/en/. Accessed 28 June 2018

A WHO plan for burn prevention and care. World Health Organization, Geneva (2008). http://apps.who.int/iris/bitstream/10665/97852/1/9789241596299_eng.pdf. Accessed 28 June 2018

Acha, B., Serrano, C., Fondon, I., Gomez-Cia, T.: Burn depth analysis using multidimensional scaling applied to psychophysical experiment data. IEEE Trans. Med. Imaging 32(6), 1111–1120 (2013)

Pape, S.A., Skouras, C.A., Bryne, P.O.: An audit of the use of laser doppler imaging (LDI) in the assessment of burns of intermediate depth. Burns 27, 233–239 (2001)

Badea, S.M., Vertan, C., Florea, C., Florea, L., Bădoiu, S.: Severe burns assessment by joint color-thermal imagery and ensemble methods. In: IEEE HealthCom (2016)

Monstrey, S., Hoeksema, H., Verbelen, J., Pirayesh, A., Blondeel, P.: Assessment of burn depth and burn wound healing potential. Burns 34(6), 761–769 (2008)

Wearn, C., et al.: Prospective comparative evaluation study of laser doppler imaging and thermal imaging in the assessment of burn depth. Burns 44(1), 124–133 (2018)

Roa, L., Gómez-Cía, T., Acha, B., Serrano, C.: Digital imaging in remote diagnosis of burns. Burns 25(7), 617–624 (1999)

Acha, B., Serrano, C., Roa, L.: Segmentation and classification of burn color images. In: Proceedings of the 23rd Annual International Conference of the IEEE Engineering in Medicine and Biology Society, vol. 3 (2001)

Carmen, S., Rafael, B.-T., Gomez-Cia, T., Acha, B.: Features identification for automatic burn classification. Burns 41, 1883–1890 (2015)

Wantanajittikul, K., Theera-Umpon, N., Auephanwiriyakul, S., Koanantakool, T.: Automatic segmentation and degree identification in burn color images. In: Biomedical Engineering International Conference (BMEiCON), pp. 169–173 (2012)

He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (2016)

Adabi, S., et al.: Universal in vivo textural model for human skin based on optical coherence tomograms. Sci. Rep. 7 (2017)

Langer, K.: On the anatomy and physiology of the skin. Br. J. Plast. Surg. 31(1), 3–8 (1978)

www.google.com. Accessed 03 Aug 2018

www.pinsdaddy.com/. Accessed 03 Aug 2018

www.Ytimg.com. Accessed 03 Aug 2018

www.istockphoto.com. Accessed 04 Aug 2018

www.dailystar.co.uk/real-life/459698/Rare-skin-conditions-third-degree-burns-inspirational-man. Accessed 04 Aug 2018

www.deviantart.com/arrowkitillashwood/art/Third-Degree-Burns-271699844. Accessed 04 Aug 2018

http://asktheburnsurgeon.blogspot.com/p/burn-pictures-and-videos.html. Accessed 04 Aug 2018

http://practicalplasticsurgery.org/2011/05/case-3-hand-injury-severe-burn-scar-contracture/. Accessed 04 Aug 2018

https://burnssurgery.blogspot.com/2012/01/?view=classic. Accessed 04 Aug 2018

www.dalafm.co.ke/a-woman-burns-her-grandson-in-siaya/news/county-news/. Accessed 30 July 2018

https://lekton.info/plus/t/third-degree-skin-burns/. Accessed 31 July 2018

http://styleupnow.com/beauty-tips-4-simple-ways-to-treat-burns-at-home/. Accessed 31 July 2018

https://salamanders.neocities.org/burnface.htm. Accessed 31 July 2018

http://olivero.info/bari/f/first-degree-burns-on-face/. Accessed 31 July 2018

www.thinglink.com/scene/716464757611167744. Accessed 31 July 2018

www.cyclingweekly.com/news/chris-froomes-mesh-skinsuit-sunburn-18852. Accessed 31 July 2018

www.dreamstime.com/stock-photo-burn-sun-body-human-back-burnt-sunburn-scald-back-s-beams-image97972700. Accessed 31 July 2018

commons.wikimedia.org/wiki/File:1Veertje_hand_burn.jpg. Accessed 30 July 2018

http://makeupbygill.blogspot.com/2011/10/special-effects-burns-assessment.html. Accessed 30 July 2018

www.derma-gel.net/case-studies/second-degree-burn-face/. Accessed 30 July 2018

csn.cancer.org/node/262311. Accessed 28 July 2018

www.thepinsta.com. Accessed 28 July 2018

http://1s-pozycjonowanie-narzedzia.info/2rd-degree-burn-pictures.html. Accessed 20 June 2018

www.enews.tech/chemical-burns-on-hands.html. Accessed 02 Aug 2018

http://obryadii00.blogspot.com/2011/03/pictures-of-2nd-degree-burns.html. Accessed 02 Aug 2018

Gourier, N., Hall, D., Crowley, L.J.: Estimating face orientation from robust detection of salient facial features. In: Proceedings of Pointing 2004, ICPR, International Workshop on Visual Observation of Deictic Gestures, Cambridge, UK (2004)

Mahmoud, A.: 11K Hands: gender recognition and biometric identification using a large dataset of hand images. arXiv:1711.04322 (2017)

Nurhudatiana, A., et al.: The individuality of relatively permanent pigmented or vascular skin marks (RPPVSM) in independently and uniformly distributed patterns. IEEE Trans. Inf. Forensics Secur. 8(6), 998–1012 (2013)

Zhang, H., Tang, C., Kong, A., Craft, N.: Matching vein patterns from color images for forensic investigation. In: Proceedings of IEEE International Conference on Biometrics: Theory, Applications and Systems, pp. 77–84 (2012)

https://www.codeproject.com/Articles/1248963/Deep-Learning-using-Python-plus-Keras-Chapter-Re. Accessed 31 July 2018

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Chauhan, J., Goswami, R., Goyal, P. (2019). Using Deep Learning to Classify Burnt Body Parts Images for Better Burns Diagnosis. In: Lepore, N., Brieva, J., Romero, E., Racoceanu, D., Joskowicz, L. (eds) Processing and Analysis of Biomedical Information. SaMBa 2018. Lecture Notes in Computer Science(), vol 11379. Springer, Cham. https://doi.org/10.1007/978-3-030-13835-6_4

DOI: https://doi.org/10.1007/978-3-030-13835-6_4

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-13834-9

Online ISBN: 978-3-030-13835-6

eBook Packages: Computer Science (R0)