Abstract

Online Lesbian, Gay, Bisexual, and Transgender (LGBT) support communities have emerged as a major social media platform for sexual and gender minorities (SGM). Because LGBT individuals are more likely to experience social isolation and family rejection, these communities play a crucial role in providing them a private and safe space for networking. However, the emergence of these online communities has introduced new public health concerns and challenges. Since LGBT individuals are more vulnerable to mental illness and suicide risk than the heterosexual population, crisis prevention and intervention are important. Nevertheless, such protection mechanisms have not yet become a serious consideration in the design of LGBT online support communities, partly because of the difficulty of identifying at-risk users effectively and in a timely manner. This pilot study explores the potential of identifying LGBT user discussions related to help-seeking through natural language processing and topic modeling. The findings suggest that the proposed approach is feasible: it identified topics and representative forum discussions that contain help-seeking information. This study provides important data to guide future improvements in data analytics and computer-aided modules for LGBT online communities, with the goal of enhancing suicide crisis prevention and intervention.

1 Introduction

1.1 LGBT Internet Support Communities

Online Lesbian, Gay, Bisexual, and Transgender (LGBT) communities have emerged as a major social media platform for sexual and gender minorities (SGM). Due to family rejection, isolation, stigma, and discrimination, many LGBT individuals choose to engage with LGBT online communities where they can network with peers in a relatively private and safe space. Notably, however, LGBT individuals experience high rates of mental illness (e.g., mood disorders, anxiety, and personality disorders) as well as a high risk of suicide compared with the heterosexual population [1]. Recent studies also indicated an increasing number of LGBT youth in these online communities [2]. The Centers for Disease Control and Prevention (CDC) has reported that suicide is the third leading cause of death for youth ages 10 to 14 and the second leading cause of death for youth ages 15 to 24 in the US [3].

These LGBT support communities have been an ideal venue for LGBT users to express themselves and to seek advice, but thus far most of the communities lack a function to protect this vulnerable population from high-lethality suicide risk. More specifically, they lack a module that identifies at-risk users in a timely manner, that is, users who show signs of an urgent need for support, severe suicidal ideation, or suicidal behaviors. As a result, timely prevention and intervention are barely possible.

1.2 Identifying Help-Seeking Behaviors from Users’ Written Speech

Help-seeking behaviors are an observable measure of the state of users’ psychosocial functioning, which may be used to identify at-risk users. Proactive intervention becomes feasible if at-risk users and their posts can be accurately identified in a timely manner. In the traditional research environment, in which researchers interact directly with participants, such behavioral data is collected through interviews, surveys, and clinical observations. Although this is a less challenging data collection process, studies have reported that many participants are reluctant to provide information about their needs [4, 5]. In the online environment, user-generated written speech is the key data source that enables indirect observation of users’ help-seeking behaviors.

Users in LGBT online support communities raise a variety of help-seeking topics such as identity confusion, networking, crises in relationships, and mental disorders. Many topics do not necessarily relate to suicide risk, but others deserve immediate investigation and intervention, e.g., those that express depression and suicidal ideation. Unfortunately, only a very small number of LGBT support communities, e.g., TrevorSpace, have recruited specialized forum administrators to provide referral information and interventions to those who are at risk. Even so, the service is not provided in a timely manner because of the high labor cost. Most LGBT support communities are only able to share suicide referral information in the announcement column. Hence, there is a pressing need to improve the timely identification of critical help-seeking topics for proactive intervention.

Presently, only a limited number of studies focus on the help-seeking behaviors of online LGBT users through their written speech. Among published work, most studies adopted content analysis, in which human judges code free text [6]. Content analysis has the unique advantage of disclosing detailed and clinically valuable information about users’ psychobehavioral states, but it is also criticized for being labor intensive and for questionable inter-rater reliability [7]. In recent years, computer-aided data processing and analysis have been increasingly used in the social, behavioral, and health sciences. Computational methods that originated in computer and information sciences, such as natural language processing (NLP) and machine learning, are now used in psychological studies [8]. For example, topic modeling has been used as a replacement for or supplement to content analysis in processing written speech that contains mental health-related information [9, 10]. One of the unique advantages of these computational methods is their efficiency and enhanced capability in processing large-scale data.

1.3 Approach

The primary aim of this study is to identify signs of help-seeking behaviors by analyzing the written speech generated by LGBT online users. These help-seeking behaviors are valuable data for identifying at-risk users.

To achieve this aim, we employed natural language processing (NLP) to assist in the automated analysis of textual data and the clustering of posts by topic. Specifically, we developed topic models [11] to automatically cluster the various topics of help-seeking posts. Our approach allows us to distinguish posts by the clusters of lexical information that a thread carries. Recent advances in clinical psychology, NLP, and machine learning have already demonstrated the feasibility of automatically identifying suicide-risk related clues by analyzing linguistic information such as individuals’ written speech on social media [12,13,14,15,16] and electronic health records [17]. We analyzed the historical posts from LGBT Chat & Forums, an anonymous LGBT online community consisting of ten thousand threads. The experimental procedures were as follows. (1) We employed standard NLP preprocessing to clean the free text data. (2) We implemented the Latent Dirichlet Allocation (LDA) algorithm to construct topic models. (3) We used the trained topic models to cluster posts by topic. Based on the model output, we examined the topics, the keywords representing the topics, and the associated posts relevant to help-seeking behaviors. We then discuss the potential design of an interactive module for the timely identification of at-risk users.

Our study provided important data to demonstrate the efficiency and effectiveness of identifying critical help-seeking behaviors from user-generated written speech. The findings will serve as preliminary data for our future plan of developing computational tools for the emerging LGBT online support communities. Our study also provided data to inform potential changes in public health policy that benefit the SGM population.

2 Methods

2.1 Materials

We used historical data of LGBT users’ written communications from LGBT Chat & Forums (https://lgbtchat.net/). This is an open-registration, anonymized forum that allows LGBT users to network, chat, and share experiences. Historical data refers to data that was generated at least six months before data collection.

Data were extracted by web crawling. We employed Boilerpipe3, a Python 3 wrapper package for Boilerpipe, to fetch the data. This produced a corpus consisting of 65,120 forum posts generated from December 2012 to June 2018. Data collection was completed in January 2019.

Although researchers do not need to read forum posts during automated data collection and processing, they may still come across adverse events or suicidal behaviors that remain relevant at present. Researchers were instructed to report any such observations immediately to forum administrators and local crisis intervention agencies.

2.2 Procedures

We performed standardized NLP procedures to prepare the corpus before it could be used to generate the topic model. Below we describe the NLP pre-processing and the topic modeling, respectively.

NLP Pre-processing.

The purpose of pre-processing the corpus is to extract the bag-of-words (BOW) representation of free text. In the corpus, each post is in free text format, which can be represented by a multiset of words that disregards word order and grammatical rules, i.e., BOW. Topics can be extracted from such a BOW representation. We followed the procedures below. The resulting dataset was in the BOW representation, with word indexes and word frequencies, and was ready for topic modeling.
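To make the BOW representation concrete, the following minimal sketch maps tokenized posts to lists of (word index, frequency) pairs. The toy posts and the helper name `to_bow` are hypothetical and are not taken from the study; the study itself relied on the Gensim package for this step.

```python
from collections import Counter

def to_bow(tokens, vocabulary):
    """Map a token list to sorted (word_id, count) pairs, ignoring unknown words."""
    counts = Counter(vocabulary[t] for t in tokens if t in vocabulary)
    return sorted(counts.items())

# Hypothetical toy posts, already tokenized (not drawn from the study corpus).
posts = [["feel", "alone", "school", "alone"], ["school", "friend", "feel"]]

# Assign each distinct word an integer index in order of first appearance.
vocabulary = {}
for post in posts:
    for token in post:
        vocabulary.setdefault(token, len(vocabulary))

# Each post becomes a multiset of word ids; word order is discarded.
bows = [to_bow(post, vocabulary) for post in posts]
```

Note that the two occurrences of "alone" in the first post collapse into a single pair with a count of 2, which is exactly the information a BOW-based topic model consumes.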

Cleaning-Up Text.

We used regular expressions to remove text irrelevant to the users’ written speech (e.g., HTML heading and tagged text), new-line characters, and symbols.
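A clean-up step of this kind might look like the sketch below. The specific patterns, the character whitelist, and the function name `clean_post` are assumptions made for illustration; the paper does not list the regular expressions that were actually used.

```python
import html
import re

TAG_RE = re.compile(r"<[^>]+>")                  # HTML tags such as <p> or <br/>
SYMBOL_RE = re.compile(r"[^A-Za-z0-9\s.,!?']")   # anything outside a small whitelist
SPACE_RE = re.compile(r"\s+")                    # whitespace runs, including new lines

def clean_post(raw):
    """Strip markup, symbols, and new-line characters from one raw post."""
    text = html.unescape(raw)        # decode entities such as &amp;
    text = TAG_RE.sub(" ", text)     # drop tags but keep the text between them
    text = SYMBOL_RE.sub(" ", text)  # replace stray symbols with spaces
    return SPACE_RE.sub(" ", text).strip()

cleaned = clean_post("<p>I feel alone.\n\nWhat now? :(</p>")
```

Replacing matches with a space rather than an empty string avoids accidentally gluing two words together when a tag sits between them.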

Tokenization.

This step tokenized sentences into words and removed punctuation. We used the tokenization module built into the Gensim package.

Removing Stop Words.

Stop words (e.g., “the”, “a”, and “an”) add noise when included in the BOW representation. To remove them, we used the list of stop words included in the NLTK package as a dictionary.
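The tokenization and stop-word steps can be sketched together as follows. This is a simplified stand-in that uses only the Python standard library: the regular-expression tokenizer and the tiny `STOP_WORDS` set are assumptions for illustration, whereas the study used Gensim's tokenizer and the much longer NLTK stop-word list.

```python
import re

# A tiny illustrative stop list; the study used the NLTK list instead.
STOP_WORDS = {"the", "a", "an", "and", "i", "to", "of", "is", "in"}

def tokenize(text):
    """Lowercase the text and keep alphabetic runs, which drops punctuation."""
    return re.findall(r"[a-z]+", text.lower())

def remove_stop_words(tokens, stop_words=STOP_WORDS):
    """Keep only the tokens that are not in the stop list."""
    return [t for t in tokens if t not in stop_words]

tokens = remove_stop_words(tokenize("I talked to a friend in the school."))
```

One limitation of this simple tokenizer is that contractions such as "don't" are split at the apostrophe, so a production pipeline would need a more careful rule.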

Bigram Modeling.

We treated pairs of words that frequently occur together in the corpus as bigrams (e.g., “Southern Europe”). The identification of bigrams and the merging of their words were performed with the Gensim package.
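The idea behind bigram detection can be illustrated with a deliberately simplified sketch that merges adjacent word pairs seen at least `min_count` times. This is not the scoring rule used by Gensim, which also weighs pair counts against the counts of the individual words; the function names and the threshold here are hypothetical.

```python
from collections import Counter

def find_bigrams(docs, min_count=2):
    """Return adjacent word pairs that occur at least min_count times."""
    pair_counts = Counter(pair for doc in docs for pair in zip(doc, doc[1:]))
    return {pair for pair, n in pair_counts.items() if n >= min_count}

def merge_bigrams(doc, bigrams):
    """Join detected pairs into single tokens, scanning left to right."""
    merged, i = [], 0
    while i < len(doc):
        if i + 1 < len(doc) and (doc[i], doc[i + 1]) in bigrams:
            merged.append(doc[i] + "_" + doc[i + 1])
            i += 2  # skip both words of the merged pair
        else:
            merged.append(doc[i])
            i += 1
    return merged

# Hypothetical tokenized posts for illustration only.
docs = [["visit", "southern", "europe"], ["southern", "europe", "trip"]]
bigrams = find_bigrams(docs)
merged = [merge_bigrams(d, bigrams) for d in docs]
```

After merging, "southern_europe" enters the BOW representation as a single vocabulary item rather than as two unrelated words.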

Lemmatization.

This step converted words into their root form (lemma). For example, the word “laughing” is converted to “laugh” and the word “students” is converted to “student”. Lemmatization was performed with the spaCy package.

Topic Modeling.

The topic model we used was built on the LDA algorithm as implemented in the Gensim package. LDA is a probabilistic graphical model built on the BOW representation. To discover an optimized topic model, we sought a balance between the coherence of the words captured by topics and the interpretability of the topics.
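For readers less familiar with LDA, its standard generative process can be summarized as below. The notation is an assumption chosen to match the parameter names mentioned in this paper: $K$ corresponds to num_topics, $\alpha$ and $\eta$ are the Dirichlet priors, $\theta_d$ is the topic mixture of post $d$, $\phi_k$ is the word distribution of topic $k$, and $w_{d,n}$ is the $n$-th word of post $d$.

```latex
\begin{aligned}
\phi_k &\sim \mathrm{Dirichlet}(\eta), & k &= 1,\dots,K \\
\theta_d &\sim \mathrm{Dirichlet}(\alpha), & d &= 1,\dots,D \\
z_{d,n} \mid \theta_d &\sim \mathrm{Categorical}(\theta_d), & n &= 1,\dots,N_d \\
w_{d,n} \mid z_{d,n}, \phi &\sim \mathrm{Categorical}(\phi_{z_{d,n}})
\end{aligned}
```

Training inverts this process: given only the observed words, it estimates the topic-word distributions $\phi_k$ and the per-post mixtures $\theta_d$. Because the model depends only on word counts per post, the BOW representation is sufficient as input.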

Building LDA Topic Models.

We built the models by employing the Gensim package. We used default parameters including chunksize (the number of posts in each training chunk), passes (total number of training passes), and alpha and eta (control of sparsity of topics). The only parameter we manipulated was num_topics, which represents the number of topics the model generated, discussed next.

Finetuning the Model with Optimized Coherence Score.

We conducted an experiment in which we trained topic models with different numbers of topics (i.e., num_topics). The number of topics ranged from 5 to 100 in increments of 5, resulting in 20 different values of num_topics. Each model was evaluated by its coherence score [18], which ranges from 0 to 1; a higher score represents better coherence. The models with the highest coherence scores were used to generate topics for further analysis.
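The search grid and the selection rule can be sketched as follows. The coherence values in `scores` are made-up numbers for illustration and are not results from this study; in practice each value would come from training and scoring one model. The function names are hypothetical.

```python
def candidate_topic_counts(start=5, stop=100, step=5):
    """Return the grid of num_topics values, inclusive of both ends."""
    return list(range(start, stop + 1, step))

def best_by_coherence(scores):
    """Return the num_topics with the highest coherence; ties go to the smaller."""
    return min(scores, key=lambda k: (-scores[k], k))

grid = candidate_topic_counts()

# Made-up coherence scores for three grid points (illustration only).
scores = {5: 0.50, 10: 0.42, 15: 0.42}
best = best_by_coherence(scores)
```

Selecting purely by coherence is only the first half of the procedure described in this paper, since the interpretability of the resulting topics was also taken into account.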

Visualization.

We created an interactive 2D visualization for generated topics. The pyLDAvis package was used for creating visualization.

Topic Analysis and Interpretation.

We first identified topics relevant to help-seeking behaviors by examining the set of topic keywords. Second, we retrieved the most representative posts for the relevant topics. This procedure enabled detailed observation by establishing a direct link between topics and posts.
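One plausible way to retrieve representative posts is to rank posts by the probability they assign to the topic of interest, as in the sketch below. The paper does not specify the exact ranking rule, so this is an assumption; the post identifiers and probabilities in `doc_topics` are hypothetical.

```python
def representative_posts(doc_topics, topic_id, top_n=3):
    """Rank posts by the probability they assign to topic_id, highest first."""
    ranked = sorted(
        ((probs.get(topic_id, 0.0), post_id) for post_id, probs in doc_topics.items()),
        key=lambda item: (-item[0], item[1]),  # break ties by post id
    )
    # Posts that give the topic zero probability are never representative.
    return [post_id for prob, post_id in ranked[:top_n] if prob > 0.0]

# Hypothetical per-post topic distributions, keyed by topic number.
doc_topics = {
    "post_a": {22: 0.70, 5: 0.30},
    "post_b": {22: 0.15, 1: 0.85},
    "post_c": {5: 1.00},
}
top = representative_posts(doc_topics, topic_id=22, top_n=2)
```

Reading the top-ranked posts alongside the topic keywords is what allows a human reviewer to judge whether a topic truly reflects help-seeking.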

3 Results

3.1 Experimental Results for Optimized Models

We tested 20 topic models, each with a different number of topics (num_topics). Figure 1 shows the coherence score for each run. In general, the coherence score dropped slowly as the number of topics increased. The best coherence (0.47) was recorded with 5 topics. However, to identify an optimized model, we also needed to evaluate the interpretability of the topics the model produced, as detailed in the next section.

3.2 Topic Interpretation

To identify an optimized topic model that generates meaningful topics, we started from the model with num_topics = 5 and increased num_topics step by step. Models with comparatively high coherence scores tended to yield fewer meaningful topics. To balance these two factors, we selected a model with 35 topics (coherence score = 0.4) for downstream analysis.

Examining Relevant Topics.

In Table 1, we selected the interpretable topics and the corresponding keywords. Each topic is represented by a set of 10 keywords that have the highest contribution to the topic.

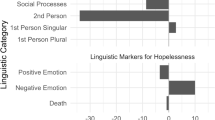

Among the 35 topics, Topic #22 relates to posts that contain help-seeking behaviors. On the left-hand side of Fig. 2, the bubbles represent topics, and the area of a bubble represents the prevalence of its topic. Semantically close topics lie close together, or even overlap, in the figure. The bar chart on the right-hand side of the figure shows the top keywords of a topic as well as their frequency and proportion. Topic #22 was distributed over a number of salient keywords including “die”, “kill”, “cry”, and “dead”. Most of the topic’s keywords were unique to Topic #22, except for “medical”, “would”, and “alone”.

Topic #30 is also relevant to help-seeking posts. It was represented by keywords such as “pain”, “angry”, and “apart” (see Fig. 3). All of the keywords made unique contributions to Topic #30. Overall, both topics are less prevalent than topics #1, #2, #3, #4, etc.

Of particular interest with regard to potentially vulnerable users in the LGBT online support communities, we found that the keyword “school” occurs in many posts, suggesting that there may be a substantial number of LGBT youth users in the online communities. Our model shows that “school” contributes primarily to Topic #5 (see Fig. 4). Although this topic was not included in Table 1 because it lacks a meaningful interpretation, the finding suggests interesting follow-up research questions.

Representative Posts over Topics.

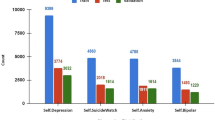

We further explored the identification of representative posts for a topic of interest. Posts relating to help-seeking behaviors contain shared experiences of depression, hopelessness, pain, self-harm, etc. Users tended to be supportive and provided emotional support, social support, and information about suicide hotlines. See Table 2 for examples of discussion in the posts.

4 Discussion

4.1 Major Findings

In this study, we explored the potential of using topic models to identify LGBT forum posts that relate to help-seeking behaviors. One premise of this approach is that the topics of posts are represented by multisets of words. Our findings suggest that a spectrum of meaningful topics can be identified by developing topic models over LGBT forum posts. This finding is in line with a number of studies that leverage topic models to discover topics of interest from textual social media data [19,20,21].

Among the topics we found, we underscore the help-seeking topics, which fall within the scope of this study. Help-seeking behaviors exist in the LGBT online support communities. Such posts are often associated with shared negative emotions and experiences, mental or physical pain, suicidal ideation, and even signs of attempts. Causes include rejection, self-disappointment, confusion related to gender and sexual identity, etc. Based on our observation, users of the LGBT forum are supportive, especially those who have been in the same or similar situations before.

In addition to the help-seeking related topics, we also identified a number of meaningful topics that are widely discussed in the community. These topics are equally relevant to research questions regarding gender and sexual identity, isolation, rejection, bullying, and a number of contributing factors to mental disorders. Such research questions are traditionally studied in face-to-face settings such as interviews and questionnaires. Our approach has the potential to provide an innovative alternative by collecting data from written speech generated by LGBT users. Compared with content analysis, which is commonly used to analyze interview and narrative data, NLP and topic modeling can overcome shortcomings such as low efficiency.

4.2 Implications for LGBT Online Support Communities

In this study, we strove to collect preliminary data to contribute to the improvement of LGBT online communities. The LGBT population is vulnerable to mental health problems and suicide. Presently, most LGBT online support communities have limited protective mechanisms for proactive suicide intervention and prevention, which remains a significant public health concern. Since the findings suggest the feasibility of automated identification of at-risk posts, we recognize the potential to develop a real-time monitoring module to identify users who need immediate assistance.

4.3 Limitations and Future Direction

The present study would be less valuable without a discussion of its limitations. First, the outcomes of the topic model are limited in terms of interpretability. This is a recognized common problem of topic modeling, and in our study only 11 out of 35 topics carry obvious meanings. The rest of the topics are either less salient or contain implicit meanings. Second, identifying meaningful topics requires domain knowledge. The process is less objective, although this was partly compensated for by calculating the coherence score of the model. Third, the corpus of LGBT forum posts contains a considerable portion of noisy information. We noticed that meaningful topics are generally less prevalent, whereas many less meaningful topics are more prevalent. This is probably because discussion in the LGBT forum involves a broad range of mixed themes, including lyrics, movies, and jargon, that are less suitable for capture by a BOW-based model such as a topic model.

In future studies, we aim to build on the present approach to improve the accuracy, interpretability, and generalizability of the NLP methods. For example, we believe that a specialized language system can provide references for the machine to understand contextual semantic information in narratives generated by LGBT users. Presently, there is no published tool for that purpose. In addition, a customized NLP pipeline may improve the text pre-processing and, in turn, the performance of the model. Our next step is also to develop a data processing tool specialized for LGBT online communities, with the goal of improving proactive intervention through data science.

References

1. Haas, A.P., et al.: Suicide and suicide risk in lesbian, gay, bisexual, and transgender populations: review and recommendations. J. Homosex. 58, 10–51 (2010)

2. GLSEN, CiPHR, & C.: Out online: the experiences of LGBT youth on the internet, New York (2013)

3. Xu, J., Murphy, S.L., Kochanek, K.D., Arias, E.: Mortality in the United States, 2015 (2016)

4. Provini, C., Everett, J.R., Pfeffer, C.R.: Adults mourning suicide: self-reported concerns about bereavement, needs. Death Stud. 24, 1–19 (2000)

5. Prescott, T.L., Phillips II, G., DuBois, L.Z., Bull, S.S., Mustanski, B., Ybarra, M.L.: Reaching adolescent gay, bisexual, and queer men online: development and refinement of a national recruitment strategy. J. Med. Internet Res. 18, e200 (2016)

6. Griffiths, K.M., Calear, A.L., Banfield, M., Tam, A.: Systematic review on Internet Support Groups (ISGs) and depression (2): what is known about depression ISGs? J. Med. Internet Res. 11, e41 (2009)

7. Lombard, M., Snyder-Duch, J., Bracken, C.C.: Content analysis in mass communication: assessment and reporting of intercoder reliability. Hum. Commun. Res. 28, 587–604 (2002)

8. Kern, M.L., et al.: Gaining insights from social media language: methodologies and challenges. Psychol. Methods 21, 507 (2016)

9. Nguyen, T., Phung, D., Dao, B., Venkatesh, S., Berk, M.: Affective and content analysis of online depression communities. IEEE Trans. Affect. Comput. 5(3), 217–226 (2014)

10. Carron-Arthur, B., Reynolds, J., Bennett, K., Bennett, A., Griffiths, K.M.: What’s all the talk about? Topic modelling in a mental health internet support group. BMC Psychiatry 16, 367 (2016)

11. Steyvers, M., Griffiths, T.: Probabilistic topic models. In: Handbook of Latent Semantic Analysis. A Road to Meaning, vol. 55, pp. 424–440 (2007)

12. Zhang, L., Huang, X., Liu, T., Li, A., Chen, Z., Zhu, T.: Using linguistic features to estimate suicide probability of Chinese microblog users. In: Zu, Q., Hu, B., Gu, N., Seng, S. (eds.) HCC 2014. LNCS, vol. 8944, pp. 549–559. Springer, Cham (2015). https://doi.org/10.1007/978-3-319-15554-8_45

13. De Choudhury, M., Kiciman, E., Dredze, M., Coppersmith, G., Kumar, M.: Discovering shifts to suicidal ideation from mental health content in social media. In: Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, CHI 2016, pp. 2098–2110 (2016)

14. Burnap, P., Colombo, W., Scourfield, J.: Machine classification and analysis of suicide-related communication on Twitter. In: Proceedings of the 26th ACM Conference on Hypertext & Social Media, HT 2015, pp. 75–84 (2015)

15. O’Dea, B., Wan, S., Batterham, P.J., Calear, A.L., Paris, C., Christensen, H.: Detecting suicidality on Twitter. Internet Interv. 2, 183–188 (2015)

16. Braithwaite, S.R., Giraud-Carrier, C., West, J., Barnes, M.D., Hanson, C.L.: Validating machine learning algorithms for Twitter data against established measures of suicidality. JMIR Ment. Health 3, e21 (2016)

17. Walsh, C.G.: Predicting risk of suicide attempts over time through machine learning. Clin. Psychol. Sci. 5(5), 457–469 (2017)

18. Newman, D., Lau, J.H., Grieser, K., Baldwin, T.: Automatic evaluation of topic coherence. In: Human Language Technologies: The 2010 Annual Conference of the North American Chapter of the Association for Computational Linguistics, pp. 100–108 (2010)

19. Kumar, M., Dredze, M., Coppersmith, G., De Choudhury, M.: Detecting changes in suicide content manifested in social media following celebrity suicides. In: Proceedings of the 26th ACM Conference on Hypertext & Social Media, pp. 85–94 (2015)

20. Resnik, P., Armstrong, W., Claudino, L., Nguyen, T., Nguyen, V.-A., Boyd-Graber, J.: Beyond LDA: exploring supervised topic modeling for depression-related language in Twitter. In: Proceedings of the 2nd Workshop on Computational Linguistics and Clinical Psychology: From Linguistic Signal to Clinical Reality, pp. 99–107 (2015)

21. Huang, X., Li, X., Liu, T., Chiu, D., Zhu, T., Zhang, L.: Topic model for identifying suicidal ideation in Chinese microblog. In: Proceedings of the 29th Pacific Asia Conference on Language, Information and Computation, pp. 553–562 (2015)

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Liang, C., Abbott, D., Hong, Y.A., Madadi, M., White, A. (2019). Clustering Help-Seeking Behaviors in LGBT Online Communities: A Prospective Trial. In: Meiselwitz, G. (eds) Social Computing and Social Media. Design, Human Behavior and Analytics. HCII 2019. Lecture Notes in Computer Science(), vol 11578. Springer, Cham. https://doi.org/10.1007/978-3-030-21902-4_25

DOI: https://doi.org/10.1007/978-3-030-21902-4_25

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-21901-7

Online ISBN: 978-3-030-21902-4

eBook Packages: Computer Science (R0)