Abstract

In the recent past, some electoral decisions have gone against pre-election expectations, which has led to greater scrutiny of the role of social networks in the creation of filter bubbles. In this article, we examine whether Facebook usage motives, personality traits of Facebook users, and awareness of the filter bubble phenomenon influence whether and how Facebook users take action against filter bubbles. To answer these questions, we conducted an online survey with 149 participants in Germany. In our sample, the motives for using Facebook and the awareness of the filter bubble were related to whether a person consciously takes action against the filter bubble, whereas we found no influence of personality traits. The results show that Facebook users know for the most part that filter bubbles exist, but still do little about them. We therefore conclude that in today’s digital age, it is important to inform users not only about the existence of filter bubbles, but also about possible strategies for dealing with them.

1 Introduction

In the recent past, the results of some political elections have been surprising, because the pre-election polls, which were supposed to give an idea of how the election would turn out, strongly indicated different outcomes. Nowadays, many people find information, including political information, on the Internet and via social media. This has also led to social networks being viewed more critically [11]. Accordingly, their role in the emergence of filter bubbles and echo chambers has been discussed [18]. By now, it can be assumed that many Facebook users have heard about the phenomenon of the filter bubble, as it has been increasingly addressed in the media as well as on social media platforms [2]. Nevertheless, it is believed that people who use social media to find information are more likely to be in filter bubbles than people were when traditional media were the mainstream. While some are beginning to believe that the use of social networks may even lead to exposure to more diverse information [25], others are still of the opinion that they lead to stronger filter bubble effects. Personalization algorithms ensure that users only see certain content depending on their preferences [29, 46] and thus limit the diversity of opinions that are visible to the users [4, 40, 41]. Users often do not notice this and are not made aware of it either [51]. This is why users might take no measures against the filter bubbles in which they find themselves.

So far, little has been said about whether knowing about filter bubbles and perceiving them as problematic makes people take action against their effects. That is why, as part of this study, we investigate whether Facebook users are actually aware that filter bubbles exist and whether they consider them to be problematic, or whether they still do not know that filter bubbles exist and therefore do not inform themselves comprehensively outside of possible bubbles. We also consider whether filter bubbles are problematic for democracies in the eyes of our participants, whether they actively take action against them, and if so, which factors lead to these actions. Furthermore, we examine how the participants’ Facebook usage motives, Big Five personality traits, and awareness of filter bubbles influence whether and how they take action against filter bubbles. We not only asked the participants to indicate whether they would generally take active action against filter bubbles, but we also asked them about specific strategies, such as deleting the browser history or the stored cookies.

2 Related Work

With the digital age and the rise of social media, phenomena such as filter bubbles, echo chambers, and recommendation algorithms have emerged. This section describes what these are.

2.1 Social Media, Algorithms and Filter Bubbles

According to agenda-setting theory, the media determine which topics are part of the public discourse and which are not. They draw attention to a topic and put it on the public agenda by reporting it as news [42]. Thus, the media have a direct influence on the formation of opinion [13].

Digitalization is also accompanied by the trend that traditional media is upstaged by social media and user-generated content [35]. Today, anyone can write and publish their own news and reach a wide audience through social media [5]. Even most traditional media outlets nowadays provide online platforms and spread their news via social media. The fact that anyone can spread news on the Internet leads to a flood of information that is hardly manageable. People can now access news and political information on the Internet from a variety of media and sources [1]. Thus, individuals can no longer read all content and absorb all available information. Instead they have to choose in advance which content they want to consume.

When they have the choice, individuals usually select content that reflects their own opinion and avoid content that contradicts it. In research, this effect is referred to as selective exposure (see e.g. [7, 14, 19, 36]). According to the cognitive dissonance theory by Festinger (1957) (see e.g. [7, 14, 19]), people experience it as positive when they are confronted with information that matches their opinion and are more likely to reject information that contradicts their opinion.

Echo chambers are a logical extension of this effect. In echo chambers, individuals surround themselves with like-minded people and non-confrontational information, and communicate about certain events only within this environment [53].

Another contributing factor stems from a problem mentioned earlier. The Internet and social media provide so much information that it is difficult for individuals to decide which content is relevant to them. Recommender systems are a technical development that can and should help Internet users to select relevant information [50]. In these systems, an algorithm filters which user sees which information. It attempts to provide content according to the user’s taste and interests.

Possible consequences of recommendation algorithms are filter bubbles [46] and algorithmic echo chambers. In such bubbles or chambers, users only see what they like, such as opinions and political positions that match their own beliefs [24], without being aware of it.

Recommender systems are well-intended (they aim to reduce information overload) but come with a cost: if applied to news content, they might restrict the diversity of the content that a user is shown, thus leaving them in an opinionated filter bubble of news [10, 24, 46]. There are tools, however, that attempt to dampen the filter bubble effect of recommender systems. Some of those tools leave the responsibility of defining rules for content selection entirely with the user, while others work in the background and do not notify the user about the changes [11].

2.2 Underlying Algorithms

Ideally, the quality of a recommender system is measured by whether the users are satisfied with it. In order to achieve the greatest possible user satisfaction, filter algorithms are often personalized [38]. Various techniques or procedures are used to personalize the recommendations. Content-based recommendations or collaborative filtering are most frequently used. Content-based recommendation uses the content in the data uploaded by users. The content is used to estimate what users would like to see. In collaborative filtering, recommendations are made on the basis of similarity of users. Preferences of people who show similar interests are used here [9, 38]. Besides these, there are many combinations of techniques used as well [47]. One well-known example of websites that use the above described algorithms is Facebook (see e.g. [4]).
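To make the idea of collaborative filtering more concrete, the following is a minimal sketch of a user-based variant: unseen items are ranked by the ratings of other users, weighted by how similar those users are to the target user. The user names, items, ratings, and the choice of cosine similarity are illustrative assumptions and do not describe the algorithm of Facebook or of any system cited above.

```python
from math import sqrt

# Hypothetical toy ratings (user -> {item: rating}); not data from this study.
ratings = {
    "ana":  {"post_a": 5, "post_b": 4, "post_c": 1},
    "ben":  {"post_a": 4, "post_b": 5, "post_d": 5},
    "cara": {"post_a": 1, "post_c": 5, "post_e": 4},
}


def cosine_similarity(u, v):
    """Cosine similarity between two sparse rating dictionaries."""
    shared = set(u) & set(v)
    if not shared:
        return 0.0
    dot = sum(u[item] * v[item] for item in shared)
    norm_u = sqrt(sum(r * r for r in u.values()))
    norm_v = sqrt(sum(r * r for r in v.values()))
    return dot / (norm_u * norm_v)


def recommend(user, ratings, top_n=1):
    """Rank items the user has not rated by similarity-weighted ratings of others."""
    scores = {}
    for other, other_ratings in ratings.items():
        if other == user:
            continue
        sim = cosine_similarity(ratings[user], other_ratings)
        for item, rating in other_ratings.items():
            if item not in ratings[user]:
                scores[item] = scores.get(item, 0.0) + sim * rating
    return sorted(scores, key=scores.get, reverse=True)[:top_n]


print(recommend("ana", ratings))
```

In this toy example, the item favoured by the most similar user is ranked first. This is the mechanism by which such a system can narrow what a user sees: content from dissimilar users receives little weight.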

2.3 Filter Bubbles and Echo Chambers

The possibility to choose information sources on the Internet can lead to echo chambers being more frequent and more influential [19], but nevertheless this kind of balkanization can also happen offline. For instance, people usually buy only newspapers they already know and like, which again reinforces their specific views [26, 52, 53].

Filter bubbles are seen as negative because people inside of them get used to different truths about our world. Pariser also sees filter bubbles as a stronger threat than echo chambers. He justifies this view with the fact that people in filter bubbles are alone, since the algorithms present individualized content to each user [46]. Furthermore, filter bubbles are invisible, as individuals usually cannot see how personalization algorithms decide which content is eventually shown to them. In addition, individuals do not consciously make the decision to go into a filter bubble, but this happens automatically and they are not notified when they are inside. In contrast, the choice of a newspaper with a certain political direction seems more like a conscious decision. Moreover, some studies dealing with strategies for bursting filter bubbles have shown that the continuous improvement of personalization algorithms is accompanied by some fears [11, 31, 45, 55].

Filter bubbles also affect political opinion formation and can be a threat to democracies, regardless of the form of democracy [10, 11]. In this context, aspects like customization and selective exposure and their effects on users have been investigated extensively [20]. According to Dylko et al., the effect of filter bubbles on political opinion formation is stronger when individuals are in groups with mostly ideologically moderate others and for messages which contradict their own opinions. In the past, political campaign makers have taken advantage of this, for example in Donald Trump’s 2016 presidential campaign. In order to win voters, social media profiles were analyzed and campaigns were tailored to individual users [30].

Algorithms may influence the formation of political opinion as well (see e.g. [32, 33]). The possible negative consequences of algorithms would be eliminated if all people were aware that filter bubbles exist and knew whether they are caught inside one. However, some studies have shown that most people are not aware that algorithms filter their content [22, 23, 48].

The possible negative consequences of recommender systems, echo chambers, and filter bubbles have often been considered, but many scientists are still unable to foresee additional consequences. While some studies showed that selective exposure occurs, they also pointed out that people still perceive opinions that contradict their attitudes (see e.g. [12, 24, 26, 37]).

Especially with regard to politics, frequently discussed effects such as increasing polarization and fragmentation of opinions were not clearly shown in some studies [8, 25, 54]. Dubois and Blank argue that even if people use a relatively limited information gathering platform, they are likely to receive more information on different platforms [19].

There are studies that have found echo chambers on Twitter (see e.g. [6, 15]) and other studies that have not found them on Facebook (see e.g. [4, 27]).

Nevertheless, other researchers have found that algorithms accurately predict user characteristics such as political orientation [3, 21, 39]. Bakshy et al. (2015) discovered that Facebook filters out a small amount of cross-cutting content in the United States, but they also determined that the decisions of individuals have a greater impact on whether they see different content. For example, the choice of friends on Facebook has an influence [4].

As shown, there is little empirical evidence on the influence of filter bubbles. However, it is conceivable that the consequences of filter algorithms will only become visible when the algorithms function better. A possible threat to democracy can nevertheless exist and should not be ignored.

2.4 Strategies Against Filter Bubbles

Following the criticism of filter bubbles, research is being conducted to figure out how to combat them [50]. Strategies that can be used to combat filter bubbles include deleting web history, deleting cookies, using the incognito option of a browser, and liking different things or everything on a social media site [11].

Some developers of avoidance strategies argue that filter bubbles are actually an unintended failure of algorithms. In their view, the algorithms do not ensure that users see the search results they want; instead, they hide such results and deprive users of their autonomy. These developers are mainly trying to raise people’s awareness of the existence of filter bubbles to give them more control [11]. For instance, Munson et al. have developed a tool that shows the user a histogram that classifies the texts they have read politically from left to right [44].

3 Method

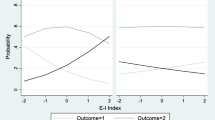

To find out whether individuals are aware of filter bubbles and to see if and how awareness of filter bubbles, Facebook usage motives, and personality traits influence whether individuals consciously take action against filter bubbles (see Fig. 1), we conducted an online survey from December 2017 to January 2018 in Germany.

The survey consisted of three parts. First, we asked for some demographic data and user factors (part 1), then we looked at the participants’ motives for using Facebook (part 2). Finally, we asked the participants if they had heard of filter bubbles before, what they thought about them, and if and how they would take action against filter bubbles (part 3). In the following, we describe the assessed variables.

Demographics. As demographics we asked the participants for their age, gender, and educational level.

Big Five. We further surveyed personality traits using items from the Big Five model inventory [49]. The Big Five personality traits belong to a well-established model of personality psychology (cf. [17, 28, 34]) that divides the personality of individuals into five main categories: openness, conscientiousness, extraversion, agreeableness, and neuroticism. We used the BFI-10 short scale introduced by Rammstedt et al. [49].

Facebook Usage Motives. Furthermore, we measured what our participants use Facebook for. In the questionnaire, we offered six possible reasons to choose from: to stay in touch with friends, to find new friends, for professional purposes, to inform oneself about political/social topics, to inform others about political/social topics, and to express one’s opinion on political/social topics.

Filter Bubble Awareness. We also investigated the participants’ awareness of filter bubbles. We asked the participants if they had already heard of filter bubbles, if they believe that filter bubbles exist, if filter bubbles are a problem, if filter bubbles affect them personally, and if filter bubbles display only interesting posts. In addition, we asked the participants if they would take conscious action against filter bubbles. Finally, we asked which avoidance strategies they apply. Participants were asked to consider five given strategies: whether they delete the browser history, use the incognito function, click and like many different posts to enforce diversity, use the explore-button, which shows many different news items, and unsubscribe from some friends/pages.

Except for age, gender, and education, all scales were measured using agreement on a six-point Likert scale from 1 = do not agree at all to 6 = fully agree.

3.1 Statistical Methods

For the data analysis, we used R version 3.4.1 with RStudio. To check the reliability of the scales, we used the jmv package. For correlations, we used the corrplot package. Further, we used the likert package to analyze Likert data. To measure internal reliability, we used Cronbach’s alpha. Alpha values of \(\alpha > 0.7\) indicate a good reliability of a scale. We provide the 95% confidence intervals for all results, and we tested for null-hypothesis significance at a significance level of \(\alpha = .01\).
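For readers unfamiliar with Cronbach’s alpha, the following is a minimal sketch of how the coefficient can be computed from item scores, using the standard formula \(\alpha = \frac{k}{k-1}\left(1 - \frac{\sum_i s_i^2}{s_T^2}\right)\) with \(k\) items, item variances \(s_i^2\), and the variance \(s_T^2\) of the summed scale. The sketch is written in Python rather than R, it is not the code used for the analysis in this paper, and the scores are hypothetical.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a scale.

    items: list of k lists, each holding one item's scores for the same
    n respondents (same respondent order in every list).
    """
    k = len(items)
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    n = len(items[0])
    if any(len(item) != n for item in items):
        raise ValueError("all items need the same number of respondents")
    # Sum score of each respondent across all items.
    totals = [sum(item[r] for item in items) for r in range(n)]
    item_variance_sum = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


# Hypothetical answers of five respondents to three items on a 1-6 scale.
example_items = [
    [2, 4, 5, 3, 6],
    [3, 4, 6, 2, 5],
    [2, 5, 5, 3, 6],
]
alpha = cronbach_alpha(example_items)
print(round(alpha, 2))
```

With these made-up scores, the value lies above the 0.7 threshold named above, because the three items rise and fall together across respondents.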

4 Results

To see what our sample looks like, we first look at the results from a descriptive point of view. The participants of our survey are on average 26.2 years old (\(n =149, SD = 7.45\)); 84 (56%) are female and 65 (44%) are male. Furthermore, the participants are highly educated: a total of 85 (57%) have a university degree, 52 (35%) a baccalaureate, six (4%) a vocational baccalaureate, five (3%) a completed training, and one a secondary education.

Looking at the Big Five personality traits, we see that the participants score highest on openness and lowest on neuroticism, but they did not show unusual outliers (see Fig. 2).

When we asked whether the participants were using Facebook as much as, less than, or more than they did in the past, most participants indicated less frequent use (65%), some indicated the same (33%), and only a few indicated more frequent use (2%).

Our participants use Facebook for different reasons, but as Fig. 3 shows, their primary use is to stay in touch with friends, whereas they use it less for other reasons such as to express their opinions on political/social topics, or to inform themselves or others about political and/or social topics.

Filter Bubbles. Next we look at the results regarding filter bubbles. Here, we measured the participants’ awareness of filter bubbles, if they took active action against filter bubbles as well as if they applied given avoidance strategies against filter bubbles.

Regarding the awareness of filter bubbles, we found that the majority (73%, see Fig. 4) of the participants had already heard of the theory of the filter bubble before this study, that nearly all assume that filter bubbles exist (96%), and that most of them evaluate them as problematic (80%). Somewhat fewer, but still the majority of the participants (63%), know that they are affected by filter bubbles. However, considerably fewer participants (31%) deliberately take action against the filter bubble, and only a minority (41%) think that filter bubbles show them more interesting posts.

Next, we looked for the reasons why someone reports taking active action against filter bubbles. To this end, we computed correlation analyses for the Facebook usage motives, awareness of the filter bubble, and Big Five factors with the dependent variable active action against filter bubbles.

These correlations showed that the awareness of the filter bubble (see Table 1) and the motives for using Facebook (see Table 2) are related to whether a person indicates that they consciously take action against the filter bubble, whereas we found no relation between the Big Five personality traits and generally taking active action against filter bubbles (\(p_{s}>.05\)). Looking at the awareness of filter bubbles, people who have already heard of the filter bubble and people who evaluate filter bubbles as a problem are more likely to take active action against filter bubbles (see Table 1). Regarding Facebook usage motives, individuals who use Facebook for professional purposes, to inform others about political/social topics, or to express their opinion are more likely to take active action against filter bubbles (see Table 2).

After the described correlation analyses, we computed a stepwise linear regression with the general willingness to take active action as dependent variable and the five variables that showed a significant relationship (see Tables 1 and 2). With this analysis we wanted to examine on which variables the general willingness to take active action against filter bubbles depends.

As Table 3 shows, the multiple linear regression yielded three models. All three models contain the variable already heard of filter bubbles, the second model additionally contains the variable use Facebook to express opinion, and the third model additionally contains the variable the filter bubble is a problem. Since the third model with \(R^2=.23\) explains the largest part of the variance, we consider this one for further interpretation. Thus, individuals who have already heard of filter bubbles, rate them as a problem, and use Facebook to express their opinion are more likely to take active action against filter bubbles. Because this model still only explains 23% of the variance, there are further aspects that determine whether someone takes active action against filter bubbles. Nevertheless, the named variables favour it. Since all three variables in the model show similarly high beta coefficients, they influence the dependent variable to a similar extent.
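The statement that a model explains a certain share of the variance refers to the coefficient of determination, \(R^2 = 1 - SS_{res}/SS_{tot}\). The following minimal sketch illustrates this quantity for the simplest case of a single predictor. It is not the stepwise procedure or the data used in this study; the variable names and values are hypothetical.

```python
def fit_simple_ols(x, y):
    """Least-squares fit of y = intercept + slope * x for one predictor."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


def r_squared(x, y, intercept, slope):
    """Share of the variance in y explained by the fitted line."""
    mean_y = sum(y) / len(y)
    ss_res = sum((b - (intercept + slope * a)) ** 2 for a, b in zip(x, y))
    ss_tot = sum((b - mean_y) ** 2 for b in y)
    return 1 - ss_res / ss_tot


# Hypothetical six-point Likert answers of six respondents.
awareness = [1, 2, 3, 4, 5, 6]  # e.g. agreement with an awareness item
action = [1, 1, 2, 3, 3, 5]     # e.g. agreement with taking active action

intercept, slope = fit_simple_ols(awareness, action)
r2 = r_squared(awareness, action, intercept, slope)
print(round(r2, 2))
```

An \(R^2\) of .23, as in the third model above, would mean that the residual sum of squares is still 77% of the total sum of squares, which is why further, unmeasured factors must play a role.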

So far, we looked at the general willingness to take active action against filter bubbles. Below we look at certain avoidance strategies against filter bubbles.

Of the given prevention strategies against filter bubbles, participants seem to delete cookies and browser history most frequently (69%, see Fig. 5), whereas only few use the Explore-button (14%) or click on different pages to force diversity (18%).

Looking in more detail at the relationship between Facebook usage motives and the avoidance strategies, we see that some reasons to use Facebook correlate significantly with some avoidance strategies (see Table 4). For example, people who use Facebook for professional purposes click and like different pages to force diversity.

In addition, we found that individuals who evaluate filter bubbles as a problem like and click on more different pages to force diversity (\(r(140)=0.27, p=.001\)). We did not find further correlations between the awareness of filter bubbles and avoidance strategies against filter bubbles.
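A reported value such as \(r(140)\) is conventionally read as a correlation coefficient with 140 degrees of freedom, that is \(n - 2\) for \(n = 142\) complete pairs of answers. The following minimal sketch shows how such a coefficient and its degrees of freedom can be obtained. It assumes Pearson’s product-moment correlation, which the text does not state explicitly, and it uses hypothetical answers rather than the survey data.

```python
from math import sqrt


def pearson_r(x, y):
    """Return Pearson's r and its degrees of freedom (n - 2)."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two equally long samples with at least 3 pairs")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy), n - 2


# Hypothetical six-point Likert answers of six respondents.
problem_rating = [1, 2, 3, 4, 5, 6]     # "filter bubbles are a problem"
diversity_clicks = [1, 1, 2, 3, 3, 5]   # "I like and click different pages"

r, df = pearson_r(problem_rating, diversity_clicks)
print(f"r({df}) = {r:.2f}")
```

Because Likert answers are ordinal, a rank-based coefficient would be a common alternative; the sketch only illustrates the notation used in the results.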

Furthermore, our study showed some correlations between the Big Five factors and avoidance strategies (see Table 5).

5 Discussion

Our results revealed that Facebook users know for the most part that filter bubbles exist, but still do little against them. This makes it clear that in today’s digital age it is important not only to inform users about the existence of filter bubbles, but also about various possible strategies for dealing with them.

Among the queried avoidance strategies, the participants favoured deleting the browser history or unsubscribing from friends/pages. Both variants require less active effort than the other proposed strategies. For example, it is conceivable that individuals would simply unsubscribe from friends when they notice that they frequently publish content that is perceived as uninteresting or perhaps even inappropriate. In contrast, liking a greater variety of pages would require more personal effort.

However, if individuals do claim to take action against filter bubbles, this is favoured by three aspects in particular. Not surprisingly, our study showed that having heard of filter bubbles affects whether someone claims to be active against them. It also seems plausible that people who consider filter bubbles a problem would be more likely to become active. More interestingly, people who use Facebook to spread their opinions are more likely to say they are taking action against filter bubbles. This could be because they want to use the platform to spread their opinions and reach as many people as possible, not just the people in their own filter bubble. Perhaps they see the advantage of user-generated content and that they themselves can contribute something to opinion-forming. This would certainly be desirable for strengthening democracy and unrestricted opinion-forming. However, those who say they want to spread their opinions on Facebook form a very small group in our sample.

Although many participants consider filter bubbles to be problematic and almost no one denies their existence, participants did not report much active action against filter bubbles. It is possible that some people do not feel affected or do not know how to fight against filter bubbles. In addition, some people may not perceive the effects of filter bubbles as particularly negative and therefore do not see any need for action.

While some scientists, like Pariser (2011), suggest that users should take action against filter bubbles by, for example, liking different pages, deleting web history, deleting cookies and so on, other scientists (see e.g. [11]) believe that algorithms are not only negative, but can also provide users with a broader view of the world. Perhaps, therefore, it is not necessary to take action against filter bubbles, but rather to try to teach the users of social networks how to deal with personalization algorithms and possible filter bubbles. Thus, the positive aspects of recommender systems could increasingly come to the fore. Users could include algorithms in their searches and benefit from them, but at the same time be aware that the information received is pre-filtered.

Online platforms such as Facebook often argue that they are not news platforms [11], but according to some studies such platforms are being used more and more to spread different opinions. Mitchell et al. found in their study that almost half (48%) of the 10,000 panelists consumed news about politics and government on Facebook at least once a week [43]. Further studies showed that the use of social media for political news distribution has increased even more and continues to increase [11]. Facebook must therefore also be seen as a medium that contributes to opinion-forming. This means that it is important for people to learn how to deal with opinion contributions on social networks and how to deal with filter bubbles or use algorithms in a targeted way.

5.1 Limitations and Future Work

We used convenience sampling for our study, so the results must be considered against the background that the sample is very young and well educated. It can therefore be assumed that the knowledge about filter bubbles in our sample is higher than in the total population. The results should therefore be reviewed against a more heterogeneous sample. Nevertheless, pure knowledge about filter bubbles does not seem to lead people to take action against them and thereby to solve the arising problems of misinformation and opinion manipulation in social networks.

In addition, we asked the participants if they would take action against filter bubbles. The results therefore show whether the participants could imagine using avoidance strategies in principle, but not whether they would actually do so. In future studies, we will therefore try to find a better way to see whether any avoidance strategies are actually used.

With regard to the question of whether filter bubbles are considered problematic, it is also conceivable that the participants largely agreed because of social desirability bias, since we asked them about it directly. We therefore cannot be sure whether they really regard filter bubbles as a problem. Although many participants indicated that filter bubbles were problematic for them, far fewer indicated that they would use avoidance strategies. In the future, it would therefore also be interesting to find out why people do not want to take action against filter bubbles. It is conceivable that they see no reason for this, either because they are not so critical about filter bubbles or because they believe that they themselves are not affected. It is also possible that the strategies are too complex or time-consuming.

6 Conclusion

With this paper, we showed that most people, at least in our sample, have already heard about the phenomenon of the filter bubble. Nevertheless, the readiness to use avoidance strategies is low. In addition, we showed that the reasons for using Facebook and the awareness of the filter bubble make individuals more likely to take action against filter bubbles, whereas personality (here the Big Five) had no predictive effect.

References

Van Aelst, P., et al.: Political communication in a high-choice media environment: a challenge for democracy? Ann. Int. Commun. Assoc. 41(1), 3–27 (2017)

Allcott, H., Gentzkow, M.: Social media and fake news in the 2016 election. J. Econ. Perspect. 31(2), 211–236 (2017)

Azucar, D., Marengo, D., Settanni, M.: Predicting the big 5 personality traits from digital footprints on social media: a meta-analysis. Pers. Individ. Differ. 124, 150–159 (2018)

Bakshy, E., Messing, S., Adamic, L.A.: Exposure to ideologically diverse news and opinion on Facebook. Science 348(6239), 1130–1132 (2015)

Bakshy, E., et al.: The role of social networks in information diffusion. In: Proceedings of the 21st International Conference on World Wide Web, pp. 519–528 (2012)

Barberá, P., et al.: Tweeting from left to right: is online political communication more than an echo chamber? Psychol. Sci. 26(10), 1531–1542 (2015)

Beam, M.A.: Automating the news: how personalized news recommender system design choices impact news reception. Commun. Res. 41(8), 1019–1041 (2014)

Beam, M.A., et al.: Facebook news and (de)polarization: reinforcing spirals in the 2016 US election. Inf. Commun. Soc. 21(7), 940–958 (2018)

Bellogin, A., Cantador, I., Castells, P.: A comparative study of heterogeneous item recommendations in social systems. Inf. Sci. 221, 142–169 (2013)

Zuiderveen Borgesius, F.G., et al.: Should we worry about filter bubbles? https://policyreview.info/articles/analysis/should-we-worry-about-filter-bubbles. Accessed 26 Feb 2019

Bozdag, E., van den Hoven, J.: Breaking the filter bubble: democracy and design. Ethics Inf. Technol. 17(4), 249–265 (2015)

Bright, J.: Explaining the emergence of political fragmentation on social media: the role of ideology and extremism. J. Comput. Mediat. Commun. 23(1), 17–33 (2018)

Calero Valdez, A., Burbach, L., Ziefle, M.: Political opinions of us and them and the influence of digital media usage. In: Meiselwitz, G. (ed.) SCSM 2018. LNCS, vol. 10913, pp. 189–202. Springer, Cham (2018). https://doi.org/10.1007/978-3-319-91521-0_15

Colleoni, E., Rozza, A., Arvidsson, A.: Echo chamber or public sphere? Predicting political orientation and measuring political homophily in twitter using big data. J. Commun. 64(2), 317–332 (2014)

Conover, M.D., et al.: Political polarization on Twitter. In: Fifth International Conference on Weblogs and Social media (ICWSM), pp. 89–96 (2011)

Cumming, G.: The new statistics: why and how. Psychol. Sci. 25(1), 7–29 (2014)

De Raad, B.: The Big Five Personality Factors: The Psycholexical Approach to Personality. Hogrefe & Huber Publishers, Göttingen (2000)

DiFranzo, D.J., Gloria-Garcia, K.: Filter bubbles and fake news. XRDS: Crossroads ACM Mag. Stud. 23(3), 32–35 (2017)

Dubois, E., Blank, G.: The echo chamber is overstated: the moderating effect of political interest and diverse media. Inf. Commun. Soc. 21(5), 729–745 (2018)

Dylko, I., et al.: The dark side of technology: an experimental investigation of the influence of customizability technology on online political selective exposure. Comput. Hum. Behav. 73, 181–190 (2017)

Efron, M.: Using cocitation information to estimate political orientation in web documents. Knowl. Inf. Syst. 9(4), 492–511 (2006)

Epstein, R., Robertson, R.E.: The search engine manipulation effect (SEME) and its possible impact on the outcomes of elections. Proc. Nat. Acad. Sci. 112(33), E4512–E4521 (2015)

Eslami, M., et al.: I always assumed that i wasn’t really that close to [her]: reasoning about invisible algorithms in news feeds. In: Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, pp. 153–162 (2015)

Flaxman, S.R., Goel, S., Rao, J.M.: Filter bubbles, echo chambers, and online news consumption. Public Opin. Q. 80(S1), 298–320 (2016)

Fletcher, R., Nielsen, R.K.: Are news audiences increasingly fragmented? A cross-national comparative analysis of cross-platform news audience fragmentation and duplication. J. Commun. 67(4), 476–498 (2017)

Garrett, R.K.: Echo chambers online? Politically motivated selective exposure among internet news users. J. Comput.-Mediat. Commun. 14(2), 265–285 (2009)

Goel, S., Mason, W., Watts, D.J.: Real and perceived attitude agreement in social networks. J. Pers. Soc. Psychol. 99(4), 611–621 (2010)

Goldberg, L.R.: An alternative “description of personality”: the big-five factor structure. J. Pers. Soc. Psychol. 59, 1216–1229 (1990)

Goldman, E.: Search engine bias and the demise of search engine utopianism. In: Spink, A., Zimmer, M. (eds.) Web Search: Multidisciplinary Perspectives. ISKM, vol. 14, pp. 121–133. Springer, Heidelberg (2008). https://doi.org/10.1007/978-3-540-75829-7_8

González, R.J.: Hacking the citizenry? Personality profiling, ‘big data’ and the election of Donald Trump. Anthropol. Today 33(3), 9–12 (2017)

Helberger, N., Karppinen, K., D’Acunto, L.: Exposure diversity as a design principle for recommender systems. Inf. Commun. Soc. 21(2), 191–207 (2018)

Hoang, V.T., et al.: Domain-specific queries and Web search personalization: some investigations. In: Proceedings of the 11th International Workshop on Automated Specification and Verification of Web Systems (2015)

Introna, L.D., Nissenbaum, H.: Shaping the web: why the politics of search engines matters. Inf. Soc. 16(3), 169–185 (2000)

John, O.P., Naumann, L.P., Soto, C.J.: Paradigm shift to the integrative big-five trait taxonomy: history, measurement, and conceptual issues. In: John, O.P., Robins, R.W., Pervin, L.A. (eds.) Handbook of Personality: Theory and Research, pp. 114–158. Guilford Press, New York (2008)

Kaplan, A.M., Haenlein, M.: Users of the world, unite! The challenges and opportunities of social media. Bus. Horiz. 53(1), 59–68 (2010)

Karlsen, R., et al.: Echo chamber and trench warfare dynamics in online debates. Eur. J. Commun. 32(3), 257–273 (2018)

Kobayashi, T., Ikeda, K.: Selective exposure in political web browsing: empirical verification of ‘cyber-balkanization’ in Japan and the USA. Inf. Commun. Soc. 12(6), 929–953 (2009)

Koene, A., et al.: Ethics of personalized information filtering. In: Tiropanis, T., Vakali, A., Sartori, L., Burnap, P. (eds.) INSCI 2015. LNCS, vol. 9089, pp. 123–132. Springer, Cham (2015). https://doi.org/10.1007/978-3-319-18609-2_10

Kosinski, M., Stillwell, D., Graepel, T.: Private traits and attributes are predictable from digital records of human behavior. Proc. Nat. Acad. Sci. 110(15), 5802–5805 (2013)

Leese, M.: The new profiling: algorithms, black boxes, and the failure of anti-discriminatory safeguards in the European Union. Secur. Dialogue 45(5), 494–511 (2014)

Macnish, K.: Unblinking eyes: the ethics of automating surveillance. Ethics Inf. Technol. 14(2), 151–167 (2012)

McCombs, M.E., Shaw, D.L.: The agenda-setting function of mass media. Public Opin. Q. 36(2), 41–46 (1972)

Mitchell, A., et al.: Political polarization and media habits (2014). http://www.journalism.org/2014/10/21/political-polarization-media-habits/

Munson, S.A., Lee, S.L., Resnick, P.: Encouraging reading of diverse political viewpoints with a browser widget. In: International Conference on Weblogs and Social Media (ICWSM) (2013)

Nagulendra, S., Vassileva, J.: Providing awareness, explanation and control of personalized filtering in a social networking site. Inf. Syst. Front. 18(1), 145–158 (2016)

Pariser, E.: The Filter Bubble: What the Internet Is Hiding from You. Penguin, London (2011)

Portugal, I., Alencar, P., Cowan, D.: The use of machine learning algorithms in recommender systems: a systematic review. Expert Syst. Appl. 97, 205–227 (2018)

Rader, E., Gray, R.: Understanding user beliefs about algorithmic curation in the Facebook news feed. In: Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, CHI 2015, pp. 173–182 (2015)

Rammstedt, B., et al.: A short scale for assessing the big five dimensions of personality: 10 item big five inventory (BFI-10). Methoden Daten Anal. 7(2), 233–249 (2013)

Resnick, P., et al.: Bursting your (filter) bubble: strategies for promoting diverse exposure. In: Proceedings of the Conference on Computer Supported Cooperative Work Companion, CSCW 2013, pp. 95–100 (2013)

Sandvig, C., et al.: Auditing algorithms: research methods for detecting discrimination on internet platforms (2018)

Stroud, N.J.: Media use and political predispositions: revisiting the concept of selective exposure. Polit. Behav. 30(3), 341–366 (2008)

Sunstein, C.: #Republic: Divided Democracy in the Age of Social Media. Princeton University Press, Princeton (2017)

Trilling, D., van Klingeren, M., Tsfati, Y.: Selective exposure, political polarization, and possible mediators: evidence from The Netherlands. Int. J. Public Opin. Res. 29(2), 189–213 (2016)

Yom-Tov, E., Dumais, S., Guo, Q.: Promoting civil discourse through search engine diversity. Soc. Sci. Comput. Rev. 32(2), 145–154 (2014)

Acknowledgements

The authors would like to thank Nils Plettenberg and Johannes Nakayama for their help in improving this article. We would like to thank Nora Ehrhardt and Marion Wießmann for their support in this study. This research was supported by the Digital Society research program funded by the Ministry of Culture and Science of the German State of North Rhine-Westphalia.

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Burbach, L., Halbach, P., Ziefle, M., Calero Valdez, A. (2019). Bubble Trouble: Strategies Against Filter Bubbles in Online Social Networks. In: Duffy, V. (ed.) Digital Human Modeling and Applications in Health, Safety, Ergonomics and Risk Management. Healthcare Applications. HCII 2019. Lecture Notes in Computer Science, vol 11582. Springer, Cham. https://doi.org/10.1007/978-3-030-22219-2_33

DOI: https://doi.org/10.1007/978-3-030-22219-2_33

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-22218-5

Online ISBN: 978-3-030-22219-2

eBook Packages: Computer Science (R0)