Abstract

This paper presents a comparative analysis of algorithms belonging to manifold learning and linear dimensionality reduction. Firstly, classical texture image descriptors, namely Gray-Level Co-occurrence Matrix features, Haralick features, Histogram of Oriented Gradients features and Local Binary Patterns are combined to characterize and discriminate textures. For patches extracted from several texture images, a concatenation of the image descriptors is performed. Using four algorithms to wit Principal Component Analysis (PCA), Locally Linear Embedding (LLE), Isometric Feature Mapping (ISOMAP) and Laplacian Eigenmaps (Lap. Eig.), dimensionality reduction is achieved. The resulting learned features are then used to train four different classifiers: k-nearest neighbors, naive Bayes, decision tree and multilayer perceptron. Finally, the non-parametric statistical hypothesis test, Wilcoxon signed-rank test, is used to figure out whether or not manifold learning algorithms perform better than PCA. Computational experiments were conducted using the Outex and Salzburg datasets and the obtained results show that among twelve comparisons that were carried out, PCA presented better results than ISOMAP, LLE and Lap. Eig. in three comparisons. The remainder nine comparisons did not presented significant differences, indicating that in the presence of huge collections of texture images (bigger databases) the combination of image feature descriptors or patches extracted directly from raw image data and manifold learning techniques is potentially able to improve texture classification.

This study was financed in part by the Coordenação de Aperfeiçoamento de Pessoal de Nível Superior - Brasil (CAPES) - Finance Code 001.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

1 Introduction

Texture analysis plays an important role in the computer vision area. It is responsible for extracting meaningful information from texture images. Texture is considered as an essential attribute, among all characteristics present in an image, which can be used as a rich source of information in many application areas, such as object recognition, remote sensing, content-based image retrieval and so on.

In the computational context, texture analysis can be defined as a set of techniques capable of processing texture information in every perspective or in certain regions of interest (ROIs) of an image. According to Materka, Strzelecki et al. [12], this set is basically composed of four major categories, namely: feature extraction, texture classification, texture segmentation and shape reconstruction from texture. Feature extraction is the first stage of texture analysis. It is responsible for translating the correlation between visual patterns contained in an image into quantitative values. The methods responsible of performing this function are known as texture descriptors. The purpose of texture classification is to associate textured samples with two or more classes according to a given criteria of similarity [8, 12, 21]. Texture segmentation, unlike classification, focuses on the delimitation of regions based on texture information. In this case there is no need for prior knowledge of the characteristics of each surface meaning that unsupervised grouping techniques can be used [12, 21, 22]. Shape reconstruction through textures is directly linked to the recomposition of three-dimensional surfaces by means of texture information [12, 13, 21, 22]. This work is confined mainly to texture classification which is composed of two main processes, regarding feature extraction and classification of patterns.

Many methods of texture analysis have been developed over the years, each one exploring a novel approach to extract the image’s texture information. For instance, we have classical methods based on second-order statistics (such as Co-occurrence Matrices (GLCM)), Haralick, Histogram of Oriented Gradients (HoG) and Local Binary Patterns (LBP). Over many years, these four descriptors have gained a large amount of interest in many computer vision researching groups. The features extracted using the aforementioned descriptors have proven to be discriminative in classifying texture patterns.

By using those four descriptors to choose a subset of features that really represent a texture image separately, some texture information can be lost which may result in decreased recognition performance. According to this paper, in order to compensate for lost texture information and to fill the gaps left by each descriptor separately, the features extracted by those four descriptors are combined. Feature vector representing the patches are then taken through dimensionality reduction methods which give an improvement in recognition performance. On the other hand, we also propose to consider as feature vector a concatenation of 32 \(\times \) 32 patches extracted directly from an image raw data.

Finally, we use this effective combination of the features of those four descriptors and also those ones extracted directly from an image raw data (after concatenating them and applying dimensionality reduction algorithms) with four classifiers (KNN [10], Naive Bayes [23], Decision Trees [14] and Multilayer Perceptron [5]) on Outex [19] and Salzburg [11] datasets. Finally, a comparative analysis is achieved using the Wilcoxon test [24] to figure out if nonlinear dimensionality reduction methods are better than the linear ones using the two proposed approaches.

The rest of this paper is organized as follows. We give a brief overview of PCA and manifold learning methods in Sects. 2 and 3 respectively. Section 4 is dedicated to present our proposal. Computational experiments and results for this work are presented in Sects. 5 and 6 respectively. Finally, we conclude the paper’s work in Sect. 7.

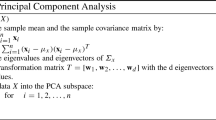

2 Principal Component Analysis

High-dimensional datasets (i.e datasets with many features) may present difficulty in visualization process, may require high computational performance or may contain noise. To overcome these issues, there exist methods related with linear dimensionality reduction with the purpose of shrinking datasets by transformation and/or selection of features, while minimizing information loss. In this section, we will focus particularly on PCA [9].

It is common that some datasets follow certain distributions that are majorly embedded in a few orthogonal components, where a component is the result of a linear combination of the original features. PCA is a statistical technique that aims at transforming a n-dimensional dataset X to a m-dimensional dataset Y, where, obviously, \(m \le n\). Moreover, the dimensions of Y are captured in the direction in which the variance of samples in X is maximum and should necessarily be orthogonal components, commonly known as principal components [9]. In Fig. 1, the orange and purple arrows are the principal components of the synthetic dataset K, with 1000 samples and 2 features (2 principal components). In addition, the Algorithm 1 summarizes in a few simple steps the idea behind PCA method.

3 Manifold Learning

Manifold learning is a popular approach to nonlinear dimensionality reduction. Algorithms for this task are based on the idea that the dimensionality of many datasets is only artificially high; though each data point consists of perhaps thousands of features, it may be described as a function of only a few underlying parameters. That is, the data points are actually samples from a low-dimensional manifold that is embedded in a high-dimensional space. Manifold learning algorithms attempt to uncover these parameters in order to find a low-dimensional representation of the data. Manifold learning algorithms include methods such as ISOMAP, LLE, Lap. Eig. that we are going to present briefly in this section.

3.1 ISOMAP

Firstly, ISOMAP has been suggested by Tenenbaum, de Silva and Langfor [20] and was one of the first algorithms introduced for manifold learning. It can be thought of as an extension of Multidimensional Scaling (or simply MDS). ISOMAP algorithm can be summarized in three big steps:

-

1.

Construct the weighted graph G from the distances pairwise for all points in the input and find the graph \(G'\) by applying the nearest-neighbor algorithm on the graph G.

-

2.

Compute the shortest path graph \(G''\) between all pairs of nodes from graph \(G'\). This might be done by the all-pairs Dijkstra’s [6] or by the Floyd Warshall algorithm [1].

-

3.

Use \(G''\) to construct the p-dimensional embedding using the MDS algorithm. In other words, the MDS method can now be used to construct a representation in sub-spaces of the \(R^n\).

3.1.1 Multidimensional Scaling (MDS)

Isomap algorithm finds points whose Euclidean distances equal the geodesic distances that were calculated in steps 1 and 2. In fact, such points exist and are unique, since the manifold is isometrically embedded. Multidimensional Scaling [7] is a classical technique that may be used to find such points. The main goal of the MDS algorithm can be formalized as follows: Let \(\left\{ {x}_i, y_i\right\} \) be the input dataset for \(i=1, 2, . .., n\) where \({x}_i\) denotes the feature vector that represents the ith sample and \(y_i\) denotes the class or category to which the vector belongs, being generally an integer greater than zero. Given a Euclidean pairwise distances matrix, retrieve which are the coordinates of \({x}_r \in R^k, r=1, 2, . .., n\), where k is defined by the user (plan, space 3D, etc.). A distance matrix is given by \(D=\left\{ d^2_{rs}\right\} , r, s=1, 2, . .., n\) where \(d^2_{rs}\) is a distance between \({x}_r\) and \({x}_s\) vectors. Its expression is given by

Now, let B be the matrix of inner products defined as \(B=\left\{ b_{rs}\right\} \), where \(b_{rs} = {x}^T_r{x}_s\). Based on these configurations, the MDS method seeks to address two main problems:

-

(i)

From the distance matrix D find a matrix B.

-

(ii)

From B retrieve the coordinates \({x}_r \in R^p\) where p is the rank of B.

To address the first problem, MDS method assume that points are mean-centered—i.e. \(\sum _{r=1}^{n} {x}_r = 0\). After some algebraic operations on D and using the initial form of matrix B, the final expression of matrix B is as \(B = HAH\) such that \(A = -\frac{1}{2}D\) and \(H = I -\frac{1}{n}{1}{1}^T\).

Finally, for retrieving the coordinates of the points, some properties of matrix B can be used. In fact B has three important properties, namely B is symmetric, the rank of B is p (this corresponds to the maximal number of linearly independent rows/columns of B that generates a base in \(R^p\)) and B is positive semidefinite. This implies that the matrix B has p non-negative eigenvalues and \(n - p\) eigenvalues are null. Thus, by the spectral decomposition of B one can write \(B = \vee ^{'} \wedge ^{'} \vee ^{'T}\) where \({\wedge ^{'}} = diag(\lambda _1, \lambda _2, ..., \lambda _n)\) is a diagonal matrix of eigenvalues of B and \({\vee ^{'}}\) with \(n\times p\) dimension is defined as

However, as B can be written in these following format \(B = X_{n\times p} X_{p\times n}^T\), thus, the observed \(X_{n\times p}\) can be expressed as \(X_{n\times p} = {\vee ^{'}}_{n\times p}\wedge _{p\times p}^{'\frac{1}{2}}\) where \({\wedge ^{'\frac{1}{2}}} = diag(\sqrt{\lambda _1}, \sqrt{\lambda _2}, ..., \sqrt{\lambda _p})\).

Each row of \( X_{n\times p}\) will have the coordinate of a vector \({x}_i \in R^p\), where p is a parameter that controls the number of dimensions of the output space: if we want a 2D plot, \(p = 2\), in case of a 3D plot, \(p = 3\). The Algorithm 2 summarizes the process of MDS.

It should be noted that the ISOMAP algorithm is totally unsupervised, in the sense that it does not use any information about class distribution. It attempts to find a more compact representation of the original data only by preserving the distances.

3.2 LLE

LLE has been suggested by Saul and Roweis [16] and was introduced at about the same time as ISOMAP, but it is based on different idea than that of ISOMAP. The idea comes from visualizing a manifold as a collection of overlapping coordinate patches. If the neighborhood sizes are small and the manifold is sufficiently smooth, then these patches will be approximately linear. Moreover, the chart from the manifold to \(R^d\) (d is the dimensionality of the manifold that the input is assumed to lie on and, accordingly, the dimensionality of the output i.e. \( y_i \in R^d\)) will be roughly linear on these small patches. The idea is to identify these linear patches, characterize the geometry of them, and find a mapping to \(R^d\) that preserves this local geometry and is nearly linear. It is assumed that these local patches will overlap with one another so that the local reconstructions will combine into a global one [3]. LLE algorithm can be summarized in two big steps:

-

1.

Model the manifold as a collection of linear patches and attempts to characterize the geometry of these linear patches.

-

2.

Find a configuration in d-dimensions (the dimensionality of the parameter space) whose local geometry is characterized well by W (\(W_i\) is a characterization of the local geometry around \(x_i\) of the manifold). Unlike ISOMAP, d must be known a priori or estimated.

3.3 Laplacian Eigenmaps

The idea behind the Lap. Eig. [2] is to use spectral graph theory in order to capture local information about the manifold. Given a graph and the corresponding matrix of edge weights, W, the Laplacian graph is defined as \(L = D - W\) in which D represents the diagonal matrix with elements \(D_{ii} = \sum _j W_{ij}\).

Here, a matrix W is used as a local similarity in order to measure the degree to which points are near to one another. Thus \(W_{ij}\) = \(e^{-\Vert {x}_i - {x}_j\Vert ^2/2\sigma ^2}\) if \(x_j\) is one of the k-nearest neighbors of \(x_i\) and equals 0 otherwise. The main goal is to use W in order to find output points \(y_1,..., y_n \in R^d\) that are the low-dimensional analogs of input points \(x_1,..., x_n \in R^D\) where \(d < D\). Lap. Eig. algorithm can be summarized in two big steps:

-

1.

Given \(x_1,..., x_n \in R^D\), d, k, \(\sigma \) as input, define a local similarity matrix \(W_{ij}\).

-

2.

Let U be defined as the matrix whose columns are the eigenvectors of \(L\mathbf y = \lambda D\mathbf y \) associated to the non-zero eigenvalues. Here L represents Lagrange multipliers. Y is then given by the \(n \times d\) matrix whose columns are these d eigenvectors and whose rows are the embedded points (\(y_1,..., y_n \in R^d\)).

4 Proposed Method

This section presents a dimensionality reduction based approach for texture classification. Our method is firstly inspired by the observation of how dimensionality reduction techniques can effectively learn relevant information from the raw data, and secondly, by a wide variety of characteristics that present the feature vectors generated by each one of the classical descriptors adopted in this work. Based on this, we propose a straightforward solution which concatenates all these feature vectors (referred as first approach) and also those feature vectors constructed from patches extracted directly from the raw data (referred as second approach) and makes a feature selection through PCA and manifold learning techniques to choose the most effective features for class separability.

The idea consists in creating an approach for texture classification strongly based on the application of dimensionality reduction techniques. According to the block diagram of the proposed method (refer to Fig. 2), the two stages in Fig. 2(a) are part of the first approach and consist in extracting HoG, GLCM, Haralick and LBP features followed by their concatenation. We concatenated these four feature vectors aiming at constructing one big feature vector to serve as an input for dimensionality reduction using PCA and manifold learning algorithms. The stage in Fig. 2(b) is part of the second approach and consists in extracting 32 \(\times \) 32 patches from an image which will then be concatenated to form a feature vector of the underlying image. In the next stage of the diagram flow, feature selection is achieved through PCA, ISOMAP, LLE and Lap. Eig. algorithms during which thresholds are used to maintain only the most effective features among all belonging to the concatenated feature vector. From this process, we have as an output a discriminative and reduced feature vector that contains only the best elements (the most relevant informations) of the input descriptors. Then, the selected feature vector is taken through the classification process using KNN, Naive Bayes, Decision Trees and ML Perceptron. Finally, a comparative analysis is achieved using the Wilcoxon test to figure out if nonlinear dimensionality reduction methods are better than the linear ones using the two proposed approaches.

5 Experimental Setup

5.1 Image Datasets

Two texture image datasets were used in the experiments:

-

Salzburg [11] contains a collection of 476 color texture images that have been captured around Salzburg (Austria) and, herein, depicted in Fig. 4. For each texture class (from total of 10 classes used in this paper), there were \(128\times 128\) source images, of which 80% was used for training the classifier, while the other 20% was used for testing.

-

Outex [19] has a total of 960 (\(24\times 20\times 2\)) image instances of illuminant “inca”. The training set consists of 480 (\(24\times 20\)) images and the other 480 (\(24\times 20\)) images are part of the test set. The test suite for Outex used in this work is Outex_TC_00011-r. Some of the texture instances for each class are depicted in Fig. 5.

5.2 Description of Experiments

In Fig. 2 we present the flow diagram of the proposed method, including details of the pipeline, and the methods used in the experiments. Note that our contribution is to perform a statistical comparative analysis to show which approach is better than other between PCA and manifold techniques and how these approaches can be used to reduce the dimensionality of the feature space in texture classification task.

In this paper, we perform the following two types of experiments:

-

Experiments using the full feature vector created by concatenating all descriptors, followed by classification with feature selection;

-

Experiments using the feature vector created by extracting 32 \(\varvec{\times }\) 32 patches, followed by classification with feature selection.

The main topic under investigation is the effect of different methods in reducing dimensionality for concatenation of descriptors and 32 \(\times \) 32 image patches-based feature vector in texture classification. In our experiments we analyze if PCA reduction produces better results than manifold algorithms for both feature extraction approaches adopted in this paper. It is for this reason that we used the Wilcoxon test to find which method has significant difference. A significance level of \(\alpha = 0.05\) was used in all tests. In this case the comparison is pair-wise. In addition, all experiments were performed with a stratified \(k-fold\) cross validation setting. The accuracy proved to be a suitable measure to evaluate the classification performance, since all datasets are balanced and also the sampling for the cross validation is stratified [18].

Accuracies of PCA, ISOMAP, LLE and Lap. Eig. for the Salzburg dataset using the 32 \(\times \) 32 image patch-based feature vector (a), Salzburg dataset using the concatenated feature vector (b) and Outex dataset using the concatenated feature vector (c). Boxplots in gray correspond to significances when compared to the accuracy of other method with p-value < 0.05. Here, the name Salzburg-2 (a) refers to the second approach applied to Salzburg dataset. Analogously, the names Salzburg-1 (b) and Outex-1 (c) refer to the first approach applied to Salzburg and Outex datasets, respectively.

6 Results and Discussion

6.1 Full Feature Space Experiments

The accuracies for the first set of experiments are shown in Table 1 and Table 3. They present a general perspective of the results. For each dataset and dimensionality reduction method among PCA, ISOMAP, LLE and Lap. Eig., we displayed ten accuracies, corresponding to the dimensionality reduction using 1, 2, 3, 4, 5, 6, 7, 8, 9 and 10 features (attributes).

It is evident that, in general, the methods used to reduce the dimensionality of the full feature space have significant impact on classification accuracy. In this way, the choice of d (target feature vector size) can improve or hamper the discriminative capacity of texture descriptors. While the brute force search for the best value of d seems a major drawback of many works in feature selection using dimensionality reduction techniques, in this paper, we adopted an intrinsic dimensionality estimation approach to find the intrinsic value of d. This approach computes the accumulated percentual of variance of the \(i^{th}\) component from a list of n components (dimensions). Then the intrinsic dimensionality of the reduced space will be the first component with accumulated variance ratio greater than 95% for ISOMAP algorithm, for instance. One of the important observations from this result is that the reduction from the computed d to a higher number of dimensions often maintains the accuracies and sometimes can even slightly increase them. Figure 6 shows the observed value of d, varying from 0 to 100, based on the percentage of variance retained in the attributes. It also shows that the intrinsic dimensionalities of Salzburg and Outex datasets for ISOMAP algorithm are 10 and 24, respectively.

Since the results showed in Tables 1 and 3 are only an overall view, we performed a deeper analysis using the following pairs of methods: PCA with ISOMAP; PCA with LLE and PCA with Lap. Eig. Also, because of the comparison must be carried out in pairs and under no assumption about the distribution of the data, the Wilcoxon’s test was performed to compare the accuracies obtained by the reduction methods showed in Tables 1 and 3. A significance level of \(\alpha = 0.05\) was used in all tests. To observe the results in more detail, boxplots for Salzburg and Outex datasets using PCA with LLE and PCA with Lap. Eig are shown in Figs. 3 (b) and (c), respectively. The boxplots shaded in gray correspond to data with significant difference when compared to the accuracy of the other method, obtaining p-value < 0.05.

We obtained significantly better results for PCA when compared with the LLE method for Salzburg (see Fig. 3 (b)) and with the Lap. Eig method for Outex (see Fig. 3 (c)), according to the Wilcoxon’s statistical test. On a more general note, it can be observed that the results were not significantly worse, except for the value of d equals to 1 for all the methods in the two datasets.

6.2 32 \(\times \) 32 Patch-Based Feature Space Experiments

An overview of the results for the second set of experiments is shown in Tables 2 and 4. For each data set and dimensionality reduction method, we showed eleven accuracies, corresponding to the dimensionality reduction with the value of d equals to 5, 10, 15, 20, 25, 30, 35, 40, 45, 50 and 100. Here, the idea is to test if the extraction of patches directly from a texture image raw data can improve or maintains the results, and also to compare the use of PCA and manifold learning methods in this way.

The only result that significantly presented difference among the pair-wise comparisons was between PCA and ISOMAP for Salzburg data set as shown in Fig. 3 (a). PCA significantly presented better accuracies than ISOMAP for salzburg data set, according to the Wilcoxon’s statistical test for p-value < 0.05. More importantly, on a more general perspective (i.e., for all accuracies), the results were not significantly worse.

7 Conclusions

This research presented an important contribution regarding the use of dimensionality reduction techniques for texture classification task. We stated that an original feature vector of D dimensions could be reduced to only d features (where \(d < D\)) that can better represent the data, achieving similar or higher accuracies. To produce an higher feature vector size to be used as input of the dimensionality reduction methods, we proposed two approaches: one using the full feature vector created by concatenating HoG, LBP, GLCM and Haralick descriptors; and other using the feature vector created by extracting 32 \(\times \) 32 patches of each texture image. In this paper we especially carried out investigations through Wilcoxon’s statistical test with regard to figure out whether or not manifold learning algorithms perform better than PCA. In this case for each data set, we performed six pair-wise comparisons, namely PCA with ISOMAP; PCA with LLE and PCA with Lap. Eig such as each comparison was done for both approaches. Thus, we had twelve pair-wise comparisons in total for all experiments.

In the first place, it is important to note that the use of the two approaches (full feature vector and 32 \(\times \) 32 patches-based feature vector) followed by feature selection using both PCA and manifold learning produced, on average, superior results than the individuals descriptors for texture classification task. In the second one, among all the pair-wise comparisons involved in the experiments, only three results (from a set of twelve ones) were significantly differents, according to the boxplots in Fig. 3. These results clearly indicated that PCA overperformed the ISOMAP in Salzburg using the second approach, also overperformed the LLE in Salzburg using the first approach, and finally, overperformed the Lap. Eig in Outex using the first approach.

Since the results for the rest of nine comparisons did not significantly show the difference between the accuracies of PCA and each one of the manifold learning method, we believe that increasing the dimension of the feature vectors of both approaches by respectively adding more descriptors and by extracting 8 \(\times \) 8 patches from texture image, may promote better performance in favour of manifold learning methods. The reason for this is that manifold learning methods are more efficient in dimensionality reduction than PCA in the presence of huge collections of texture images (bigger databases) and especially for texture classification task [4, 25].

Future studies can take into account new approaches for estimating the intrinsic dimensionality value of d for each manifold learning method, can also explore different manifold learning methods and variations of the supervised classifier’s algorithms in order to produce better accuracies, as well as investigate the sub-spaces generated by such methods in order to understand better their discriminative power.

References

Chumbley, J.Y.A., Moore, K., et al.: Floyd-warshall algorithm. https://brilliant.org/wiki/floyd-warshall-algorithm/. Accessed Nov 2018

Belkin, M., Niyogi, P.: Laplacian eigenmaps and spectral techniques for embedding and clustering. In: Dietterich, T.G., Becker, S., Ghahramani, Z. (eds.) Advances in Neural Information Processing Systems, vol. 14, pp. 585–591. MIT Press (2002)

Cayton, L.: Algorithms for manifold learning (2005)

Chen, J., et al.: Updating initial labels from spectral graph by manifold regularization for saliency detection. Neurocomputing 266, 79–90 (2017)

Cireşan, D.C., Meier, U., Gambardella, L.M., Schmidhuber, J.: Deep big multilayer perceptrons for digit recognition. In: Montavon, G., Orr, G.B., Müller, K.-R. (eds.) Neural Networks: Tricks of the Trade. LNCS, vol. 7700, pp. 581–598. Springer, Heidelberg (2012). https://doi.org/10.1007/978-3-642-35289-8_31

Rivest, R.L., Stein, C., Cormen, T.H., Leiserson, C.E.: Introduction to Algorithms. The MIT Press, Cambridge (2009)

Cox, T., Cox, M.: Multidimensional scaling. In: Chen, C.-H., Härdle, W.K., Unwin, A. (eds.) Handbook of Data Visualization. Springer Handbooks of Computational Statistics. Springer, Heidelberg (2008). https://doi.org/10.1007/978-3-540-33037-0_14

Dash, S., Chiranjeevi, K., Jena, U.R., Trinadh, A.: Comparative study of image texture classification techniques, pp. 1–6, January 2015

Dunteman, G.H.: Principal Components Analysis. Sage Publications, Newbury Park (1989)

Guo, G., Wang, H., Bell, D., Bi, Y., Greer, K.: KNN model-based approach in classification. In: Meersman, R., Tari, Z., Schmidt, D.C. (eds.) OTM 2003. LNCS, vol. 2888, pp. 986–996. Springer, Heidelberg (2003). https://doi.org/10.1007/978-3-540-39964-3_62

Kwitt, R., Meerwald, P.: Salzburg texture image database. http://www.wavelab.at/sources/STex/. Accessed Feb 2018

Materka, A., Strzelecki, M.: Texture analysis methods - a review. Technical report, Institute of Electronics, Technical University of Lodz (1998)

Mirmehdi, M., Xie, X., Suri, J.: Handbook of Texture Analysis. Imperial College Press, London (2009)

Quinlan, J.R.: Induction of decision trees. Mach. Learn. 1(1), 81–106 (1986)

Raschka, S.: Principal Component Analysis (PCA) Step by Step, April 2014

Saul, L.K., Roweis, S.T.: Think globally, fit locally: unsupervised learning of low dimensional manifolds. J. Mach. Learn. Res. 4, 119–155 (2003)

Smith, L.I.: A tutorial on principal components analysis. Technical report, Cornell University, USA, 26 February 2002

Sun, Y.: Cost-sensitive boosting for classification of imbalanced data. Pattern Recogn. 40, 3358–3378 (2007)

Pietikainen, M., Viertola, J., Kyllonen, J., Ojala, T., Maenpaa, T., Huovinen, S.: Outex - new framework for empirical evaluation of texture analysis algorithms, vol. ICPR/1, pp. 701–706 (2002)

Tenenbaum, J.B., de Silva, V., Langford, J.C.: A global geometric framework for nonlinear dimensionality reduction. Science 290(5500), 2319 (2000)

Tuceryan, M., Jain, A.K.: Texture Analysis, pp. 207–248 (1998)

Wankhade, P.D.: A review on aspects of texture analysis of images. Int. J. Appl. Innov. Eng. Manag. (IJAIEM) 3, 229–232 (2014)

Webb, G.I.: Naïve Bayes, pp. 713–714. Springer, Boston (2010). https://doi.org/10.1007/978-0-387-30164-8

Wilcoxon, F.: Individual comparisons by ranking methods. In: Kotz, S., Johnson, N.L. (eds.) Breakthroughs in Statistics. Springer Series in Statistics (Perspectives in Statistics), pp. 196–202. Springer, New York (1992). https://doi.org/10.1007/978-1-4612-4380-9_16

Yang, C., Zhang, L., Lu, H., Ruan, X., Yang, M.-H.: Saliency detection via graph-based manifold ranking. In: Proceedings of the 2013 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2013, pp. 3166–3173. IEEE Computer Society, Washington, DC (2013)

Acknowledgment

This study was financed in part by the Coordenação de Aperfeiçoamento de Pessoal de Nível Superior - Brasil (CAPES) - Finance Code 001.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Nsimba, C.B., Levada, A.L.M. (2019). Nonlinear Dimensionality Reduction in Texture Classification: Is Manifold Learning Better Than PCA?. In: Rodrigues, J., et al. Computational Science – ICCS 2019. ICCS 2019. Lecture Notes in Computer Science(), vol 11540. Springer, Cham. https://doi.org/10.1007/978-3-030-22750-0_15

Download citation

DOI: https://doi.org/10.1007/978-3-030-22750-0_15

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-22749-4

Online ISBN: 978-3-030-22750-0

eBook Packages: Computer ScienceComputer Science (R0)