Abstract

Previous work has shown that motion performed by children is perceivably different from that performed by adults. What exactly is being perceived has not been identified: what are the quantifiable differences between child and adult motion for different actions? In this paper, we used data captured with the Microsoft Kinect from 10 children (ages 5 to 9) and 10 adults performing four dynamic actions (walk in place, walk in place as fast as you can, run in place, run in place as fast as you can). We computed spatial and temporal features of these motions from gait analysis, and found that temporal features such as step time, cycle time, cycle frequency, and cadence are different in the motion of children compared to that of adults. Children moved faster and completed more steps in the same time as adults. We discuss implications of our results for improving whole-body interaction experiences for children.

1 Introduction

Whole-body interaction requires robust and accurate recognition of the user’s motions. Most whole-body interaction or recognition research has focused on adults [1, 2]. However, there is reason to believe that children’s motions may differ significantly from those of adults based on differences in physiological factors such as body proportion [3] and development of the neuro-muscular control system [4]. In fact, previous work by Jain et al. [5] has shown that the motion of a child is perceivably different from the motion of an adult. The authors found that naïve viewers can perceive the difference between child and adult motion, when presented as point-light displays (points of lights representing each joint of the human body), at a rate significantly above chance, and with 70% accuracy for dynamic motions such as walking and running.

However, what exactly is being perceived has not been identified: what are the quantifiable differences between child and adult motion for dynamic actions, specifically, walking and running actions? If these quantifiable differences could be identified, we could improve recognition of child motion and enhance whole-body interaction experiences (e.g., exertion games [6]). To identify these differences, we considered features from literature on the analysis of gait. We chose to focus on these features because they have often been used to characterize walking and running motions from a physiological perspective [7,8,9].

This paper presents results on gait features that characterize the differences between adult and child motion. We chose a subset of walking and running motions from the Kinder-Gator dataset [10], collected by our research groups. This dataset includes child and adult motions from 10 children (ages 5 to 9) and 10 adults (ages 19 to 32) performing 58 actions forward-facing the Kinect [11]. Of the 58 actions in the dataset, we analyze four actions: walk in place (walk), walk in place as fast as you can (walk fast), run in place (run), and run in place as fast as you can (run fast). We grouped the gait features into two categories based on the gait literature: spatial features, dependent on distance, e.g., step width, step height, relative step height, and walk ratio; and temporal features, dependent on time, e.g., cadence, step time, cycle time, cycle frequency, and step speed. Each feature was computed on the motion data and results were analyzed statistically based on two factors: age group (child vs. adult) and action type.

We found a significant effect of age group across all temporal features except step speed. Hence, step time, cycle time, cycle frequency, and cadence are significantly different in the motion of children compared to that of adults. For spatial features, we found no significant effect of age group. There was a significant effect of action type in both temporal and spatial features; we found differences between pairs of actions across all features. The contributions of this paper are to (a) identify a set of nine features from gait analysis that can be used to (b) quantify the differences between child and adult motion. Our paper advances the understanding of child and adult motion in terms of measurable differences in their motion to inform the design and development of whole-body interactive systems (e.g., smart environments, exertion games). Our paper will also improve how motion recognition algorithms classify child and adult motion. These motion recognition algorithms can in turn help to improve how child actions are recognized in whole-body interaction applications.

2 Related Work

Prior work has studied recognition and perception of human motion, though most has focused on children or adults only, rather than comparing or contrasting the two.

2.1 Perception of Human Motion

Behavioral researchers [12,13,14] studied infants’ preferences for point-light display representations of human motion compared to non-human motion, and asserted that humans develop the ability to perceive and detect biological motion during infancy. Point-light displays have also been used in various perception studies to understand what identifying information people can perceive. Cutting and Kozlowski [15] and Beardsworth and Buckner [16] found that adults could identify themselves and their friends from point-light display representations of walking motions. Furthermore, Wellerdiek et al. [17] studied a wider range of motions, such as spontaneous dancing and ping-pong, and showed that adults were able to detect their own motion from point-light displays. Golinkoff et al. [18] found that children could identify different motions such as walking and dancing from point-light display videos. Jain et al. [5] examined whether naïve viewers could perceive the difference between the motion of a child and an adult from point-light displays. They found that adults can perceive the difference between child and adult motion at levels significantly above chance, and with about 70% accuracy for dynamic actions such as “run” and “walk”. However, the cues that helped participants identify whether the motion came from a child or an adult were not determined. In our study, we analyze child and adult motions to understand the features that differentiate child motion from adult motion.

2.2 Motion Recognition Using the Kinect

Human motion tracking has been studied extensively using image sequences [14, 19,20,21,22,23]. For example, Ceseracciu [20] analyzed people’s gait by processing grayscale images from video recordings using multiple cameras. With the advent of low-cost commercial motion tracking devices such as the Microsoft Kinect [11], researchers shifted attention to recognizing actions using depth sensors [24, 25] and developing Kinect-based applications [26,27,28,29]. Nirjon et al. [29] used the 3D skeleton joint coordinates tracked by the Kinect to distinguish between aggressive actions, such as kicking and punching. The Kinect has also been applied to hand gesture recognition [26, 30, 31]. Oh et al. [26] created the Hands-Up system, which issues commands to a computer by tracking adults’ hand gestures using a Kinect attached to the ceiling. Zafrulla et al. [30] and Simon Lang [31] both applied the Kinect to sign language recognition. Jun-Da Huang [27] created a Kinect-based system, KineRehab, to track the movements of young adults with muscle atrophy and cerebral palsy. KineRehab detects movements with 80% accuracy. Dimitrios et al. [28] used the Kinect to track adults’ dance movements and to automatically align the movement to those of a dance expert in the real world. Few researchers have used the Kinect to successfully recognize children’s motions. Zhang et al. [32] tracked children’s movements in a classroom using multiple Kinect sensors, but did not attempt to classify specific motions. Connell et al. [33] used a Wizard-of-Oz approach to elicit gestures and body movements from children when interacting with a Kinect. Lee et al. [6] and Smith and Gilbert [34] have both created interactive games for children to solve math problems by performing gestures tracked by the Kinect, but did not include real-time recognition.

In our study, we expand upon previous work by tracking and logging the motion of both children and adults with the Kinect in order to quantify the differences between these motions to inform better recognizers in the future.

3 Gait and Gait Analysis Background

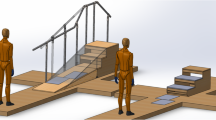

Gait is defined as one’s manner or style of walking [35]. The analysis of gait is defined as the systematic study of human locomotion [36, 37], using the cycles and steps in the motion (Fig. 1). A gait cycle (stride) is defined as the period between a foot contact on the ground to the next contact of the same foot on the ground again [7, 36]. A gait step is defined as the period between a foot contact on the ground to the next contact of the opposite foot, also known as half a gait cycle [7].

3.1 Gait Analysis

Wilhelm and Eduard Weber [38] pioneered the study of spatial and temporal gait features by showing that human locomotion can be measured quantitatively. This finding led to the development of different quantitative methods for analyzing gait kinematics, of which the most commonly used is the placement of 3D markers along segments of the human body [39]. Since then, gait kinematics have been studied extensively. Researchers [7, 8, 36, 40,41,42] have placed reflective markers on adults walking at different speeds, and have analyzed distance and time features of their gait such as stride length, step length, walk ratio, stride time, cadence, and speed. Gait analysis has also been used to identify individuals from their gait. Gianaria et al. [43] achieved 96% accuracy on classifying adults by gait by extracting gait features from Kinect data and feeding the features into a support vector machine (SVM). Prior work has also analyzed features from children’s gait [44,45,46,47]. Dusing and Thorpe [46] analyzed the cadence of children ages 1 to 10 walking at a self-selected pace, and found that cadence decreases as age increases. Barreira et al. [44] also studied the cadence of children walking freely in their environment. They found that children spent more time at lower cadences (0–79 steps/min) compared to cadences signifying moderate or vigorous physical intensity (120 steps/min).

A limitation of the studies reviewed above is that they mainly focus on either children or adults. Some prior work has compared child and adult motion, but it focuses either on very young children [48] or on older children [49] rather than on a range of younger and older children. Davis [48] extracted and fed features collected from children’s and adults’ gait into a two-class linear perceptron to differentiate between the walking motion of young children (ages 3 to 5) and adults. He found that gait features can be used to differentiate between young children’s and adults’ walking patterns with about 93–95% accuracy. Oberg et al. [49] also compared gait features across ages from 10 to 79 years, and found that the speed of the gait and the length of a step decrease with age. In our study, we extracted gait features such as cadence, step time, and step length from the motion of children ages 5 to 9 to quantify the differences between child and adult motion. We focus on ages within the gap of age ranges previously studied in the literature (ages 5 to 9) because of the rapid development of motor skills during this period [50, 51].

3.2 Gait Features

We surveyed the literature on gait analysis [7,8,9, 36, 40,41,42, 48, 49, 52], and identified ten features commonly used to characterize a person’s gait. Gait analysis has been historically utilized to analyze walking or running motion that involves moving a distance away from the starting point. One feature that is commonly examined in gait analysis is the step length. The step length measures the distance between feet along the direction of motion, which for moving motions is parallel to the floor. However, the walking and running actions in the Kinder-Gator dataset [10] involve moving in place instead of moving away from a starting point. Therefore, the direction of motion is perpendicular to the floor instead of parallel to the floor, so we calculated the perpendicular distance (i.e., step height). This adaptation from step length to step height is valid because both measure the peak distance between feet.

Of the ten features we identified, we eliminated cycle length, which is the distance between successive placements of the same foot (measured as two step lengths). We eliminated this feature because, for in-place motions, the same foot returns to nearly the same location between steps. Measuring successive placements of the same foot using two step heights instead of two step lengths would imply the participant is moving continuously upward, e.g., climbing up a ladder, rather than the in-place actions in our dataset [10]. We categorized the remaining nine gait features into spatial and temporal feature groups (Fig. 1). Spatial features are distance-based; they include features which are dependent on the length (height) of a step in their computation. Temporal features are time-based. They include features which are dependent on time in their computation. We chose these nine features because prior research has shown that they are unique per person [41], that is, analogous to a fingerprint, and can be used as a biometric measure [53]. The nine features include:

Step Width (m).

This is a spatial feature. The step width is the maximum lateral distance between feet during a step [9]. It is measured as the horizontal distance between the position of one foot and the other foot during a step. This feature evaluates how wide or narrow the step taken is.

Step Height (m).

This is a spatial feature and is an adaptation of the step length. Step length is defined as the distance by which a foot moves in front of the opposite foot [7, 36, 41, 54]. Since the walking and running actions in the Kinder-Gator dataset [10] involve moving in place rather than forward over a distance, we define the step height as the distance a foot travels above the other foot during a step. It is measured as how high above the ground vertically a foot is during the highest part of a step.

Relative Step Height.

This is a spatial feature, and is defined as the length of a step in relation to the height of the person [48]. It measures the ratio between the step length (we use step height because they are walking in place) and height of the person. The relative step height is an important feature to consider as it normalizes the step height by the person’s height, hence eliminating differences in step heights due to variations in height across people (e.g., children and adults).

Walk Ratio (m/steps/min).

This is a spatial feature. It is an index used to characterize a person’s walking pattern, and is measured as the ratio between the step length (we use step height) and the cadence (rate at which a person walks) [8, 40]. This feature is relevant to dynamic motions, as previous research notes that it reflects participants’ balance and coordination when performing a motion [8, 40].

Step Time (s).

This is a temporal feature which defines the time duration of a step [9]. It can further be defined as the time it takes a foot to complete one step. It is measured as the duration from when the foot leaves the ground to the time when the foot touches the ground again in completion of a step.

Cycle Time (s).

This is a temporal feature and is also known as stride time. The cycle time can be defined as the time it takes a foot to complete one cycle (two steps). It can be measured as the time between two consecutive steps of the same foot along the horizontal (we use vertical) trajectory [48].

Cycle Frequency (1/s).

This is a temporal feature and is also known as stride frequency. It is defined as the number of cycles per unit time and can be computed as the inverse of the cycle time [42, 48, 55]. Prior research shows that participants’ preferred cycle frequency optimizes energy cost [42].

Step Speed (m/s).

This is a temporal feature, and is defined as the ratio between the step length (we use step height) and the step time [9]. It defines how fast a step is completed and can help in understanding the pace of an action.

Cadence (steps/min).

This is a temporal feature and is defined as the rate at which a person walks. It is measured as the number of steps taken per minute [36, 41, 42, 52], and reflects the level of energy being exerted.

4 Dataset Used

The data used in this study is from our publicly available dataset, Kinder-Gator [10]. Kinder-Gator is a dataset our research groups collected from 10 children, ages 5 to 9 (5 females), and 10 adults, ages 19 to 32 (5 females), performing 58 natural motions, in-place, forward-facing the Microsoft Kinect 1.0. The dataset includes 3D positions (x: horizontal, y: vertical, z: depth) of 20 joints in the body at 30 frames per second (fps). Since we are focusing on walking and running motions, we used the four walking/running motions in the dataset, namely: “walk in place” (walk), “walk in place as fast as you can” (walk fast), “run in place” (run), and “run in place as fast as you can” (run fast). Each participant-action pair contained at least 10 steps. We created point-light display videos [14], which present each joint as a white dot on a black background animating through the course of the motion, for each participant-action pair.

5 Analysis

To compute the gait features for each person-action pair, we depend on knowledge of the stance phase (when the foot is on the ground [41]) and swing phase (when the foot is away from the ground [41]). Hence, we needed to identify the frames of each motion that corresponded to the step boundaries. We manually extracted these frames from the point-light display videos using a video annotation toolkit called EASEL [56]. Two researchers annotated subsets of the point-light display videos of the Kinect data for all of the actions. To ensure balanced labeling, the videos were counterbalanced between the two annotators by age group (child, adult) and action. Also, for similar actions (run & run fast, walk & walk fast), the same annotator annotated the same participant for both motions. For each video to be annotated, we created three tracks in EASEL. Frames for the start (foot is on the ground), peak (foot is at its maximum position), and end (foot has returned to the ground) were recorded on the first, second, and third track respectively. The start-peak-end frames together delimit one step. Previous research [36] has suggested that the analysis of gait can be done with either the foot, knee, hip, or pelvis joint, so we used the left foot joint from the Kinect skeleton tracking data in our analysis. Once all the frames had been annotated, we exported the annotation session, which creates an output CSV file with all the frames and the corresponding tracks recorded. We used this file for feature computation based on the start-peak-end frames.

We automated the feature computation process by extracting the corresponding foot positions and time stamps from the data for the frames we had manually extracted. The foot positions and time stamps were used to compute the gait features. For features involving computations per step or cycle, we averaged the values over the total number of steps or cycles in that motion. Therefore, each participant has one data point per action for each gait feature. A two-way repeated measures ANOVA was used to analyze the main effect of age group and action type and the interaction effect between them. Whenever we found no interaction effect between age group and action, we recomputed the two-way repeated measures ANOVA without the interaction effect in the model, and report that. For features where we found a significant effect of action, we conducted a Tukey post-hoc test to identify action pairs that are significantly different. We present results for all of the gait features we considered in our study. All means and standard deviations for features in the analysis can be found in Table 1, and they are expressed in units commonly used in the analysis of gait [57].
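The aggregation described above (look up the annotated frames, compute a value per step, then average to one data point per participant-action pair) can be sketched as follows. This is a minimal illustration under stated assumptions, not the code used in the study: the `frames` layout, the `annotations` triples, and all function names are hypothetical, and the EASEL export format is not reproduced here.

```python
from statistics import mean

def mean_feature(frames, annotations, per_step_feature):
    """Average a per-step feature over all annotated steps of one motion.

    frames: list of dicts, one per Kinect frame (hypothetical layout),
            e.g. {"t": seconds, "left_foot_y": metres}.
    annotations: list of (start, peak, end) frame indices, one per step.
    per_step_feature: function(start_frame, peak_frame, end_frame) -> float.
    """
    values = [
        per_step_feature(frames[s], frames[p], frames[e])
        for s, p, e in annotations
    ]
    return mean(values)

# Toy motion with two annotated steps (made-up numbers, not study data).
frames = [
    {"t": 0.0, "left_foot_y": 0.00},
    {"t": 0.1, "left_foot_y": 0.10},
    {"t": 0.2, "left_foot_y": 0.00},
    {"t": 0.3, "left_foot_y": 0.00},
    {"t": 0.4, "left_foot_y": 0.20},
    {"t": 0.5, "left_foot_y": 0.00},
]
annotations = [(0, 1, 2), (3, 4, 5)]

def duration(start, peak, end):
    # Time from the start frame to the end frame of one step.
    return end["t"] - start["t"]

# One data point for this (hypothetical) participant-action pair.
mean_step_time = mean_feature(frames, annotations, duration)
```

Passing the per-step computation in as a function keeps the averaging identical across features, which matches the single averaging rule stated in the text.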

Our results show that the following temporal features differ between child motion and adult motion: step time, cycle time, cycle frequency, and cadence.

5.1 Spatial Features

Distance-based features generally showed no significant effect of age group; hence, we conclude that they cannot be used to distinguish adult and child motion. However, these features show a significant effect of action type, which serves to validate our approach of using features from the analysis of gait, despite the differences in motion structure (i.e., in-place motions versus moving along a distance).

Step Width.

Recall that the width of a step is computed as the horizontal distance between both feet during a step. A two-way repeated measures ANOVA on step width with a between-subjects factor of age group (child, adult) and a within-subjects factor of action type (walk, walk fast, run, run fast) found no significant effect of age group (F1,18 = 0.18, n.s.). The lateral placement of the feet for adults is roughly the same as that for children. This similarity may be because both adults and children are spending less effort to control the horizontal distance between their feet since in-place actions involve vertical movements. However, we found a significant main effect of action (F3,57 = 5.27, p < 0.01). Post-hoc tests identified a difference between walk and run fast (p < 0.001). People have wider step widths when running fast than when walking (see Table 1), irrespective of age group. Bauby and Kuo [57] asserted that wider steps are an advantage in stability; thus, participants widen their steps when running fast compared to walking to improve coordination. No difference in step width was found between other pairs of actions.
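A minimal sketch of the step width computation, assuming the Kinect x-axis is the lateral direction (as in the dataset description) and that the feature is the largest left-right foot separation over the frames of one step. The function name and the sample positions are hypothetical.

```python
def step_width(left_x, right_x):
    """Maximum lateral (x-axis) distance between the feet during one step.

    left_x, right_x: per-frame x positions (metres) of the left and right
    foot joints for the frames between a step's start and end.
    """
    if len(left_x) != len(right_x) or not left_x:
        raise ValueError("need equal-length, non-empty position lists")
    return max(abs(l - r) for l, r in zip(left_x, right_x))

# Three frames of one step (made-up positions, not study data).
width = step_width([-0.10, -0.12, -0.11], [0.09, 0.10, 0.08])
```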

Step Height.

The height of a step is computed as the vertical distance between when the foot is on the ground, and when the foot is at its maximum position (peak). We focused on the left foot step heights because we annotated the left foot joints. We computed the ground level for each step as the minimum of the start and end positions. A two-way repeated measures ANOVA on step height with a between-subjects factor of age group (child, adult) and a within-subjects factor of action type (walk, walk fast, run, run fast) found no significant effect of age group (F1,18 = 0.002, n.s.). Our analysis found that the average step height for children and adults is about the same (see Table 1). This finding is surprising given the typical difference in height between children and adults. The range helps to illuminate what is really happening (children: min 0.01 m, max 0.25 m, median 0.11 m; adults: min 0.008 m, max 0.41 m, median 0.06 m). Thus, adults can raise their feet higher than children, but children tend to raise them proportionally higher on average. We also found a significant main effect of action (F3,57 = 5.03, p < 0.01). Post-hoc tests showed the following action pairs differed: run/run fast (p < 0.01), walk/run fast (p < 0.05), and walk fast/run fast (p < 0.01). Participants have higher step heights when running fast compared to the other actions (see Table 1): this confirms their exertion level was higher when running fast.
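The step height rule above (peak position minus a ground level taken from the lower of the start and end positions) can be sketched as below. The function name and the sample values are hypothetical; only the rule itself comes from the text.

```python
def step_height(start_y, peak_y, end_y):
    """Vertical distance (metres) from the ground level to the step's peak.

    The ground level is taken as the lower of the foot's y position at the
    start frame and at the end frame, as described in the text.
    """
    ground = min(start_y, end_y)
    return peak_y - ground

# Made-up left-foot y positions for one step, in metres.
height = step_height(start_y=0.02, peak_y=0.15, end_y=0.01)
```

Using the minimum of the two ground contacts keeps the estimate from shrinking when the tracked foot joint sits slightly higher at one end of the step than at the other.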

Relative Step Height.

The relative step height is computed as the ratio of the step height to the height of the person performing the action. We estimated the height of each Kinder-Gator participant using the difference between the head and the foot along the vertical dimension (y-axis). A two-way repeated measures ANOVA on relative step height with a between-subjects factor of age group (child, adult) and a within-subjects factor of action type (walk, walk fast, run, run fast) found no significant effect of age group (F1,18 = 1.51, n.s.). The large variance in the relative step height in children and adults may be the reason why we found no significant difference (Table 1). However, adults generally have a lower average relative step height compared to children. This finding follows, since their average step heights were the same, but adults are taller than children. We also found a significant effect of action (F3,57 = 4.91, p < 0.01). Post-hoc tests showed that the following action pairs differed: run/run fast (p < 0.01) and walk fast/run fast (p < 0.01). Like step height, children and adults have a higher relative step height when running fast compared to just running or walking fast (see Table 1).
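A short sketch of the normalization, assuming body height is estimated from one frame in which the participant stands upright (the text does not say which frame was used). The function names and the example heights are hypothetical.

```python
def estimate_body_height(head_y, foot_y):
    """Body height (metres) as the head-to-foot difference along the y-axis."""
    return head_y - foot_y

def relative_step_height(step_height_m, body_height_m):
    """Step height divided by body height (unitless)."""
    if body_height_m <= 0:
        raise ValueError("body height must be positive")
    return step_height_m / body_height_m

# The same absolute step height for two made-up body heights.
child_height = estimate_body_height(head_y=1.20, foot_y=0.00)
adult_height = estimate_body_height(head_y=1.75, foot_y=0.00)
child_relative = relative_step_height(0.11, child_height)
adult_relative = relative_step_height(0.11, adult_height)
```

With equal absolute step heights, the taller person ends up with the smaller ratio, which is the direction of the (non-significant) difference reported above.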

Walk Ratio.

The walk ratio, a measure of balance and coordination, is computed as the ratio of the step height and the cadence (a temporal feature). A two-way repeated measures ANOVA on walk ratio with a between-subjects factor of age group (child, adult) and a within-subjects factor of action type (walk, walk fast, run, run fast) found no significant effect of age group (F1,18 = 0.07, n.s.). The similarity in average walk ratio between children and adults (Table 1) may be because walking pattern is influenced by how high participants raise their foot during a motion, and there was no significant difference in the average step height between children and adults. The standard walk ratio for adults in the gait literature is 0.0065 m/steps/min [8, 40]. However, we found a lower walk ratio for adults (M = 0.00070 m/steps/min, SD = 0.00078). The lower walk ratio is because the maximum step height that has been achieved while moving in place in our dataset is much less than the average step lengths noted in the literature (M = 0.68 m) [40]. Children had an average walk ratio of 0.00062 m/steps/min (SD = 0.00061). We also found a significant effect of action (F3,57 = 7.64, p < 0.001). Post-hoc tests showed that the following action pairs differed: walk/walk fast (p < 0.001), walk/run fast (p < 0.01), and walk/run (p < 0.001). Participants’ walk ratios were higher when walking in place compared to any of the other actions (see Table 1). This result follows what we might expect: participants have more coordination and balance in the (slowest) walking action when performing the action in place.
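The following sketch illustrates why substituting step height for step length lowers the walk ratio by roughly an order of magnitude. The cadence of 100 steps/min and the 0.07 m step height are illustrative assumptions, not values from the study; only the 0.68 m step length is quoted from the text.

```python
def walk_ratio(step_distance_m, cadence_steps_per_min):
    """Step length (or step height, for in-place motion) divided by cadence."""
    if cadence_steps_per_min <= 0:
        raise ValueError("cadence must be positive")
    return step_distance_m / cadence_steps_per_min

# Same illustrative cadence, two different numerators.
forward_walking = walk_ratio(0.68, 100.0)  # step length from the literature
in_place = walk_ratio(0.07, 100.0)         # hypothetical in-place step height
```

Because the cadence is held fixed, the two ratios differ by exactly the ratio of the numerators, which is the effect the paragraph above attributes to in-place motion.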

5.2 Temporal Features

We discuss the computation of time-based gait features, and present our findings using the same statistical analysis we used for the spatial features. Time-based features (except step speed) show significant effects by both age group and action. Thus, these are promising features to use to differentiate between child and adult motion.

Step Time (Fig. 2a).

The time for each step during an action is computed as the difference between the time stamp for the end frame (when the foot is back on the ground) and the time stamp for the start frame (when the foot first leaves the ground). A two-way repeated measures ANOVA on step time with a between-subjects factor of age group (child, adult) and a within-subjects factor of action type (walk, walk fast, run, run fast) found a significant main effect of age group (F1,18 = 12.15, p < 0.01). Children moved faster compared to adults (Table 1). During collection of the Kinder-Gator dataset [10], we observed that, given the same prompts as adults (e.g., “run in place as fast as you can”), children were more energetic and enthusiastic when performing the actions. We also found a significant effect of action (F3,54 = 55.86, p < 0.0001). Post-hoc tests showed that the following action pairs differed: walk/walk fast (p < 0.001), walk fast/run fast (p < 0.001), walk fast/run (p < 0.01), walk/run fast (p < 0.001), and walk/run (p < 0.001). As expected, people have a faster step time when running, and become slower when walking fast or walking (Table 1). We also found a significant interaction effect (F3,54 = 4.74, p < 0.01). Children have a faster step time than adults when performing all of the actions except running fast (see Fig. 2a). It is possible that the prompt “run as fast as you can” encouraged adults to “pick up the pace” and exert themselves more than they did in the previous actions.
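The study used the time stamps recorded with the data. Where only frame indices are available, a rough equivalent can be derived from the nominal 30 fps rate, as in this sketch. It assumes a perfectly uniform frame rate, which real Kinect recordings may not have; the function name is hypothetical.

```python
def step_time_from_frames(start_index, end_index, fps=30.0):
    """Approximate step duration (seconds) from annotated frame indices.

    Assumes frames are evenly spaced at `fps` frames per second.
    """
    if end_index < start_index:
        raise ValueError("end frame must not precede start frame")
    return (end_index - start_index) / fps

# A step annotated from frame 12 (foot leaves the ground) to frame 27.
step_time = step_time_from_frames(12, 27)
```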

Cycle Time (Fig. 2b).

The time for a cycle is computed as the time it takes to complete two consecutive steps of the same foot. A two-way repeated measures ANOVA on cycle time with a between-subjects factor of age group (child, adult) and a within-subjects factor of action type (walk, walk fast, run, run fast) found a significant main effect of age group (F1,18 = 7.61, p < 0.05). Like step time, the children completed cycles with a shorter time duration compared to adults (Table 1). We also found a significant effect of action (F3,57 = 102.13, p < 0.0001). Post-hoc tests showed that the following action pairs differed: walk/run (p < 0.001), walk/run fast (p < 0.001), walk/walk fast (p < 0.001), run/run fast (p < 0.05), and walk fast/run fast (p < 0.001). Intuitively, people exhibit the fastest cycle time when running fast, and cycle time is slowest for walking (Table 1). Compared to step time, participants completed individual steps faster when running than when walking fast, but the time to complete successive steps (in this case, two steps of the same foot) is roughly the same in both actions. On the other hand, unlike step time, we found no significant interaction between age group and action. Figure 2b shows a similar relationship in cycle time as Fig. 2a shows in step time except for the walk action. One possible explanation is that people may walk with each foot at a slightly different frequency, depending on their exertion level. Previous research [58] has shown people exhibit a strength imbalance on their non-dominant side, which could also lead to higher variability in the motion.
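One way to obtain cycle times from the left-foot annotations is to take the time between the starts of successive left-foot steps. This reading is an assumption, since the text does not spell out which frames delimit a cycle. The names and sample times below are hypothetical.

```python
from statistics import mean

def cycle_times(step_start_times):
    """Durations (seconds) between successive step starts of the same foot."""
    return [
        later - earlier
        for earlier, later in zip(step_start_times, step_start_times[1:])
    ]

# Start times of four consecutive left-foot steps (made-up values).
left_foot_starts = [0.0, 1.0, 2.1, 3.1]
cycles = cycle_times(left_foot_starts)
mean_cycle_time = mean(cycles)
```

Four annotated steps of one foot yield three cycles, so a motion needs at least two annotated steps of the same foot to contribute a cycle time.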

Cycle Frequency (Fig. 2c).

The cycle frequency is computed as the inverse of the cycle time (1/cycle time). A two-way repeated measures ANOVA on cycle frequency with a between-subjects factor of age group (child, adult) and a within-subjects factor of action type (walk, walk fast, run, run fast) found a significant main effect of age group (F1,18 = 10.53, p < 0.01). Children have a higher cycle frequency compared to adults (Table 1). We also found a significant effect of action (F3,54 = 46.53, p < 0.0001). As with cycle time, post-hoc tests showed that the following action pairs differed: walk/run (p < 0.001), walk/run fast (p < 0.001), walk/walk fast (p < 0.01), run/run fast (p < 0.01), and walk fast/run fast (p < 0.001). Unlike cycle time, we found a significant interaction effect (F3,54 = 3.55, p < 0.05) between age group and action. Children have a higher cycle frequency than adults when performing all actions, except walking in place (see Fig. 2c). Previous research has correlated lower cycle frequency to a lower physical energy cost [42], and young children are not as experienced at optimizing this cost as adults, especially for high energy motions.
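Because cycle frequency is a reciprocal, averaging per-cycle frequencies is not the same as inverting the average cycle time. The sketch below computes per-cycle values first and then averages them, following the per-step or per-cycle averaging rule in Sect. 5. Whether the study did exactly this is an assumption, and the sample cycle times are made up.

```python
from statistics import mean

def cycle_frequency(cycle_time_s):
    """Cycles per second, the inverse of one cycle's duration."""
    if cycle_time_s <= 0:
        raise ValueError("cycle time must be positive")
    return 1.0 / cycle_time_s

cycle_times_s = [0.8, 1.0, 1.25]  # made-up cycle durations in seconds

# Option used here: invert each cycle, then average.
mean_of_frequencies = mean([cycle_frequency(t) for t in cycle_times_s])

# Alternative: average the durations, then invert once.
frequency_of_mean = cycle_frequency(mean(cycle_times_s))
```

The two results differ whenever cycle durations vary, so a reimplementation should state which convention it follows.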

Step Speed.

The speed of a step is computed as the ratio of step height to step time. A two-way repeated measures ANOVA on step speed with a between-subjects factor of age group (child, adult) and a within-subjects factor of action type (walk, walk fast, run, run fast) found no significant main effect of age group (F1,18 = 0.66, n.s.), unlike all the other temporal features. We believe age group is not significant here because step speed is highly dependent on step height, a distance-based feature (r = 0.90, p < 0.0001). However, we did find a significant effect of action (F3,57 = 19.06, p < 0.0001). Post-hoc tests showed that the following action pairs differed: run/run fast (p < 0.001), walk/run (p < 0.05), walk/run fast (p < 0.001), and walk fast/run fast (p < 0.001). Step speed shows the same pattern by action type with respect to action intensity as does step height due to changing levels of exertion.
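The dependence of step speed on step height can be illustrated with a small sketch: step speed is height divided by time, so when step times vary little, speed tracks height closely. The data below are made up for illustration, and the Pearson helper is a plain textbook implementation rather than the statistics software used in the study.

```python
from math import sqrt

def step_speed(step_height_m, step_time_s):
    """Step height divided by step time, in metres per second."""
    if step_time_s <= 0:
        raise ValueError("step time must be positive")
    return step_height_m / step_time_s

def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)

# Made-up per-participant means: heights vary fourfold, times only slightly.
heights = [0.05, 0.10, 0.15, 0.20]
times = [0.50, 0.45, 0.40, 0.40]
speeds = [step_speed(h, t) for h, t in zip(heights, times)]
r = pearson_r(heights, speeds)
```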

Cadence

(Fig. 2d). The cadence is computed as the ratio of the total number of steps taken during an action to the total time duration of that action. A two-way repeated measures ANOVA on cadence with a between-subjects factor of age group (child, adult) and a within-subjects factor of action type (walk, walk fast, run, run fast) found a significant main effect of age group (F1,18 = 5.87, p < 0.05). The number of steps taken per minute for children is higher compared to adults (Table 1). This higher number is expected because we know from step time that children move faster and are more enthusiastic in their motions than adults. Thus, it follows that children will complete more steps than adults in a similar time. Our analysis showed that adults in our dataset exhibited an average cadence of 96 ± 19 steps/min when walking in place, which is lower than the average walking cadence of 120 steps/min for adults observed in prior work [36]. This difference may be because adults did not walk long enough (<20 steps) during the collection of the Kinder-Gator dataset [10] compared to other gait studies, and may not have settled into a regular cadence. The finding could also be because the walking actions in the Kinder-Gator dataset [10] involved moving in place rather than moving forward along a distance. Similarly, children in our study had a lower cadence (M = 103 steps/min, SD = 13) than the average cadence of 138 steps/min in Dusing and Thorpe’s study for children ages 5 to 10 [46]. However, Barreira et al. [44] found in their open walking study that children spent more time walking at cadences of 100–119 steps/min than at cadences of 120+ steps/min. We also found a significant effect of action (F3,54 = 55.75, p < 0.0001). Post-hoc tests showed that the following action pairs differed: walk/run (p < 0.001), walk/run fast (p < 0.001), walk/walk fast (p < 0.001), run/run fast (p < 0.01), and walk fast/run fast (p < 0.001). Cadence exhibits a similar pattern by action type as cycle frequency and cycle time; we discuss the relationship between these features in the next section. We also found a significant interaction effect (F3,54 = 2.99, p < 0.05). Children have a higher cadence than adults when performing all actions except walk (see Fig. 2d). As with cycle frequency, this pattern suggests that children expend more energy and exhibit lower coordination than adults.
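
The cadence definition above (steps divided by duration, reported in steps/min) can be sketched as follows. The function name `cadence_steps_per_min` and the sample inputs are assumptions for illustration; in particular, the 16 steps in 10 seconds are made-up numbers chosen only because they produce a cadence of 96 steps/min, the same magnitude as the adult walking average reported above.

```python
def cadence_steps_per_min(num_steps: int, duration_s: float) -> float:
    """Return cadence in steps per minute.

    Cadence is the number of steps divided by the duration of the action;
    the duration is assumed to be in seconds and is scaled to minutes.
    """
    if duration_s <= 0:
        raise ValueError("duration must be positive")
    return 60.0 * num_steps / duration_s


# Hypothetical recording: 16 steps over 10 seconds.
example_cadence = cadence_steps_per_min(num_steps=16, duration_s=10.0)
print(f"cadence: {example_cadence:.0f} steps/min")
```

Because each action in the dataset contains fewer than 20 steps, a single extra or missed step changes the computed cadence noticeably, so short recordings should be interpreted with care.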

6 Discussion

We analyzed the differences between child and adult motion based on spatial and temporal gait features from prior work in the analysis of gait [7,8,9, 36, 40,41,42, 52, 55]. We found significant differences in temporal features such as step time, cycle time, cycle frequency, and cadence. Children have a shorter step time and cycle time, and a higher cycle frequency and cadence, compared to adults. Hence, these features are promising for differentiating between child and adult motion. We found no significant differences between child and adult motion for spatial features; thus, these features cannot be used to differentiate between the age groups.

6.1 Feature Dependency

We analyzed pairs of features for inter-dependency. Our results show a high correlation between cadence and cycle frequency (r = 0.97, p < 0.001). Although most papers we found define cadence as we have (number of steps taken per minute [36, 41, 42, 52]), Cooper [41] acknowledged that cadence is highly related to step frequency (frequency for half a gait cycle). Our correlation analysis also showed that features that are an aggregation of two other features are more dependent on the feature in the numerator than the feature in the denominator. For example, walk ratio, which has step height in the numerator and cadence in the denominator, is more correlated with step height (r = 0.86, p < 0.001) than cadence (r = −0.43, p < 0.001). Similarly, step speed is more correlated with its numerator step height (r = 0.90, p < 0.01) than its denominator step time (r = −0.16, n.s.). As expected, we see a high negative correlation between cycle time and cycle frequency since cycle frequency is the inverse of cycle time (r = −0.92, p < 0.001). Furthermore, we also found a strong positive correlation between cadence and cycle time (r = 0.91, p < 0.001), and step time and cycle time (r = 0.89, p < 0.001). These results show that the correlations between features can be used to corroborate findings from different features. However, it is important to note that each individual feature is worth considering, because the information they provide about particular parts of a person’s gait is unique.
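
The pairwise analysis described above can be illustrated with a small Pearson correlation sketch. The source does not state which correlation coefficient or software was used, so Pearson's r is an assumption here, as are the helper name `pearson_r` and the sample cycle times. The example also shows that a feature and its inverse are strongly but not perfectly negatively correlated, because the inverse relationship is not linear.

```python
from math import sqrt


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Return the Pearson correlation coefficient between two samples."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two samples of equal length (at least 2)")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sums of cross-products and squared deviations from the means.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return sxy / sqrt(sxx * syy)


# Hypothetical cycle times in seconds (not values from the study).
cycle_times = [0.5, 0.75, 1.0, 1.5, 2.0]
cycle_freqs = [1.0 / t for t in cycle_times]  # inverse of cycle time

r = pearson_r(cycle_times, cycle_freqs)
print(f"r(cycle time, cycle frequency) = {r:.2f}")
```

A linear measure such as Pearson's r will therefore fall short of −1 even for an exact inverse relationship, and averaging over steps or participants could move it further.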

6.2 Implications

Our findings have implications regarding improving whole-body recognizers for children and designing prompts for whole-body interaction experiences.

Whole-Body Recognition for Children.

There are some proposed recognizers for whole-body interaction [32, 41, 59]. However, since most of these recognizers are not tailored for children, and our results show that children’s movements are quantifiably different from adults’ in ways that might affect tracking and recognition (e.g., speed and movement time), we hypothesize that children’s motions will be poorly recognized. Thus, future work can examine tailoring whole-body recognizers to child motion qualities. For example, the coordination of a child’s movement depends on the action being performed. In our study, children exhibited higher coordination during the walking in place action than during the running fast action. Recognizers will need a higher tolerance for variance in the motion to be able to recognize less coordinated motion from children.

Whole-Body Interaction Prompts.

Our post-hoc analysis found no significant difference between walk fast and run in any feature except step time, and conversely, we found a significant difference between run and run fast in all features except step time. Furthermore, our results for cycle frequency and cadence show that children exhibited more energy than adults when walking and running. Taken together, we can assert that children demonstrate higher exertion than adults for the same action prompts. This finding suggests that designers of motion applications should tailor interaction prompts given to users based on the age group and desired level of exertion. For example, for higher levels of exertion, designers need only prompt children to “run,” but must prompt adults to “run fast.”

7 Limitations and Future Work

We are limited by the small number of steps per action (<20 steps) in the Kinder-Gator dataset [10], compared to 100 or more steps commonly seen in the analysis of gait (e.g., [9, 57]). This disparity may have led to increased variability in some of the features, resulting in lack of sensitivity for significant differences. However, there are precedents for this approach when studying children’s gait [46, 54, 60], likely due to their shorter attention spans [46]. Furthermore, we only focused on the left foot when computing the step features, and future work could consider both the left and right foot for the computation to investigate differences that may exist between the dominant and non-dominant foot. The increase in variability in some of the features may also be because people are less familiar with the experience of moving in-place, since the typical form of walking involves moving forward along a distance. However, it is worth noting that in-place motions are common in motion applications (e.g., exertion games [6]). Though the features we analyzed are representative of the most common features in the analysis of gait, they are not exhaustive, and future work could identify additional features which may be more discriminating for distinguishing child and adult motion. Lastly, future work can also classify children and adults based on their motions by training a binary classifier using these gait features.
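
To make the last suggestion concrete, the following is a minimal sketch of one possible binary classifier over gait features, here a nearest-centroid rule. It is not a method from this paper: the choice of classifier, the function names, the two features used (cadence and cycle frequency), and every number in the training data are hypothetical placeholders rather than measurements from the Kinder-Gator dataset.

```python
from math import dist


def centroid(rows: list[list[float]]) -> list[float]:
    """Return the per-feature mean of a list of feature vectors."""
    return [sum(column) / len(rows) for column in zip(*rows)]


def fit_nearest_centroid(features, labels):
    """Compute one centroid per class label."""
    groups: dict[str, list[list[float]]] = {}
    for row, label in zip(features, labels):
        groups.setdefault(label, []).append(row)
    return {label: centroid(rows) for label, rows in groups.items()}


def predict(model, row):
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(model, key=lambda label: dist(model[label], row))


# Hypothetical feature vectors: [cadence (steps/min), cycle frequency (Hz)].
# Placeholder values only. Features are left unscaled for brevity, so the
# larger-valued cadence dominates the distance; real use would standardize.
train_features = [[110.0, 1.00], [105.0, 0.95], [90.0, 0.80], [95.0, 0.85]]
train_labels = ["child", "child", "adult", "adult"]

model = fit_nearest_centroid(train_features, train_labels)
print(predict(model, [108.0, 0.97]))
```

A real evaluation would need held-out participants and more than a handful of samples per class, since features from the same person are not independent.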

8 Conclusion

In this paper, we aimed to answer the question: what are the quantifiable differences between child and adult motion for different whole-body motions? The main contributions of this paper are to (a) identify a set of nine features from the analysis of gait that can be used to (b) quantify the differences between child and adult motion. We analyzed data collected from 10 children (ages 5 to 9) and 10 adults performing four actions (walk in place, walk in place as fast as you can, run in place, run in place as fast as you can) based on spatial and temporal gait features. We found that temporal features such as step time, cycle time, cycle frequency, and cadence are significantly different in the motion of children compared to that of adults. Children move faster and complete more steps than adults in the same amount of time. However, we found no significant difference in step speed, possibly due to its high dependence on step height, a spatial feature. Similarly, we found no significant difference between age groups for any spatial feature. Our work has implications for improving whole-body recognition algorithms for child motion and the design of whole-body interaction experiences.

References

Park, S., Aggarwal, J.K.: Recognition of two-person interactions using a hierarchical Bayesian network. In: ACM SIGMM International Workshop on Video Surveillance (IWVS 2003), pp. 65–76 (2003). http://dx.doi.org/10.1145/982452.982461

Yang, H.D., Park, A.Y., Lee, S.W.: Human-robot interaction by whole body gesture spotting and recognition. In: International Conference on Pattern Recognition, pp. 774–777 (2006). http://dx.doi.org/10.1109/ICPR.2006.642

Huelke, D.F.: An overview of anatomical considerations of infants and children in the adult world of automobile safety design. Assoc. Adv. Automot. Med. 42, 93–113 (1998)

Thelen, E.: Motor development: a new synthesis. Am. Psychol. 50, 79–95 (1995). https://doi.org/10.1037/0003-066X.50.2.79

Jain, E., Anthony, L., Aloba, A., Castonguay, A., Cuba, I., Shaw, A., Woodward, J.: Is the motion of a child perceivably different from the motion of an adult? ACM Trans. Appl. Percept. 13(2), Article no. 22 (2016). http://dx.doi.org/10.1145/2947616

Lee, E., Liu, X., Zhang, X.: Xdigit: An arithmetic kinect game to enhance math learning experiences. In: Fun and Games Conference, pp. 722–736 (2013)

Hunter, J.P., Marshall, R.N., McNair, P.J.: Interaction of step length and step rate during sprint running. Med. Sci. Sports Exerc. 36, 261–271 (2004). https://doi.org/10.1249/01.MSS.0000113664.15777.53

Rota, V., Perucca, L., Simone, A., Tesio, L.: Walk ratio (step length/cadence) as a summary index of neuromotor control of gait: application to multiple sclerosis. Int. J. Rehabil. Res. 34, 265–269 (2011). https://doi.org/10.1097/MRR.0b013e328347be02

Terrier, P.: Step-to-step variability in treadmill walking: influence of rhythmic auditory cueing. PLoS ONE 7(10), e47171 (2012). https://doi.org/10.1371/journal.pone.0047171

Aloba, A., et al.: Kinder-Gator: the UF kinect database of child and adult motion. In: Diamanti, O., Vaxman, A. (eds.) Eurographics 2018 - Short Papers, pp. 13–16 (2018). http://dx.doi.org/10.2312/egs.20181033

Microsoft: Kinect for Windows. https://developer.microsoft.com/en-us/windows/kinect

Bertenthal, B.I., Proffitt, D.R., Kramer, S.J.: Perception of biomechanical motions by infants: implementation of various processing constraints. J. Exp. Psychol. Hum. Percept. Perform. 13, 577–585 (1987). https://doi.org/10.1037/0096-1523.13.4.577

Fox, R., McDaniel, C.: The perception of biological motion by human infants. Science 218, 486–487 (1982). https://doi.org/10.1126/science.7123249

Johansson, G.: Visual perception of biological motion and a model for its analysis. Percept. Psychophys. 14, 201–211 (1973). https://doi.org/10.3758/BF03212378

Cutting, J., Kozlowski, L.: Recognizing friends by their walk: gait perception without familiarity cues. Bull. Psychon. Soc. 9, 353–356 (1977). https://doi.org/10.3758/BF03337021

Beardsworth, T., Buckner, T.: The ability to recognize oneself from a video recording of one’s movements without seeing one’s body. Bull. Psychon. Soc. 18, 19–22 (1981). https://doi.org/10.3758/BF03333558

Wellerdiek, A.C., Leyrer, M., Volkova, E., Chang, D.-S., Mohler, B.: Recognizing your own motions on virtual avatars. In: ACM Symposium on Applied Perception (SAP 2013), pp. 138–138 (2013). http://dx.doi.org/10.1145/2492494.2501895

Golinkoff, R.M., et al.: Young children can extend motion verbs to point-light displays. Dev. Psychol. 38, 604–614 (2002). https://doi.org/10.1037/0012-1649.38.4.604

Wang, L., Hu, W., Tan, T.: Recent developments in human motion analysis. Pattern Recognit. 36, 585–601 (2003). https://doi.org/10.1016/S0031-3203(02)00100-0

Ceseracciu, E., Sawacha, Z., Cobelli, C.: Comparison of markerless and marker-based motion capture technologies through simultaneous data collection during gait: Proof of concept. PLoS ONE 9(3), e87640 (2014). https://doi.org/10.1371/journal.pone.0087640

Steele, K., Corazza, S., Scanlan, S., Sheets, A., Andriacchi, T.P.: Markerless vs. marker-based motion capture: a comparison of measured joint centers. In: North American Conference on Biomechanics Annual Meeting, pp. 5–9 (2009)

Nieto-Hidalgo, M., Ferrández-Pastor, F.J., Valdivieso-Sarabia, R.J., Mora-Pascual, J., García-Chamizo, J.M.: A vision based proposal for classification of normal and abnormal gait using RGB camera. J. Biomed. Inform., 82–89 (2016). http://dx.doi.org/10.1016/j.jbi.2016.08.003

Bobick, A.F., Davis, J.W.: The recognition of human movement using temporal templates. IEEE Trans. Pattern Anal. Mach. Intell., 257–267 (2001). http://dx.doi.org/10.1109/34.910878

Asteriadis, S., Chatzitofis, A., Zarpalas, D., Alexiadis, D.S., Daras, P.: Estimating human motion from multiple kinect sensors. In: Conference on Computer Vision/Computer Graphics Collaboration Techniques and Applications (MIRAGE 2013), 6 p. (2013). http://dx.doi.org/10.1145/2466715.2466727

Berger, K., Ruhl, K., Schroeder, Y., Bruemmer, C., Scholz, A., Magnor, M.: Markerless motion capture using multiple color-depth sensors. Vision, Model. Vis., 317–324 (2011). http://dx.doi.org/10.2312/PE/VMV/VMV11/317-324

Oh, J., Jung, Y., Cho, Y., Hahm, C., Sin, H., Lee, J.: Hands-up: motion recognition using kinect and a ceiling to improve the convenience of human life. In: ACM SIGCHI Conference on Human Factors in Computing Extended Abstracts (CHI EA 2012), pp. 1655–1660 (2012). http://dx.doi.org/10.1145/2212776.2223688

Huang, J.-D.: Kinerehab: a kinect-based system for physical rehabilitation: a pilot study for young adults with motor disabilities. In: ACM SIGACCESS Conference on Computers and Accessibility (ASSETS 2011), pp. 319–320 (2011). http://dx.doi.org/10.1145/2049536.2049627

Alexiadis, D.S., Kelly, P., Daras, P., O’Connor, N.E., Boubekeur, T., Moussa, M.B.: Evaluating a dancer’s performance using kinect-based skeleton tracking. In: ACM International Conference on Multimedia, pp. 659–662 (2011). http://dx.doi.org/10.1145/2072298.2072412

Nirjon, S., et al.: Kintense: a robust, accurate, real-time and evolving system for detecting aggressive actions from streaming 3D skeleton data. In: IEEE International Conference on Pervasive Computing and Communications (PerCom 2014), pp. 2–10 (2014). http://dx.doi.org/10.1109/PerCom.2014.6813937

Zafrulla, Z., Brashear, H., Hamilton, H.: American sign language recognition with the kinect. In: International Conference on Multimodal Interfaces, pp. 279–286 (2011). http://dx.doi.org/10.1145/2070481.2070532

Lang, S.: Sign language recognition using kinect. In: International Conference on Artificial Intelligence and Soft Computing, pp. 394–402 (2011)

Zhang, B., Nakamura, T., Ushiogi, R., Nagai, T., Abe, K., Omori, T., Oka, N., Kaneko, M.: Simultaneous children recognition and tracking for childcare assisting system by using kinect sensors. J. Sig. Inf. Process. 07, 148–159 (2016). https://doi.org/10.4236/jsip.2016.73015

Connell, S., Kuo, P.-Y., Liu, L., Piper, A.M.: A Wizard-of-Oz elicitation study examining child-defined gestures with a whole-body interface. In: ACM Conference on Interaction Design and Children (IDC 2013), pp. 277–280 (2013). http://dx.doi.org/10.1145/2485760.2485823

Smith, T.R., Gilbert, J.E.: Dancing to design: a gesture elicitation study. In: ACM Conference on Interaction Design and Children (IDC 2018), pp. 638–643 (2018). https://dx.doi.org/10.1145/3202185.3210790

Fish, D., Nielsen, J.-P.: Clinical assessment of human gait. J. Prosthetics Orthot. 5, 39–48 (1993). https://doi.org/10.1097/00008526-199304000-00005

Gage, J.R., Deluca, P.A., Renshaw, T.S.: Gait analysis: principles and applications. J. Bone Jt. Surg. 77, 1607–1623 (1995)

Tao, W., Liu, T., Zheng, R., Feng, H.: Gait analysis using wearable sensors. Sensors 12, 2255–2283 (2012). https://doi.org/10.3390/s120202255

Weber, W., Weber, E.: Mechanics of Human Walking Apparatus. Springer, Heidelberg (1992)

Benedetti, M., Cappozzo, A.: Anatomical landmark definition and identification in computer aided movement analysis in a rehabilitation context II (Internal Report). U Degli Stud. La Sapienza, 31 p. (1994)

Sekiya, N., Nagasaki, H.: Reproducibility of the walking patterns of normal young adults: test-retest reliability of the walk ratio (step-length/step-rate). Gait Posture 7, 225–227 (1998). https://doi.org/10.1016/S0966-6362(98)00009-5

Cooper, R.A.: Rehabilitation Engineering Applied to Mobility and Manipulation. CRC Press, Boca Raton (1995)

Marais, G., Pelayo, P.: Cadence and exercise: physiological and biomechanical determinants of optimal cadences-Practical applications. Sports Biomech. 2, 103–132 (2003). https://doi.org/10.1080/14763140308522811

Gianaria, E., Grangetto, M., Lucenteforte, M., Balossino, N.: Human classification using gait features. In: International Workshop on Biometric Authentication, pp. 16–27 (2014). http://dx.doi.org/10.1007/978-3-319-13386-7_2

Barreira, T.V., Katzmarzyk, P.T., Johnson, W.D., Tudor-Locke, C.: Cadence patterns and peak cadence in US children and adolescents: NHANES, 2005–2006. Med. Sci. Sports Exerc. 44, 1721–1727 (2012). https://doi.org/10.1249/MSS.0b013e318254f2a3

Shultz, S.P., Hills, A.P., Sitler, M.R., Hillstrom, H.J.: Body size and walking cadence affect lower extremity joint power in children’s gait. Gait Posture 32, 248–252 (2010). https://doi.org/10.1016/j.gaitpost.2010.05.001

Dusing, S.C., Thorpe, D.E.: A normative sample of temporal and spatial gait parameters in children using the GAITRite(R) electronic walkway. Gait Posture 25, 135–139 (2007)

Bjornson, K.F., Song, K., Zhou, C., Coleman, K., Myaing, M., Robinson, S.L.: Walking stride rate patterns in children and youth. Pediatr. Phys. Ther. 23, 354–363 (2011). https://doi.org/10.1097/PEP.0b013e3182352201

Davis, J.W.: Visual categorization of children and adult walking styles. In: International Conference on Audio- and Video-Based Biometric Person Authentication (AVBPA 2001), pp. 295–300 (2001). http://dx.doi.org/10.1007/3-540-45344-X_43

Oberg, T., Karsznia, A., Oberg, K.: Basic gait parameters: reference data for normal subjects, 10–79 years of age. J. Rehabil. Res. Dev. 30, 210–223 (1993)

Piaget, J.: Piaget’s Theory. In: Mussen, P. (ed.) Handbook of Child Psychology. Wiley, New York (1983)

Thomas, J.R.: Acquisition of motor skills: information processing differences between children and adults. Res. Q. Exerc. Sport 51, 158–173 (1980). https://doi.org/10.1080/02701367.1980.10609281

Moe-Nilssen, R., Helbostad, J.L.: Estimation of gait cycle characteristics by trunk accelerometry. J. Biomech. 37, 121–126 (2004). https://doi.org/10.1016/S0021-9290(03)00233-1

Bhanu, B., Han, J.: Bayesian-based performance prediction for gait recognition. In: Workshop on Motion and Video Computing (MOTION 2002), pp. 145–150 (2002). http://dx.doi.org/10.1109/MOTION.2002.1182227

Rose-Jacobs, R.: Development of gait at slow, free, and fast speeds in 3- and 5-year-old children. Phys. Ther. 63(8), 1251–1259 (1983). http://dx.doi.org/10.1093/ptj/63.8.1251

Danion, F., Varraine, E., Bonnard, M., Pailhous, J.: Stride variability in human gait: the effect of stride frequency and stride length. Gait Posture 18, 69–77 (2003). https://doi.org/10.1016/S0966-6362(03)00030-4

Wang, I., et al.: EGGNOG: a continuous, multi-modal data set of naturally occurring gestures with ground truth labels. In: IEEE International Conference on Automatic Face Gesture Recognition (FG 2017), pp. 414–421 (2017). http://dx.doi.org/10.1109/FG.2017.145

Bauby, C.E., Kuo, A.D.: Active control of lateral balance in human walking. J. Biomech. 33, 1433–1440 (2000). https://doi.org/10.1016/S0021-9290(00)00101-9

Sadeghi, H., Allard, P., Prince, F., Labelle, H.: Symmetry and limb dominance in able-bodied gait: a review. Gait Posture 12, 34–45 (2000). https://doi.org/10.1016/S0966-6362(00)00070-9

Yang, H.D., Park, A.Y., Lee, S.W.: Gesture spotting and recognition for human-robot interaction. IEEE Trans. Robot. 23, 256–270 (2007). https://doi.org/10.1109/TRO.2006.889491

Lechner, D.E., McCarthy, C.F., Holden, M.K.: Gait deviations in patients with juvenile rheumatoid arthritis. Phys. Ther. 67(9), 1335–1341 (1987). https://doi.org/10.1093/ptj/67.9.1335

Acknowledgements

This work is partially supported by National Science Foundation Grant Award #IIS-1552598. Opinions, findings, and conclusions or recommendations expressed in this paper are those of the authors and do not necessarily reflect these agencies’ views.

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Aloba, A. et al. (2019). Quantifying Differences Between Child and Adult Motion Based on Gait Features. In: Antona, M., Stephanidis, C. (eds) Universal Access in Human-Computer Interaction. Multimodality and Assistive Environments. HCII 2019. Lecture Notes in Computer Science(), vol 11573. Springer, Cham. https://doi.org/10.1007/978-3-030-23563-5_31

DOI: https://doi.org/10.1007/978-3-030-23563-5_31

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-23562-8

Online ISBN: 978-3-030-23563-5

eBook Packages: Computer Science (R0)