Abstract

We propose a method to quickly process non-rigid registration between 3D deformable data using a GPU-based non-rigid ICP(Iterative Closest Point) algorithm. In this paper, we sequentially acquire whole-body model data of a person moving dynamically from a single RGBD camera. We use Dense Optical Flow algorithm to find the corresponding pixels between two consecutive frames. Next, we select nodes to estimate 3D deformation matrices by uniform sampling algorithm. We use a GPU-based non-rigid ICP algorithm to estimate the 3D transformation matrices of each node at high speed. We use non-linear optimization algorithm methods in the non-rigid ICP algorithm. We define energy functions for an estimate the exact 3D transformation matrices. We use a proposed GPU-based method because it takes a lot of computation time to calculate the 3D transformation matrices of all nodes. We apply a 3D transformation to all points with a weight-based affine transformation algorithm. We demonstrate the high accuracy of non-rigid registration and the fast runtime of the non-rigid ICP algorithm through experimental results.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

In recent years there have been many studies on the 3D restoration of deformable objects in successive frames [1,2,3,4]. However, it is still challenging to reconstruct 3D models of deformable objects from consecutive frames in the field of computer vision and graphics [2]. One of the methods for reconstructing the 3D model of a deformable object is to estimate the transformation matrix for the object’s transformed part from a continuous frame. One of the more detailed approaches is to use non-rigid deformation [5,6,7] to transform the vertices of the model. This approach uses spatial transformations that consist of graph vertices. The transformation for one vertex affects the deformation of the neighboring space because it is associated with the connected node. They solve the non-linear minimization problem and estimate the optimal value of the affine transformation matrix for each vertex. However, because it takes a lot of computation time to estimate the transformation matrix for all vertices of an object, most of them work offline or they use the GPU’s parallel processing technique to reduce non-rigid registration computation time. Another method is to use only non-rigid parts, except rigid parts like backgrounds [2, 8]. This method can reduce calculation time. Another method [8, 12, 17, 18] is to apply the GPU’s parallel processing technique to reduce non-rigid registration computation time. If calculations do not need to be calculated sequentially, the application of parallelism can produce very effective results.

The goal of this paper is to quickly and precisely match sequential 3D data of a dynamically moving whole body model. Our approach does not estimate the 3D transformation matrix for all points to efficiently perform deformable registration. After selecting uniformly sampled points as in [5], we obtain the 3D transformation matrix. Unselected points are related to the movement of neighboring points. This property can have the form of a graph structure [5]. This method results in computationally very efficient and smooth outputs. However, it still requires a high computational complexity to estimate the 3D transformation matrix of all selected points. Therefore, a lot of processing time is needed. We propose a method that a fast and accurate estimation of the 3D transformation matrix of selected points using the GPU’s parallel processing.

Figure 1 shows our system flow chart. First, we obtain dynamic full-body model data from a single RGBD camera. Moreover, we use only the non-rigid part by removing the background based on the distance threshold. We use two sequential data as inputs to our system, as shown in Fig. 1. The input data is used as a pair of color and depth images. We use Gunnar Farneback’s optical flow [10] to search for the correspondence of sequence data. We used a simple method of selecting pixels at regular intervals as nodes. We project the pixels selected as nodes into the 3D space based on the camera coordinate system. Next, we estimate the 3D affine transformation matrix between the previous frame and the current frame for all nodes through our proposed GPU-based non-rigid ICP algorithm. The 3D transformation matrix of each node has a size of 3 × 4 in the form of an affine matrix. Finally, we use weight-based affine transformation method we propose to apply the 3D transformation of all points.

The last section of this paper shows the accuracy and runtime test results for non-rigid ICP for all nodes. It also shows the result of applying the weight-based affine transformation method.

2 Corresponding Pixel Search, Node Selection, and 3D Reconstruction [-]

2.1 Corresponding Pixel Search, Node Selection

We search for corresponding pixels for successive frames using Optical-flow algorithm. We then use the uniform-sampling method to select pixels without using all the pixels. Selected pixels are used as nodes. We used a uniform-sampling method to select pixels at regular intervals. The selected nodes estimate 3D transformation matrix using the method in Sect. 3. The unselected pixels estimate 3D transformation matrix using the 3D transformation matrix of neighboring nodes.

We use Gunnar Farneback’s optical flow [10] algorithm to find the corresponding pixels of the previous frame and the current frame. Figure 2 shows an example of the Optical-flow algorithm.

2.2 3D Reconstruction

In this paper, we aim to do 3D non-rigid registration of deformable whole body model. So we project all the pixels into the 3D space. We transformed the pixels into the 3D coordinate system of the camera reference using Eq. (1).

In Eq. (1), (x, y) is the pixel coordinates in the color image. \( P_{{\left( {x,y} \right)}} \) is a 3D point based on the camera coordinate system. That is, \( P_{{\left( {x,y} \right)}} \) is a result of converting a 2D pixel into 3D coordinates. \( D_{{\left( {x,y} \right)}} \) is the depth value for the pixel obtained from the depth image. \( K \) is an internal parameter of the camera. The resolution of the color image and the depth image are the same.

3 Non-rigid Registration Using GPU

3.1 Grouping Nearest Neighbor Node

To estimate the 3D transformation matrix between two point groups in 3D space, we must know three or more corresponding points. When the number of corresponding points is less than three, the 3D rotation vector cannot be estimated. In this case, only a 3D translation vector can be estimated.

In this paper, nodes are grouped to estimate the 3D transformation matrix of selected nodes. Our method is as follows. As shown in Fig. 3, we set a distance to a threshold from one node. We have grouped N-closest points within this area. If there are not N-number of nodes in this area, we have chosen the center node. We applied this method to all nodes. If the grouping is successful, the movement of neighbor points affects all neighbor points.

When the grouping method is applied as shown in Fig. 3, edges are created between the points. And the overall structure shows the graph form. Therefore, we refer to these points as nodes in this paper. In Fig. 3, \( i \) is the index of the selected node, and \( j \) is the index of the neighboring node.

3.2 Energy Function

In general, Non-rigid registration algorithm for the deformation model uses a method of defining the 3D transformation matrix as an affine matrix and optimizing the non-rigid ICP energy function [1,2,3, 5,6,7,8]. In this paper, we use the affine matrix as the 3D transformation matrix of each node. The size of the affine matrix is 3 × 4. We defined the energy function to estimate the 3D transformation matrix of all nodes for the deformation model for the previous frame and the current frame. This section describes how to optimize energy functions at high speed using the GPU. We have defined two energy functions, \( E_{data} \) and \( E_{rigid} \), as in Eq. (2). We then use Levenberg-Marquardt (LM) algorithm to optimize the energy function and estimate the affine matrix for all nodes.

In the Eq. (2), \( X \) is defined as \( X = \left\{ {X_{1} , \cdots ,X_{n} } \right\} \). \( X_{i} \) is a 3 \( \times \) 4 affine transformation matrix for the \( i \)-th node. \( E_{data} \) and \( E_{rigid} \) are called the data term and rigid term. \( E_{rigid} \) is also called an orthogonality constraint [2, 11]. \( \alpha_{data} \) and \( \alpha_{rigid} \) are constant values for weighting each energy function.

3.2.1 Data Term

We define the Data term as Eq. (3). The data term is an energy term for estimating the optimal 3D transformation matrix for a point group corresponding to a specific 3D point cloud. After applying the method in Sect. 3.1, suppose that there is one group of point clouds. In Eq. (3), \( M \) is the total number of nodes for the whole-body model, and \( N \) is the number of grouped nodes. \( X_{i} \) represents the affine matrix of the \( i \)-th node. \( P \) is the node of the previous frame, and \( Q \) represents the node corresponding to \( P \) in the current frame.

3.2.2 Rigid Term

We define the rigid term as Eq. (4). The rigid term allows Affine matrix to have a property similar to a rigid transformation matrix. In other words, the rigid term is an energy term that causes the affine matrix to have the property of an orthogonal matrix. In Eq. (4), \( a_{i} = \left( {a_{i1} , a_{i2} , a_{i3} } \right) \) is a row vector of \( X_{i} \) in Eq. (3). \( a_{i} = \left( {a_{i1} , a_{i2} , a_{i3} } \right) \) is shown in Fig. 4. \( i \) is the index number of the nodes. \( M \) is the total number of nodes for the whole body model.

3.3 Optimization

3.3.1 Energy Minimization Method

We use a GPU-based optimization algorithm to obtain the \( X \) matrix with the size of \( E_{tol} \left( X \right) \) in Sect. 3.2 minimized. The optimization algorithm as shown in Eq. (5) uses a combination of Levenberg-Marquardt (LM) algorithm and the Gauss-Newton algorithm. In Eq. (5), \( {\mathcal{J}} \) is a Jacobian matrix for \( E_{tol} \left( X \right) \). \( \lambda \) is a constant value and \( I \) is an identity matrix.

We initialize the initial affine transformation matrix for each node to the Identity matrix. We used Eq. (6) to find \( h \) of Eq. (5). \( A \) of Eq. (6) uses \( \left( {{\mathcal{J}}^{T} {\mathcal{J}} + \lambda I} \right) \) in Eq. (5). B of Eq. (6) uses \( - {\mathcal{J}}^{T} E_{tol} \left( X \right) \) in Eq. (5). We used GPU based PCG (Preconditioned Conjugate Gradient) algorithm for the linear solver. We applied the variation \( h \) obtained from the linear solver for optimization to Eq. (7). Equation (7) is an expression for reaching the optimal solution of X.

The size of the affine transformation matrix for each node is 3 \( \times \) 4. Therefore, we must estimate 12 non-parameters per node through the solver. The larger the number of non-parameter, the larger the number of nodes, the higher the amount of computation required to obtain the Jacobian matrix. Section 3.3.2 suggests how to process Jacobian operations at high speed using the GPU.

3.3.2 GPU Parallelism for Jacobian Calculations

Independent and iterative calculation structures are advantageous for parallel processing systems. Jacobian calculations are dependent on a group of nodes and are independent of other groups. We designed the data term and rigid term as two asynchronous kernels in parallel, as shown in Fig. 5.

In Data term kernel, we have assigned the number of CUDA blocks equal to the number of nodes. We used GPU shared memory as much as possible by assigning one node group to one CUDA block. CUDA shared memory has the advantage of faster access speed than CUDA global memory. This structure makes maximum use of parallel processing. The number of threads in Data term kernel is ‘neighboring node size + 1’. The reason for adding one is because it includes the core node. In Rigid term kernel, the number of CUDA blocks is the total number of nodes divided by the number of threads allocated in a block.

4 Weight-Based Affine Transformation

In this paper, we propose a weight-based affine transformation method to apply the spatial transformation of all points using the affine transformation matrix for each point. Our method is defined by Eq. (8). This method weights each transformation matrix of all points to estimate a 3D transformation matrix of points. We used the exponential function to define the weighted value in inverse proportion to the distance as shown in Eq. (8). The nearest node has a greater effect than the farther node.

In the above equation, \( P \) is the point of the previous frame, and \( P^{{\prime }} \) is the result of applying the weight-based affine transformation. And \( i \) denotes an index of a node. \( N \) is the total number of nodes. \( X_{i} \) is the affine transformation matrix of the \( i \)-th node and \( \lambda \) is a constant.

5 Experimental Results

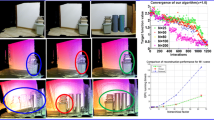

We used one Intel RealSense D415 camera. All the experiments are worked in a general purpose Intel i7-4790 computer running Windows 10 (64 bit) and an NVIDIA Titan XP graphics card. We evaluated the performance by experimenting with four data sets as shown in Table 1. Table 1 shows information in the dataset and the experimental results. It shows the average number of points and the average number of selected nodes in order. And it shows the calculation time of the non-rigid ICP algorithm and distance error value between nodes. The average non-rigid ICP calculation time was measured at 71.17 FPS. The average distance error of the nodes was measured as 4.24 mm. Figure 6 shows the result of applying our distortion transformation using the transformation matrix obtained from the non-rigid ICP in the previous frame and the current frame. In Fig. 6(a), the red part is the 3D data of the previous frame. In Fig. 6(b), the red color is the result of our proposed method.

6 Conclusion

This paper presents a non-rigid registration method for deformable objects and a GPU-based non-rigid ICP acceleration method. To reduce the amount of computation, we first select uniformly sampled points and then estimate the transformation matrix of each point. And we proposed a method of smooth registration of all points through the weight-based affine transformation method. Also, we evaluated the accuracy and speed of the proposed method through experiments.

We used a single camera, and there was an occlusion problem between frames due to the movement of the whole body model. It caused a loss of 3D data. Also, when the deformation of the model between successive frames occurs to a large extent, we have a problem that the error increases. In the future, we will study ways to improve our limitations.

References

Slavcheva, M., Baust, M., Ilic, S.: SobolevFusion: 3D reconstruction of scenes undergoing free non-rigid motion. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 2646–2655 (2018)

Guo, K., Xu, F., Wang, Y., Liu, Y., Dai, Q.: Robust non-rigid motion tracking and surface reconstruction using l0 regularization. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 3083–3091 (2015)

Xu, W., Salzmann, M., Wang, Y., Liu, Y.: Deformable 3D fusion: from partial dynamic 3D observations to complete 4D models. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 2183–2191 (2015)

Zeng, M., Zheng, J., Cheng, X., Liu, X.: Templateless quasi-rigid shape modeling with implicit loop-closure. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 145–152 (2013)

Sumner, R.W., Schmid, J., Pauly, M.: Embedded deformation for shape manipulation. ACM Trans. Graph. (TOG) 26(3), 80 (2007)

Szeliski, R., Lavallée, S.: Matching 3-D anatomical surfaces with non-rigid deformations using octree-splines. Int. J. Comput. Vis. 18(2), 171–186 (1996)

Bonarrigo, F., Signoroni, A., Botsch, M.: Deformable registration using patch-wise shape matching. Graph. Models 76(5), 554–565 (2014)

Newcombe, R.A., Fox, D., Seitz, S.M.: Dynamicfusion: reconstruction and tracking of non-rigid scenes in real-time. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 343–352 (2015)

Elanattil, S., Moghadam, P., Sridharan, S., Fookes, C., Cox, M.: Non-rigid reconstruction with a single moving RGB-D camera. In: 2018 24th International Conference on Pattern Recognition (ICPR), pp. 1049–1055. IEEE, August 2018

Farnebäck, G.: Two-frame motion estimation based on polynomial expansion. In: Bigun, J., Gustavsson, T. (eds.) SCIA 2003. LNCS, vol. 2749, pp. 363–370. Springer, Heidelberg (2003). https://doi.org/10.1007/3-540-45103-X_50

Li, K., Yang, J., Lai, Y.K., Guo, D.: Robust non-rigid registration with reweighted position and transformation sparsity. IEEE Trans. Vis. Comput. Graph. 25, 2255–2269 (2018)

Dou, M., et al.: Fusion4D: real-time performance capture of challenging scenes. ACM Trans. Graph. (TOG) 35(4), 114 (2016)

Zach, C.: Robust bundle adjustment revisited. In: Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T. (eds.) ECCV 2014. LNCS, vol. 8693, pp. 772–787. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-10602-1_50

Loke, M.H., Dahlin, T.: A comparison of the Gauss-Newton and quasi-Newton methods in resistivity imaging inversion. J. Appl. Geophys. 49(3), 149–162 (2002)

Hartley, H.O.: The modified Gauss-Newton method for the fitting of non-linear regression functions by least squares. Technometrics 3(2), 269–280 (1961)

Moré, J.J.: The Levenberg-Marquardt algorithm: implementation and theory. In: Watson, G.A. (ed.) Numerical Analysis. LNM, vol. 630, pp. 105–116. Springer, Heidelberg (1978). https://doi.org/10.1007/BFb0067700

Zollhöfer, M., et al.: Real-time non-rigid reconstruction using an RGB-D camera. ACM Trans. Graph. (ToG) 33(4), 156 (2014)

Newcombe, R.A., et al.: Kinectfusion: Real-time dense surface mapping and tracking. ISMAR 11(2011), 127–136 (2011)

Yang, Z., Zhu, Y., Pu, Y.: Parallel image processing based on CUDA. In: 2008 International Conference on Computer Science and Software Engineering, vol. 3, pp. 198–201. IEEE (2008)

Acknowledgments

This work was supported by ‘The Cross-Ministry Giga KOREA Project’ grant funded by the Korea government (MSIT) (No. GK17P0300, Real-time 4D reconstruction of dynamic objects for ultra-realistic service).

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Lee, J., Kim, Es., Park, SY. (2019). 3D Non-rigid Registration of Deformable Object Using GPU. In: Morales, A., Fierrez, J., Sánchez, J., Ribeiro, B. (eds) Pattern Recognition and Image Analysis. IbPRIA 2019. Lecture Notes in Computer Science(), vol 11867. Springer, Cham. https://doi.org/10.1007/978-3-030-31332-6_53

Download citation

DOI: https://doi.org/10.1007/978-3-030-31332-6_53

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-31331-9

Online ISBN: 978-3-030-31332-6

eBook Packages: Computer ScienceComputer Science (R0)