Abstract

Most Convolutional Neural Networks make use of subsampling layers to reduce dimensionality and keep only the most essential information, besides making the model more robust to rotation and translation variations. One of the most common sampling methods, known as max-pooling, keeps only the maximum value in a given region. In this study, we provide evidence that, by removing this subsampling layer and changing the stride of the convolution layer, one can obtain comparable results much faster. Results on the gait recognition task show the robustness of the proposed approach, as well as its statistical similarity to other pooling methods.

João Paulo Papa–The authors acknowledge FAPESP grants 2013/07375-0, 2014/12236-1, 2016/06441-7, and 2017/25908-6, CNPq grants 429003/2018-8, 427968/2018-6 and 307066/2017-7, as well as Petrobras research grant 2017/00285-6.


1 Introduction

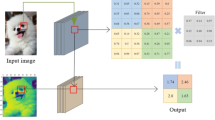

Sub-sampling layers, known as pooling, perform two essential tasks in Convolutional Neural Networks (CNN): (i) to reduce the number of parameters, thus decreasing the computational cost for training and inference; and (ii) to hold a certain degree of spatial invariance by keeping the most relevant information. Deep learning techniques have achieved state-of-the-art results on image processing tasks since 2010. The top results of image classification and localization competitions, such as the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) [22] and COCO (Common Objects in Context) [15], are mostly obtained by such neural models. Inception-V4 [24] and ResNet [6], for instance, achieved outstanding results in image classification tasks. Their basic structure has been used in several other works by adopting transfer learning techniques [3, 4, 11, 20].

However, a considerable drawback of these networks concerns the computational cost for both training and inference, taking several days (or even weeks) to achieve the desired results. Therefore, any gain in speed is always welcome in such models. This work aims to introduce a more efficient way to reduce the number of parameters while still keeping the spatial invariance expected in CNN-based models. The idea is to replace pooling layers by 2D convolutions with a stride of two. Such a modification keeps the average accuracy in different networks, with a boost in both training and inference time.

The remainder of this work is organized as follows: Sect. 2 describes several types of sub-sampling approaches, and Sect. 3 presents the proposed approach. Sections 4 and 5 discuss the methodology and the experiments, respectively. Finally, Sect. 6 states conclusions and future works (see Note 1).

2 Related Works

Convolutional Neural Networks were designed based on the human visual cortex [13]. In short, such a brain region has two main types of cells: (i) simple cells, which are computationally emulated by the CNN kernels; and (ii) complex cells, which can be found in the primary visual cortex [7], the secondary visual cortex, and Brodmann area 19 of the human brain [9]. The former cells are located in the primary visual cortex, and such structures respond mainly to edges and bars [8]. The latter cells respond both to edges and gratings, like a simple cell, but also exhibit spatial invariance. It means that such cells react to light patterns of a given orientation anywhere within a large receptive field.

Based on this biological information, LeCun et al. [13] developed the first successful CNN model. Its structure consists of a total of seven layers: two pairs of convolutions followed by an average pooling, two multi-layer perceptron layers, and a final layer responsible for classification. In other words, CNNs have used pooling since their inception.

Max-pooling was first proposed in 2011 [17] as a solution for gesture recognition problems. Since then, several works have claimed that such an operation is the best sub-sampling rule for a CNN. However, some other rules, such as Global Average Pooling [14], may also be applied in other circumstances: in this specific case, it was designed to replace a multi-layer perceptron network in the final layers of a CNN, since it tries to impose correspondences between feature maps and categories. Another sub-sampling approach is the concatenation of the output of max-pooling with that of a convolution of stride two. The work of Romera et al. [21], for instance, aimed at performing real-time pixel-level segmentation using such a paradigm, achieving near state-of-the-art segmentation results.
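To make the Global Average Pooling rule concrete, the following minimal sketch collapses each feature map into a single value, so that a network with one feature map per category can feed these values directly to a softmax. The function name `global_average_pool` and the toy feature maps are illustrative assumptions, not part of any cited implementation.

```python
def global_average_pool(feature_maps):
    """Collapse each 2D feature map into its mean value (one scalar per channel)."""
    pooled = []
    for fmap in feature_maps:
        values = [v for row in fmap for v in row]
        pooled.append(sum(values) / len(values))
    return pooled


# Two toy 2x2 feature maps (made-up values, for illustration only).
maps = [
    [[1.0, 2.0], [3.0, 4.0]],
    [[0.0, 0.0], [0.0, 8.0]],
]
channel_scores = global_average_pool(maps)
print(channel_scores)
```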

Sometimes, data sub-sampling is not desired because spatial information is quite important, and any loss could affect the results. DeepMind claims, in its reinforcement learning work [16], that any kind of pooling could remove relevant spatial information in several games, so the CNN used in their work consists only of convolutional and perceptron layers. Therefore, such arguments suggest it may be necessary to develop new pooling techniques in order to improve results on several problems.

In this work, we propose a variant of GEINet [23], a deep network for the problem of gait recognition, that does not contain any pooling layer. Besides, we also show that removing such a layer can provide satisfactory results considerably faster.

3 Proposed Approach

The main goal of this work is to find out the best neural structure in order to perform gait recognition successfully. Proposed by Han and Bhanu [5], the Gait Energy Image (GEI) approach can be used to classify or identify a given individual. Such a technique consists of averaging pictures of a person performing a given activity, such as walking or jogging. Roughly speaking, it can be understood as a heatmap indicating the most frequent positions assumed by a person. Figure 1 depicts some examples of images generated by the GEI approach.

Fig. 1. Example of a GEI image for three different people. Image extracted from the “OU-ISIR Gait Database, Large Population Dataset (OULP)” [10].

State-of-the-art GEI classification results were achieved by Shiraga et al. [23], who proposed three other architectures to identify people from their gait images. The original network is straightforward, consisting of two blocks with a convolutional step (18 \(7\,\times \,7\) and 45 \(5\,\times \,5\) kernels), a \(2\,\times \,2\) max-pooling, as well as local response normalization [12]. The convolutions are followed by two fully-connected layers of sizes 1,024 and 956 (number of classes). All layer outputs are activated with ReLU, except for the last one, which is activated with the well-known softmax function.

In this paper, we consider three architectures for comparison purposes:

1. A re-trained GEINet structure composed of two sets of layers of convolution, pooling, and Local Response Normalization (LRN) [12]. Such layers are then followed by two multilayer perceptrons and finally by a softmax for baseline purposes;

2. A similar model, but removing the pooling layer, and changing the convolution stride from one to two (GEINet no-pool); and

3. A third model based on the first one, but replacing the pooling layer with a convolution layer of stride two, acting as a dimensionality reducer. This model doubles the number of convolution layers in comparison to the other two (Double-conv).

Figure 2 depicts the architectures of the neural networks proposed in this work.
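The relation between the baseline and the no-pool variant can be illustrated with a minimal pure-Python sketch. It assumes unpadded ("valid") convolutions, which the text does not specify, and uses a toy \(8\,\times \,8\) input with a single \(3\,\times \,3\) kernel rather than the GEINet layers; the helper names `conv_output_size`, `conv2d`, and `max_pool_2x2` are hypothetical. A stride-two convolution evaluates only every other position of the stride-one output, so it yields the same spatial size as convolution followed by \(2\,\times \,2\) pooling while computing roughly a quarter of the responses.

```python
def conv_output_size(n, k, stride):
    """Output length of an unpadded convolution over n samples with kernel size k."""
    return (n - k) // stride + 1


def conv2d(image, kernel, stride=1):
    """'Valid' 2D cross-correlation (no padding) with a configurable stride."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = conv_output_size(len(image), kh, stride)
    out_w = conv_output_size(len(image[0]), kw, stride)
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            for a in range(kh):
                for b in range(kw):
                    acc += image[i * stride + a][j * stride + b] * kernel[a][b]
            row.append(acc)
        out.append(row)
    return out


def max_pool_2x2(fmap):
    """Non-overlapping 2x2 max-pooling (odd trailing rows/columns are dropped)."""
    return [
        [max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
         for j in range(0, len(fmap[0]) - 1, 2)]
        for i in range(0, len(fmap) - 1, 2)
    ]


# Toy input and kernel (illustrative values only).
image = [[float((r * 8 + c) % 7) for c in range(8)] for r in range(8)]
kernel = [[1.0, 0.0, -1.0] for _ in range(3)]

dense = conv2d(image, kernel, stride=1)    # baseline convolution: 6x6
pooled = max_pool_2x2(dense)               # convolution + pooling: 3x3
strided = conv2d(image, kernel, stride=2)  # no-pool variant: 3x3

# The strided output is the dense output sampled at every other position.
subsampled = [row[::2] for row in dense[::2]]
print(len(pooled), len(strided), strided == subsampled)

# Same reasoning for a 64x44 input and a 7x7 kernel (padding assumed absent).
print(conv_output_size(64, 7, 1) // 2, conv_output_size(64, 7, 2))
```

Under these assumptions, both paths halve each spatial dimension; the difference is that pooling keeps the maximum of each \(2\,\times \,2\) window, whereas the strided convolution simply skips three of the four positions.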

We followed the protocol described by Shiraga et al. [23] to construct the energy images, which consists of taking four consecutive silhouette masks from a video and computing their pixel-wise average.
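The pixel-wise averaging step can be sketched as follows. This is a minimal illustration on nested lists with tiny made-up masks, assuming binary silhouettes of equal size; the function name `gait_energy_image` is hypothetical and the code is not taken from the released implementation.

```python
def gait_energy_image(silhouettes):
    """Pixel-wise average of a list of equally sized binary silhouette masks."""
    n = len(silhouettes)
    height, width = len(silhouettes[0]), len(silhouettes[0][0])
    return [
        [sum(mask[r][c] for mask in silhouettes) / n for c in range(width)]
        for r in range(height)
    ]


# Four consecutive toy 2x3 masks (1 = silhouette pixel, 0 = background).
masks = [
    [[0, 1, 0], [1, 1, 0]],
    [[0, 1, 0], [0, 1, 0]],
    [[0, 1, 1], [0, 1, 0]],
    [[0, 1, 0], [0, 1, 1]],
]
gei = gait_energy_image(masks)
print(gei)
```

Pixels that belong to the silhouette in every frame reach 1.0, while pixels covered only occasionally receive intermediate values, which gives the heatmap-like appearance described above.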

4 Methodology

In this section, we describe the methodology employed to validate the robustness of the proposed approach. The equipment used in the paper was an Intel Xeon Bronze® 3104 CPU with 6 cores (12 threads), 1.70 GHz, 96 GB RAM at 2666 MHz, and an Nvidia Tesla P4 GPU with 8 GB. The MXNet framework [1] was used to implement the neural network architectures. The data set, the models, and the evaluation protocol are described in more detail in the following subsections.

4.1 Data Set

We considered the “OU-ISIR Gait Database, Large Population Dataset (OULP)” [10], which consists of silhouettes from 3,961 people of several ages, sizes, and genders, walking in a controlled environment. Data have been collected since March 2009 through outreach activity events in Japan and recorded at 30 frames per second, from four different angles: 55, 65, 75 and 85 degrees. The original images have a resolution of \(640\,\times \,480\) pixels, but the silhouettes were further cropped, originating another set of images with a resolution of \(88\,\times \,128\) pixels. In this work, we resized the images to a resolution of \(44\,\times \,64\) pixels to reduce the computational load.

4.2 Evaluation Protocol

We performed the cross-validation protocol described by Iwama et al. [10]. The dataset is divided into five subgroups of 1,912 people each, and each subset i is further divided into two equal parts of 956 individuals, hereinafter called \(g_{i1}\) and \(g_{i2}\), respectively, \(\forall i=1,2,\ldots ,5\). The former group (\(g_{i1}\)) is used for feature extraction purposes using the proposed approaches and baseline, and the latter set (\(g_{i2}\)) is employed for the classification step. Each subset is further divided in half, i.e., \(g_{i1}=g^T_{i1}\cup g^V_{i1}\) and \(g_{i2}=g^T_{i2}\cup g^V_{i2}\), where \(g^T_{ij}\) and \(g^V_{ij}\) stand for training and validation sets, respectively, \(\forall j=1,2\). In this work, we opted to use two fast and parameterless techniques for the classification step: the well-known nearest neighbor (NN) [2] and the Optimum-Path Forest (OPF) [18, 19] (see Note 2). Figure 3 depicts the aforementioned protocol.

Fig. 3. Protocol adopted in the work, as described by Iwama et al. [10]. The dataset is divided into five parts, and each part is further subdivided twice: one half is used for feature learning and the other for classification. Then the parts are switched, so that there are a total of 10 evaluation steps.
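The bookkeeping of this protocol can be sketched as below. How the five subgroups are drawn is not detailed in the text, so the sketch only shows the splitting of a single subgroup and the enumeration of the ten evaluation steps; the integer subject identifiers, the seeded shuffle, and the names `split_in_half` and `make_fold` are assumptions made for illustration.

```python
import random


def split_in_half(items):
    """Split a list into two halves of (nearly) equal size."""
    mid = len(items) // 2
    return items[:mid], items[mid:]


def make_fold(subject_ids, seed):
    """Shuffle one subgroup and split it into g_i1 / g_i2, each with T and V halves."""
    ids = list(subject_ids)
    random.Random(seed).shuffle(ids)  # seeded shuffle: an assumption for reproducibility
    g1, g2 = split_in_half(ids)
    g1_train, g1_val = split_in_half(g1)
    g2_train, g2_val = split_in_half(g2)
    return {
        "g1": {"T": g1_train, "V": g1_val},
        "g2": {"T": g2_train, "V": g2_val},
    }


# One subgroup of 1,912 placeholder subject identifiers.
fold = make_fold(range(1912), seed=0)

# Each subgroup is used twice, switching the roles of its two parts.
steps = []
for i in range(1, 6):
    for feature_part, classifier_part in (("g1", "g2"), ("g2", "g1")):
        steps.append((i, feature_part, classifier_part))

print(len(fold["g1"]["T"]), len(fold["g2"]["V"]), len(steps))
```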

As mentioned earlier, the dataset provides four camera angles: 55\(^{\circ }\), 65\(^{\circ }\), 75\(^{\circ }\), and 85\(^{\circ }\). Therefore, we opted to use a cross-angle methodology, i.e., we used a given angle for training purposes and all angles to evaluate the models. Each video contains between 15 and 45 frames, but we used only 4 to build the gait energy images (see Note 3). To train the neural networks, in each batch iteration, we selected four random contiguous frames. For evaluation purposes, we divided the videos into consecutive non-overlapping clips and classified each of them. The final prediction is the mode of all predictions in the sequence.
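The frame selection and the voting rule described above can be sketched as follows. The handling of leftover frames at the end of a video and the tie-breaking rule of the mode are not stated in the text, so both are assumptions here, as are the helper names.

```python
import random
from collections import Counter

CLIP_LEN = 4  # number of frames averaged into one gait energy image


def random_training_clip(frames, rng):
    """Pick CLIP_LEN random contiguous frames, as done in each training iteration."""
    start = rng.randrange(len(frames) - CLIP_LEN + 1)
    return frames[start:start + CLIP_LEN]


def evaluation_clips(frames):
    """Consecutive non-overlapping clips; leftover frames are dropped (assumption)."""
    last_start = len(frames) - CLIP_LEN
    return [frames[s:s + CLIP_LEN] for s in range(0, last_start + 1, CLIP_LEN)]


def video_prediction(clip_predictions):
    """Mode of the per-clip predictions; ties go to the label seen first (assumption)."""
    return Counter(clip_predictions).most_common(1)[0][0]


frames = list(range(15))  # placeholder frame indices of a 15-frame video
clips = evaluation_clips(frames)
train_clip = random_training_clip(frames, random.Random(0))
print(len(clips), clips[-1], video_prediction([7, 3, 7]))
```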

Since the networks are trained with a single video from each subject, we employed data augmentation to improve training diversity. For this purpose, we employed four image transformations, each with a \(50\%\) chance of occurring independently: horizontal flip, Gaussian noise with zero mean and standard deviation of 0.02, as well as random vertical and horizontal black stripes of width 3. Additionally, the random temporal cropping step functions as augmentation. Lastly, due to the low number of videos and the high number of possible variations in the augmentation step, we trained the networks for 12,500 epochs. In addition, we considered three measurements: (i) training and (ii) classification times, and (iii) accuracy. Notice we used the Wilcoxon signed-rank test [25] for the statistical analysis of each measurement.
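A minimal sketch of such an augmentation pipeline is given below, operating on an image stored as nested lists with pixel values in [0, 1]. Several details are assumptions not stated in the text: one stripe per direction, uniformly random stripe positions, and no clipping after the noise is added. The function name `augment` and its parameters are hypothetical.

```python
import random


def augment(image, rng, p=0.5, sigma=0.02, stripe_width=3):
    """Apply each of the four transformations independently with probability p."""
    img = [row[:] for row in image]  # work on a copy
    height, width = len(img), len(img[0])
    if rng.random() < p:  # horizontal flip
        img = [row[::-1] for row in img]
    if rng.random() < p:  # additive zero-mean Gaussian noise
        img = [[v + rng.gauss(0.0, sigma) for v in row] for row in img]
    if rng.random() < p:  # vertical black stripe (assumed: a single stripe)
        x0 = rng.randrange(width - stripe_width + 1)
        for row in img:
            row[x0:x0 + stripe_width] = [0.0] * stripe_width
    if rng.random() < p:  # horizontal black stripe (assumed: a single stripe)
        y0 = rng.randrange(height - stripe_width + 1)
        for y in range(y0, y0 + stripe_width):
            img[y] = [0.0] * width
    return img


# A blank 64-row by 44-column image, matching the resized resolution.
blank = [[1.0] * 44 for _ in range(64)]
augmented = augment(blank, random.Random(0))
print(len(augmented), len(augmented[0]))
```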

5 Results

In this section, we present the experimental results and discussion. We show that replacing the pooling layer by a larger convolutional stride is sufficient to obtain a good trade-off between computational load and accuracy. As mentioned before, in this paper we evaluated three models and compared their performance.

5.1 Accuracy

We evaluated how the models perform when predicting with different camera angles. Both the network and the classifier were trained on a single camera angle, whereas the predictions cover gaits from all four viewpoints. Tables 1 and 2 present the accuracy results using NN and OPF, respectively. The results concern the average over all five folds, as described in Sect. 4.2. It is worth noticing that the closer the test angle is to 90\(^{\circ }\), i.e., when the camera records the actor from the side view, the better the overall accuracy is. As expected, the accuracies tend to be higher when the train and test angles are the same.

When replacing the pooling layer from GEINet by a stride in its convolution layer, the accuracy results go down marginally, by around \(1\%\). The Wilcoxon test returned a p-value of around \(10^{-7}\) for this comparison, which, under the usual significance levels, suggests that this small drop is systematic rather than due to chance. Replacing the pooling step with a new convolutional layer of stride 2 yields slightly better results, but still not better than the original model. In this case, the Wilcoxon test returned a p-value of 0.102, indicating that the two distributions might be similar.
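For readers unfamiliar with the test, the sketch below computes a two-sided Wilcoxon signed-rank test on paired measurements using the normal approximation. The text does not say which implementation was used (SciPy's `scipy.stats.wilcoxon` is a common choice), so this pure-Python version is only an illustration; it applies no tie or continuity correction, and the sample values are synthetic, not the paper's results.

```python
import math


def wilcoxon_signed_rank(x, y):
    """Two-sided Wilcoxon signed-rank test (normal approximation).

    Zero differences are discarded and tied absolute differences share their
    average rank. No tie or continuity correction is applied, so the p-value
    is only approximate, especially for small samples.
    """
    diffs = [a - b for a, b in zip(x, y) if a != b]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # average of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    statistic = min(w_plus, w_minus)
    mean = n * (n + 1) / 4
    std = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (statistic - mean) / std
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail of N(0, 1)
    return statistic, p_value


# Synthetic paired samples: x is always larger than y.
x = [10, 12, 14, 16, 18, 20, 22, 24, 26, 28]
y = [9, 10, 11, 12, 13, 14, 15, 16, 17, 18]
stat_shifted, p_shifted = wilcoxon_signed_rank(x, y)

# Synthetic paired samples whose differences are balanced around zero.
stat_balanced, p_balanced = wilcoxon_signed_rank([1, 2, 3, 4], [2, 1, 4, 3])
print(stat_shifted, p_shifted, stat_balanced, p_balanced)
```

A small p-value rejects the hypothesis that the paired differences are symmetric around zero, whereas a large one means the data give no evidence of a systematic difference.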

5.2 Execution Time

Since the protocol employed in this paper establishes ten runs, and the models were trained once for each angle, each model has 40 time measurements. Therefore, all results presented in this section correspond to the average of all runs.

Table 3 presents the network training and inference times. Although the non-pooling model achieved slightly lower accuracies than the original one, its training time is considerably lower. The reduction from 3,753 to 3,322 seconds corresponds to a gain of \(11.5\%\), while such gain was \(8.3\%\) for inference purposes.
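The reported training gain follows directly from the two averages quoted above, as the short check below shows; the helper name `relative_gain` is an illustrative assumption. The inference times are not quoted in the text, so only the training figure is reproduced.

```python
def relative_gain(baseline, new):
    """Percentage reduction of `new` with respect to `baseline`."""
    return 100.0 * (baseline - new) / baseline


training_gain = relative_gain(3753, 3322)
print(f"{training_gain:.1f}%")
```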

6 Conclusion and Future Works

In this work, we introduced two variants of a simple but efficient model for gait recognition purposes (GEINet): one replaces the pooling layers by a convolutional stride (GEINet no-pool), and the other replaces the pooling layers by a convolutional layer with stride (double-conv). We showed the non-pooling version achieved slightly smaller accuracies than GEINet, but with a considerable speed-up (11.5%). On the other hand, the double-conv model ran 6.3% slower without any perceptible gain in accuracy. Regarding future works, we intend to use GEI to identify people directly from the video streams. Besides, different activation functions shall be investigated too.

Notes

1. The source code is available at https://github.com/thierrypin/gei-pool.

2. We used the Python OPF implementation available at https://github.com/marcoscleison/PyOPF.

3. We observed that only four images were enough to obtain a reasonable energy image.

References

1. Chen, T., et al.: Mxnet: A flexible and efficient machine learning library for heterogeneous distributed systems. CoRR abs/1512.01274 (2015). http://arxiv.org/abs/1512.01274

2. Cover, T.M., Hart, P.E., et al.: Nearest neighbor pattern classification. IEEE Trans. Inf. Theory 13(1), 21–27 (1967)

3. Esteva, A., et al.: Dermatologist-level classification of skin cancer with deep neural networks. Nature 542(7639), 115 (2017)

4. Habibzadeh, M., Jannesari, M., Rezaei, Z., Baharvand, H., Totonchi, M.: Automatic white blood cell classification using pre-trained deep learning models: Resnet and inception. In: Tenth International Conference on Machine Vision (ICMV 2017), International Society for Optics and Photonics, vol. 10696, p. 1069612 (2018)

5. Han, J., Bhanu, B.: Individual recognition using gait energy image. IEEE Trans. Pattern Anal. Mach. Intell. 28(2), 316–322 (2006)

6. He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. CoRR abs/1512.03385 (2015). http://arxiv.org/abs/1512.03385

7. Hubel, D., Wiesel, T.: Receptive fields, binocular interaction, and functional architecture in the cat’s visual cortex. J. Physiol. 160, 106–154 (1962)

8. Hubel, D.H., Wiesel, T.N.: Receptive fields of single neurons in the cat’s striate cortex. J. Physiol. 148, 574–591 (1959)

9. Hubel, D.H., Wiesel, T.N.: Receptive fields and functional architecture in two nonstriate visual areas (18 and 19) of the cat. J. Neurophysiol. 28(2), 229–289 (1965)

10. Iwama, H., Okumura, M., Makihara, Y., Yagi, Y.: The ou-isir gait database comprising the large population dataset and performance evaluation of gait recognition. IEEE Trans. Inf. Forensics Secur. 7(5), 1511–1521 (2012)

11. Kong, B., Wang, X., Li, Z., Song, Q., Zhang, S.: Cancer metastasis detection via spatially structured deep network. In: Niethammer, M., Styner, M., Aylward, S., Zhu, H., Oguz, I., Yap, P.-T., Shen, D. (eds.) IPMI 2017. LNCS, vol. 10265, pp. 236–248. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-59050-9_19

12. Krizhevsky, A., Sutskever, I., Hinton, G.E.: Imagenet classification with deep convolutional neural networks. In: Advances in Neural Information Processing Systems, pp. 1097–1105 (2012)

13. LeCun, Y., Bottou, L., Bengio, Y., Haffner, P.: Gradient-based learning applied to document recognition. Proc. IEEE 86(11), 2278–2324 (1998)

14. Lin, M., Chen, Q., Yan, S.: Network in network. CoRR abs/1312.4400 (2013). http://arxiv.org/abs/1312.4400

15. Lin, T., et al.: Microsoft COCO: common objects in context. CoRR abs/1405.0312 (2014). http://arxiv.org/abs/1405.0312

16. Mnih, V., et al.: Human-level control through deep reinforcement learning. Nature 518(7540), 529–533 (2015). https://doi.org/10.1038/nature14236

17. Nagi, J., et al.: Max-pooling convolutional neural networks for vision-based hand gesture recognition. In: 2011 IEEE International Conference on Signal and Image Processing Applications (ICSIPA), pp. 342–347. IEEE (2011)

18. Papa, J.P., Falcão, A.X., Suzuki, C.T.N.: Supervised pattern classification based on optimum-path forest. Int. J. Imaging Syst. Technol. 19(2), 120–131 (2009). https://doi.org/10.1002/ima.v19:2

19. Papa, J.P., Falcão, A.X., Albuquerque, V.H.C., Tavares, J.M.R.S.: Efficient supervised optimum-path forest classification for large datasets. Pattern Recogn. 45(1), 512–520 (2012)

20. Rakhlin, A., Shvets, A., Iglovikov, V., Kalinin, A.A.: Deep convolutional neural networks for breast cancer histology image analysis. In: Campilho, A., Karray, F., ter Haar Romeny, B. (eds.) ICIAR 2018. LNCS, vol. 10882, pp. 737–744. Springer, Cham (2018). https://doi.org/10.1007/978-3-319-93000-8_83

21. Romera, E., Alvarez, J.M., Bergasa, L.M., Arroyo, R.: Efficient convnet for real-time semantic segmentation. In: IEEE Intelligent Vehicles Symposium (IV), pp. 1789–1794 (2017)

22. Russakovsky, O., et al.: ImageNet large scale visual recognition challenge. Int. J. Comput. Vis. (IJCV) 115(3), 211–252 (2015). https://doi.org/10.1007/s11263-015-0816-y

23. Shiraga, K., Makihara, Y., Muramatsu, D., Echigo, T., Yagi, Y.: Geinet: view-invariant gait recognition using a convolutional neural network. In: 2016 International Conference on Biometrics (ICB), pp. 1–8. IEEE (2016)

24. Szegedy, C., Ioffe, S., Vanhoucke, V.: Inception-v4, inception-resnet and the impact of residual connections on learning. CoRR abs/1602.07261 (2016). http://arxiv.org/abs/1602.07261

25. Wilcoxon, F.: Individual comparisons by ranking methods. Biom. Bull. 1(6), 80–83 (1945)


Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

dos Santos, C.F.G., Moreira, T.P., Colombo, D., Papa, J.P. (2019). Does Pooling Really Matter? An Evaluation on Gait Recognition. In: Nyström, I., Hernández Heredia, Y., Milián Núñez, V. (eds) Progress in Pattern Recognition, Image Analysis, Computer Vision, and Applications. CIARP 2019. Lecture Notes in Computer Science(), vol 11896. Springer, Cham. https://doi.org/10.1007/978-3-030-33904-3_71


DOI: https://doi.org/10.1007/978-3-030-33904-3_71


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-33903-6

Online ISBN: 978-3-030-33904-3

eBook Packages: Computer Science (R0)