Abstract

Deep-learning-based object detection methods have achieved remarkable results in recent years. However, because the objects in the Chinese Traditional Costume Images (CTCI-4) data set are smaller than those in natural images and training samples are scarce, previous state-of-the-art detectors do not perform well on it. To tackle this issue, and inspired mainly by GRP-DSOD, we propose an effective network, GRP-DSOD++, to detect objects in the CTCI-4 data set. To collect multi-scale context information and capture a wider range of features, we introduce a Dilated-Inception module (DI module) and apply it to an object detection framework that is trained from scratch. We also incorporate advanced components from several other strong object detectors into the proposed architecture. On the CTCI-4 data set the proposed detector achieves 77.08% mAP, higher than the GRP-DSOD detector (75.33% mAP). Trained on VOC “07+12” trainval, the detector also performs well on PASCAL VOC2007.

1 Introduction

Deep convolutional neural networks (CNNs) currently achieve outstanding results in computer vision tasks such as image classification [1,2,3,4], object detection [5,6,7,8,9,10,11,12,13] and image segmentation [14,15,16,17,18,19]. CNN-based object detection methods can be divided into two-stage and one-stage approaches.

A two-stage approach first generates region proposals and then classifies these candidate regions. Typical representatives are the R-CNN family: R-CNN [5], Fast R-CNN [6], Faster R-CNN [7] and R-FCN [8]. A one-stage approach needs no region proposal stage and directly predicts the category probabilities and position coordinates of objects. Examples include YOLO [9], SSD [10], DSOD [11], GRP-DSOD [12] and RetinaNet [13].

To achieve good performance, most advanced object detection frameworks fine-tune models pre-trained on ImageNet, and many such deep models are publicly available. Fine-tuning from public pre-trained models requires less training data than learning object detectors from scratch, and it also yields the final model in less training time. However, detection systems that directly adopt pre-trained networks have little flexibility to adjust the network structure. Some object detectors, such as DSOD [11], GRP-DSOD [12] and RetinaNet [13], can be trained from scratch, so their network structures can be designed flexibly according to application requirements or computing platforms. Learning object detection networks directly, without pre-trained models, eliminates the learning bias caused by differences in both the loss functions and the category distributions between classification and detection tasks. Moreover, training object detectors from scratch eliminates the mismatch between the source and target data sets.

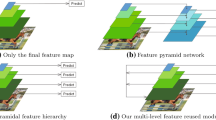

DSOD follows the single-shot detection (SSD) framework and is the first framework that can train object detectors from scratch with outstanding performance, even with limited training data. GRP-DSOD extends DSOD with a new network architecture that adaptively recalibrates the supervision intensities of the prediction layers based on input object sizes. Inspired by DSOD and GRP-DSOD, this paper proposes the Gated Recurrent Feature Pyramid DSOD++ (GRP-DSOD++) framework.

We propose a Dilated-Inception module (DI module) that combines the merits of dilated convolution and the Inception structure. The DI module extracts information over multiple ranges for the prediction layers; we describe it in detail in Sect. 3.2. An SE block [4] is also applied in an early layer of our network, as illustrated in Fig. 5. Last but not least, we introduce a balanced confidence loss into the objective loss function proposed for the SSD network [10] to keep positive and negative samples in balance; details are given in Sect. 3.4. We incorporate the DI module, the SE block and the new loss function into GRP-DSOD and call the resulting network GRP-DSOD++. It achieves new state-of-the-art results on our data set of Chinese traditional costume images.

To summarize, our main contributions are as follows:

(1) We propose a Dilated-Inception module that aggregates long-range information at multiple scales.

(2) Within the GRP-DSOD framework, we add a Dilated-Inception module, introduce an SE block, and replace standard convolution with dilated (atrous) convolution in some convolution layers. In addition, we introduce a weighting factor into the confidence loss function to balance positive and negative samples.

(3) We apply the GRP-DSOD++ network to object detection in Chinese traditional costume images, where it achieves higher accuracy than other strong object detection networks.

2 Related Work

Classic Object Detectors:

Classic object detection methods extract hand-crafted features such as LBP [20, 21], Haar [22], HOG [23], HSC [24] and SIFT [25] in each sliding window, and then apply an SVM [26], a cascade classifier [27] or another classifier for recognition. HOG [23] was a landmark in pedestrian detection; the HOG-based deformable part model (DPM) later proposed by Felzenszwalb et al. [28] achieved the best detection performance on PASCAL [29] for several years. The sliding-window approach was the leading detection paradigm in classic computer vision. Since the resurgence of deep learning, two-stage detectors have held a dominant position in modern object detection.

Two-Stage Detectors:

Two-stage detectors first generate a sparse set of candidate proposals and then classify them with a high-quality classifier. Selective Search [30] is a class-independent, data-driven strategy that combines the intuitions of segmentation and exhaustive search and generates a small set of high-quality object locations for recognition. R-CNN [5] extracts convolutional features from each Selective Search region proposal and then classifies each proposal with class-specific linear SVMs. The Fast R-CNN [6] network takes as input a full image and a set of regions of interest (RoIs). It computes the convolutional features of the whole image once and obtains the features of each RoI by projecting the RoI onto the feature map, so the detector is faster than R-CNN in both training and testing. Faster R-CNN [7] introduces a Region Proposal Network (RPN) that shares full-image convolutional features with the detection network, so the cost of computing proposals is nearly negligible; the RPN generates high-quality RoIs that Fast R-CNN then uses for detection. R-FCN [8] develops position-sensitive score maps that achieve higher localization accuracy than previous region-based detectors such as Fast/Faster R-CNN. Moreover, because R-FCN is fully convolutional, it is both more accurate and more efficient.

One-Stage Detectors:

YOLO [9] is a single neural network that predicts object positions and classes directly from full images in one evaluation. YOLO is extremely fast but comparatively inaccurate. SSD [10] combines the virtues of YOLO and Faster R-CNN, offering both speed and accuracy. The default boxes in SSD are defined similarly to the anchors of the RPN. At test time, SSD generates per-class scores for each default box and adjusts the box coordinates to better fit the objects.
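To make the notion of default boxes concrete, here is a minimal sketch of the scale and aspect-ratio scheme described in the SSD paper [10]: prediction layer k of m gets scale \( s_k = s_{min} + (s_{max} - s_{min})(k-1)/(m-1) \), and each feature-map cell receives boxes of width \( s_k\sqrt{a} \) and height \( s_k/\sqrt{a} \) for each aspect ratio a, plus one extra square box of scale \( \sqrt{s_k s_{k+1}} \). The values \( s_{min} = 0.2 \) and \( s_{max} = 0.9 \) come from the SSD paper; the reduced aspect-ratio set, the function names and the choice \( s_{m+1} = 1 \) for the last layer are our own illustrative assumptions, and DSOD-style detectors may configure per-layer box sizes differently.

```python
import math

def default_box_scales(m, s_min=0.2, s_max=0.9):
    """Scale s_k of the default boxes for each of m prediction layers (k = 1..m)."""
    return [s_min + (s_max - s_min) * (k - 1) / (m - 1) for k in range(1, m + 1)]

def default_boxes(feature_size, k, scales, aspect_ratios=(1.0, 2.0, 0.5)):
    """Default boxes (cx, cy, w, h), in relative [0, 1] coordinates, for layer k (1-based)."""
    s_k = scales[k - 1]
    # Hypothetical choice: use 1.0 as s_{m+1} for the extra box of the last layer.
    s_next = scales[k] if k < len(scales) else 1.0
    shapes = [(s_k * math.sqrt(a), s_k / math.sqrt(a)) for a in aspect_ratios]
    extra = math.sqrt(s_k * s_next)          # extra square box for aspect ratio 1
    shapes.append((extra, extra))
    boxes = []
    for i in range(feature_size):            # rows of the feature map
        for j in range(feature_size):        # columns of the feature map
            cx, cy = (j + 0.5) / feature_size, (i + 0.5) / feature_size
            boxes.extend((cx, cy, w, h) for w, h in shapes)
    return boxes

scales = default_box_scales(6)
print([round(s, 2) for s in scales])   # [0.2, 0.34, 0.48, 0.62, 0.76, 0.9]
boxes = default_boxes(feature_size=3, k=6, scales=scales)
print(len(boxes))                      # 3 x 3 cells x 4 shapes = 36
```

Coarser feature maps get larger scales, so each prediction layer specializes in a range of object sizes.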

Earlier one-stage detectors had an obvious advantage in speed, but their precision was inferior to that of two-stage detectors. Not until DSOD [11] did a one-stage detector achieve accuracy comparable to many two-stage detectors while keeping real-time detection speed. GRP-DSOD [12] extends DSOD and borrows the gating mechanism of SENets [4] to achieve adaptive feature recalibration; it outperforms many advanced two-stage detection methods. RetinaNet [13] addresses class imbalance with a simple and highly effective focal loss. DSOD, GRP-DSOD and RetinaNet can be learned from scratch on a small data set and achieve high precision. Our method further develops the GRP-DSOD architecture and incorporates several effective components of other modern detectors.

Dilated Convolution:

Fisher Yu et al. [14] proposed a dilated convolution module for semantic segmentation that preserves more detail. The module expands the receptive field exponentially without losing resolution or adding parameters, and it also improves accuracy on image classification. DeepLab [15,16,17] likewise uses dilated convolution in its network structure and achieves good segmentation results. We apply dilated convolution in our detection framework, and our experiments show that it is also suitable for object detection.
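The exponential growth claimed for dilated convolution [14] can be checked with a short receptive-field calculation. The sketch below assumes a stack of stride-1 \( 3\times 3 \) layers, so resolution is preserved and every layer has the same nine weights per filter per input channel. Doubling the dilation at each layer gives a receptive field of \( (2^{i+2}-1)\times(2^{i+2}-1) \) after layer i (counting from 0), whereas standard convolution grows only linearly. The function name is hypothetical.

```python
def receptive_field(kernel_sizes, dilations):
    """Receptive field (one side, in input pixels) after each layer of a stride-1 stack."""
    rf = 1
    history = []
    for k, d in zip(kernel_sizes, dilations):
        # A k x k kernel with dilation d spans d * (k - 1) + 1 pixels, so each
        # stride-1 layer enlarges the receptive field by d * (k - 1).
        rf += d * (k - 1)
        history.append(rf)
    return history

# Dilations doubling per layer: exponential growth, 2^(i+2) - 1.
print(receptive_field([3] * 4, [1, 2, 4, 8]))   # [3, 7, 15, 31]
# The same number of standard 3x3 layers: linear growth.
print(receptive_field([3] * 4, [1, 1, 1, 1]))   # [3, 5, 7, 9]
```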

3 Method

In this section, we first review the recently proposed Gated Recurrent Feature Pyramid DSOD (GRP-DSOD). We then present the Dilated-Inception module (DI module), which combines the merits of dilated convolution and Inception, and briefly introduce the SE block of [4]. Next, we describe a balanced confidence loss. Finally, we describe the structure of GRP-DSOD++ in detail.

3.1 GRP-DSOD

The GRP-DSOD object detection framework is an extension of DSOD that can be trained directly without a pre-trained model and improves the detection accuracy of small objects. Its Recurrent Feature Pyramid structure compresses rich spatial and semantic features into a single prediction layer, further reducing the number of parameters to be learned. Whereas the DSOD model needs to learn half of the new features for each scale in the prediction layers, the GRP-DSOD model only needs to learn one third, so it converges faster. In addition, GRP-DSOD introduces a gated prediction strategy that adaptively strengthens or weakens the supervision on feature maps of different scales according to the size of the input object.

The GRP-DSOD++ detector proposed in this paper improves on GRP-DSOD and is described in detail below.

3.2 DI Module

The DI module combines the advantages of dilated convolution and the Inception structure. It aggregates long-range information at multiple scales while adding only a small amount of computation.

The Inception module in [2] uses different convolution kernel sizes (e.g. \( 3\times 3 \), \( 5\times 5 \)) to extract multi-scale information; its structure is shown in Fig. 1. Stacking different kernel sizes in parallel increases the width of the network and improves its adaptability to scale. The \( 1\times 1 \) convolutions reduce the depth (number of channels) of the feature maps, which reduces the cost of the subsequent convolutions.
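The saving from the \( 1\times 1 \) bottleneck can be illustrated with a back-of-the-envelope multiplication count for a single \( 5\times 5 \) branch. The feature-map size, channel counts and bottleneck width below are hypothetical values chosen for illustration, not the configuration of [2] or of GRP-DSOD++.

```python
def conv_mults(h, w, c_in, c_out, k):
    """Multiplications of a stride-1, 'same'-padded k x k convolution on an h x w map."""
    return h * w * c_in * c_out * k * k

H, W = 28, 28            # hypothetical feature-map size
C_IN, C_OUT = 256, 64    # hypothetical input/output channels of the 5x5 branch
REDUCE = 32              # hypothetical width of the 1x1 bottleneck

direct = conv_mults(H, W, C_IN, C_OUT, 5)
reduced = conv_mults(H, W, C_IN, REDUCE, 1) + conv_mults(H, W, REDUCE, C_OUT, 5)
print(direct, reduced, round(direct / reduced, 2))   # 321126400 46563328 6.9
```

With these numbers the bottleneck cuts the cost of the branch by roughly a factor of seven, because the expensive \( 5\times 5 \) kernel now sees 32 instead of 256 input channels.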

Dilated convolution with a sampling rate of \( r \) convolves the input with upsampled filters, which are produced by inserting \( r - 1 \) zeros between adjacent filter weights in the vertical and horizontal directions. Standard convolution is equivalent to dilated convolution with a sampling rate of 1. The receptive field of a filter can therefore be adjusted by changing the sampling rate: as shown in Fig. 2, the larger the sampling rate, the larger the receptive field of the filter on the input.

Fig. 2. Dilated convolution with kernel size \( 3\times 3 \) and different sampling rates. (a) Sampling rate 1 (standard convolution). (b) Sampling rate 6; the receptive field of the convolution kernel is \( 23\times 23 \). (c) Sampling rate 12; the receptive field of the convolution kernel is \( 47\times 47 \).
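The zero-insertion definition of dilated convolution can be checked directly: a dilated convolution with rate r gives the same output as a standard convolution with the zero-upsampled filter. The pure-Python sketch below assumes a single channel, "valid" padding and cross-correlation (as in most deep learning frameworks); the function names and the toy input are hypothetical. It measures only the span of one dilated kernel, \( r(k-1)+1 \), so its numbers are not meant to reproduce the receptive-field sizes quoted in the Fig. 2 caption.

```python
def dilated_conv2d(x, w, rate=1):
    """'Valid' 2-D dilated cross-correlation of a single-channel map x with kernel w.

    Tap (m, n) of the kernel reads x[i + rate*m][j + rate*n], so the kernel
    spans rate*(k-1)+1 pixels while keeping only k*k weights.
    """
    kh, kw = len(w), len(w[0])
    span_h, span_w = rate * (kh - 1) + 1, rate * (kw - 1) + 1
    out_h, out_w = len(x) - span_h + 1, len(x[0]) - span_w + 1
    return [[sum(w[m][n] * x[i + rate * m][j + rate * n]
                 for m in range(kh) for n in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

def upsample_filter(w, rate):
    """Insert rate-1 zeros between adjacent weights, vertically and horizontally."""
    kh, kw = len(w), len(w[0])
    up = [[0.0] * (rate * (kw - 1) + 1) for _ in range(rate * (kh - 1) + 1)]
    for m in range(kh):
        for n in range(kw):
            up[rate * m][rate * n] = w[m][n]
    return up

x = [[float(7 * i + j) for j in range(7)] for i in range(7)]   # toy 7x7 input
w = [[1.0, 0.0, -1.0], [2.0, 0.0, -2.0], [1.0, 0.0, -1.0]]      # toy 3x3 kernel

# Rate-2 dilated convolution == standard convolution with the zero-upsampled filter.
assert dilated_conv2d(x, w, rate=2) == dilated_conv2d(x, upsample_filter(w, 2), rate=1)
print(len(upsample_filter(w, 2)))   # 5: a rate-2 3x3 kernel spans 5x5 pixels
print(len(upsample_filter(w, 6)))   # 13: a rate-6 3x3 kernel spans 13x13 pixels
```

In practice frameworks implement the first form, which never materializes the zeros, so the cost stays at \( k\times k \) multiplications per output regardless of the rate.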

The DI module proposed in this paper can be regarded as an Inception module combined with dilated convolution. Its structure is shown in Fig. 3, where the convolution kernel size is \( 3\times 3 \) and the sampling rates are \( r = \left\{ {1,6,12} \right\} \). Because the DI module obtains multi-scale context information through dilated convolution rather than larger kernels, it captures longer-range information than the Inception module while requiring fewer parameters.
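The parameter and range argument can be made concrete by counting weights per branch. This sketch assumes that every branch maps the same hypothetical number of input channels to the same number of output channels, and it ignores the \( 1\times 1 \) reductions, pooling branch and biases of a full Inception module, so it compares kernel shapes only, not the exact modules of Fig. 1 and Fig. 3.

```python
def branch_stats(kernel, rate, c_in, c_out):
    """Weights (no bias) and spatial span of one k x k branch with the given dilation rate."""
    weights = c_in * c_out * kernel * kernel
    span = rate * (kernel - 1) + 1
    return weights, span

C_IN, C_OUT = 128, 64   # hypothetical channel counts, identical for every branch

inception = [(1, 1), (3, 1), (5, 1)]     # (kernel, rate): 1x1, 3x3 and 5x5 branches
di_module = [(3, 1), (3, 6), (3, 12)]    # 3x3 branches with r = {1, 6, 12}

for name, branches in (("Inception", inception), ("DI", di_module)):
    stats = [branch_stats(k, r, C_IN, C_OUT) for k, r in branches]
    total = sum(wt for wt, _ in stats)
    widest = max(sp for _, sp in stats)
    print(name, total, widest)
# Inception 286720 5
# DI 221184 25
```

Under these assumptions the three \( 3\times 3 \) dilated branches use fewer weights than a \( 1\times 1 \)/\( 3\times 3 \)/\( 5\times 5 \) set while their widest branch covers a \( 25\times 25 \) window instead of \( 5\times 5 \).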

3.3 SE Block

SENets [4] won first place in the ILSVRC 2017 classification competition. Inspired by SENets, we use an SE block in an early layer of our network to improve the expressive power of lower-level features. The SE block enhances channel features that are useful for later layers and suppresses less useful ones.

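The basic structure of an SE block is illustrated in Fig. 4. Let \( {\mathbf{U}} = \left[ {u_{1} ,u_{2} , \cdots ,u_{c} } \right] \in \mathbb{R}^{w \times h \times c} \) be a set of feature maps and \( {\tilde{\mathbf{U}}} \in \mathbb{R}^{w \times h \times c} \) the output of the SE block. An SE block can then be formulated as a squeeze operation \( F_{sq} \), an excitation operation \( F_{ex} \) and a channel-wise scaling \( F_{scale} \):

\[ \tilde{\mathbf{U}} = F_{scale}\left( \mathbf{U}, F_{ex}\left( F_{sq}(\mathbf{U}) \right) \right) \]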

The squeeze operation is a channel-wise global average pooling of the feature maps. Let \( s \in \mathbb{R}^{c} \) be the channel-wise descriptor obtained by squeezing U; its c-th element is computed as:

\[ s_{c} = \frac{1}{w \times h}\sum_{i = 1}^{w} \sum_{j = 1}^{h} u_{c}(i,j) \]

The excitation operation consists of two fully connected layers followed by a sigmoid activation:

\[ \mathbf{e} = \sigma\left( W_{2}\, \delta\left( W_{1} s \right) \right) \]

where \( \delta \) is the ReLU [31] function, \( \sigma \) is the sigmoid function, and \( W_{1} \in \mathbb{R}^{{\frac{c}{r} \times c}} \) and \( W_{2} \in \mathbb{R}^{{c \times \frac{c}{r}}} \) are the weights of the two fully connected layers (we set the reduction ratio \( r = 16 \) in this paper). The final output of the SE block is:

\[ \tilde{\mathbf{U}} = \mathbf{U} \otimes \sigma\left( W_{2}\, \delta\left( W_{1} s \right) \right) \]

where \( \otimes \) denotes channel-wise multiplication.
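The squeeze, excitation and scale steps described above translate into a few lines of code. The pure-Python sketch below follows the formulas as written, with bias-free fully connected layers; the toy weights and the reduction ratio r = 2 (instead of the r = 16 used in this paper) are hypothetical, chosen so the numbers are easy to follow.

```python
import math

def se_block(U, W1, W2):
    """Squeeze-and-excitation on U, a list of c feature maps (each a list of rows).

    W1 is a (c/r) x c matrix and W2 a c x (c/r) matrix; no biases, as in the text.
    Returns the recalibrated maps and the channel weights.
    """
    # Squeeze: channel-wise global average pooling gives s in R^c.
    s = [sum(map(sum, u)) / (len(u) * len(u[0])) for u in U]
    # Excitation: FC (W1) -> ReLU -> FC (W2) -> sigmoid.
    hidden = [max(0.0, sum(w * v for w, v in zip(row, s))) for row in W1]
    e = [1.0 / (1.0 + math.exp(-sum(w * v for w, v in zip(row, hidden)))) for row in W2]
    # Scale: multiply every element of channel c by e_c.
    U_tilde = [[[e_c * val for val in row] for row in u] for e_c, u in zip(e, U)]
    return U_tilde, e

# Toy example: c = 4 channels of size 2 x 2, reduction ratio r = 2.
U = [[[1.0, 1.0], [1.0, 1.0]],
     [[2.0, 2.0], [2.0, 2.0]],
     [[0.0, 0.0], [0.0, 0.0]],
     [[4.0, 0.0], [0.0, 0.0]]]
W1 = [[1.0, 0.0, 0.0, 0.0],
      [0.0, 1.0, 0.0, 0.0]]
W2 = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
U_tilde, e = se_block(U, W1, W2)
print([round(v, 3) for v in e])   # [0.5, 0.731, 0.881, 0.269]
```

Because the sigmoid keeps every channel weight in (0, 1), the block can only attenuate channels relative to each other; it never changes spatial structure within a channel.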

3.4 Balanced Confidence Loss

We improve the objective loss function of the SSD detection system [10] by introducing a weighting factor \( \beta \in [0,1] \) into the confidence loss to control the balance between the losses of positive and negative samples. In practice, \( \beta \) can be set by inverse class frequency or treated as a hyperparameter set by cross validation. We write the balanced confidence loss (b-conf) over the multi-class confidences \( c \) as:

where \( N \) is the number of matched default boxes, \( \hat{c}_{i}^{k} = \frac{{\exp (c_{i}^{k} )}}{{\sum\nolimits_{k'} {\exp (c_{i}^{k'} )} }} \) is the softmax confidence of category \( k \) for the \( i \)-th default box, and \( H_{ij}^{k} \in \left\{ {0,1} \right\} \) is an indicator whose value is 1 if the \( i \)-th default box is matched to the \( j \)-th ground truth box of category \( k \) and 0 otherwise.
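A minimal sketch of one plausible form of the balanced confidence loss follows. It assumes, by analogy with the α-balanced cross entropy of RetinaNet [13], that \( \beta \) multiplies the positive term and \( 1-\beta \) the negative term of SSD's softmax confidence loss, normalized by the number of matched boxes N; that placement of \( \beta \) is our assumption, and the function names are hypothetical.

```python
import math

def softmax(logits):
    """Numerically stable softmax over one default box's class logits."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [v / total for v in exps]

def balanced_conf_loss(confidences, labels, beta):
    """Hypothetical balanced confidence loss.

    confidences: per-default-box class logits, class 0 = background.
    labels: matched class per box (k > 0 for positives, 0 for the negatives
            kept after hard negative mining, as in SSD).
    ASSUMPTION: beta weights the positive term and (1 - beta) the negative term.
    """
    pos, neg, n_matched = 0.0, 0.0, 0
    for logits, k in zip(confidences, labels):
        p = softmax(logits)
        if k > 0:
            pos -= math.log(p[k])     # -log c_hat_i^k for a matched box
            n_matched += 1
        else:
            neg -= math.log(p[0])     # -log c_hat_i^0 for a background box
    if n_matched == 0:
        return 0.0                    # SSD likewise sets the loss to 0 when N = 0
    return (beta * pos + (1.0 - beta) * neg) / n_matched

loss = balanced_conf_loss([[0.0, 0.0], [2.0, 0.0]], labels=[1, 0], beta=0.999)
print(round(loss, 4))
```

With \( \beta = 0.5 \) this reduces to half of SSD's unweighted confidence loss, so \( \beta \) only shifts the relative weight of the two terms.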

3.5 GRP-DSOD++ Architecture

DI modules integrate information from different ranges and can be flexibly applied at any depth in an architecture. Due to GPU memory constraints, we apply only one DI module in the proposed network, as shown in Fig. 5. The DI module is placed between the first and second prediction layers, so that its features are used by all prediction layers except the first.

The SE block allows a network to perform feature recalibration: it learns to use global information to selectively emphasize informative features and suppress less useful ones. In this paper, the SE block is placed in an early layer of the proposed model to enhance the expressive power of lower-level features.

The score of an object category in a candidate bounding box is affected by contextual information. For example, if an image contains grassland, the objects in it are relatively likely to be flowers or animals and extremely unlikely to be office supplies. Therefore, we replace the standard convolutions in the last two Dense blocks of the network with dilated convolutions to capture a wider range of feature information for the second to sixth prediction layers. A schematic view of the resulting network is depicted in Fig. 5.

4 Experiments

We conducted experiments on the CTCI data set and on PASCAL VOC2007. Detection performance is measured by mean Average Precision (mAP). All models are trained from scratch, without ImageNet [32] pre-trained models. Our code is implemented on the Caffe platform, and the whole network is optimized with SGD on one GTX 1080 GPU, with momentum 0.9 and weight decay 0.0005. We use the “xavier” method [33] to randomly initialize the parameters of the proposed model.
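For readers reproducing the setup, the sketch below mirrors how, to our understanding, Caffe's SGD solver combines momentum and weight decay (the L2 term is added to the gradient before the momentum update) and how its “xavier” filler draws weights uniformly from \( [-\sqrt{3/n}, \sqrt{3/n}] \), with n the fan-in by default. It is a plain-Python illustration on flat lists, not Caffe code; the function names and toy sizes are hypothetical.

```python
import math
import random

def xavier_uniform(fan_in, fan_out, rng):
    """Caffe-style 'xavier' filler with the default fan-in normalization:
    every weight is drawn from U(-a, a) with a = sqrt(3 / fan_in)."""
    a = math.sqrt(3.0 / fan_in)
    return [[rng.uniform(-a, a) for _ in range(fan_in)] for _ in range(fan_out)]

def sgd_step(weights, grads, history, lr, momentum=0.9, weight_decay=0.0005):
    """One in-place SGD update on flat lists, in the order used by Caffe's solver:
    add the L2 weight-decay term to the gradient, then
    history <- momentum * history + lr * grad, and weights <- weights - history."""
    for idx, (w, g) in enumerate(zip(weights, grads)):
        g = g + weight_decay * w
        history[idx] = momentum * history[idx] + lr * g
        weights[idx] = w - history[idx]

rng = random.Random(0)
W = xavier_uniform(fan_in=9, fan_out=4, rng=rng)   # e.g. four 3x3 filters on one input channel
flat = [v for row in W for v in row]
history = [0.0] * len(flat)
sgd_step(flat, [0.1] * len(flat), history, lr=0.1)  # hypothetical constant gradient
```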

4.1 Experimental Results on the CTCI Data Set

Chinese traditional costume images (CTCI) is an open, shared resource created by Beijing University of Posts and Telecommunications and Minzu University of China. By constructing an image cultural-gene semantic tagging system, the resource supports semantic segmentation of traditional costume images and multi-label learning of cultural genes. We used 1450 images from the CTCI data set for this experiment, covering 4 categories: 763 images contain peonies, 433 contain birds, 707 contain persons and 363 contain butterflies. These 1450 images contain 2,462 peony objects, 882 bird objects, 1,881 person objects and 748 butterfly objects. In the experiment, we used 1020 images for training and 439 images for testing.

We evaluate the effectiveness of the proposed object detection framework on the CTCI-4 data set. To ensure a fair comparison, our implementation settings are the same as those of the GRP-DSOD network. We train our models on CTCI-4 with a batch size of 4 and an accum_batch_size of 64. The initial learning rate is set to 0.1 and is then updated with the multistep policy during training. The results of different object detection frameworks on CTCI-4 are shown in Table 1. The accuracy of GRP-DSOD (75.33% mAP) is slightly lower than that of R-FCN (75.41% mAP). Our GRP-DSOD++320 achieves the highest accuracy (77.08% mAP), better than the baseline detector GRP-DSOD320 (75.33% mAP) under the same parameter settings and also higher than R-FCN. Some detection examples of the GRP-DSOD++320 detector on the CTCI-4 data set are shown in Fig. 6.
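We assume, as in the public SSD Caffe training scripts, that accum_batch_size / batch_size gives Caffe's iter_size: gradients from 64 / 4 = 16 forward/backward passes are accumulated and averaged before each SGD update, which matches the gradient of one 64-image batch when the loss is a per-sample mean. The sketch also shows Caffe's “multistep” policy, which multiplies the learning rate by gamma each time a step value is reached. The step values and gamma = 0.1 are hypothetical, since they are not reported here, and the function names are illustrative.

```python
def iter_size(accum_batch_size, batch_size):
    """Forward/backward passes accumulated per SGD update."""
    assert accum_batch_size % batch_size == 0, "choose divisible sizes"
    return accum_batch_size // batch_size

def multistep_lr(iteration, base_lr, gamma, stepvalues):
    """Caffe-style 'multistep' policy: multiply by gamma at every step value reached."""
    steps_passed = sum(1 for s in stepvalues if iteration >= s)
    return base_lr * gamma ** steps_passed

def accumulated_gradient(per_sample_grads, batch_size):
    """Average of mini-batch mean gradients (equal-sized mini-batches)."""
    minibatches = [per_sample_grads[i:i + batch_size]
                   for i in range(0, len(per_sample_grads), batch_size)]
    means = [sum(mb) / len(mb) for mb in minibatches]
    return sum(means) / len(means)

n = iter_size(64, 4)
print(n)   # 16 passes of 4 images per SGD update
grads = [float(i % 7) for i in range(64)]   # toy per-sample gradients
assert abs(accumulated_gradient(grads, 4) - sum(grads) / 64) < 1e-12

STEPS = [20000, 40000]   # hypothetical step values, not given in the text
for it in (0, 20000, 45000):
    print(it, multistep_lr(it, base_lr=0.1, gamma=0.1, stepvalues=STEPS))
```

Gradient accumulation lets a small GPU emulate a larger batch for the gradient itself, although batch-normalization statistics are still computed over only 4 images per pass.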

4.2 Performance Analysis

In this section, we study the effectiveness of each component of the GRP-DSOD++ model. To show that each component improves detection accuracy, we carry out control experiments on the CTCI-4 data set for: (1) the DI module; (2) the SE block; (3) dilated convolution; (4) the balanced confidence loss.

(1) Effectiveness of DI module

We retain the DI module, SE block and dilated convolution in the GRP-DSOD++ model and train it with the improved objective loss function (with \( \beta \) fixed at 0.999), using different sampling rates in the DI module. The results in Table 2 show that detection performance is best when the sampling rates of the DI module are set to (1, 6, 12).

Table 3 shows the results of removing the SE block and dilated convolution from the GRP-DSOD++ model while retaining the DI module (with sampling rates (1, 6, 12)) and training with the improved objective loss function. With the DI module and the balanced confidence loss, the mAP is 77.05% (line 1, Table 3). This is higher than the accuracy obtained with the improved loss function alone (line 4, Table 3), which shows that the DI module improves object detection accuracy.

(2) Effectiveness of SE block

Table 3 also shows the results of removing the DI module and dilated convolution from the GRP-DSOD++ model while retaining the SE block and training with the improved objective loss function. With the SE block and the balanced confidence loss, the mAP is 76.51% (line 2, Table 3), slightly higher than with the improved loss function alone (line 4, Table 3), which supports the effectiveness of the SE block in our network. The experimental results show that these blocks can be stacked together to form GRP-DSOD++ architectures that generalize effectively on CTCI-4, whose image categories vary widely.

(3) Effectiveness of dilated convolution

Table 3 also shows the results of removing the DI module and SE block from the GRP-DSOD++ model while retaining dilated convolution and training with the improved objective loss function (where the sampling rate of the DI module is (1, 6, 12) and \( \beta \) is 0.999). Using dilated convolution and the balanced confidence loss yields 76.97% mAP (line 3, Table 3), an improvement over the improved loss function alone (line 4, Table 3). This shows that applying dilated convolution in the Dense blocks of the network improves object detection accuracy to some extent.

(4) Effectiveness of balanced confidence loss

We remove the DI module, SE block and dilated convolution from the GRP-DSOD++ model and train it with the improved loss function only. The results are shown in the mAPremoved column of Table 4. With only the balanced confidence loss, every tested value of \( \beta \) improves detection accuracy, and mAPremoved is highest when \( \beta \) is 0.999.

The mAPretained column of Table 4 shows the results of retaining all components of the GRP-DSOD++ model, with DI sampling rates (1, 6, 12), and training with the improved objective loss function for different values of \( \beta \). Accuracy is highest when all components are applied to the network and \( \beta \) in the balanced confidence loss is 0.999.

The experimental results show that the large class imbalance encountered during training of dense detectors overwhelms the cross entropy loss. Easily classified negatives comprise the majority of the loss and dominate the gradient. While \( \beta \) balances the importance of positive/negative examples, it does not differentiate between easy/hard examples. Instead, we propose to reshape the loss function to down-weight easy examples and thus focus training on hard negatives.
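The reshaped loss described in this paragraph corresponds to the focal loss of RetinaNet [13], \( FL(p_t) = -\alpha_t (1-p_t)^{\gamma} \log(p_t) \). The sketch below compares it with a balanced cross entropy \( -\alpha_t \log(p_t) \) to show how the modulating factor \( (1-p_t)^{\gamma} \) suppresses easy examples; \( \alpha_t = 0.25 \) and \( \gamma = 2 \) are the defaults reported for RetinaNet, and the sketch is not claimed to be part of GRP-DSOD++.

```python
import math

def balanced_ce(p_t, alpha_t):
    """Balanced cross entropy: -alpha_t * log(p_t)."""
    return -alpha_t * math.log(p_t)

def focal_loss(p_t, alpha_t, gamma=2.0):
    """Focal loss of RetinaNet: -alpha_t * (1 - p_t)^gamma * log(p_t)."""
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)

# p_t is the predicted probability of the true class of one example.
for p_t in (0.9, 0.5, 0.1):   # easy, medium and hard example
    ce = balanced_ce(p_t, 0.25)
    fl = focal_loss(p_t, 0.25)
    print(p_t, round(ce, 4), round(fl, 4), round(fl / ce, 2))
```

The ratio FL/CE equals \( (1-p_t)^{\gamma} \): an easy example with \( p_t = 0.9 \) keeps only 1% of its balanced cross-entropy loss, while a hard one with \( p_t = 0.1 \) keeps 81%, so the many easy negatives no longer dominate the gradient.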

4.3 Results on PASCAL VOC

We train the GRP-DSOD and GRP-DSOD++ models on PASCAL VOC 2007 trainval with a batch size of 4 and an accum_batch_size of 128, and test both on the PASCAL VOC 2007 test set. GRP-DSOD reaches 76.51% mAP and GRP-DSOD++ reaches 77.06% mAP. When we train our model on two K80 GPUs with a batch size of 18 and an accum_batch_size of 128, GRP-DSOD++ reaches 78.80% mAP. We attribute this to the strong effect of batch size on detector precision: within a certain range, the larger the batch size, the higher the precision. Due to hardware limitations, our batch size (18) is much smaller than that of GRP-DSOD (48), which explains why our accuracy (78.8%) is slightly lower than that of GRP-DSOD (79.0%) on PASCAL VOC. Our result for GRP-DSOD++320 is shown in Table 5.
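mAP, the metric behind all of the numbers above, is the mean over classes of per-class average precision (AP). The text does not state which AP variant was used; the sketch below assumes the 11-point interpolated AP of the PASCAL VOC2007 development kit and takes as given that each ranked detection has already been labelled a true or false positive (for VOC, by an IoU ≥ 0.5 match with a not-yet-claimed ground-truth box). The function names and the toy ranking are hypothetical.

```python
def precision_recall(is_true_positive, n_ground_truth):
    """Cumulative recall/precision for detections already sorted by descending score."""
    tp = fp = 0
    recalls, precisions = [], []
    for hit in is_true_positive:
        tp += hit
        fp += not hit
        recalls.append(tp / n_ground_truth)
        precisions.append(tp / (tp + fp))
    return recalls, precisions

def voc07_ap(recalls, precisions):
    """11-point interpolated AP: mean of the best precision at recall >= t, t = 0, 0.1, ..., 1."""
    ap = 0.0
    for t in [i / 10 for i in range(11)]:
        p = max((pr for rc, pr in zip(recalls, precisions) if rc >= t), default=0.0)
        ap += p / 11
    return ap

# Toy ranking for one class: 4 detections, 3 ground-truth objects.
rec, prec = precision_recall([True, False, True, True], n_ground_truth=3)
ap = voc07_ap(rec, prec)
print(round(ap, 4))
```

mAP is then the plain average of the per-class AP values, for example over the four CTCI-4 categories or the 20 VOC classes.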

Faster R-CNN and SSD300 use a VGG-16 backbone, YOLO uses Darknet, R-FCN uses a ResNet-50/101 backbone, and DSOD300, GRP-DSOD320 and GRP-DSOD++320 use the DS/64-192-48-1 backbone.

5 Conclusion

The Dilated-Inception module proposed in this paper combines the Inception module with dilated convolution and can be applied to one-stage object detectors to improve detection performance. Our GRP-DSOD++ framework integrates the DI module, the SE block, dilated convolution and a class-balanced loss. Extensive control experiments on our CTCI-4 data set clarify the role of each component. GRP-DSOD++ achieves 77.08% mAP on CTCI-4, 1.75 percentage points higher than GRP-DSOD (75.33% mAP) and 1.67 points higher than R-FCN (75.41% mAP). The experiments show that a one-stage object detector learned directly, without ImageNet pre-trained models, can outperform a two-stage object detector. This work suggests that one-stage object detection approaches still have considerable room for improvement.

References

[1] Simonyan, K., Zisserman, A.: Very deep convolutional networks for large-scale image recognition. In: International Conference on Learning Representations, pp. 1–14. ICLR, San Diego (2015)

[2] Szegedy, C., Liu, W., Jia, Y., et al.: Going deeper with convolutions. In: IEEE Conference on Computer Vision and Pattern Recognition, pp. 1–9. IEEE, Boston (2015)

[3] He, K., Zhang, X., Ren, S., et al.: Deep residual learning for image recognition. In: IEEE Conference on Computer Vision and Pattern Recognition, pp. 770–778. IEEE, Las Vegas (2016)

[4] Hu, J., Shen, L., Sun, G.: Squeeze-and-excitation networks. In: IEEE Conference on Computer Vision and Pattern Recognition, pp. 7132–7141. IEEE, Salt Lake City (2018)

[5] Girshick, R., Donahue, J., Darrell, T., et al.: Rich feature hierarchies for accurate object detection and semantic segmentation. In: IEEE Conference on Computer Vision and Pattern Recognition, pp. 580–587. IEEE, Columbus (2014)

[6] Girshick, R.: Fast R-CNN. In: IEEE International Conference on Computer Vision, pp. 1440–1448. IEEE, Santiago (2015)

[7] Ren, S., He, K., Girshick, R., et al.: Faster R-CNN: towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 39(6), 1137–1149 (2017)

[8] Dai, J., Li, Y., He, K., et al.: R-FCN: object detection via region-based fully convolutional networks. In: Advances in Neural Information Processing Systems, pp. 379–387 (2016)

[9] Redmon, J., Divvala, S., Girshick, R., et al.: You only look once: unified, real-time object detection. In: IEEE Conference on Computer Vision and Pattern Recognition, pp. 779–788. IEEE, Las Vegas (2016)

[10] Liu, W., Anguelov, D., Erhan, D., et al.: SSD: single shot multibox detector. In: European Conference on Computer Vision, pp. 21–37. Springer, Cham (2016)

[11] Shen, Z., Liu, Z., Li, J., et al.: DSOD: learning deeply supervised object detectors from scratch. In: IEEE International Conference on Computer Vision, pp. 1937–1945. IEEE, Venice (2017)

[12] Shen, Z., Shi, H., Feris, R., et al.: Learning object detectors from scratch with gated recurrent feature pyramids, Venice (2017)

[13] Lin, T.Y., Goyal, P., Girshick, R., et al.: Focal loss for dense object detection. In: IEEE International Conference on Computer Vision, pp. 2999–3007. IEEE, Venice (2017)

[14] Yu, F., Koltun, V.: Multi-scale context aggregation by dilated convolutions, San Juan (2016)

[15] Chen, L.C., Papandreou, G., Kokkinos, I., et al.: Semantic image segmentation with deep convolutional nets and fully connected CRFs, San Diego (2015)

[16] Chen, L.C., Papandreou, G., Kokkinos, I., et al.: DeepLab: semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 40(4), 834–848 (2018)

[17] Chen, L.C., Papandreou, G., Schroff, F., et al.: Rethinking atrous convolution for semantic image segmentation, Hawaii (2017)

[18] Long, J., Shelhamer, E., Darrell, T.: Fully convolutional networks for semantic segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 39(4), 640–651 (2017)

[19] He, K., Gkioxari, G., Dollár, P., et al.: Mask R-CNN. In: IEEE International Conference on Computer Vision, pp. 2980–2988. IEEE, Venice (2017)

[20] Ojala, T., Pietikäinen, M., Harwood, D.: Performance evaluation of texture measures with classification based on Kullback discrimination of distributions. In: International Conference on Pattern Recognition, pp. 582–585. IEEE, Jerusalem (1994)

[21] Ojala, T., Pietikäinen, M., Harwood, D.: A comparative study of texture measures with classification based on featured distributions. Pattern Recogn. 29(1), 51–59 (1996)

[22] Papageorgiou, C.P., Oren, M., Poggio, T.: A general framework for object detection. In: IEEE International Conference on Computer Vision, pp. 555–562. IEEE, Bombay (1998)

[23] Dalal, N., Triggs, B.: Histograms of oriented gradients for human detection. In: IEEE Conference on Computer Vision and Pattern Recognition, pp. 886–893. IEEE, San Diego (2005)

[24] Ren, X., Ramanan, D.: Histograms of sparse codes for object detection. In: IEEE Conference on Computer Vision and Pattern Recognition, pp. 3246–3253. IEEE, Portland (2013)

[25] Lowe, D.G.: Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 60(2), 91–110 (2004)

[26] Chen, P.H., Lin, C.J., Schölkopf, B.: A tutorial on support vector machines. Appl. Stochast. Models Bus. Ind. 21(2), 111–136 (2005)

[27] Viola, P., Jones, M.J.: Robust real-time face detection. Int. J. Comput. Vis. 57(2), 137–154 (2004)

[28] Felzenszwalb, P.F., Girshick, R.B., McAllester, D., et al.: Object detection with discriminatively trained part-based models. IEEE Trans. Pattern Anal. Mach. Intell. 32(9), 1627–1645 (2010)

[29] Everingham, M., Van Gool, L., Williams, C.K.I., et al.: The PASCAL visual object classes (VOC) challenge. Int. J. Comput. Vis. 88(2), 303–338 (2010)

[30] Uijlings, J.R.R., Van De Sande, K.E.A., Gevers, T., et al.: Selective search for object recognition. Int. J. Comput. Vis. 104(2), 154–171 (2013)

[31] Nair, V., Hinton, G.E.: Rectified linear units improve restricted Boltzmann machines. In: International Conference on Machine Learning, pp. 807–814. ICML, Haifa (2010)

[32] Deng, J., Dong, W., Socher, R., et al.: ImageNet: a large-scale hierarchical image database. In: IEEE Conference on Computer Vision and Pattern Recognition, pp. 248–255. IEEE, Miami (2009)

Acknowledgements

This work was supported by the key project of the national social science fund of China (18VDL001).

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Zhao, H., Yang, T., Hou, X., Zhu, H., Yang, Z. (2019). Object Detection for Chinese Traditional Costume Images Based GRP-DSOD++ Network. In: Zhao, Y., Barnes, N., Chen, B., Westermann, R., Kong, X., Lin, C. (eds) Image and Graphics. ICIG 2019. Lecture Notes in Computer Science(), vol 11901. Springer, Cham. https://doi.org/10.1007/978-3-030-34120-6_2

DOI: https://doi.org/10.1007/978-3-030-34120-6_2

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-34119-0

Online ISBN: 978-3-030-34120-6

eBook Packages: Computer Science, Computer Science (R0)