Abstract

Real scene images contain rich contextual visual cues. How to use contextual information is a fundamental problem in object recognition tasks. Contextual information mainly includes visual relevance and spatial relations. In this work, we propose a context model that utilizes both kinds of context. Our model is built on the common detection framework Faster R-CNN. A multi-scale feature module extracts features at different scales and uses a gated bi-directional structure to control message passing; this module collects contextual information from relevant regions and from different scales. A spatial relation inference module builds object spatial relations through a GRU structure, which learns effective object spatial states. Because both modules are specifically designed to fit the CNN structure, they work well in the integrated detection model, and the whole model can be trained end-to-end. Experiments demonstrate the effectiveness of our context model: our method achieves 76.82% mAP on the PASCAL VOC 2007 dataset, outperforming Faster R-CNN by 3.5%.

1 Introduction

Object detection is a classic recognition task in computer vision. Its goal is to localize every target object instance in an image with a bounding box and to recognize the object category of each region. Most current object detection methods use deep convolutional neural networks (DCNNs) [1], which extract deep visual features from the image and build efficient detectors to generate accurate target regions. While these methods have made great breakthroughs and achieved good performance on many detection benchmarks, they use only the visual features in the local region around each object instance, independently of the others. However, object instances in the same scene are often closely related [2]. In other words, the rich contextual information in an image links otherwise independent object instances together, and exploiting this information can help us infer the category of each target region more accurately.

Many studies have shown that contextual information can improve recognition results [2,3,4]. There are two main types of contextual information between objects, visual relevance and spatial relations, and correspondingly two types of methods for modeling them. One incorporates global scene visual features or local visual features around object regions [2, 5,6,7]; the other describes spatial relations by building a structured model [8,9,10,11,12,13]. The goal of this paper is to utilize both types of contextual information and build a better context model for the detection task.

To achieve this goal, we model multi-scale visual features and object relationships in two separate modules. To make better use of contextual visual information, we introduce multi-scale visual feature extraction. Contextual information at different scales may reflect different object relationships; however, simply combining these features directly may not be the most effective approach. To preserve the useful parts of the multi-scale features, we use a gated bi-directional network (GBD-Net) [7, 14]. This network passes messages between features at different scales, which allows it to learn the visual relevance of neighboring regions. To model object spatial relations, we draw on the structure inference network (SIN) [15]. SIN is a graphical model for inferring object states, which takes objects as nodes and the relationships between them as edges. It learns, in a structured manner, spatial and semantic relationships between different visual concepts, e.g. a man riding a horse or birds in the sky. Both modules can be used in a typical detection framework such as Faster R-CNN.

The main contribution of this paper is to combine the two types of contextual information modeling methods into an integrated model. Experiments on PASCAL VOC 2007 validate the effectiveness of our approach and show that rich context cues help boost the performance of detection frameworks.

2 Related Work

Object Detection Pipeline. Most current object detection systems use deep convolutional neural networks (DCNNs) to extract deep visual features. Mainstream detection pipelines fall into two types: region-based pipelines and unified pipelines. The region-based framework was introduced by Girshick et al. [16] as R-CNN. It splits detection into region proposal and region classification: it first generates many candidate boxes that may contain object instances, and then classifies these regions with a deep CNN. R-CNN achieved state-of-the-art detection performance on the mainstream benchmarks and has been continually improved by later work, e.g. Fast R-CNN [17] and Faster R-CNN [18]. Although region-based approaches achieve outstanding detection performance, they can be computationally expensive, which may make them unsuitable for some mobile devices. The other type, unified pipelines, uses a CNN to predict bounding-box offsets and category probabilities directly from the whole image. Representative methods are YOLO [19] and SSD [20]. Unified pipelines are fast enough for real-time detection at the cost of some accuracy. While these methods work well for detecting salient objects, their performance is limited in complex scenes.

Contextual Visual Features. It is natural to expect objects coexisting in the same scene to have strong visual correlations. Many early studies validated the role of contextual visual features in object recognition tasks [2,3,4]. With the widespread adoption of DCNNs in computer vision, many researchers have tried to exploit contextual information through CNN feature representations. It is well known that DCNNs learn hierarchical feature representations, which implicitly integrate contextual information [5], but the contextual information in DCNN representations can be exploited further. MR-CNN [21] uses additional network branches to extract features inside and around the original object proposal in order to obtain a richer contextual representation. ION [6] uses skip pooling to extract information at multiple scales, and spatial recurrent neural networks to integrate contextual information outside the region of interest. Zeng et al. [7, 14] proposed a gated bi-directional CNN (GBD-Net) that incorporates features of regions at different scales around objects and passes effective messages between the different levels. These methods suggest that effectively extracting and integrating multi-region and multi-scale information is the key to building contextual visual features.

Spatial Relation Inference. An object spatial relation inference model is usually built by combining a graphical model with a CNN structure [8,9,10,11,12,13, 15]. Deng et al. [12] proposed structure inference machines, which use recurrent neural networks (RNNs) to analyze relations in group activity recognition. Xu et al. [13] proposed a graph inference model that uses conditional random fields (CRF) and an RNN structure to generate a scene graph from an image. SIN [15] is a graph structure inference model that learns representations of the spatial relationships between objects during training and infers object states during detection.

Our work builds on current mainstream detection methods and proposes a detection model that integrates both types of context modeling methods. The model makes full use of both visual relevance and spatial relations, which further improves detection performance over the original approaches.

3 Method

We use Faster R-CNN as our base detection framework and add a multi-scale visual feature module and a spatial relation inference module. The multi-scale visual feature module uses local features at different scales and the whole-image feature as contextual information; these features are integrated through a gated bi-directional network (GBD-Net). The spatial relation inference module follows the scheme of the structure inference network (SIN) to represent spatial relationships between objects. The whole model can be trained end-to-end. We explain each part in detail in the following subsections.

3.1 Main Framework

The main structure of our model consists of a base detection framework, a multi-scale feature module and a spatial relation inference module. We adopt Faster R-CNN as our base detection framework because it is a standard region-based detection model: it first generates candidate regions on an image with a region proposal network (RPN), and then classifies these regions with a deep CNN. Since both the multi-scale feature module and the spatial relation inference module take region proposals as input, a region-based framework is necessary. The whole framework of our detection model is shown in Fig. 1.

Fig. 1. The framework of the whole detection model. The base model is Faster R-CNN, shown at the bottom of the figure: it first generates region proposals using the RPN and computes features through CNNs. NMS selects the best boxes and feeds them into the two context modules. The multi-scale feature module, shown in the top part, integrates multi-scale features through a GBD-Net structure. The spatial relation inference module, in the middle of the figure, learns object spatial relations through a GRU.

3.2 Multi-scale Feature Module

This module is attached after the RPN. After the RPN generates a large number of region proposals from an image, we use Non-Maximum Suppression (NMS [22]) to select an appropriate number of regions of interest (ROIs), which the multi-scale feature module takes as input. For each ROI \(r_{0} = (x, y, w, h)\), we choose several regions of different scales with the same center location (x, y). In this paper we use three scales with parameters \(\lambda _{1}\) = 0.8, \(\lambda _{2}\) = 1.2 and \(\lambda _{3}\) = 1.8, giving the three regions \(r_{i} = (x, y, \lambda _{i}w, \lambda _{i}h)\). We crop each region \(r_{i}\) from the output feature map of the last convolutional layer to obtain our local contextual features \(f_{i}\), and we use the whole feature map as the global contextual feature \(f_{g}\). All \(f_{i}\) are resized to 7 \(\times \) 7 \(\times \) 512, the same size as \(f_{g}\). Figure 2 illustrates the features at different scales.
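The ROI selection and scale expansion described above can be sketched as follows. This is a minimal pure-Python illustration, not the authors' code: the greedy NMS keeps at most `top_k=100` boxes (the number given in Sect. 4.1), but the IoU threshold of 0.7 is an assumption borrowed from common Faster R-CNN RPN settings, since the text does not state one. The helper names `iou`, `nms`, `multi_scale_regions` and `to_corners` are hypothetical, and cropping and resizing the feature map to 7 × 7 × 512 is left out.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # corner format (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two corner-format boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float],
        iou_thresh: float = 0.7, top_k: int = 100) -> List[int]:
    """Greedy NMS: visit boxes by descending score, keep a box only if it overlaps
    every already-kept box by at most iou_thresh, and stop after top_k boxes."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= iou_thresh for k in keep):
            keep.append(i)
            if len(keep) == top_k:
                break
    return keep


def multi_scale_regions(roi: Tuple[float, float, float, float],
                        lambdas: Sequence[float] = (0.8, 1.2, 1.8)):
    """Return r_i = (x, y, lambda_i * w, lambda_i * h) for a center-format ROI r_0."""
    x, y, w, h = roi
    return [(x, y, lam * w, lam * h) for lam in lambdas]


def to_corners(r: Tuple[float, float, float, float]) -> Box:
    """Convert a center-format region (x, y, w, h) to corner format for cropping."""
    x, y, w, h = r
    return (x - w / 2, y - h / 2, x + w / 2, y + h / 2)


# Usage on hypothetical boxes.
boxes = [(0.0, 0.0, 10.0, 10.0), (1.0, 1.0, 10.0, 10.0), (20.0, 20.0, 30.0, 30.0)]
print(nms(boxes, [0.9, 0.8, 0.7]))  # [0, 2]: box 1 overlaps box 0 with IoU 0.81
print(multi_scale_regions((50.0, 40.0, 20.0, 10.0)))
```

Clipping the enlarged regions (for example \(\lambda _{3}\) = 1.8) to the image border is another detail the text leaves open; a real implementation would need to decide how to handle it.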

These multi-scale features give richer information about the target object, but may also bring redundant or noisy information. Instead of directly concatenating all these features, we use a gated bi-directional structure [7] to control the information flow. For region \(r_{i}\), we use \(h^{0}_{i} \in \{h^{0}_{1}, h^{0}_{2}, h^{0}_{3}, h^{0}_{4}\}\) to denote the original input features \(f_{1},f_{2},f_{3},f_{g}\) at the different scales; \(h^{1}_{i}\) and \(h^{2}_{i}\) are intermediate features, and \(h^{3}_{i}\) is the output feature. The detailed calculation process can be represented as follows:

\(h^{1}_{i}\) and \(h^{2}_{i}\) represent the two directions of feature integration, and \(h^{3}_{i}\) is the concatenation of \(h^{1}_{i}\) and \(h^{2}_{i}\). G(x, w, b) is a sigmoid function that acts as a gate, passing effective messages and blocking noisy information. Further implementation details can be found in [7]. With the multi-scale feature module, the detection model obtains optimized features with rich contextual information from different scales, which are then used for region classification and bounding-box regression.
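The gated bi-directional message passing can be illustrated with a simplified sketch. It assumes the GBD-Net form as we understand it from [7]: in each direction, scale \(i\) adds to its own input a message from its neighbour, scaled element-wise by a sigmoid gate \(G\) computed from the sender's input \(h^{0}\), followed by a ReLU; \(h^{3}_{i}\) concatenates both directions. Scalar weights (`w_in`, `w_msg`, `w_g`, `b_g`, all hypothetical) stand in for the convolutions of the real network, and feature maps are flattened to plain lists.

```python
import math
from typing import List

Vec = List[float]


def sigmoid(t: float) -> float:
    return 1.0 / (1.0 + math.exp(-t))


def gate(src: Vec, w: float, b: float) -> Vec:
    """G(x, w, b): element-wise sigmoid gate computed from the sending scale's input h^0."""
    return [sigmoid(w * v + b) for v in src]


def relu(v: Vec) -> Vec:
    return [max(0.0, t) for t in v]


def gbd_pass(h0: List[Vec], w_in: float = 1.0, w_msg: float = 1.0,
             w_g: float = 1.0, b_g: float = 0.0) -> List[Vec]:
    """h0 holds [f_1, f_2, f_3, f_g] flattened to vectors, ordered small to large scale.
    Returns h^3_i = concat(h^1_i, h^2_i) for every scale i."""
    n = len(h0)
    h1: List[Vec] = []  # direction 1: messages flow from smaller to larger scales
    for i in range(n):
        pre = [w_in * v for v in h0[i]]
        if i > 0:
            g = gate(h0[i - 1], w_g, b_g)
            pre = [p + gk * w_msg * m for p, gk, m in zip(pre, g, h1[i - 1])]
        h1.append(relu(pre))
    h2: List[Vec] = [[] for _ in range(n)]  # direction 2: larger to smaller scales
    for i in reversed(range(n)):
        pre = [w_in * v for v in h0[i]]
        if i < n - 1:
            g = gate(h0[i + 1], w_g, b_g)
            pre = [p + gk * w_msg * m for p, gk, m in zip(pre, g, h2[i + 1])]
        h2[i] = relu(pre)
    # List concatenation plays the role of channel-wise feature concatenation.
    return [a + b for a, b in zip(h1, h2)]


# Usage: four hypothetical scales (f_1, f_2, f_3, f_g), each a 2-element feature.
h3 = gbd_pass([[0.1, 0.2], [0.3, 0.1], [0.0, 0.5], [0.2, 0.2]])
print(len(h3), len(h3[0]))
```

A gate near 0 blocks a neighbour's message entirely, so each scale falls back to its own input; a gate near 1 lets the message through unchanged. This is how the structure can suppress noisy context from an unhelpful scale instead of concatenating everything.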

3.3 Spatial Relation Inference Module

In this module, we model object relations as a graph \(G = (V, E)\). Each node \(v \in V\) represents an object, and each edge \(e \in E\) represents the spatial relationships between a pair of objects. We define the spatial relationships in a form similar to that of the SIN method [15]. Unlike SIN, our module encodes only messages from object spatial relationships and no message from the scene. This is because the scene message has a form similar to the features in the multi-scale visual feature module, so we drop this part to avoid redundancy. Each ROI is a node in G, represented as \(v_{i} = (x_{i}, y_{i}, w_{i}, h_{i}, s_{i}, f_{i})\), where \((x_{i}, y_{i})\) is the center location, \(w_{i}\) and \(h_{i}\) are the width and height, \(s_{i}\) is the area of \(v_{i}\), and \(f_{i}\) is the convolutional feature vector of \(v_{i}\). The spatial relationship between \(v_{i}\) and \(v_{j}\) can be represented as the edge \(R_{ij}\):

\(R_{ij}\) is a set of relationships between \(v_{i}\) and \(v_{j}\), including location, shape, orientation and other types of spatial relationships. This gives the content of each node and edge in G. Similar to our multi-scale visual feature module, we use an RNN structure to learn spatial relations during training. In this module we use a GRU [23] to learn and remember long-term information. The GRU is a lightweight yet effective memory cell that reinforces useful spatial relation states and ignores useless ones. An aggregation function fuses the messages from object spatial relationships into a meaningful representation. Implementation details of the GRU can be found in [23]. For each node \(v_{i}\), the message from object spatial relationships, \(m^{e}_{i}\), is integrated from all other nodes. The integrated message can be calculated as:

\(W_{p}\) and \(W_{v}\) are weight matrices. Max pooling integrates the most important messages from all other nodes. The concatenated visual feature vector [\(f_{i}, f_{j}\)] represents the visual relationship between the two nodes. The detailed calculation process mostly follows SIN [15]. After training on diverse images, the final hidden state of the GRU learns effective spatial relation state representations, which help predict the region category and the bounding-box offsets.
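One message-passing step of this module can be sketched as below. This is a simplified illustration under stated assumptions, not the exact formulation used here: the 12-component `spatial_relation` vector is modelled on the spatial feature used in SIN [15] as we recall it; the scalar edge weight follows the SIN-style form relu(\(W_{p} R_{ij}\)) · tanh(\(W_{v}\) [\(f_{i}, f_{j}\)]), with vectors in place of the weight matrices; and the GRU uses per-element scalar weights instead of the full matrices of [23]. We also assume, as in SIN, that each node's hidden state starts from its ROI feature \(f_{i}\) and is updated with \(m^{e}_{i}\) as input. All function and parameter names are hypothetical.

```python
import math
from typing import List, Optional, Tuple

Vec = List[float]
Node = Tuple[float, float, float, float, Vec]  # (x, y, w, h, f): center, size, ROI feature


def spatial_relation(vi: Node, vj: Node) -> Vec:
    """Hypothetical R_ij modelled on SIN's spatial feature: both boxes' widths,
    heights and areas, normalized center offsets, their squares, log size ratios."""
    xi, yi, wi, hi, _ = vi
    xj, yj, wj, hj, _ = vj
    dx, dy = (xi - xj) / wj, (yi - yj) / hj
    return [wi, hi, wi * hi, wj, hj, wj * hj, dx, dy, dx * dx, dy * dy,
            math.log(wi / wj), math.log(hi / hj)]


def dot(a: Vec, b: Vec) -> float:
    return sum(x * y for x, y in zip(a, b))


def edge_messages(nodes: List[Node], w_p: Vec, w_v: Vec) -> List[Vec]:
    """m^e_i = element-wise max over j != i of e_ji * f_j, with the scalar edge weight
    e_ji = relu(w_p . R_ij) * tanh(w_v . [f_i, f_j]); w_p, w_v stand in for W_p, W_v."""
    msgs: List[Vec] = []
    for i, vi in enumerate(nodes):
        pooled: Optional[Vec] = None
        for j, vj in enumerate(nodes):
            if j == i:
                continue
            e_ji = max(0.0, dot(w_p, spatial_relation(vi, vj))) * math.tanh(dot(w_v, vi[4] + vj[4]))
            cand = [e_ji * t for t in vj[4]]
            pooled = cand if pooled is None else [max(a, b) for a, b in zip(pooled, cand)]
        msgs.append(pooled if pooled is not None else [0.0] * len(vi[4]))  # isolated node
    return msgs


def sigmoid(t: float) -> float:
    return 1.0 / (1.0 + math.exp(-t))


def gru_update(m: Vec, h: Vec, wz: float = 0.0, uz: float = 0.0, wr: float = 0.0,
               ur: float = 0.0, wh: float = 0.0, uh: float = 0.0) -> Vec:
    """Element-wise GRU step with input m (message) and previous state h:
    z = sig(wz*m + uz*h), r = sig(wr*m + ur*h), h~ = tanh(wh*m + uh*r*h),
    h' = (1 - z)*h + z*h~."""
    out: Vec = []
    for mk, hk in zip(m, h):
        z = sigmoid(wz * mk + uz * hk)
        r = sigmoid(wr * mk + ur * hk)
        h_tilde = math.tanh(wh * mk + uh * r * hk)
        out.append((1.0 - z) * hk + z * h_tilde)
    return out


# Usage: one message-passing step over two hypothetical ROIs.
nodes = [(10.0, 10.0, 4.0, 2.0, [1.0]), (6.0, 8.0, 2.0, 2.0, [1.0])]
msgs = edge_messages(nodes, w_p=[1.0] + [0.0] * 11, w_v=[0.5, 0.5])
state = [gru_update(m, n[4], wz=1.0, wh=1.0) for m, n in zip(msgs, nodes)]
```

Max pooling over neighbours, rather than summing, keeps the representation size independent of how many ROIs an image contains and lets the strongest relation dominate each feature dimension.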

4 Experimental Results

4.1 Implementation Details

We use Faster R-CNN with a VGG-16 model [24] pre-trained on ImageNet [1] as the base detection framework, with all parameters the same as in the original paper [18]. In particular, we apply NMS [22] during both training and testing, keeping the top 100 scored proposals as ROIs. We use the PASCAL VOC dataset [25] to train and test our method. In each set of experiments, we use VOC 07 trainval and VOC 12 trainval as the training set and VOC 07 test as the test set. We compare the detection results of the baseline model, the baseline with each single module proposed in this paper, and the baseline with both modules. We also compare relevant methods under the same conditions. For all experiments, we use a step learning-rate schedule: \(5 \times 10^{-4}\) for the first 80K iterations and \(5 \times 10^{-5}\) for the remaining 50K iterations. We use a weight decay of \(1 \times 10^{-4}\) and a momentum of 0.9.
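The training schedule above can be written as a step function, together with one momentum-SGD step using the stated weight decay and momentum. The update rule below is the Caffe-style form, in which the velocity accumulates the learning-rate-scaled gradient plus L2 decay; the text does not say which framework was used, so this is an assumption. The names `learning_rate`, `sgd_momentum_step` and `TOTAL_ITERS` are hypothetical.

```python
from typing import List, Tuple


def learning_rate(iteration: int, base_lr: float = 5e-4,
                  step_at: int = 80_000, gamma: float = 0.1) -> float:
    """Step schedule: base_lr before step_at iterations, base_lr * gamma (5e-5) afterwards."""
    return base_lr if iteration < step_at else base_lr * gamma


TOTAL_ITERS = 80_000 + 50_000  # 130K iterations in total


def sgd_momentum_step(w: List[float], g: List[float], v: List[float], lr: float,
                      momentum: float = 0.9, weight_decay: float = 1e-4
                      ) -> Tuple[List[float], List[float]]:
    """One Caffe-style SGD step with L2 weight decay folded into the gradient:
    v <- momentum * v + lr * (g + weight_decay * w);  w <- w - v."""
    v_new = [momentum * vk + lr * (gk + weight_decay * wk) for wk, gk, vk in zip(w, g, v)]
    w_new = [wk - vk for wk, vk in zip(w, v_new)]
    return w_new, v_new


# Usage: the rate drops by 10x at iteration 80K and stays there until TOTAL_ITERS.
for it in (0, 79_999, 80_000, TOTAL_ITERS - 1):
    print(it, learning_rate(it))
```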

4.2 Results

The mAP results of all compared methods are shown in Table 1. The upper part of Table 1 shows the results of the baseline and of methods from related papers; the lower part shows the results of our methods, from which the effect of each module can be seen. "SIN edge" denotes the structure inference network with only the edge GRU part, which is the same as our spatial relation inference module. The GBD-Net result comes from our own implementation, because the original method uses a different base network and parameters. Table 2 shows the detailed detection precision of our model for each category.
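For reference, the following sketch computes per-class AP and mAP from precision/recall points. The text does not state which AP variant is used; we assume the 11-point interpolated AP of the original VOC 2007 evaluation [25]. Matching detections to ground truth to obtain the precision/recall points is omitted, and the function names are hypothetical.

```python
from typing import Sequence


def voc07_ap(recall: Sequence[float], precision: Sequence[float]) -> float:
    """11-point interpolated AP: average over t in {0, 0.1, ..., 1.0} of the
    maximum precision among points with recall >= t (0 if there is none)."""
    ap = 0.0
    for i in range(11):
        t = i / 10
        p_max = max((p for r, p in zip(recall, precision) if r >= t), default=0.0)
        ap += p_max / 11
    return ap


def mean_ap(per_class_ap: Sequence[float]) -> float:
    """mAP is the unweighted mean of the per-class APs (20 classes on PASCAL VOC)."""
    return sum(per_class_ap) / len(per_class_ap)


# Usage: a toy curve with precision 1.0 up to recall 0.5 and 0.5 at full recall.
print(voc07_ap([0.5, 1.0], [1.0, 0.5]))  # (6 * 1.0 + 5 * 0.5) / 11
```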

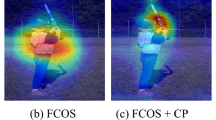

The experimental results show the value of contextual information. In our method, both the multi-scale feature module and the spatial relation inference module clearly improve detection accuracy compared to the baseline, and the two modules work well together to bring further improvement. We attribute these results to the design of the context model, which makes full use of the network structure and is well suited to end-to-end training.

5 Conclusion

In this paper, we study contextual information modeling methods for the detection task. Contextual information is mainly divided into visual relevance and spatial relations. To fit the common CNN detection structure, we propose a context model with two dedicated modules for these two types of contextual information. The multi-scale feature module incorporates features at different scales, where a gated bi-directional structure helps select useful context messages. The spatial relation inference module uses a graphical model to learn and predict object spatial relations. Both modules are designed to fit a CNN structure and can be trained end-to-end. Our method achieves 76.82% mAP on PASCAL VOC 2007, outperforming Faster R-CNN by 3.5%.

References

1. Krizhevsky, A., Sutskever, I., Hinton, G.E.: ImageNet classification with deep convolutional neural networks. In: Advances in Neural Information Processing Systems, pp. 1097–1105 (2012)

2. Divvala, S.K., Hoiem, D., Hays, J.H., Efros, A.A., Hebert, M.: An empirical study of context in object detection. In: 2009 IEEE Conference on Computer Vision and Pattern Recognition, pp. 1271–1278. IEEE (2009)

3. Torralba, A.: Contextual priming for object detection. Int. J. Comput. Vis. 53(2), 169–191 (2003)

4. Oliva, A., Torralba, A.: The role of context in object recognition. Trends Cogn. Sci. 11(12), 520–527 (2007)

5. Zeiler, M.D., Fergus, R.: Visualizing and understanding convolutional networks. In: Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T. (eds.) ECCV 2014. LNCS, vol. 8689, pp. 818–833. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-10590-1_53

6. Bell, S., Lawrence Zitnick, C., Bala, K., Girshick, R.: Inside-outside net: detecting objects in context with skip pooling and recurrent neural networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 2874–2883 (2016)

7. Zeng, X., Ouyang, W., Yang, B., Yan, J., Wang, X.: Gated bi-directional CNN for object detection. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9911, pp. 354–369. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46478-7_22

8. Li, Y., Tarlow, D., Brockschmidt, M., Zemel, R.: Gated graph sequence neural networks (2015). arXiv preprint arXiv:1511.05493

9. Hu, H., Zhou, G.-T., Deng, Z., Liao, Z., Mori, G.: Learning structured inference neural networks with label relations. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 2960–2968 (2016)

10. Battaglia, P., Pascanu, R., Lai, M., Rezende, D.J., et al.: Interaction networks for learning about objects, relations and physics. In: Advances in Neural Information Processing Systems, pp. 4502–4510 (2016)

11. Jain, A., Zamir, A.R., Savarese, S., Saxena, A.: Structural-RNN: deep learning on spatio-temporal graphs. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 5308–5317 (2016)

12. Deng, Z., Vahdat, A., Hu, H., Mori, G.: Structure inference machines: recurrent neural networks for analyzing relations in group activity recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 4772–4781 (2016)

13. Xu, D., Zhu, Y., Choy, C.B., Fei-Fei, L.: Scene graph generation by iterative message passing. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 5410–5419 (2017)

14. Zeng, X., et al.: Crafting GBD-Net for object detection. IEEE Trans. Pattern Anal. Mach. Intell. 40(9), 2109–2123 (2018)

15. Liu, Y., Wang, R., Shan, S., Chen, X.: Structure inference net: object detection using scene-level context and instance-level relationships. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 6985–6994 (2018)

16. Girshick, R., Donahue, J., Darrell, T., Malik, J.: Rich feature hierarchies for accurate object detection and semantic segmentation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 580–587 (2014)

17. Girshick, R.: Fast R-CNN. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 1440–1448 (2015)

18. Ren, S., He, K., Girshick, R., Sun, J.: Faster R-CNN: towards real-time object detection with region proposal networks. In: Advances in Neural Information Processing Systems, pp. 91–99 (2015)

19. Redmon, J., Divvala, S., Girshick, R., Farhadi, A.: You only look once: unified, real-time object detection. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 779–788 (2016)

20. Liu, W., et al.: SSD: single shot multibox detector. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9905, pp. 21–37. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46448-0_2

21. Gidaris, S., Komodakis, N.: Object detection via a multi-region and semantic segmentation-aware CNN model. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 1134–1142 (2015)

22. Felzenszwalb, P.F., Girshick, R.B., McAllester, D., Ramanan, D.: Object detection with discriminatively trained part-based models. IEEE Trans. Pattern Anal. Mach. Intell. 32(9), 1627–1645 (2009)

23. Cho, K., Van Merriënboer, B., Bahdanau, D., Bengio, Y.: On the properties of neural machine translation: encoder-decoder approaches (2014). arXiv preprint arXiv:1409.1259

24. Simonyan, K., Zisserman, A.: Very deep convolutional networks for large-scale image recognition (2014). arXiv preprint arXiv:1409.1556

25. Everingham, M., Van Gool, L., Williams, C.K.I., Winn, J., Zisserman, A.: The PASCAL visual object classes (VOC) challenge. Int. J. Comput. Vis. 88(2), 303–338 (2010)

Acknowledgments

This work is supported by the NSFC 61672089, 61273274, 61572064, and National Key Technology R&D Program of China 2012BAH01F03.

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Zhou, T., Miao, Z., Wang, J. (2019). Multi-scale Feature and Spatial Relation Inference for Object Detection. In: Zhao, Y., Barnes, N., Chen, B., Westermann, R., Kong, X., Lin, C. (eds) Image and Graphics. ICIG 2019. Lecture Notes in Computer Science, vol 11901. Springer, Cham. https://doi.org/10.1007/978-3-030-34120-6_54

DOI: https://doi.org/10.1007/978-3-030-34120-6_54

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-34119-0

Online ISBN: 978-3-030-34120-6

eBook Packages: Computer Science, Computer Science (R0)