Abstract

The goal of super-resolution (SR) face image reconstruction is to generate a high quality and high resolution (HR) image based on low resolution (LR) input face images, to support video face recognition. This paper proposes a novel hybrid SR reconstruction framework of combining a multiframe SR interpolation method and a learning based single frame SR method. The proposed framework first formulates a simple but effective frame selection strategy as a preprocessing step. A weight map based on canonical correlation analysis (CCA) theory is then estimated to blend the results from single frame SR method and multiframe SR approach. Experimental results demonstrate that the proposed method achieves competitive performance against the state-of-the-art both simulated and real LR sequences.

This work was supported in part by the programs of International Science and Technology Cooperation and Exchange of Sichuan Province under Grant 2017HH0028, Grant 2018HH0102 and Grant 2019YFH0014.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Among many biological identification features, face is the most commonly used one as evidenced by recently increasing interest of face recognition in computer vision community. Faces often appear in monitoring system of banks, shopping malls, and other public areas, as important clues for public security systems. However, under uncontrolled natural conditions, nonfrontal, noisy low resolution (LR) images tend to be produced. These LR images can neither be reliably evaluated by human readers, nor effectively used as direct input of face recognition systems. Super resolution (SR) reconstruction technology represents great potential to solve this problem. Face super resolution, which is also called face hallucination, is firstly proposed by Baker [1].

Generally, from the number of input images, existing face image SR methods can distinguish into two types, namely, multiframe SR (MFSR) and single frame SR (SFSR) techniques. The MFSR approaches include two kinds of typical algorithms: (i) interpolation based and (ii) reconstruction based methods. Non-uniform interpolation approaches [2, 3] intuitively generate improved resolution images by fusing the information of a sequence of consecutive LR frames with the same scenario. The reconstruction based methods [4, 5] in addition to the use of multiframe information, but also join various priori information to regularize HR solution space based on the Bayesian framework. These methods ensure restored HR image is consistent with the original LR face. However, they are usually subtle to inappropriate blurring operations and rely on severely the accurate image registration.

In the other hand, learning based approaches are the most representative face SR algorithms in SFSR methods for latest decade. Learning based technique intends to estimate an HR image according to the relationship learned from the LR and HR training image pairs, which can distinguish into global face based and local patch based methods, from the manner of processing face image. The global face based approaches usually regard the holistic face as a whole to hallucinate the LR face images, which have a certain degree of robustness. Some commonly used machine learning models include the Principal Component Analysis (PCA) [6], the locality preserving projections [7], and the canonical correlation analysis (CCA) [8]. Though these methods can preserve the structure of global face variations, they ignore some individual facial details beyond principal components. Compared with the global methods, local patch based approaches achieve better visual quality since they divide the whole face into several overlapped patches to derive HR blocks. Ma et al. [9] present a position patch based face SR technique, where all the training patches located at the same positions are combined to produce the query patch, and the reconstruction weights are obtained by solving the least square regression (LSR) problem. Recently, the idea of locality constrained representation (LCR) has been proposed by Jiang et al. [10] to constrain the least square reconstruction and give more robust results to input noise. These methods are easy to get the embedding dictionary and yield content results. Two limitations of these methods are that they rely on aligning same size inputs as the training set LR image, which results in performance degradation without accurate alignment, otherwise, they fail to preserve the fine details of a face when the high quality training samples are not sufficient, video sequences dataset, for example.

Recently, some methods based on deep learning has been developed [13,14,15]. These methods have achieved good results in an poor environment. However, the neural network based methods have to input large-scale data during training stage, which will inevitably result in higher time and space complexity.

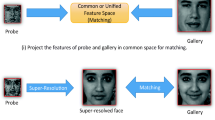

To tackle this problem, this paper presents a novel hybrid SR reconstruction framework to super-resolve LR faces in video sequences. Firstly, we propose a simple frame selection mechanism to discard nonfrontal fuzzy frames and reduce registration calculating pressure. Then, we utilize a weighted map based on CCA theory to combine single frame local learning based method and multiple frames non linear interpolation two different approaches, which are conductive to preserve local facial details and the global face consistency with original LR input simultaneously.

The rest of the paper is organized as follows. Section 2 briefly describes related methods. In Sect. 3, the face frame selection criterion and hybrid mechanism are both explained. We present experimental results and implementation details in Sect. 4 and draw conclusions and future work in Sect. 5.

2 Related Work

Towards our proposed face reconstruction method, we select representative global method and local method: traditional multiframe interpolation method and popular learning based single frame algorithm to simultaneously enhance local and global faces features. Among the many possible SFSR and MFSR methods, we choose a specific multiframe ANC (adaptive normalized convolution) interpolation method [3] and a single frame learning based approach [11] as a concrete implementation of our proposed approach. Both the two methods have the prominent advantage of performance stability and low computational cost. The chosen SR methods are briefly described in the following sections.

2.1 Multiframe Global Non-uniform Interpolation SR

In order to make a trade-off between computational complexity and reconstruction performance of the overall algorithm, the selected global method is a kind of nonlinear interpolation method based on normalized convolution (NC) framework by TQ Pham et al. [3], which suggests a solution for fusion of irregularly sampled images using adaptive normalized convolution. According to their algorithm, the window function of adaptive NC is adapted to local linear structures, and each sample would carry its own robust certainty which can minimize smoothing of sharp corners and tiny details by ignoring samples from other intensity distributions in local analysis. Besides, the registration method applied before reconstruction multiple images is Keren et al. [12].

2.2 Single Frame Local Learning Based SR

For single frame SR approach, the fast direct learning based SFSR method proposed in [11] are selected to preserve local feature of images by creating effective mapping functions. They first split the original input image feature space into numerous subspaces and then learn mapping priors between HR and LR images for each subspace by collecting sufficient training exemplars. All learned regression functions are simple and fast to handle subspace image reconstruction problem. The novelty of this approach lies in subspace mapping functions which facilitate feasibility of fast resolving HR patches and generates high quality SR images with sharp edges and rich textures.

3 Proposed Method

3.1 Frame Selection

In a continuous collection of facial image sequences, due to the movement of the human body, a variety of head poses, facial expressions may be present. While side face image, fuzzy, occlusive and low light face image cannot provide useful information for multiframe face super-resolution reconstruction. In [16], the authors proposed a new method to measure the canonical view face images via rank and symmetry of matrix. The frontal state and sharpness of face image can be measured easily and efficiently based on this metric, but the change of the picture brightness is not taken into account. We adopt this method in this paper, and propose a new selection mechanism by adding the brightness factor to remove relatively dark frames. The new mechanism is formulated as shown below. Let a matrix  denote the j-th face image of person i. \(S_i\) is the set of all face images of person i, \(I^i_j\in {S_i}\). The canonical view, sharpness and brightness measurement index can be written as follows.

denote the j-th face image of person i. \(S_i\) is the set of all face images of person i, \(I^i_j\in {S_i}\). The canonical view, sharpness and brightness measurement index can be written as follows.

where \(\alpha ,\beta \) are both contant coefficient, respectively control weight of sharpness and brightness factor. \(\parallel \cdot \parallel _F\) is the Frobenius norm, and \(\parallel \cdot \parallel _*\) denotes the nuclear norm, which is the sum of the singular values of a matrix. \(I^i_j\_lu\) denotes brightness factor of face image \(I^i_j\) which is calculated as a mean of illumination component of the image in YCbCr color space.  are two diagonal constant matrixes with elements \(A=diag([1_{N/2},0_{N/2}])\) and \(B=diag([0_{N/2},1_{N/2}])\). The first term in Eq. (1) measures the facial symmetry, which is the difference between the left half and the right half of the face. We can evaluate the facial pose based on face’s symmetry. The second term measures the rank of the face, which reflects the sharpness degree of face because the rank of an image can characterize the structure information of the image and larger rank indicates sharper images. The third term measures brightness of the face image, notice that larger mean of illumination component of the image in YCbCr color space corresponds to brighter faces in our formulation. Smaller value of \(SF(I^i_j)\) indicates that the face is more likely to be useful for MFSR. The selected several frontal face images when input into multiple images registration is hypothesized to result in more reliable estimates of motion parameters. The face with smallest value of Eq. (1) is chosen as reference image of registration process. In this paper, experimentally, we select five face images to participate in the MFSR reconstruction.

are two diagonal constant matrixes with elements \(A=diag([1_{N/2},0_{N/2}])\) and \(B=diag([0_{N/2},1_{N/2}])\). The first term in Eq. (1) measures the facial symmetry, which is the difference between the left half and the right half of the face. We can evaluate the facial pose based on face’s symmetry. The second term measures the rank of the face, which reflects the sharpness degree of face because the rank of an image can characterize the structure information of the image and larger rank indicates sharper images. The third term measures brightness of the face image, notice that larger mean of illumination component of the image in YCbCr color space corresponds to brighter faces in our formulation. Smaller value of \(SF(I^i_j)\) indicates that the face is more likely to be useful for MFSR. The selected several frontal face images when input into multiple images registration is hypothesized to result in more reliable estimates of motion parameters. The face with smallest value of Eq. (1) is chosen as reference image of registration process. In this paper, experimentally, we select five face images to participate in the MFSR reconstruction.

3.2 Combination Weight Map Estimation

In this part, we discuss the estimation of weight map for effectively combining multiframe interpolation and single frame learning-based method results. For face images, we often make resolved HR faces as input of a series of important identification, such as face recognition. So we need to guarantee the reconstructed HR face image is similar to the ground truth face as far as possible. As mentioned above, the HR image information recovered by the MFSR method is mainly derived from fusing LR images of the same scene, while the SFSR method is mostly from learned mapping relation between HR and LR example images from training datasets. In other words, the MFSR method ensures the global consistency of the super-resolved face but with confining performance, while the local SFSR method can effectively protect facial details but with low reliability of the global face. Hence, the idea of making use of resolved HR face by MFSR to constrain the HR face from SFSR method is proposed, compensating for the effects of insufficient high quality training samples in video sequences at the same time.

We propose a fusion weight map based on CCA theory, because when CCA theory is used for feature extraction, it can effectively ensure the maximum consistency between high and low resolution facial features. CCA theory is first proposed by Hotelling [17] in 1936, which is a classical multivariate data processing method of interdependent vector. The same pair of high and low resolution images is different in resolution and dimension while they have a common internal structure. In a correlated subspace, the correlation of same pair of high and low resolution image can be maximized. CCA for feature extraction can effectively ensure the highest pertinence between high and low resolution face features and now is widely used in the field of face SR [8, 18]. The following experiment demonstrates that it can enhance the consistency between the HR face image and the original LR face, simultaneously improve SR reconstruction performance.

Suppose there is a pair of high and low resolution image, after removing the mean, they respectively are \(X_{LR}\in \mathfrak {R}\), \(Y_{HR}\in \mathfrak {R}^{P\times Q}\). The aim of CCA is to find the projection direction \(\mu \in \mathfrak {R}^{M\times d}\) and \(\nu \in \mathfrak {R}^{P\times Q}\) to form the CCA subspace, d is the CCA subspace dimension, so that the LR image in CCA subspace \(X_{LR\_CCA}=\mu ^TX_{LR}\) and the HR image in CCA subspace \(Y_{HR\_CCA}=\nu ^TY_{HR}\) have the largest correlation. Therefore, the projection direction \(\mu ,\nu \) can be obtained by maximizing the correlation coefficient:

where, \(E[\cdot ]\) denotes mathematical expectation, \(S_{XX}\), \(S_{YY}\), \(S_{XY}\) represent the autocovariance matrix and the mutual crosscovariance matrix between \(X_{LR}\) and \(Y_{HR}\), respectively. More details about the solution of Eq. (2) can be found in [17].

In this paper, an LR face image sequence \(I_{LR,k} \in \mathfrak {R}^{M\times N}\) is used as input, k denotes the number of LR face images. To super-resolve these face frames \(I_{LR,k}\) by our proposed means, five face frames with acceptable canonical view, sharpness and brightness are obtained firstly by proposed frame selection mechanism, then both \(HR_M\) reconstructed by MFSR method and \(HR_S\) resolved by SFSR method are calculated. In the end, enhanced HR face image \(HR_F\) is computed by our algorithm described in the following.

For achieving greatest relevance between the final reconstructed HR face image and the original GT (Ground Truth) face, we first designate a fixed size sliding window, \(HR_M\) reconstructed by MFSR is typically subdivided into overlapping patches with this window. Then, a CCA subspace is computed to calculate the maximum correlation coefficients between the segmented image blocks and the original LR reference face image in this subspace. The CCA subspace and correlation coefficient can be calculated according to (2). The highest correlation coefficients between the obtained image blocks and the original LR face image are arranged from high to low, and a certain number of \(HR_M\) image blocks are selected and added to the \(HR_S\) face image as constraint information of keeping consistent face features with the LR face images. Figure 1 gives a block diagram illustration. The final HR face image \(HR_F\) can be calculated by the following formula.

where \(\lambda \) represents the weight map to control the area that needs to be fused and the fusion weight, \(windows_{max-correlation}\) denotes checked \(HR_M\) mage blocks corresponding window positions, \(\omega \) is constant coefficient, controlling the weight of \(HR_M\) face image blocks blended into corresponding area of \(HR_S\). The proposed fusion weight map strategy can effectively improve the consistency between the reconstructed HR face and the original GT face. Moreover, the quality of the final \(HR_F\) image is improved too because of the addition of \(HR_M\) image information.

4 Experiments

In this section, we show experimental results of proposed hybrid method and compare its objective quality and visual impression to other state of the art methods, using both the PSNR and SSIM metrics. Experiments were carried out to test SR performance of the proposed method in both simulated and real LR image sequences. Simulated LR image sequences super-resolution experiments are conducted on three datasets namely, Prima head pose image dataset [19], YUV Video Sequences [20], and Head Tracking Image Sequences [21]. The proposed algorithm is also evaluated on the Choke Point database [22] to further demonstrate its efficacy in real world surveillance scenarios.

4.1 Implementation Details

In this section, we show experimental results of proposed hybrid method and compare its objective quality and visual impression to other state of the art methods, using both the PSNR and SSIM metrics. Experiments were carried out to test SR performance of the proposed method in both simulated and real LR image sequences. Simulated LR image sequences super-resolution experiments are conducted on three datasets namely, Prima head pose image dataset [19], YUV Video Sequences [20], and Head Tracking Image Sequences [21]. The proposed algorithm is also evaluated on the Choke Point database [22] to further demonstrate its efficacy in real world surveillance scenarios.

4.2 Implementation Details

For color images, we apply the proposed algorithm on brightness channel (Y) and magnify color channels (UV) by Bicubic because human vision is more sensitive to brightness change. HR face sequences are first blurred by Gaussian kernel with variance \(\sigma \) and then subsampled by scale factor s to obtain a sequence of simulated multiple LR faces.

We take \(\sigma =2.0\) and \(s=4\) as the case of high degradation degree. Furthermore, for evaluating a resolution enhancement by \(s=2\), \(\sigma =0.4\) was employed.

Weight Coefficient \(\omega \). Extensive experiments are performed to decide the value of \(\omega \), based on these experiments, we draw some rules for the value of \(\omega \). Figure 2 shows the average PSNR gained over SFSR [11] method for various values of \(\omega \) from 0 to 1. It should be noted that other parameters were fixed during the course of changing \(\omega \). As can be seen from the diagram, the test images in YUV dataset achieve the best performance at \(\omega \) value of 0.1 or 0.3, while the best results of the Prima dataset images are obtained at a value of \(\omega \) of 0.75. It is noteworthy that the size of YUV dataset picture is \(176 *144\) does not exceed 200, while the picture size of Prima dataset is \(384 *288\), which exceeds 200. Thus, we can conclude some \(\omega \) value rules which are suitable for most of cases. When the ground truth image size does not exceed 200, \(\omega \) value of 0.1–0.3 can get better results; on the contrary \(\omega \) value of 0.7–0.9 is more appropriate. The special case is that when the performance of MFSR or SFSR methods is particularly poor, the value of w tends to be marginalized around 0 or 1, which is not applicable to the above rule.

Sliding Window Size and Overlapping Rate. The number of \(HR_M\) image blocks hinges on the sliding window size and overlapping rate. We keep sliding window width and height a same value to make the algorithm runs faster. During the experiment, it is found that setting window size around 0.6 or 0.84 of the original HR imge small edge size can achieve better results than \(HR_S\). According to experience when the sliding window size exceeds half of small edge size of \(HR_M\) image, the overlap rate is better to be 0.25, otherwise, set it to 0.6. Specially, for very small window size 1–15, setting overlap rate as 1 is advisable. Theses parameter values yield not only appropriate number of blocks but excellent performance.

The CCA subspace dimension d, is empirically set to 0.3 of input LR face images small size edge to gererate pleasing HR results. The number of selected image patches superimposed on \(HR_S\) image will be given by evaluation criterion of making the final reconstructed image optimal quality. For frame selection part, we empirically set \(\alpha =0.03\) and \(\beta =1.5\) in most cases.

4.3 Simulated LR Sequences

Our method is compared with the MFSR, SFSR method reported in [3, 11] and state of the art patch-based approach, Jiang’s locality-constrained representation (LCR) [10]. All implementation parameters setting and code are adopted according to their papers to achieve their best possible results. Visual results comparison is depicted in Fig. 3. In order to substantiate the objective quality results, we also give Table 1, which is used quantitatively to measure the results of different SR methods. Each cell 2 results in Table 1 shows: Top - image PSNR (dB), Bottom-SSIM index, which shows the improvement in PSNR and SSIM index by applying our method versus the other methods. The best result for each sequence is highlighted. It can be seen that the proposed method outperforms theses method in [3, 10, 11]. Moreover, as shown in Table 1, correlation coefficient between \(HR_M\) and input LR face in CCA subspace is indeed larger than \(HR_S\) do. Furthermore, correlation coefficients of our hybrid method are higher than that of SFSR method, which show that the results of the multiframe method do bring consistency constraint information to the blended HR image.

In Fig. 3, it can be seen that our images are visually better such as the left face of the girl has more details in our proposed case compared to Bicubic, SFSR and even LCR method. Besides, in the results produced by the LCR method, we can observe some fake edges on the edge of the girl’s hair and the boy’s shoulder, which are clearly visible in our results. Similar observations can also be made for the other sequence. Our results gain in PSNR of up to 0.67 dB and 0.51 dB over advanced SFSR method, which can be found in Table 1 for ‘Person03’ and ‘Person08’ sequence respectively. Our results as well as improve over SFSR algorithm in SSIM, which demonstrate that the proposed method indeed increased the correspondence between resolved and original HR face. In Table 1, notice that the result of MFSR method is very bad for ‘suzie’ and ‘villains1’, because ‘villains1’ present substantial movement and ‘suzie’ contain interference object (telephone handset) nearby the faces, which may impact the reconstruction of face parts. In this case, our results also exceed 0.15 dB over advanced SFSR method for ‘suzie’. Similarly, we obtain the max value in SSIM.

4.4 Real LR Sequences

We use our algorithm to enlarge face images captured in challenging real world surveillance video sequence from Choke Point database [22], where LR faces in those sequences are collected under natural conditions. LR faces in the sequences are super-resolved by scale factor \(s = 2\), where five consecutive frames are first selected via frame selection function. Examples of results are shown in Fig. 4. It can be observed that while in hair regions our results look similar to the conventionally obtained results, but appear better in textured areas such as facial contours, glasses and the corner of mouth, eyes. Although the results of the LCR method appear to have more detail, they are infused with false information such as the outline of the glasses around the eyes of the girl and the artifacts of the boy’s face. This shows that our framework is promising in the field of face reconstruction problem in video surveillance scenario.

5 Conclusions and Future Work

This paper presented a new hybrid SR reconstruction framework for super-resolution multiple LR face image inputs. Contrary to the conventional multiframe reconstruction strategy of numerous complex iterations and heavily dependent on the registration link, our algorithm, first introduces a simple frame selection mechanism as a preprocessing step to simplify the registration problem, then utilizes proposed CCA-based fusion strategy to combine SFSR and MFSR estimates to obtain our reconstructed HR face. The proposed method improves face SR performance by adding trained prior from external datasets and enhanced consistency restriction information via CCA fusion theory. We have demonstrated that our algorithm outperforms several state of the art SFSR algorithms.

References

Baker, S., Kanade, T.: Hallucinating faces. In: The Fourth International Conference on Automatic Face and Gesture Recognition, pp. 83–88. IEEE, Grenoble (2000)

Park, S.C., Park, M.K., Kang, M.G.: Super-resolution image reconstruction: a technical overview. IEEE Signal Process. Mag. 20(3), 21–36 (2003)

Pham, T.Q., et al.: Robust fusion of irregularly sampled data using adaptive normalized convolution. EURASIP J. Adv. Signal Process. 2006(1), 236–236 (2006)

Dai, S., Han, M., Xu, W., et al.: SofCuts: a soft edge smoothness prior for color image super-resolution. IEEE Trans. Image Process 18(5), 969–981 (2009)

Sun, J., Sun, J., Xu, Z., et al.: Gradient profile prior and its applications in image super-resolution and enhancement. IEEE Trans. Image Process. 20(6), 1529–1542 (2011)

Park, J.S., Lee, S.W.: An example-based face hallucination method for single-frame, low-resolution facial images. IEEE Trans. Image Process 17(10), 1806–1816 (2008)

Zhang, X., Peng, S., Jiang, J.: An adaptive learning method for face hallucination using locality preserving projections. In: The 8th IEEE International Conference on Automatic Face and Gesture Recognition, pp. 1–8. IEEE, Amsterdam (2008)

Huang, H., He, H., Fan, X., Zhang, J.: Super-resolution of human face image using canonical correlation analysis. Pattern Recogn. 43(7), 2532–2543 (2010)

Ma, X., Zhang, J., Qi, C.: Hallucinating face by position-patch. Pattern Recogn. 43(6), 2224–2236 (2010)

Jiang, J., Hu, R., Wang, Z., Han, Z.: Noise robust face hallucination via locality-constrained representation. IEEE Trans. Multimed. 16(5), 1268–1281 (2014)

Yang, C.Y., Yang, M.H.: Fast direct super-resolution by simple functions. In: ICCV, pp. 561–568. IEEE (2013)

Keren, D., Peleg, S., Brada, R.: Image sequence enhancement using sub-pixel displacements. In: CVPR, pp. 742–746. IEEE (1988)

Bulat, A., Tzimiropoulos, G.: Super-FAN: integrated facial landmark localization and super-resolution of real-world low resolution faces in arbitrary poses with GANs. In: CVPR, pp. 109–117. IEEE (2018)

Hui, Z., Wang, X., Gao, X.: Fast and accurate single image super-resolution via information distillation network. In: CVPR, pp. 723–731. IEEE (2018)

Yu, X., Fernando, B., Hartley, R., Porikli, F.: Super-resolving very low-resolution face images with supplementary attributes. In: CVPR, pp. 908–917. IEEE (2018)

Zhu, Z., Luo, P., Wang, X., Tang, X.: Recover canonical-view faces in the wild with deep neural networks. arXiv preprint (2014)

Hotelling, H.: Relations between two sets of variates. In: Kotz, S., Johnson, N.L. (eds.) Breakthroughs in Statistics. SSS, pp. 162–190. Springer, New York (1992). https://doi.org/10.1007/978-1-4612-4380-9_14

An, L., Bhanu, B.: Face image super-resolution using 2D CCA. Signal Process 103, 184–194 (2014)

Gourier, N., Hall, D., Crowley, J.L.: Estimating face orientation from robust detection of salient facial structures. In: FG Net Workshop on Visual Observation of Deictic Gestures, Cambridge (2004)

YUV video sequences (QCIF). http://trace.eas.asu.edu/yuv/index.html

BMP Image Sequences for Elliptical Head Tracking. http://cecas.clemson.edu/~stb/research/headtracker/seq/

Wong, Y., Chen, S., Mau, S., Sanderson, C., Lovell, B.C.: Patch-based probabilistic image quality assessment for face selection and improved video-based face recognition. In: CVPR, pp. 74–81. IEEE (2011)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Gao, J., Tang, H., Gee, J.C. (2019). Hybird Single-Multiple Frame Super-Resolution Reconstruction of Video Face Image. In: Zhao, Y., Barnes, N., Chen, B., Westermann, R., Kong, X., Lin, C. (eds) Image and Graphics. ICIG 2019. Lecture Notes in Computer Science(), vol 11901. Springer, Cham. https://doi.org/10.1007/978-3-030-34120-6_63

Download citation

DOI: https://doi.org/10.1007/978-3-030-34120-6_63

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-34119-0

Online ISBN: 978-3-030-34120-6

eBook Packages: Computer ScienceComputer Science (R0)