Abstract

In the last few decades, k-means has evolved as one of the most prominent data analysis method used by the researchers. However, proper selection of k number of centroids is essential for acquiring a good quality of clusters which is difficult to ascertain when the value of k is high. To overcome the initialization problem of k-means method, we propose an incremental k-means clustering method that improves the quality of the clusters in terms of reducing the Sum of Squared Error (\(SSE_{total}\)). Comprehensive experimentation in comparison to traditional k-means and its newer versions is performed to evaluate the performance of the proposed method on synthetically generated datasets and some real-world datasets. Our experiments shows that the proposed method gives a much better result when compared to its counterparts.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

The prime objective of the k-means method is to divide the datasets D into k number of clusters by minimizing the Sum of Squared Error [8]. It is a more effective method if the initial k centroids are selected from respective k clusters. The selection of initial k centroids is another challenging task of k-means that produces different clusters for every set of different initial centroids. Thus, the initial random k centroids influence the quality of clustering. To overcome this problem, we proposed an incremental k-means clustering method (incrementalKMN) that attempts to improve the quality of the result in terms of minimizing the \(SSE_{total}\) error. The proposed method starts with a single seed and in each iteration; it selects one seed from the maximum \(SSE_{partial}\) of a cluster (explained later in Sect. 3) and repeats the steps until it reaches k number of centroids.

2 Related Work

Among the partition-based clustering methods, k-means is the pioneering and most popular method that finds its use in almost every field of science and engineering. As the k-means method is too sensitive to initial centroids, many procedures were introduced by the researchers to initialize centroids for k-means [4]. A Partitioning Around Medoids (PAM) method was introduced in [7], which starts with a random selection of k number of data objects. In each step, a swap operation is made between a selected data object and a non-selected data object, if the cost of swapping is an enhancement of the quality of the clustering. A Maximin method introduced in [6] that selects the first centroid arbitrarily, and the \(i^{th}\) center, i.e. \((i \in \{2,3,...,k\})\) is selected from the point that has the greatest minimum-distance to the previously chosen centers. In [3], the clustering process starts with randomly dividing the given data set into J subsets. These subsets are grouped by the k-means method producing J sets of intermediate centers with k points each. These center sets are joined into a superset and clustered by k-means into J stages, each stage initialized with a different center set. The members of the center set that gives the minimum total sum of squared error (\(SSE_{total}\)) are then taken as the final centers. In [2], k-means++ is introduced, that selects the first centroid arbitrarily and the i-th center i.e. \((i \in { 2,3,...,k})\) is selected from \(x \in X \) with a probability of \(\dfrac{D(X)^2}{\sum _{x\in X}D(X)^2}\), where D(X) indicates the least-distance from a point x to the earlier nominated centroids. To resolve the initialization problem of k-means method, a MinMax k-means method is introduced in [12], that changes the objective function, as an alternative of using the sum of squared error. It starts with randomly selected k number of centroids and tries to minimize the maximum intra-cluster variance using a potential objective function \(Eps_{max}\) instead of the sum of the intra-cluster variances. Besides, each cluster allots a weight, such that clusters with larger intra-cluster variance are allotted higher weights, and a weighted version of the sum of the intra-cluster variances are clustered automatically. It is less affected by initialization, and it produces a high-quality solution, even with faulty initial centroids. A variance-based method is introduced in [1], that initially sorts the points on the attribute based on the highest variance and then divides them into k number of groups along with the same dimension. The centers are then selected to be the points that correlate with the medians of these groups. Note that this method contempt all attributes, but one with the highest variance probably useful only for data sets in which the changeability is more in a single dimension. In [11], initially, a kd-tree of the data objects is created to perform density estimation, and then a modified maximin method is used to select k centroids from densely inhabited leaf buckets. Here, the computational cost is dominated by kd-tree construction. An efficient k -means clustering filtering algorithm using density based initial cluster centers (RDBI) is introduced in [10], it improves the performance of k-means filtering method by locating the centroid value at a dense area of the data set and also avoid outlier as the centroid. The dense area is identifying by representing the data objects in a kd-tree. A new approach IKM-+ introduced in [9], iteratively improves the quality of the result produced by k-means by eliminating one cluster and separating another and again re-clustering. It uses some heuristic method for faster processing. From, our brief selected survey, we conclude that proper initialization of k number of centroids is a challenging task, especially when the number of clusters is high. In this paper, an incremental k-means (incrementalKMN) clustering method is proposed, which gives k desired number of clusters even when the number of clusters is high. In the next section, we discuss the preliminaries of incrementalKMN method.

3 Preliminaries

Our incremental k-means (incrementalKMN) is based on the k-means [8] method, which is the most popular method. k-means uses a total sum of squared error (\(SSE_{total}\)) as an objective function, which tries to minimize the \(SSE_{total}\) of each cluster. The \(SSE_{total}\) is computed as:

In other way, for \(i\in \{1,2,....k\}\) and k is the number of clusters then \(SSE_{total}=SSE_{1}+SSE_{2}+,......SSE_{k} \), where \(SSE_{i}\) is considered as partial SSE. In this paper, partial SSE will be reffered to as \(SSE_{partial}\) of cluster \(c_{i}\), and can be computed using the following equation:

where, \(c_{i}\) is a mean of the \(i^{th}\) cluster.

To minimize the \(SSE_{total}\), and producing k-number of centroids, the k-means method follows the following steps:

-

Step 1:

Select k numbers of centroids arbitrarily as initial centroids.

-

Step 2:

Assign each data objects to its nearest centroid.

-

Step 3:

Recompute the centroids C.

-

Step 4:

Repeat step 2 and 3 until the centroids no longer change.

Even though the k-means method is more effective and widely used method and the performance in terms of acquired \(SSE_{total}\) is strongly dependent on the selection of the initial centroids. Due to arbitrarily initial centroids selection, it can not produces desired clusters. Therefore, selection of initial centroids is very important for maintaining the quality of clustering.

4 Our Proposed IncrementalKMN Method

Here, we introduced an incremental k-means (incrementalKMN) clustering method which produces k number of clusters effectively. The central concept of the proposed method is, in each iteration, it increments the seed values by one until it reaches k number of seeds. Initially, the first seed is selected as a mean data object of a given dataset D. In each iteration, the \(i^{th}\) center i.e. \((i \in { 2,3,...,k})\) is selected from maximum \(SSE_{partial}\) of a cluster \(c_{i}\). The \(i^{th}\) center is a maximum distance from the data object and the centroid of maximum \(SSE_{partial}\) cluster. Similarly, repeat the iteration until it reaches k number of seeds. Finally, we formed k desired number of clusters and also minimized the \(SSE_{total}\) error.

4.1 The IncrementalKMN Method

We introduce an incremental method for selecting centers for the k-means algorithm. Let \(SSE_{partial}\) and \(SSE_{total}\) denotes the partial sum of squared error of a cluster and summation of the partial sum of squared error of all the clusters in a given dataset are computed in each iteration. Then we describe the following algorithm, which we call incrementalKMN method:

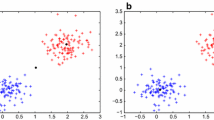

In Fig. 1(a), represents the DATA1 self-generated dataset with seven number of distinct clusters. Now, apply the incrementalKMN method on it. Initially, the first seed, i.e., initial centroid \(C=\{c_{1}\}\) is assigned as a mean of the given dataset, which shows in Fig. 1(a). In each iteration, compute the \(SSE_{total}\) of the clusters and also assigned the \(SSE_{partial}\) to each centroid. Now we compute the maximum \(SSE_{partial}\) of centroid \(c_{1}\), in this case, the \(SSE_{total}\) and \(SSE_{partial}\) both are equal and considered as a maximum \(SSE_{partial}\). Then compute the farthest distance between data objects and its centroid \(c_{1}\) of a maximum \(SSE_{partial}\) of a cluster, select the farthest distance data object i.e., second centroid \(c_{2}\) and update the centroid list C i.e. \(C=\{c_{1},c_{2}\}\), which shows in Fig. 1(b) and update the centroid. In Fig. 1(c), \(c_{1}\) shows the maximum \(SSE_{partial}\) compared with \(c_{2}\) now select the data object from cluster \(c_{1}\) whose distance is maximum with respect to centroid \(c_{1}\) and update the centroid C i.e. \(C=\{c_{1},c_{2},c_{3}\}\) and recompute the centroid values. Similarly, in Fig. 1(d)–(h), continue the process until we have selected k number of centroids. Finally, we obtain k-number of clusters and also minimize the error in terms of \(SSE_{total}\).

5 Experimental Analysis

In this section, we evaluate the performance of incrementalKMN k-means method with k-means, k-means++ [2], PAM [7], IKM-+ [9] partition-based clustering methods. We have used two different types of datasets. The first type of datasets are two-dimensional synthetic datasets [5], which shows the precision of methods graphically, where the result of the method can be identified as the location of centroids. The second type of datasets is used from online UCI machine learning repository. Results are averaged over 40 runs of each of the competing algorithms on synthetically generated datasets and the average \(SSE_{total}\) of each method presented in Table 1, where k determines the number of clusters. Table, 1 shows that PAM, IKM-+ and the incrementalKMN methods radically reduces the \(SSE_{total}\). However, the incrementalKMN method provides better results in terms of \(SSE_{total}\) as compared with PAM and IKM-+ method, and also it increases the accuracy of clusters.

Here, we have visualized the results of some datasets, and we select the high quality \(SSE_{total}\) of each dataset for k-means, k-means++, PAM, IKM-+ and worst solution of \(SSE_{total}\) of the incrementalKMN method. For S-series datasets S-1, the quality of results of the proposed method is better then the results of k-means and k-means++ method, however, PAM and IKM-+ method gives the same results, which can be seen in Fig. 2(a)–(e). Similarly, for A-series A-3 dataset, the quality of results of the proposed method is better than the k-means and k-means++ method, which is reported in Fig. 3. However, comparing with \(SSE_{toatal}\), the proposed method is better than the PAM and IKM-+ method, which is reported in Table 1. Due to the restriction of paper size, we could not report the result for all the datasets. In Fig. 4, the results of incrementalKMN method for S-2, S-3, S-4, A = 1, A-2, Brich-1, Brich-2, D31 and R15 datasets is reported. We observe, k-means++ method is more effective as compared with the k-means method, especially when the number of clusters is low. The clustering quality of k-means and k-means++ method degrades when the number of clusters is high. From Table 1, we observed that the proposed method is more effective, especially when the number of clusters is high and also useful for reducing the \(SSE_{total}\). Therefore, we can say that incrementalKMN method is better than the competing methods.

The result of quality of clusters based on \(SSE_{total}\) of k-means, k-means++, PAM, IKM-+ and proposed method for average of 40 repeatations on each of the real-world datasets is shown in Table 2, where k determines the number of clusters. In the proposed method, the average \(SSE_{total}\) is slightly less than the IKM-+ [9] method and much lesser than for the other competing methods. Therefore, we can say that incrementalKMN gives better clusters with respect to \(SSE_{total}\).

We have also compared our algorithm and its counterparts using the external validity index, Adjusted Rand Index. Table 3, shows that the adjusted Rand Index on synthetic datasets (R15 and D31) and real datasets is high as compared with its competitors.

6 Conclusion

In this paper, we proposed an incremental k-means method, which is partition-based clustering method for handling initialization problem of k-means. In each iteration, it increments the centroid list by adding one data object from given dataset based on the maximum distance between data objects and centroid of the cluster with \(SSE_{partial}\). In terms of average \(SSE_{total}\) of synthetically generated datasets and real datasets, the incremental method is less as compared to its competitors. Similarly, in terms of Adjusted Rand Index, the incremental method is high as compared to its competitors. So, the incremental method could give a guaranteed solution even if, the number of clusters is high. For many datasets, its poor result is better than its competing algorithm. The time complexity of this method is linear order.

In real-world datasets, need further work different distance similarity measures on high dimension.

References

Al-Daoud, M.B.: A new algorithm for cluster initialization. In: WEC 2005: The Second World Enformatika Conference (2005)

Arthur, D., Vassilvitskii, S.: k-means++: the advantages of careful seeding. In: Proceedings of the Eighteenth Annual ACM-SIAM Symposium on Discrete Algorithms, pp. 1027–1035. Society for Industrial and Applied Mathematics (2007)

Bradley, P.S., Fayyad, U.M.: Refining initial points for k-means clustering. In: ICML, vol. 98, pp. 91–99. Citeseer (1998)

Celebi, M.E., Kingravi, H.A., Vela, P.A.: A comparative study of efficient initialization methods for the k-means clustering algorithm. Expert Syst. Appl. 40(1), 200–210 (2013)

Fränti, P., Sieranoja, S.: K-means properties on six clustering benchmark datasets (2018). http://cs.uef.fi/sipu/datasets/

Gonzalez, T.F.: Clustering to minimize the maximum intercluster distance. Theoret. Comput. Sci. 38, 293–306 (1985)

Hadi, A.S., Kaufman, L., Rousseeuw, P.J.: Finding groups in data: an introduction to cluster analysis. Technometrics 34(1), 111 (1992)

Han, J., Pei, J., Kamber, M.: Data Mining: Concepts and Techniques. Elsevier, Amsterdam (2011)

Ismkhan, H.: Ik-means-+: an iterative clustering algorithm based on an enhanced version of the k-means. Pattern Recogn. 79, 402–413 (2018)

Kumar, K.M., Reddy, A.R.M.: An efficient k-means clustering filtering algorithm using density based initial cluster centers. Inf. Sci. 418, 286–301 (2017)

Redmond, S.J., Heneghan, C.: A method for initialising the K-means clustering algorithm using kd-trees. Pattern Recogn. Lett. 28(8), 965–973 (2007)

Tzortzis, G., Likas, A.: The MinMax k-means clustering algorithm. Pattern Recogn. 47(7), 2505–2516 (2014)

Author information

Authors and Affiliations

Corresponding authors

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Prasad, R.K., Sarmah, R., Chakraborty, S. (2019). Incremental k-Means Method. In: Deka, B., Maji, P., Mitra, S., Bhattacharyya, D., Bora, P., Pal, S. (eds) Pattern Recognition and Machine Intelligence. PReMI 2019. Lecture Notes in Computer Science(), vol 11941. Springer, Cham. https://doi.org/10.1007/978-3-030-34869-4_5

Download citation

DOI: https://doi.org/10.1007/978-3-030-34869-4_5

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-34868-7

Online ISBN: 978-3-030-34869-4

eBook Packages: Computer ScienceComputer Science (R0)