Abstract

Multi-focus image fusion combines images of the same scene captured by a single camera or by different cameras at different focal distances. In the proposed method, the non-subsampled shearlet transform (NSST) is employed to decompose the input images into low-frequency and high-frequency bands. The low-frequency and high-frequency bands are combined using sparse representation (SR) and modified difference based fusion rules, respectively. The inverse NSST is then applied to obtain the fused image. Both qualitative and quantitative results confirm that the proposed approach performs better than state-of-the-art fusion schemes.

1 Introduction

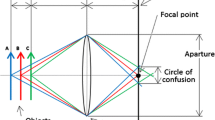

Image fusion integrates information obtained from images captured by the same or by different sources. Because of the restricted depth of focus of the optical lenses in digital cameras, optical microscopes, etc., it is sometimes impracticable to obtain a single image in which all important objects are in focus. Fusion techniques can then be employed to obtain a fused image that contains all the focused parts of the source images. In our proposed approach, the non-subsampled shearlet transform (NSST) is employed for fusion in preference to the curvelet and contourlet transforms. NSST has several desirable characteristics, for example, better localization in both the spatial and frequency domains, optimal sparse representation, and sensitivity to fine details owing to its parabolic scaling scheme [2].

In the last few years, sparse representation (SR)-based methods have emerged as an effective branch of image fusion research, with many improved approaches [8, 17]. In the proposed approach, a novel fusion criterion is proposed to combine the high-frequency bands obtained after NSST decomposition of the source images. For fusion of the low-frequency bands, an SR-based technique is employed with a hybrid of learned and local cosine bases dictionaries [10, 11]. The local cosine bases dictionary efficiently captures the texture information of the input images, while the learned dictionary approximates fine details and low- and high-frequency features well.

NSST [2,3,4] is employed to capture high-frequency details such as edges, contours, etc. NSST has well-localized waveforms at various scales, positions, and directions. Moreover, its shift invariance minimizes the effect of misregistration errors in the fused image.

Hybrid Dictionary Based on Learning and Local Cosine Bases: A local cosine dictionary obtained from a cosine-modulated filter bank efficiently represents the texture information of the source images, while a dictionary learned with the K-SVD technique efficiently describes their fine features. Therefore, in the proposed fusion approach, the dictionaries obtained using local cosine bases and the K-SVD method are concatenated into a single dictionary that represents the significant details of the input images.
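The concatenation step can be sketched as follows, under explicit assumptions. The text does not give the filter-bank design or the K-SVD settings. The sketch therefore uses a separable overcomplete 2-D DCT dictionary as a stand-in for the local cosine bases, which is a related but not identical construction. The learned dictionary is taken as an input, and a random matrix serves only as a placeholder for it. The function names `overcomplete_dct_dictionary` and `hybrid_dictionary`, the 8 × 8 patch size, and the atom counts are hypothetical.

```python
import numpy as np

def overcomplete_dct_dictionary(patch_size: int, atoms_per_dim: int) -> np.ndarray:
    """Separable overcomplete 2-D DCT dictionary (assumed stand-in for the
    local cosine bases dictionary). Shape: (patch_size**2, atoms_per_dim**2)."""
    n = np.arange(patch_size)[:, None]          # sample positions (column)
    k = np.arange(atoms_per_dim)[None, :]       # frequency indices (row)
    d1 = np.cos(n * k * np.pi / atoms_per_dim)  # 1-D overcomplete cosine atoms
    d1[:, 1:] -= d1[:, 1:].mean(axis=0)         # remove DC from non-constant atoms
    d1 /= np.linalg.norm(d1, axis=0)            # unit-norm columns
    return np.kron(d1, d1)                      # 2-D separable atoms, still unit norm

def hybrid_dictionary(learned: np.ndarray, cosine: np.ndarray) -> np.ndarray:
    """Concatenate learned (e.g. K-SVD) atoms and cosine atoms column-wise."""
    if learned.shape[0] != cosine.shape[0]:
        raise ValueError("both dictionaries must have atoms of the same length")
    d = np.hstack([learned, cosine])
    return d / np.linalg.norm(d, axis=0, keepdims=True)  # renormalize every atom

rng = np.random.default_rng(0)
learned = rng.standard_normal((64, 128))   # placeholder for a K-SVD dictionary
dct = overcomplete_dct_dictionary(8, 11)   # 121 cosine atoms for 8 x 8 patches
D = hybrid_dictionary(learned, dct)
print(D.shape)  # (64, 249)
```

Unit-norm atoms are kept because greedy sparse coders such as OMP compare atom correlations directly, and unequal norms would bias atom selection.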

2 The Proposed Method

The layout of the proposed fusion procedure is shown in Fig. 1. The presented approach first decomposes the input images into low- and high-frequency coefficients using NSST. The proposed fusion schemes are then applied to these coefficients, and the fused image is obtained by taking the inverse NSST.
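The decompose–fuse–reconstruct data flow can be illustrated with a minimal sketch. NSST is not part of NumPy, so this sketch swaps in a hypothetical one-level, two-band split: a box-filtered low-pass band plus its residual. It is not a shearlet transform and is used only to show where each fusion rule plugs in. The two rules in the usage example (averaging and absolute-maximum selection) are placeholders, not the SR and modified difference rules of Sect. 2.2.

```python
import numpy as np
from typing import Callable, List, Tuple

Band = np.ndarray

def box_lowpass(img: Band, k: int = 5) -> Band:
    """k x k moving average with edge replication (stand-in low-pass filter)."""
    pad = k // 2
    p = np.pad(img.astype(float), pad, mode="edge")
    out = np.zeros(img.shape, dtype=float)
    for di in range(k):
        for dj in range(k):
            out += p[di:di + img.shape[0], dj:dj + img.shape[1]]
    return out / (k * k)

def decompose(img: Band) -> Tuple[Band, List[Band]]:
    """Hypothetical stand-in for NSST: one low band plus one residual high band."""
    low = box_lowpass(img)
    return low, [img - low]

def reconstruct(low: Band, highs: List[Band]) -> Band:
    """Exact inverse of `decompose`: add the bands back together."""
    return low + sum(highs)

def fuse(a: Band, b: Band,
         fuse_low: Callable[[Band, Band], Band],
         fuse_high: Callable[[Band, Band], Band]) -> Band:
    la, ha = decompose(a)                            # decompose both sources
    lb, hb = decompose(b)
    lf = fuse_low(la, lb)                            # low-frequency fusion rule
    hf = [fuse_high(x, y) for x, y in zip(ha, hb)]   # rule applied per high band
    return reconstruct(lf, hf)                       # inverse transform

def average(x: Band, y: Band) -> Band:               # placeholder low-band rule
    return 0.5 * (x + y)

def abs_max(x: Band, y: Band) -> Band:               # placeholder high-band rule
    return np.where(np.abs(x) >= np.abs(y), x, y)

rng = np.random.default_rng(0)
a, b = rng.random((32, 32)), rng.random((32, 32))
fused = fuse(a, b, average, abs_max)
```

Passing the rules in as functions keeps the transform and the fusion criteria independent, so the same skeleton can host any band-wise rule.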

2.1 Modified Differences

To capture fine details in high-frequency coefficients, the variation between the center pixel and its horizontal and vertical neighbors is generally estimated. This rule ignores the relationship between the diagonal neighboring pixels and the center pixel. To overcome this limitation, an improved operation is proposed in which the variation between the center pixel and each of its neighboring pixels is estimated. We name this the modified difference method [13] because it involves a larger number of difference operations in a \(3 \times 3\) window, as shown in (1). Let f(i, j) be an image of dimension \(M \times N\), and let the kernel dimension be \(3 \times 3\). Applying the modified difference method with \(w_1 = w_2 = 3\), we obtain an image \(\mathbf {MDf}(i,j)\). Note that (1) is applicable only to a window of size \(3 \times 3\) [13].
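The following is a minimal sketch of a modified difference operator. It is based on the description above rather than on the exact form of (1). It assumes that \(\mathbf{MDf}(i,j)\) is the sum of absolute differences between the center pixel and all eight neighbors in the \(3 \times 3\) window. Border pixels are handled by edge replication, which is also an assumption. The name `modified_difference` is hypothetical.

```python
import numpy as np

def modified_difference(f: np.ndarray) -> np.ndarray:
    """Assumed form of MDf(i, j): sum over the 8 neighbors of |f(center) - f(neighbor)|
    in a 3 x 3 window; the image border is replicated so the output keeps f's shape."""
    f = np.asarray(f, dtype=float)
    m, n = f.shape
    p = np.pad(f, 1, mode="edge")
    md = np.zeros_like(f)
    for di in (-1, 0, 1):
        for dj in (-1, 0, 1):
            if di == 0 and dj == 0:
                continue  # skip the center pixel itself
            neighbor = p[1 + di:1 + di + m, 1 + dj:1 + dj + n]  # shifted copy of f
            md += np.abs(f - neighbor)
    return md

impulse = np.zeros((5, 5))
impulse[2, 2] = 1.0
md = modified_difference(impulse)
print(md[2, 2], md[1, 1])  # 8.0 1.0
```

The value at `md[1, 1]` shows the point made above. A diagonal neighbor of a bright pixel responds here, whereas an operator using only horizontal and vertical neighbors would assign it zero.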

2.2 The Proposed Fusion Framework

The presented method applies a two-level NSST to decompose the source images. Input images \(\mathbf {A}\) and \(\mathbf {B}\) are first decomposed into low-frequency and high-frequency coefficients: NSST decomposition of the two source images \(\{ {\mathbf {I}_A},{\mathbf {I}_B}\} \) gives, for each source image, one low-frequency band \(\{ \mathbf {L}_A,\mathbf {L}_B\} \) and four high-frequency coefficient sub-bands \(\{\mathbf {H}_{k,l}^A(i,j),\mathbf {H}_{k,l}^B(i,j)\}\). Here, l and k denote the directional band and the level of decomposition, respectively.

Fig. 1. Diagram of the proposed image fusion approach [8].

Low-Frequency Coefficients Fusion Scheme: The low-frequency coefficients obtained by NSST decomposition are combined in the following five steps [8]:

(I) Using the sliding window technique, \({\mathbf {L}_A}\) and \({\mathbf {L}_B}\) are divided into patches of size \(\sqrt{n} \times \sqrt{n}\), scanned from the upper-left to the lower-right corner with a step of s pixels. The T patches of \({\mathbf {L}_A}\) and \({\mathbf {L}_B}\) are denoted \(\{ p^i_A \}_{i=1}^T\) and \(\{ p^i_B \}_{i=1}^T\), respectively.

(II) Each patch pair \(\{ p^i_A, p^i_B \}\) is rearranged into column vectors \(\{ \mathbf{q}^i_A, \mathbf{q}^i_B \}\) for every position i. The normalized vectors \(\{ \hat{\mathbf{q}}^i_A, \hat{\mathbf{q}}^i_B \}\) are then obtained as follows:

$$\begin{aligned} \hat{\mathbf{q}}^i_A &= \mathbf{q}^i_A - \bar{q}^i_A \cdot \mathbf{1},\\ \hat{\mathbf{q}}^i_B &= \mathbf{q}^i_B - \bar{q}^i_B \cdot \mathbf{1}, \end{aligned} \tag{2}$$

where \(\bar{q}^i_A\) and \(\bar{q}^i_B\) are the mean values of the entries of \(\mathbf{q}^i_A\) and \(\mathbf{q}^i_B\), respectively, and \(\mathbf{1}\) denotes the \(n \times 1\) all-ones vector.

(III) Sparse coding is performed with the orthogonal matching pursuit (OMP) algorithm to find the sparse coefficient vectors \(\{ \alpha^i_A, \alpha^i_B \}\) of \(\{ \hat{\mathbf{q}}^i_A, \hat{\mathbf{q}}^i_B \}\) over the dictionary \(\mathbf{D}\) [8]:

$$\begin{aligned} \alpha^i_A &= \mathop{\arg\min}\limits_{\alpha} \|\alpha\|_0 \quad \text{such that} \quad \|\hat{\mathbf{q}}^i_A - \mathbf{D}\alpha\|_2 < \varepsilon,\\ \alpha^i_B &= \mathop{\arg\min}\limits_{\alpha} \|\alpha\|_0 \quad \text{such that} \quad \|\hat{\mathbf{q}}^i_B - \mathbf{D}\alpha\|_2 < \varepsilon, \end{aligned} \tag{3}$$

where \(\varepsilon\) is the allowed representation error.

(IV) The sparse vectors \(\alpha^i_A\) and \(\alpha^i_B\) are fused with the max-L1 rule to obtain the fused sparse vector [8]:

$$\alpha^i_F = \begin{cases} \alpha^i_A, & \text{if } \|\alpha^i_A\|_1 > \|\alpha^i_B\|_1,\\ \alpha^i_B, & \text{otherwise}. \end{cases} \tag{4}$$

The fused mean value \(\bar{q}^i_F\) is obtained by

$$\bar{q}^i_F = \begin{cases} \bar{q}^i_A, & \text{if } \alpha^i_F = \alpha^i_A,\\ \bar{q}^i_B, & \text{otherwise}. \end{cases} \tag{5}$$

The fused version of \(\mathbf{q}^i_A\) and \(\mathbf{q}^i_B\) is then obtained as [8]:

$$\mathbf{q}^i_F = \mathbf{D}\alpha^i_F + \bar{q}^i_F \cdot \mathbf{1}. \tag{6}$$

(V) Steps (I)–(IV) are applied to all image patches, which yields the fused vectors \(\{ \mathbf{q}^i_F \}_{i=1}^T\) for all patch pairs \(\{ p^i_A \}_{i=1}^T\) and \(\{ p^i_B \}_{i=1}^T\). Let \(\mathbf {L}_F\) denote the fused low-frequency image. Each \(\mathbf{q}^i_F\) is reshaped into a patch \(p^i_F\) and placed at its original position in \(\mathbf {L}_F\). Because the patches overlap, every pixel in \(\mathbf {L}_F\) is averaged over the number of times it is accumulated [8].
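Steps (I)–(V) can be sketched in NumPy as follows. This is an illustrative sketch, not the authors' implementation. OMP is written out directly and stops when the residual norm falls below \(\varepsilon\) or after a hypothetical `max_atoms` limit. The patch size (\(\sqrt{n} = 8\)), the step s = 1, and the defaults of the hypothetical `fuse_lowpass_sr` function are assumptions. Any dictionary with unit-norm columns can be passed as `D`. The usage example uses the identity matrix only so that the result is easy to check.

```python
import numpy as np

def omp(D, x, eps, max_atoms):
    """Orthogonal matching pursuit: greedily approximates
    argmin ||a||_0 s.t. ||x - D a||_2 < eps (Eq. (3)); D has unit-norm columns."""
    a = np.zeros(D.shape[1])
    support, coef = [], np.zeros(0)
    residual = x.astype(float)
    while np.linalg.norm(residual) >= eps and len(support) < max_atoms:
        k = int(np.argmax(np.abs(D.T @ residual)))  # atom most correlated with residual
        if k in support:
            break                                    # no new atom can reduce the residual
        support.append(k)
        coef, *_ = np.linalg.lstsq(D[:, support], x, rcond=None)  # re-fit on the support
        residual = x - D[:, support] @ coef
    if support:
        a[support] = coef
    return a

def fuse_lowpass_sr(LA, LB, D, patch=8, step=1, eps=0.1, max_atoms=None):
    """Steps (I)-(V): SR-based fusion of two low-frequency bands (sketch)."""
    H, W = LA.shape
    max_atoms = max_atoms or D.shape[0]
    ones = np.ones(patch * patch)
    acc, cnt = np.zeros((H, W)), np.zeros((H, W))
    for r in range(0, H - patch + 1, step):                 # (I) sliding window, step s
        for c in range(0, W - patch + 1, step):
            qA = LA[r:r + patch, c:c + patch].reshape(-1)   # (II) patch -> vector
            qB = LB[r:r + patch, c:c + patch].reshape(-1)
            mA, mB = qA.mean(), qB.mean()
            aA = omp(D, qA - mA * ones, eps, max_atoms)     # Eq. (2), then (III) Eq. (3)
            aB = omp(D, qB - mB * ones, eps, max_atoms)
            if np.abs(aA).sum() > np.abs(aB).sum():          # (IV) max-L1, Eqs. (4)-(5)
                aF, mF = aA, mA
            else:
                aF, mF = aB, mB
            qF = D @ aF + mF * ones                          # Eq. (6)
            acc[r:r + patch, c:c + patch] += qF.reshape(patch, patch)  # (V) put back
            cnt[r:r + patch, c:c + patch] += 1
    # Average overlapping contributions; pixels never covered (possible when
    # step > 1 does not tile the image) are left at zero.
    return acc / np.maximum(cnt, 1)

rng = np.random.default_rng(0)
LA = rng.random((12, 12))        # textured band
LB = np.full((12, 12), 0.5)      # flat band: zero sparse code after mean removal
LF = fuse_lowpass_sr(LA, LB, np.eye(64), eps=1e-8)
print(np.allclose(LF, LA))       # True
```

In the example, every patch of `LB` has a zero mean-removed vector and therefore a zero L1 norm. The max-L1 rule selects `LA` everywhere, and the overlapping averages reproduce `LA`.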

High-Frequency Coefficients Fusion Scheme: To combine the high-frequency coefficients \(\{\mathbf {H}_{k,l}^A(i,j)\}\) and \(\{\mathbf {H}_{k,l}^B(i,j)\}\), a modified difference fusion rule is proposed, in which (1) is applied to each high-frequency band. Here, \(\mathbf {H}_{k,l}^A(i,j)\), \(\mathbf {H}_{k,l}^B(i,j)\) and \(\mathbf {H}_{k,l}^f(i,j)\) are the high-frequency coefficients of the input and fused images, respectively, in the \(l^{th}\) directional sub-image at the \(k^{th}\) decomposition level and at location (i, j). Applying the modified difference to \(\mathbf {H}_{k,l}^A(i,j)\) and \(\mathbf {H}_{k,l}^B(i,j)\) yields the modified difference features \(\mathbf {MDH}_{k,l}^A(i,j)\) and \(\mathbf {MDH}_{k,l}^B(i,j)\). The fused coefficients \(\mathbf {H}_{k,l}^f(i,j)\) are then obtained as:

The modified difference rule is used to fuse the high-frequency bands so that significant high-frequency information is enhanced. This rule emphasizes corners, points, edges, and contrast information in the final fused image, which improves the interpretability of the fused image. Finally, the inverse NSST is applied to the fused low-frequency and high-frequency sub-images to obtain the fused image.
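The rule that produces \(\mathbf {H}_{k,l}^f(i,j)\) from the modified difference features is given by the authors' own equation, and the sketch below does not reproduce it. For illustration only, it assumes a common choose-max rule: at each location, the coefficient with the larger modified difference feature is kept. The `modified_difference` helper repeats the assumed \(3 \times 3\), eight-neighbor form so that the block runs on its own. Both function names are hypothetical.

```python
import numpy as np

def modified_difference(h):
    """Assumed 3 x 3 modified difference: sum of |center - neighbor| over 8 neighbors."""
    h = np.asarray(h, dtype=float)
    m, n = h.shape
    p = np.pad(h, 1, mode="edge")
    out = np.zeros_like(h)
    for di in (-1, 0, 1):
        for dj in (-1, 0, 1):
            if di or dj:  # every offset except the center
                out += np.abs(h - p[1 + di:1 + di + m, 1 + dj:1 + dj + n])
    return out

def fuse_highpass(HA, HB):
    """Assumed choose-max rule: keep the coefficient whose MD feature is larger
    (ties go to HA)."""
    mdA, mdB = modified_difference(HA), modified_difference(HB)
    return np.where(mdA >= mdB, HA, HB)

rng = np.random.default_rng(0)
bands_A = [rng.standard_normal((16, 16)) for _ in range(4)]  # e.g. four directional bands
bands_B = [np.zeros((16, 16)) for _ in range(4)]             # featureless bands
fused_bands = [fuse_highpass(a, b) for a, b in zip(bands_A, bands_B)]
```

The rule is applied independently to each (k, l) sub-band pair, so it composes directly with a multi-band decomposition.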

Fig. 2. Qualitative comparison of the state-of-the-art methods and the proposed method on dataset-1. (a1)–(b1) and (a2)–(b2) are source image pairs. (c1)–(c2) GP [12], (d1)–(d2) DWT [5], (e1)–(e2) HOSVD [7], (f1)–(f2) GFF [6], (g1)–(g2) MST SR [8], (h1)–(h2) DCT SF [1], (i1)–(i2) DSIFT [9], (j1)–(j2) NSCT FAD [16], (k1)–(k2) Proposed.

Fig. 3. Qualitative comparison of the state-of-the-art methods and the proposed method on dataset-2. (a1)–(b1) and (a2)–(b2) are source image pairs. (c1)–(c2) GP [12], (d1)–(d2) DWT [5], (e1)–(e2) HOSVD [7], (f1)–(f2) GFF [6], (g1)–(g2) MST SR [8], (h1)–(h2) DCT SF [1], (i1)–(i2) DSIFT [9], (j1)–(j2) NSCT FAD [16], (k1)–(k2) Proposed.

3 Results and Comparison

For the experiments, the multi-focus image databases were obtained from the URLs given in [8]. The presented approach is compared with a number of state-of-the-art approaches [1, 5,6,7,8,9, 12, 16]. The proposed method is evaluated with three fusion metrics: the mutual information index (MI) [16], the edge-based similarity index \(Q^{AB/F}\) [14], and the structural similarity measure \(Q_s\) [15]. MI measures the mutual information between the fused and source images. \(Q^{AB/F}\) measures how well edge information is preserved in the fused image. \(Q_{s}\) measures the structural similarity between the fused and source images. Better fusion performance is indicated by a higher MI and by values of \(Q^{AB/F}\) and \(Q_s\) closer to one.
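The MI metric is commonly defined as \(\mathrm{MI} = I(A;F) + I(B;F)\). Each term is the mutual information between one source image and the fused image, estimated from their joint gray-level histogram. The sketch below assumes this definition, 256 histogram bins, and base-2 logarithms. The exact normalization used in [16] may differ. The names `mutual_information` and `fusion_mi` are hypothetical.

```python
import numpy as np

def mutual_information(x, y, bins=256):
    """Mutual information I(X;Y) in bits, estimated from a joint gray-level histogram."""
    joint, _, _ = np.histogram2d(np.ravel(x), np.ravel(y), bins=bins)
    pxy = joint / joint.sum()                 # joint probability
    px = pxy.sum(axis=1, keepdims=True)       # marginal of x, shape (bins, 1)
    py = pxy.sum(axis=0, keepdims=True)       # marginal of y, shape (1, bins)
    nz = pxy > 0                              # skip empty cells (0 * log 0 = 0)
    return float(np.sum(pxy[nz] * np.log2(pxy[nz] / (px @ py)[nz])))

def fusion_mi(a, b, f, bins=256):
    """Assumed MI fusion metric: I(A;F) + I(B;F); higher is better."""
    return mutual_information(a, f, bins) + mutual_information(b, f, bins)

a = np.zeros((4, 4))
a[:2, :] = 255                  # top half bright
f = np.zeros((4, 4))
f[:, :2] = 255                  # left half bright, statistically independent of a
print(fusion_mi(a, a, a))       # 2.0 (each term equals the 1-bit entropy of a)
print(mutual_information(a, f)) # 0.0
```

Because MI sums two unnormalized terms, its scale depends on the images' entropy. This is why the text asks only that MI be high, whereas \(Q^{AB/F}\) and \(Q_s\) are compared against one.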

The proposed fusion technique is tested on two image pairs from each of two databases. Our approach for multi-focus images is compared with methods based on the gradient pyramid (GP) [12], discrete wavelet transform (DWT) [5], higher-order singular value decomposition (HOSVD) [7], guided filtering (GFF) [6], multi-scale transform and sparse representation (MST SR) [8], discrete cosine transform with spatial frequency (DCT SF) [1], dense scale-invariant feature transform (DSIFT) [9], and NSCT with focused area detection (NSCT FAD) [16]. In our proposed approach, the max-L1 rule is used to select sparse vectors, and the coefficients of the high-frequency bands are fused using modified difference information.

Figs. 2 and 3 present a qualitative comparison of the various fusion schemes, and the objective analysis of the presented approach is shown in Tables 1 and 2. As Tables 1 and 2 show, the metric values of our presented scheme are better than those of the state-of-the-art methods; bold metric values indicate better results. If the input images are not perfectly registered, both the GP and DWT methods produce some ringing artifacts in the fused images because both are shift-variant. The HOSVD scheme generates distorted edges and blur artifacts in the fused image. The fused image obtained with the GFF approach usually loses some crucial texture information and has some blurring artifacts. The MST SR method suffers from some noisy distortions because it uses an absolute-maximum selection rule to fuse the high-frequency coefficients.

The DCT SF and DSIFT methods perform better than GFF and MST SR but underperform compared with our proposed method. The DCT approach cannot efficiently capture the directional content of the input images and produces some blocking artifacts. Owing to its spatial frequency based fusion rule, the results of the DCT scheme are superior to those of GFF and MST SR and comparable with those of DSIFT. Finally, the NSCT FAD method does not perform well because of its inefficient sum-modified-Laplacian-based fusion rule for the low-frequency bands.

4 Conclusion

In this paper, we have described an NSST- and SR-based fusion method for multi-focus images. The proposed method uses a modified difference based fusion rule to select high-frequency coefficients. It gives better subjective and objective results than existing multi-focus image fusion methods.

References

1. Cao, L., Jin, L., Tao, H., Li, G., Zhuang, Z., Zhang, Y.: Multi-focus image fusion based on spatial frequency in discrete cosine transform domain. IEEE Signal Process. Lett. 22(2), 220–224 (2015)

2. Easley, G., Labate, D., Lim, W.Q.: Sparse directional image representations using the discrete shearlet transform. Appl. Comput. Harmon. Anal. 25(1), 25–46 (2008)

3. Guo, K., Labate, D.: Optimally sparse multidimensional representation using shearlets. SIAM J. Math. Anal. 39(1), 298–318 (2007)

4. Guorong, G., Luping, X., Dongzhu, F.: Multi-focus image fusion based on non-subsampled shearlet transform. IET Image Process. 7(6), 633–639 (2013)

5. Li, H., Manjunath, B., Mitra, S.K.: Multisensor image fusion using the wavelet transform. Graph. Models Image Process. 57(3), 235–245 (1995)

6. Li, S., Kang, X., Hu, J.: Image fusion with guided filtering. IEEE Trans. Image Process. 22(7), 2864–2875 (2013)

7. Liang, J., He, Y., Liu, D., Zeng, X.: Image fusion using higher order singular value decomposition. IEEE Trans. Image Process. 21(5), 2898–2909 (2012)

8. Liu, Y., Liu, S., Wang, Z.: A general framework for image fusion based on multi-scale transform and sparse representation. Inf. Fusion 24, 147–164 (2015)

9. Liu, Y., Liu, S., Wang, Z.: Multi-focus image fusion with dense SIFT. Inf. Fusion 23, 139–155 (2015)

10. Malvar, H.S.: Signal Processing with Lapped Transforms. Artech House, Norwood (1992)

11. Meyer, F.G.: Image compression with adaptive local cosines: a comparative study. IEEE Trans. Image Process. 11(6), 616–629 (2002)

12. Petrovic, V.S., Xydeas, C.S.: Gradient-based multiresolution image fusion. IEEE Trans. Image Process. 13(2), 228–237 (2004)

13. Vishwakarma, A., Bhuyan, M.K.: Image fusion using adjustable non-subsampled shearlet transform. IEEE Trans. Instrum. Measur. 68(9), 1–12 (2018)

14. Xydeas, C., Petrovic, V.: Objective image fusion performance measure. Electron. Lett. 36(4), 308–309 (2000)

15. Yang, C., Zhang, J.Q., Wang, X.R., Liu, X.: A novel similarity based quality metric for image fusion. Inf. Fusion 9(2), 156–160 (2008)

16. Yang, Y., Tong, S., Huang, S., Lin, P.: Multifocus image fusion based on NSCT and focused area detection. IEEE Sens. J. 15(5), 2824–2838 (2015)

17. Yin, H., Li, S., Fang, L.: Simultaneous image fusion and super-resolution using sparse representation. Inf. Fusion 14(3), 229–240 (2013)

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Vishwakarma, A., Bhuyan, M.K., Sarma, D., Bora, K. (2019). Multi-focus Image Fusion Using Sparse Representation and Modified Difference. In: Deka, B., Maji, P., Mitra, S., Bhattacharyya, D., Bora, P., Pal, S. (eds) Pattern Recognition and Machine Intelligence. PReMI 2019. Lecture Notes in Computer Science, vol 11941. Springer, Cham. https://doi.org/10.1007/978-3-030-34869-4_52

DOI: https://doi.org/10.1007/978-3-030-34869-4_52

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-34868-7

Online ISBN: 978-3-030-34869-4

eBook Packages: Computer Science, Computer Science (R0)