Abstract

Speech evaluation is an essential part of language learning. Traditionally, experts perform it by assessing the voice and pronunciation of test takers, which is inefficient and lacks consistent standards. In this paper, we propose a novel approach based on deep learning and audio captioning that evaluates speech in place of linguistic experts. First, the approach extracts audio features from the speech. Then, deep learning is used to learn the relationship between the audio features and expert evaluations. Finally, an LSTM model is applied to predict expert evaluations. Experiments are conducted on a real-world dataset collected by our partner company. The results show that the proposed approach achieves excellent performance and has high potential for practical application.

Supported by organization ICEBE.

1 Introduction

Speech evaluation is an important method in language learning. Many language training institutions currently use speech evaluation to assess the language ability of their trainees, which requires them to hire a large number of linguists. However, because different linguists apply different evaluation criteria and there are not enough linguists, speech evaluation on the market is expensive, insufficiently objective, and insufficiently accurate. Using technology to reduce the labor cost of speech evaluation while improving its accuracy has therefore become a significant market demand.

With the rapid development of deep learning and speech recognition technology, existing studies can perform speech recognition effectively, accurately identify different speakers, and score each person's pronunciation [1, 2]. Beyond identification and scoring, however, these methods do not simulate how linguists evaluate speech, so they can hardly meet the needs of language training institutions.

To address these problems, this paper proposes an audio caption method based on speech recognition and natural language generation. The method takes an individual's speech as input and outputs an expert-like comment. During training, the method learns to associate the features of the speech with the linguists' comments. During prediction, it generates a grammatical natural-language expert comment from the characteristics of the speech. The method was tested on a real dataset, and the experimental results show that it can effectively generate expert comments that correspond to the speech, with accuracy and efficiency acceptable to the market. In general, this paper makes the following three main contributions:

- This paper uses technology to reduce the cost of speech evaluation and addresses the practical problem that speech evaluation is insufficiently objective and accurate.
- The proposed audio caption method not only performs speech recognition and scoring, but also simulates language experts in evaluating speech in various languages.
- This paper conducts experiments on real datasets, and the method meets market requirements in terms of accuracy and efficiency.

Section 1 briefly introduces speech evaluation and the proposed audio caption method. Section 2 presents the background of deep learning and the current state of research in speech recognition and natural language generation. Section 3 describes the related techniques for the intelligent speech evaluation model, together with its overall framework and model details. Section 4 evaluates the model using its evaluation metrics, and Section 5 concludes the paper.

2 Related Work

In recent years, deep learning has been transforming many traditional industries [3]. As deep learning becomes commoditized, demand has shifted toward creative applications in different fields. In medicine, for example, applications mainly include assisted diagnosis, intelligent rehabilitation equipment, medical record and medical image understanding, and surgical robots [4]. In finance, they mainly include intelligent investment, investment decision-making, intelligent customer service, precision marketing, risk control, anti-fraud, and intelligent claims processing [6]. In education, they mainly include one-on-one intelligent online tutoring, intelligent homework correction, and intelligent digital publishing [5]. At present, deep learning has made its most outstanding progress in image and speech recognition, involving technologies such as image recognition, speech recognition, and natural language processing [7,8,9]. The intelligent evaluation technology used in this paper also relies on deep learning and adopts a multi-model composite approach to build the language evaluation system.

Research in speech recognition has been fruitful in recent years. Shi et al. proposed replacing the traditional projection matrix with a higher-order projection layer [10]. Their experiments show that, compared with the traditional LSTM-CTC end-to-end model, the high-rank LSTM-CTC model achieves a 3% to 10% relative reduction in word error rate. Xiong Wang et al. proposed using adversarial examples to improve Keyword Spotting (KWS) [11]. In experiments on a wake-up word dataset collected from intelligent voice devices, with the threshold set to 1.0 false wake-up per hour, the proposed method reduced the false rejection rate by 44.7%. Zhou et al. explored end-to-end speech recognition networks by improving the original CNN-RNN-CTC network [12], namely the Deep Speech 2 CNN-RNN-CTC model proposed by Baidu, with a focus on its RNN part. They also introduced a cascaded structure to speech recognition for the first time, which improved accuracy on difficult samples. Their experiments reached a WER of 3.41% on the LibriSpeech test-clean set while saving 25% of training time. Driven by rapid growth in computing power and data volume, large-scale applications of deep learning have brought breakthroughs in speech recognition. Building on this prior research, this paper applies a series of preprocessing and feature extraction steps to the input speech, which improves recognition accuracy.

Natural language generation is a focus of current machine learning research. In 2016, Google released Textsum [13], an automatic summarization module that uses one RNN to encode the original text and another RNN to generate the summary. It also applies beam search in the final generation stage to improve summary accuracy. Britz et al. [14] conducted experiments and analyses on sequence-to-sequence models. Their results show that beam search has a great influence on the quality of the generated output. In 2017, Facebook AI Research published a model [15] that uses a convolutional neural network as the encoder, adds word position information to the word vectors, and uses Gated Linear Units (GLU) as the gate structure. This model set a new record on an automatic summarization dataset. However, this does not mean that CNN-based sequence-to-sequence models are necessarily better than RNNs [16, 17]. Although CNNs are efficient, they have many parameters and are not as sensitive to word order as RNNs, so position information must be added to the word vectors to simulate the temporal behavior of an RNN. RNNs therefore have a natural advantage in processing sequential information. In this paper, a recurrent neural network is used to generate natural language from features.

In summary, the deep-learning audio caption approach to speech evaluation studied in this paper can be applied to many languages. The audio caption method takes audio in various languages as input and outputs a specific evaluation of it, as shown in Fig. 1. This paper combines MFCC feature extraction [18] with the LSTMP model [19] to extract audio features, and combines word vectors [20] with the long short-term memory model [21] to evaluate the audio [22, 23]. To our knowledge, this audio caption approach has not previously appeared in the deep learning literature. By contrast, there is a great deal of deep learning research on image captioning in computer vision [24]. To address the lack of deep learning models for speech evaluation, this paper draws on image captioning techniques and designs an audio caption method that provides accurate speech feature extraction and accurate guidance.

3 The Method

3.1 Preliminaries

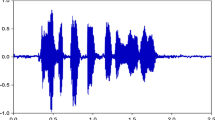

Audio Feature Extraction: Many audio feature extraction methods exist. The most common feature parameters are the pitch period, formants, Linear Predictive Cepstral Coefficients (LPCC), and Mel-Frequency Cepstral Coefficients (MFCC). Among them, MFCC is widely used in automatic speech and speaker recognition. The MFCC extraction algorithm is based on the characteristics of human hearing. It captures properties of a speech segment such as its energy and low-frequency content, so that the speech can be represented well as a numerical matrix. In this paper, MFCC extraction is applied to obtain the features of each time step. The relationship between frequency and Mel frequency can be approximated as shown in (1). Preprocessing of the speech signal includes pre-emphasis, framing, windowing, endpoint detection, and denoising. After preprocessing, each frame is transformed from the time domain to the frequency domain by the fast Fourier transform to obtain the spectral energy distribution. The squared magnitude gives the spectral line energy. This energy is passed through the Mel filter bank to compute the energy of each Mel filter, and the logarithm of each filter output is taken. Finally, the discrete cosine transform (DCT) is applied according to (2) to obtain the MFCC parameters.
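
Equations (1) and (2) are referenced but not reproduced in this text. The NumPy sketch below walks through the pipeline just described, assuming the common textbook forms mel(f) = 2595 log10(1 + f/700) for (1) and a DCT-II of the log Mel filter energies for (2). The 16 kHz sampling rate, 25 ms frames (400 samples), 10 ms hop (160 samples), 26 filters, 13 coefficients, and 0.97 pre-emphasis factor are typical defaults chosen for illustration and do not come from the paper. Endpoint detection and denoising are omitted.

```python
import numpy as np

def hz_to_mel(f):
    # Assumed form of Eq. (1): mel(f) = 2595 * log10(1 + f / 700)
    return 2595.0 * np.log10(1.0 + np.asarray(f, dtype=float) / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (np.asarray(m, dtype=float) / 2595.0) - 1.0)

def mel_filterbank(n_filters, n_fft, sr):
    """Triangular filters evenly spaced on the Mel scale, shape (n_filters, n_fft//2 + 1)."""
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2.0), n_filters + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mel_points) / sr).astype(int)
    fb = np.zeros((n_filters, n_fft // 2 + 1))
    for m in range(1, n_filters + 1):
        left, center, right = bins[m - 1], bins[m], bins[m + 1]
        for k in range(left, center):            # rising edge
            fb[m - 1, k] = (k - left) / max(center - left, 1)
        for k in range(center, right):           # falling edge
            fb[m - 1, k] = (right - k) / max(right - center, 1)
    return fb

def mfcc(signal, sr=16000, frame_len=400, hop=160, n_fft=512, n_filters=26, n_ceps=13):
    """Return an (n_frames, n_ceps) matrix of MFCCs for a 1-D signal."""
    signal = np.asarray(signal, dtype=float)
    # Pre-emphasis boosts the high-frequency part of the spectrum.
    emphasized = np.append(signal[0], signal[1:] - 0.97 * signal[:-1])
    if len(emphasized) < frame_len:               # guarantee at least one frame
        emphasized = np.pad(emphasized, (0, frame_len - len(emphasized)))
    # Framing and Hamming windowing.
    n_frames = 1 + (len(emphasized) - frame_len) // hop
    idx = np.arange(frame_len)[None, :] + hop * np.arange(n_frames)[:, None]
    frames = emphasized[idx] * np.hamming(frame_len)
    # FFT; the squared magnitude is the spectral line energy.
    power = np.abs(np.fft.rfft(frames, n_fft)) ** 2 / n_fft
    # Mel filter-bank energies, then the logarithm of each filter output.
    energies = power @ mel_filterbank(n_filters, n_fft, sr).T
    log_e = np.log(np.maximum(energies, 1e-10))
    # Assumed form of Eq. (2), DCT-II: C(n) = sum_m log(E_m) * cos(pi * n * (m + 0.5) / M)
    m = np.arange(n_filters)
    basis = np.cos(np.pi * np.arange(n_ceps)[:, None] * (m[None, :] + 0.5) / n_filters)
    return log_e @ basis.T
```

For a one-second 16 kHz clip, `mfcc(signal)` yields 98 frames of 13 coefficients each. In practice, a maintained library such as librosa would normally replace this hand-written version.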

The MFCC feature consists of N-dimensional MFCC parameters for each time step. The parameters of each time step serve as a basic feature, which becomes a speech input feature after further processing. When processing these single-time-step parameters, this paper uses the MFCC parameters of several neighboring speech segments as the MFCC feature of the central segment. This overcomes a limitation of traditional MFCC features: each speech segment is too short to fully express the syllables in the utterance. Combining segments in this way yields a more representative MFCC feature, which serves as the input for the final speech feature.
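
One way to formalize "using neighboring segments as the feature of the central segment" is frame splicing, shown below. Here \(\mathbf{x}_t \in \mathbb{R}^N\) is the MFCC vector of frame \(t\), \(T\) is the number of frames, and the half-width \(k\) is a hypothetical parameter because the paper does not state a window size. Zero vectors stand in for frames that fall outside the utterance.

```latex
\tilde{\mathbf{x}}_t = \big[\mathbf{x}_{t-k};\ \dots;\ \mathbf{x}_{t};\ \dots;\ \mathbf{x}_{t+k}\big] \in \mathbb{R}^{(2k+1)N},
\qquad
\mathbf{x}_{\tau} = \mathbf{0} \ \text{ if } \tau < 1 \text{ or } \tau > T
```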

Speech Processing: A recurrent neural network (RNN) uses a recurrent structure with a time delay along the time dimension, which gives the network memory. Long short-term memory (LSTM) is a variant of the RNN. On top of an ordinary RNN, it adds a memory cell to each unit in the hidden layer, so that the information remembered across the time series becomes controllable. At every step between hidden units, the information passes through the forget gate, the input gate, the candidate gate, and the output gate. These gates control how much previous and current information is remembered or forgotten, which gives the network long-term memory. The LSTM uses three gate structures to store and control information. At time t, the LSTM has three inputs: the cell state \(c_{t-1}\) at the previous moment, the LSTM output \(h_{t-1}\) at the previous moment, and the current network input \(x_t\). It has two outputs: the current cell state \(c_t\) and the current output \(h_t\). Here x, h, and c are vectors. The forget gate \(f_t\) controls how much of the previous cell state \(c_{t-1}\) is retained in the current state \(c_t\). The input gate \(i_t\) controls how much of the current input \(x_t\) is saved into \(c_t\). The output gate \(o_t\) controls how much \(c_t\) contributes to the current output \(h_t\). The three gates at time t are computed as shown in (3), (4), and (5). In these equations, \(W_i\), \(W_f\), \(W_o\) are the weight matrices of the corresponding gates, each applied to the concatenation \([h_{t-1}, x_t]\) of the two input vectors; \(b_i\), \(b_f\), \(b_o\) are the corresponding bias vectors; and \(\sigma (\cdot )\) is the sigmoid activation function.
The candidate cell state for the current input is given by (6), and the cell state at time t by (7), where \(\circ \) denotes the element-wise product. Combining the above, the final LSTM output is given by (8).
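
Equations (3) to (8) are referenced but not reproduced in this text. Assuming they follow the standard LSTM formulation described above, they can be written as follows. The candidate-state weights \(W_c\) and bias \(b_c\) are symbols we introduce for (6), and the assignment of equation numbers to gates follows the order in which the weights are listed.

```latex
\begin{aligned}
i_t &= \sigma\big(W_i\,[h_{t-1}, x_t] + b_i\big) && (3)\\
f_t &= \sigma\big(W_f\,[h_{t-1}, x_t] + b_f\big) && (4)\\
o_t &= \sigma\big(W_o\,[h_{t-1}, x_t] + b_o\big) && (5)\\
\tilde{c}_t &= \tanh\big(W_c\,[h_{t-1}, x_t] + b_c\big) && (6)\\
c_t &= f_t \circ c_{t-1} + i_t \circ \tilde{c}_t && (7)\\
h_t &= o_t \circ \tanh(c_t) && (8)
\end{aligned}
```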

In the speech processing phase, this paper uses the improved LSTMP architecture [25] rather than a traditional LSTM. An LSTMP layer adds a linear recurrent projection layer to the original LSTM layer. The projection layer acts like a fully connected layer. It compresses the output vector, which reduces the dimensionality of the high-dimensional information and of the recurrent units, and thereby reduces the number of parameters in the associated weight matrices. As a result, the model converges quickly and can outperform deep feedforward neural networks that have orders of magnitude more parameters. LSTMP has performed impressively on a variety of speech recognition tasks.
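
The sketch below shows one LSTMP time step in NumPy, following the gate equations (3) to (8) and then projecting the cell output \(h_t\) down to \(r_t = W_r h_t\). That projected vector, rather than \(h_t\), is fed back at the next step. It omits the peephole connections of the original LSTMP formulation. The sizes in the usage note (234 inputs, 512 cells, 128 projection units) are hypothetical, chosen only to show how the projection shrinks the recurrent weight matrices.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def init_lstmp(input_dim, n_cells, proj_dim, seed=0):
    """Random illustrative weights; gate rows are stacked as [i, f, o, candidate]."""
    rng = np.random.default_rng(seed)
    return {
        "W": rng.normal(0.0, 0.1, (4 * n_cells, proj_dim + input_dim)),
        "b": np.zeros(4 * n_cells),
        "W_r": rng.normal(0.0, 0.1, (proj_dim, n_cells)),  # recurrent projection
    }

def lstmp_step(x_t, r_prev, c_prev, params):
    """One step: LSTM gates on [r_{t-1}, x_t], then the projection r_t = W_r h_t."""
    n = c_prev.shape[0]
    gates = params["W"] @ np.concatenate([r_prev, x_t]) + params["b"]
    i, f, o = sigmoid(gates[:n]), sigmoid(gates[n:2 * n]), sigmoid(gates[2 * n:3 * n])
    c_tilde = np.tanh(gates[3 * n:])
    c_t = f * c_prev + i * c_tilde          # element-wise, as in (7)
    h_t = o * np.tanh(c_t)                  # as in (8)
    r_t = params["W_r"] @ h_t               # compress n_cells -> proj_dim
    return r_t, c_t

def run_lstmp(seq, params, n_cells, proj_dim):
    """Run a (T, input_dim) sequence and return the (T, proj_dim) projected outputs."""
    r, c = np.zeros(proj_dim), np.zeros(n_cells)
    outputs = []
    for x_t in seq:
        r, c = lstmp_step(x_t, r, c, params)
        outputs.append(r)
    return np.stack(outputs)

def n_params(input_dim, n_cells, proj_dim=None):
    """Weights + biases of one layer, with (LSTMP) or without (plain LSTM) projection."""
    recurrent = n_cells if proj_dim is None else proj_dim
    count = 4 * n_cells * (recurrent + input_dim) + 4 * n_cells
    if proj_dim is not None:
        count += proj_dim * n_cells
    return count
```

With these hypothetical sizes, `n_params(234, 512)` gives 1,529,856 parameters for a plain LSTM layer, whereas `n_params(234, 512, 128)` gives 808,960 for the LSTMP layer. Stacking three such layers, as the paper does, multiplies the saving.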

Natural Language Generation: For natural language generation, this paper follows a deep recurrent image captioning architecture [24]. This architecture combines recent advances in computer vision and machine translation to generate natural-language descriptions of images. Its authors verified on several datasets both the fluency of the generated sentences and the accuracy of the image descriptions. The architecture relies on the long short-term memory model, which plays a key role in it: the LSTM is trained to predict each word of a sentence given the image and all preceding words. To do so, one copy of the LSTM memory is created per word, and all copies share the same parameters, so that the output at time t−1 is fed to the LSTM at time t. In the unrolled version, all recurrent connections become feedforward connections over the image features and the corresponding sentence. Words are represented as one-hot vectors whose dimension equals the dictionary size. \(S_0\) and \(S_N\) denote special start and stop words at the beginning and end of each sentence, and a complete sentence is formed when the LSTM emits the stop word. Images and words are mapped into the same space, and the image is input only once, to inform the LSTM about the image content. Experiments show that the deep recurrent captioning model is robust and performs well in quantitative evaluations.
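
For reference, the captioning model of [24] can be summarized as follows. \(I\) is the image, \(S_t\) is the one-hot word at step \(t\), \(W_e\) is the word embedding matrix, and \(p_{t+1}\) is the predicted distribution over the next word. Training minimizes the negative log-likelihood of the reference sentence. In the audio setting of this paper, \(\mathrm{CNN}(I)\) would presumably be replaced by the audio feature vector. That substitution is our reading of the method, not an equation given in the text.

```latex
\begin{aligned}
x_{-1} &= \mathrm{CNN}(I)\\
x_t &= W_e S_t, && t \in \{0, \dots, N-1\}\\
p_{t+1} &= \mathrm{LSTM}(x_t), && t \in \{0, \dots, N-1\}\\
\mathcal{L}(I, S) &= -\sum_{t=1}^{N} \log p_t(S_t)
\end{aligned}
```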

3.2 The Overview of the Method

Deep learning techniques are now widely applied to speech feature extraction and natural language generation. Building on them, the deep-learning audio caption method for speech evaluation designed in this paper uses two independent recurrent neural networks. The processing flow of the model is shown in Fig. 2 and consists of the following three steps:

- Audio feature extraction. Cepstral analysis on the Mel spectrum is applied to the input audio to extract features for each time step. This involves a short-time FFT of each frame, cepstral analysis of the spectrogram, Mel frequency analysis, and computation of the Mel-frequency cepstral coefficients. The MFCC parameters of several time steps are then combined to obtain the MFCC feature.
- Speech processing. The MFCC features from the previous stage are further analyzed by a three-layer LSTMP. This improves the recognition rate of the audio features and finally yields a more effective audio feature vector. At this stage, the MSE loss function is used to train the audio scoring and improve classification accuracy.
- Natural language generation. This stage uses the audio feature vector obtained in the previous stage. The comments are first segmented into words and then vectorized, and the resulting word vectors are used as input to the natural language model. The trained LSTM, which has a memory function, maps the audio to the relevant word vectors to form the comment for the input audio.

3.3 Audio Feature Extraction

In the audio intelligent evaluation model, audio features are extracted first. In other words, the recognizable components of the audio signal are kept, and irrelevant information such as background noise and emotion is removed. The audio feature extraction stage adopts MFCC, a feature widely used in automatic speech and speaker recognition. MFCC extraction is applied to the model's input audio files in .MP3 format to obtain the MFCC parameters. To reduce computation and improve the accuracy of the audio features, this paper combines the MFCC parameters of 18 time steps into a more representative MFCC feature. Note that if fewer than 18 time steps remain for the final merge, the missing part is padded with zeros. The audio feature extraction process is shown in Fig. 3.
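
Below is a small sketch of the 18-step merge with zero padding. It assumes non-overlapping groups of consecutive frames concatenated into one vector, which fits the stated aim of reducing computation. The paper does not say whether groups overlap, so treat that as an assumption, and `merge_time_steps` is a hypothetical helper name.

```python
import numpy as np

def merge_time_steps(mfcc_frames, steps=18):
    """Concatenate every `steps` consecutive MFCC frames into one feature vector.

    mfcc_frames: array of shape (n_frames, n_coeffs).
    Returns shape (ceil(n_frames / steps), steps * n_coeffs); the last group
    is zero-padded when fewer than `steps` frames remain."""
    n_frames, n_coeffs = mfcc_frames.shape
    n_groups = -(-n_frames // steps)                 # ceiling division
    padded = np.zeros((n_groups * steps, n_coeffs), dtype=mfcc_frames.dtype)
    padded[:n_frames] = mfcc_frames                  # remaining rows stay zero
    return padded.reshape(n_groups, steps * n_coeffs)
```

For example, 40 frames of 13 coefficients become 3 merged vectors of length 234. The third vector holds 4 real frames followed by 14 frames of zeros.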

3.4 Speech Processing Model

In the speech processing stage, MFCC recognition performance degrades drastically under noise. This paper therefore adopts batch gradient descent, a deep neural network adaptation technique, to compress the most important information about the speaker's characteristics into a low-dimensional, fixed-length representation. To reduce the number of matrix parameters to be optimized, a three-layer LSTMP is used to extract the audio features. The LSTMP is built on the improved LSTM algorithm, and the score is obtained after a fully connected layer. The output features of the LSTM model therefore represent a mapping between the speech and its score, which is used to generate comments automatically. The loss function for the language ability score is based on the mean squared error (MSE). If N samples are divided into r groups and the sample variance of the i-th group is \(s_i^2\), the overall MSE is as shown in (9), and the loss function is as shown in (10).
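
Equations (9) and (10) are not reproduced in this text. A form consistent with the definitions above is the pooled within-group mean square from analysis of variance, where \(n_i\) is the size of group \(i\) and \(\sum_i n_i = N\). We assume that (10) is the ordinary MSE loss between the expert score \(y_j\) and the predicted score \(\hat{y}_j\). Both expressions are our reconstruction, not the authors' exact equations.

```latex
\mathrm{MSE} = \frac{1}{N - r} \sum_{i=1}^{r} (n_i - 1)\, s_i^2
\qquad\qquad
\mathcal{L} = \frac{1}{N} \sum_{j=1}^{N} \big(y_j - \hat{y}_j\big)^2
```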

In a deep neural network, the features are defined by the first L−1 layers and are learned from the training data jointly with the maximum entropy model formed by the output layer. This approach eliminates the cumbersome and error-prone process of manual feature engineering. It also has the potential to extract invariant and discriminative features through many layers of nonlinear transformation, features that are almost impossible to construct manually. Here, binary classification is used to make the model output three judgments: whether the language is standard (x = 0: not standard; x = 1: standard), the degree of emotional fullness (y = 0: dull; y = 1: rich), and the degree of fluency (z = 0: not fluent; z = 1: fluent).

In a deep neural network, hidden layers closer to the input are lower layers, and those closer to the output are higher layers. Lower-level features typically capture local patterns, which are very sensitive to changes in the input. Higher-level features are built on top of lower-level ones, so they are more abstract and more invariant to input changes. The model therefore takes the output of the penultimate layer as the audio feature vector, which has shape (1, 64). The speech processing pipeline is shown in Fig. 4.
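
The sketch below covers the two preceding paragraphs. A small fully connected head maps an utterance-level vector (for example, the last LSTMP output) to a 64-dimensional penultimate layer, which is kept as the audio feature. It then maps that layer to three sigmoid outputs for x (standard), y (emotional fullness), and z (fluency), which are thresholded at 0.5. The ReLU activation, the 128-dimensional input, and the 0.5 threshold are illustrative assumptions. The MSE helper mirrors the loss described above.

```python
import numpy as np

def init_head(in_dim, hidden_dim=64, n_aspects=3, seed=0):
    """Random illustrative weights for a two-layer scoring head."""
    rng = np.random.default_rng(seed)
    return {
        "W1": rng.normal(0.0, 0.1, (hidden_dim, in_dim)), "b1": np.zeros(hidden_dim),
        "W2": rng.normal(0.0, 0.1, (n_aspects, hidden_dim)), "b2": np.zeros(n_aspects),
    }

def score_head(utterance_vec, params, threshold=0.5):
    """Return (penultimate feature of shape (1, 64), probabilities, labels).

    Probabilities and labels are ordered (x, y, z): standard, emotionally full, fluent."""
    hidden = np.maximum(0.0, params["W1"] @ utterance_vec + params["b1"])  # penultimate layer
    logits = params["W2"] @ hidden + params["b2"]
    probs = 1.0 / (1.0 + np.exp(-logits))        # one sigmoid per aspect
    labels = (probs >= threshold).astype(int)    # binary judgements
    return hidden.reshape(1, -1), probs, labels

def mse_loss(probs, targets):
    """Mean squared error between predicted probabilities and 0/1 expert labels."""
    probs = np.asarray(probs, dtype=float)
    targets = np.asarray(targets, dtype=float)
    return float(np.mean((probs - targets) ** 2))
```

The `(1, 64)` feature returned here is what would be handed to the comment generator, while the labels feed the recall, precision, and accuracy evaluation.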

3.5 Comment Generation Model

To output accurate and fluent comments, this paper builds a basic natural language generation model that draws on solutions to the image captioning problem. The natural language generation model is trained on features obtained from a pre-trained model. The image captioning architecture uses the long short-term memory model from recurrent neural networks. The LSTM has a memory function, meaning that memory propagates across the time steps of a sequence. It solves a problem of the traditional RNN: over long sequences, the back-propagated error decays exponentially, so the network weights update slowly.

The comment generation model receives the output features of the LSTM layer in the language scoring model and feeds them into the LSTM natural language generation model. Beforehand, the comments for each audio clip are vectorized into numeric vectors, which together form a comment vocabulary. The model stacks multiple layers of traditional LSTM units and maps the input audio features to the numeric vector of the generated comment. Finally, each number is looked up in the vocabulary to recover its word, and the resulting comment is output.
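
A minimal sketch of the comment vocabulary and the number-to-word mapping follows. It assumes whitespace tokenization of English comments; the original comments presumably required a word segmenter instead. The reserved `<pad>` and `<unk>` entries are our additions, and the function names are hypothetical.

```python
def build_vocab(comments):
    """Word-level vocabulary with reserved ids for padding and sentence markers."""
    vocab = {"<pad>": 0, "<start>": 1, "<end>": 2, "<unk>": 3}
    for comment in comments:
        for word in comment.lower().split():
            if word not in vocab:
                vocab[word] = len(vocab)
    return vocab

def encode(comment, vocab):
    """Comment text -> list of ids wrapped in <start> ... <end>."""
    ids = [vocab.get(w, vocab["<unk>"]) for w in comment.lower().split()]
    return [vocab["<start>"]] + ids + [vocab["<end>"]]

def decode(ids, vocab):
    """List of ids -> comment text, stopping at the first <end>."""
    inverse = {i: w for w, i in vocab.items()}
    words = []
    for i in ids:
        word = inverse.get(i, "<unk>")
        if word == "<end>":
            break
        if word not in ("<pad>", "<start>"):
            words.append(word)
    return " ".join(words)
```

Round-tripping a training comment through `encode` and `decode` returns the lower-cased comment. Words never seen in training map to `<unk>`.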

The model generates text at the word level: given enough comment data for training, the LSTM's memory function lets it predict the next word. \(S_0\) and \(S_N\) are defined as the words \(<start>\) and \(<end>\) at the beginning and end of each sentence, and a complete sentence is formed when the LSTM emits \(<end>\). For example, the source sequence is [‘\(<start>\)’, ‘Language’, ‘standard’, ‘emotional’, ‘full’, ‘expression’, ‘smooth’] and the target sequence is [‘Language’, ‘standard’, ‘emotional’, ‘full’, ‘expression’, ‘smooth’, ‘\(<end>\)’]. Using these source and target sequences together with the feature vectors, the LSTM decoder is trained as a language model conditioned on the feature vectors. The LSTM language generation model is shown in Fig. 5. Finally, the output vectors are mapped back to words, and the comment for the audio is output.
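
The sketch below shows the source/target shift used for training and a greedy decoding loop at inference time. As in the captioning model, the audio feature is fed once, then \(<start>\), and then each predicted word, until \(<end>\) is produced. The `step_fn(inp, state) -> (scores, state)` interface is a hypothetical stand-in for the trained LSTM decoder. `scripted_step` is a toy replacement used only to exercise the loop, and greedy selection is our simplification; beam search is a common alternative.

```python
import numpy as np

def make_pair(token_ids):
    """Teacher-forcing pair: the source drops <end>, the target drops <start>."""
    return token_ids[:-1], token_ids[1:]

def greedy_decode(step_fn, audio_feature, start_id, end_id, max_len=20):
    """Generate word ids conditioned on one audio feature vector."""
    _, state = step_fn(audio_feature, None)   # the audio feature is fed only once
    scores, state = step_fn(start_id, state)  # then the <start> token
    output = []
    for _ in range(max_len):
        token = int(np.argmax(scores))        # most likely next word
        if token == end_id:                   # <end> closes the sentence
            break
        output.append(token)
        scores, state = step_fn(token, state)  # previous word becomes the next input
    return output

def scripted_step(script, vocab_size):
    """Toy decoder for demonstration: ignores its input and emits `script` in order."""
    def step(inp, state):
        if state is None:                     # audio-feature call emits no word
            return np.zeros(vocab_size), 0
        scores = np.zeros(vocab_size)
        scores[script[min(state, len(script) - 1)]] = 1.0
        return scores, state + 1
    return step
```

With ids 1 and 2 for \(<start>\) and \(<end>\), `make_pair([1, 4, 5, 2])` gives `([1, 4, 5], [4, 5, 2])`, and decoding with a toy script `[4, 5, 6, 2]` returns `[4, 5, 6]`.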

4 Experiment

4.1 Data Setup

The model requires a certain amount of audio for training, and it is difficult to find all the kinds of language audio needed for this work on real social media. We therefore cooperated with external companies to collect audio manually for model training and for testing at different levels. Because it is impossible to cover every language and doing so would require more data, we train and test the model only on slang audio. Considering uncertainties in the recording process, such as an unpredictable environment, we used high-fidelity mobile recording equipment for collection. Statistics of the training and test audio are shown in Table 1.

To train the speech recognition model and the comment generation model, we collected audio of proverbs spoken by different people. The collected audio was submitted to professional linguists for evaluation. After analysis, the experts scored each clip for language standardness, emotional fullness, and fluency, and wrote a comment covering these three aspects. First, the collected audio dataset and the corresponding comments are input into the intelligent speech evaluation model for training. The test dataset is then fed into the trained model, which automatically generates comments and evaluations. Finally, the model is evaluated.

4.2 Evaluation Metric

The deep-learning audio caption method for speech evaluation consists of a speech recognition model and a comment generation model. The speech recognition model is evaluated on its automatically generated speech feature scores. The model scores each clip on three aspects, namely language standardness, emotional fullness, and fluency, and its performance is measured by recall, precision, and accuracy. The comment generation model is evaluated manually: external evaluators judge how well the generated comments match the audio.

Recall, precision, and accuracy are computed as shown in (11), (12), and (13). TP is the number of positive samples predicted as positive, and TN is the number of negative samples predicted as negative. FP is the number of negative samples wrongly predicted as positive (false alarms), and FN is the number of positive samples wrongly predicted as negative (misses).
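
Assuming (11) to (13) are the standard definitions, Recall = TP / (TP + FN), Precision = TP / (TP + FP), and Accuracy = (TP + TN) / (TP + TN + FP + FN). The helper below computes all three for one scoring aspect, with label 1 as the positive class (for example, x = 1, "standard"). The function name is hypothetical.

```python
def binary_metrics(y_true, y_pred):
    """Recall, precision and accuracy for 0/1 labels, where 1 is the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # positives predicted positive
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # negatives predicted negative
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false alarms
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # misses
    recall = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    accuracy = (tp + tn) / len(pairs) if pairs else 0.0
    return {"recall": recall, "precision": precision, "accuracy": accuracy}
```

For `y_true = [1, 1, 0, 0, 1]` and `y_pred = [1, 0, 0, 1, 1]`, there are 2 TP, 1 FN, 1 TN, and 1 FP. This gives a recall and precision of 2/3 and an accuracy of 0.6. A recall of 1.0, as reported for two aspects below, means FN = 0.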

For the whole model, this paper considers two aspects that matter in real-world applications, time complexity and response-time scalability, to estimate how the model responds under real conditions. Time complexity is analyzed through the average response time over multiple tests, and scalability is assessed as the amount of training data increases.
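
A simple way to carry out both measurements is sketched below. Average wall-clock latency is measured with `time.perf_counter` over repeated runs, and a least-squares line is fitted to time versus dataset size, with R² indicating how close the growth is to linear. The model is replaced by an arbitrary callable, and the helper names and repeat count are hypothetical.

```python
import time
import numpy as np

def average_response_time_ms(fn, inputs, repeats=5):
    """Mean wall-clock latency of fn(x) in milliseconds over all inputs and repeats."""
    timings = []
    for _ in range(repeats):
        for x in inputs:
            start = time.perf_counter()
            fn(x)
            timings.append((time.perf_counter() - start) * 1000.0)
    return float(np.mean(timings))

def linear_fit(sizes, times):
    """Least-squares slope, intercept and R^2 of time against dataset size."""
    sizes = np.asarray(sizes, dtype=float)
    times = np.asarray(times, dtype=float)
    slope, intercept = np.polyfit(sizes, times, 1)
    residual = np.sum((times - (slope * sizes + intercept)) ** 2)
    total = np.sum((times - times.mean()) ** 2)
    r2 = 1.0 - residual / total if total else 1.0
    return float(slope), float(intercept), float(r2)
```

An R² close to 1 supports the claim that response time grows roughly linearly with the number of samples.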

4.3 Experiment Result

The model's response time was measured several times to obtain an average, using five audio clips randomly selected from the data; the results are shown in Table 2. The table shows that the response time of the evaluation model ranges from 300 to 600 ms, that of the generation model is about 20 ms, and the total is about 500 ms. For the scalability analysis, Fig. 6 plots the time cost as the number of training samples increases. As the number of samples in the test set grows linearly, the response time also grows roughly linearly, which indicates that the model can operate in a real application environment.

The collected audio in various languages is input into the intelligent evaluation model for training, and the trained model serves as the benchmark model, which is then tested on the test set. For the language processing model, testing yields the scores for language standardness, emotional fullness, and fluency, with the following Recall, Precision, and Accuracy: language standardness, Recall 1.0, Precision 0.919, Accuracy 0.922; emotional fullness, Recall 1.0, Precision 0.805, Accuracy 0.861; fluency, Recall 0.982, Precision 0.848, Accuracy 0.939. These results show that the language processing model performs audio feature extraction and speech scoring accurately. For the comment generation model, the audio test set is passed through the trained model, which outputs a comment for each clip. The generated comments were then sent to the project's external reviewers, who rated their accuracy against the audio. The final results are shown in Table 3, which shows that the model's comments reach an average accuracy of 85.9% against the manual comments.

4.4 Comparison

To show the advantages of our audio caption method, we compare it with a speech evaluation baseline in terms of the Accuracy of the language processing model and the average accuracy of the comment generation model.

The baseline also uses two independent recurrent neural networks. For audio feature extraction, it uses MFCC. For speech processing, it uses only a single LSTM model. For natural language generation, it applies the trained LSTM model.

The results of our audio caption method were presented in Sect. 4.3, and this comparison further illustrates its advantages. The comparison results are shown in Table 4. Comparing the Accuracy of the language processing model and the average accuracy of the comment generation model shows that the proposed speech evaluation method effectively addresses the problem that speech evaluation is insufficiently objective and accurate, and that it makes the evaluation of various languages more objective.

4.5 Discussion

While developing the audio caption method, we found that the fluency of the generated comments still falls somewhat short of manual evaluations, so the comment generation model needs further refinement. The model pays insufficient attention to some sensitive parts of speech and some rare phonetic words, and further optimization is required, for example, adding an attention mechanism. Nevertheless, the proposed method can be trained on a small training set and still perform well, and the model is small and easy to deploy on other devices.

5 Conclusion

This paper develops a deep-learning audio caption method for speech evaluation in various languages. The system consists of two modules, speech recognition and comment generation, which respectively extract speech features and produce an objective evaluation from those features. The audio caption method was evaluated on speech feature extraction and on the correspondence between the audio and its evaluations. The results show that it can output objective and fluent comments for different speech features. The comments have practical reference value and can help enterprises deliver intelligent training in language literacy.

References

1. Heigold, G., Moreno, I., Bengio, S., et al.: End-to-end text-dependent speaker verification. In: IEEE International Conference on Acoustics. IEEE (2016)

2. Sadjadi, S.O., Ganapathy, S., Pelecanos, J.W.: The IBM 2016 speaker recognition system (2016)

3. LeCun, Y., Bengio, Y., Hinton, G.: Deep learning. Nature 521(7553), 436–444 (2015)

4. Esteva, A., Kuprel, B., Novoa, R.A., et al.: Dermatologist-level classification of skin cancer with deep neural networks. Nature 542(7639), 115–118 (2017)

5. Bulgarov, F.A., Nielsen, R., et al.: Proposition entailment in educational applications using deep neural networks. In: AAAI, vol. 32, no. 18, pp. 5045–5052 (2018)

6. Kolanovic, M., et al.: Big data and AI strategies: machine learning and alternative data approach to investing. Am. Glob. Quant. Deriv. Strategy (2017)

7. Zhang, J., Zong, C.: Deep Learning: Fundamentals, Theory and Applications. Springer, Cham (2019). 111

8. Chen, L.-C., Zhu, Y., et al.: Computer Vision – ECCV 2018. In: European Conference on Computer Vision, vol. 833, Germany (2018)

9. Goldberg, Y.: A primer on neural network models for natural language processing. Comput. Sci. (2015)

10. Shi, Y., Hwang, M.Y., Lei, X.: End-to-end speech recognition using a high rank LSTM-CTC based model (2019)

11. Shan, C., Zhang, J., Wang, Y., et al.: Attention-based end-to-end models for small-footprint keyword spotting (2018)

12. Zhou, X., Li, J., Zhou, X.: Cascaded CNN-resBiLSTM-CTC: an end-to-end acoustic model for speech recognition (2018)

13. Abadi, M., Barham, P., Chen, J., et al.: TensorFlow: a system for large-scale machine learning (2016)

14. Britz, D., Goldie, A., Luong, M.T., et al.: Massive exploration of neural machine translation architectures (2017)

15. Gehring, J., Auli, M., Grangier, D., et al.: Convolutional sequence to sequence learning (2017)

16. Hochreiter, S.: Recurrent neural net learning and vanishing gradient. Int. J. Uncertainty Fuzziness Knowl. Based Syst. 6(2), 107–116 (1998)

17. Chien, J.T., Lu, T.W.: Deep recurrent regularization neural network for speech recognition. In: IEEE International Conference on Acoustics. IEEE (2015)

18. Wang, W., Deng, H.W.: Speaker recognition system using MFCC features and vector quantization. Chin. J. Sci. Instrum. 27(S), 2253–2255 (2006)

19. Sak, H., Senior, A., Beaufays, F.: Long short-term memory based recurrent neural network architectures for large vocabulary speech recognition. https://arxiv.org/abs/1402.1128v1 (5 February 2014)

20. Peng, Y., Niu, W.: Feature word selection based on word vector. Comput. Technol. Sci. 28(6), 7–11 (2018)

21. Hochreiter, S., Schmidhuber, J.: Long short-term memory. Neural Comput. 9(8), 1735–1780 (1997)

22. Kulkarni, G., Premraj, V., Ordonez, V., et al.: Baby talk: understanding and generating simple image descriptions. IEEE Trans. Pattern Anal. Mach. Intell. 35(12), 2891–2903 (2013)

23. Hong-Bin, Z., Dong-Hong, J., Lan, Y., et al.: Product image sentence annotation based on gradient kernel feature and N-gram model. Comput. Sci. 43(5), 269–273, 287 (2016)

24. Vinyals, O., Toshev, A., Bengio, S., et al.: Show and tell: lessons learned from the 2015 MSCOCO image captioning challenge. IEEE Trans. Pattern Anal. Mach. Intell. 39(4), 652–663 (2016)

25. Prabhavalkar, R., Alsharif, O., Bruguier, A., McGraw, I.: On the compression of recurrent neural networks with an application to LVCSR acoustic modeling for embedded speech recognition. https://arxiv.org/abs/1603.08042 (25 March 2016)

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Zhang, L., Zhang, H., Guo, J., Ji, D., Liu, Q., Xie, C. (2020). Speech Evaluation Based on Deep Learning Audio Caption. In: Chao, KM., Jiang, L., Hussain, O., Ma, SP., Fei, X. (eds) Advances in E-Business Engineering for Ubiquitous Computing. ICEBE 2019. Lecture Notes on Data Engineering and Communications Technologies, vol 41. Springer, Cham. https://doi.org/10.1007/978-3-030-34986-8_4

DOI: https://doi.org/10.1007/978-3-030-34986-8_4

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-34985-1

Online ISBN: 978-3-030-34986-8

eBook Packages: Intelligent Technologies and Robotics (R0)