Abstract

This paper introduces a new vertex sampling method for big complex graphs based on spectral sparsification, a technique that reduces the number of edges in a graph while retaining its structural properties. More specifically, our method reduces the number of vertices in a graph while retaining its structural properties, by selecting vertices with high effective resistance values. Extensive experimental results using graph sampling quality metrics, visual comparison, and shape-based metrics confirm that our new method significantly outperforms random vertex sampling and degree centrality based sampling.

Research supported by ARC Discovery Project.


1 Introduction

Big complex networks are abundant in many application domains, such as the internet, finance, social networks, and systems biology. Examples include web graphs, AS graphs, Facebook networks, Twitter networks, protein-protein interaction networks, and biochemical pathways. However, the analysis and visualization of big complex networks are extremely challenging due to their scale and complexity.

Graph sampling methods have been used to reduce the size of graphs. Popular graph sampling methods include random vertex sampling, random edge sampling, random path sampling, and random walk. However, graph samples computed by random sampling techniques often fail to preserve the connectivity and the important global skeletal structure of the original graph [16, 18].

In this paper, we introduce a new method called Spectral Vertex (SV) sampling for computing graph samples. It is based on spectral sparsification, a technique introduced by Spielman and Teng [15] that reduces the number of edges in a graph while retaining its structural properties. Roughly speaking, we select vertices with high effective resistance values [15].

Using real-world benchmark graph data sets with different structures, extensive experimental results based on graph sampling quality metrics, visual comparison with various graph layouts, and shape-based metrics confirm that our new method SV significantly outperforms Random Vertex sampling (RV) and Degree Centrality (DC) based sampling. For example, Fig. 1 compares the graph samples of the gN1080 graph at a 25% sampling ratio, computed by the RV, DC, and SV methods. The SV sample clearly retains the structure of the original graph.

2 Related Work

2.1 Spectral Sparsification Approach

Spielman and Teng [15] introduced spectral sparsification, which samples edges to find a subgraph that preserves the structural properties of the original graph. More specifically, they proved that every n-vertex graph has a spectral approximation with \(O(n \log n)\) edges, and presented a stochastic sampling method using the concept of effective resistance, which is closely related to the commute distance. The commute distance between two vertices u and v is the expected time that a random walker takes to travel from vertex u to vertex v and back [15].

More formally, a sparsification \(G'\) of a graph G is a subgraph of G whose edge density is much smaller than that of G. Sparsification is typically achieved by stochastically sampling edges and has been studied extensively in graph mining, so many stochastic sampling methods are available [8, 10]. The most common are random vertex sampling (RV) and random edge sampling (RE): each vertex (resp., edge) is chosen independently with probability p.
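A minimal sketch of RV and RE sampling as just described. It assumes, as a convention not stated in the text, that an RV sample is the subgraph induced by the chosen vertices; the function names and the `seed` parameter are hypothetical illustrations.

```python
import random


def random_vertex_sample(vertices, edges, p, seed=None):
    """Random vertex sampling (RV): keep each vertex independently with
    probability p and return the subgraph induced by the kept vertices."""
    rng = random.Random(seed)
    kept = {v for v in vertices if rng.random() < p}
    # Induced subgraph (assumption): an edge survives only if both endpoints were kept.
    kept_edges = [(u, v) for (u, v) in edges if u in kept and v in kept]
    return kept, kept_edges


def random_edge_sample(edges, p, seed=None):
    """Random edge sampling (RE): keep each edge independently with probability p."""
    rng = random.Random(seed)
    return [e for e in edges if rng.random() < p]


if __name__ == "__main__":
    cycle = [(i, (i + 1) % 10) for i in range(10)]  # a 10-vertex cycle
    print(random_vertex_sample(range(10), cycle, p=0.5, seed=1))
    print(random_edge_sample(cycle, p=0.5, seed=1))
```

Because every vertex is decided independently of the graph structure, nothing prevents the sample from breaking into many small pieces, which is the weakness discussed next.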

Stochastic sampling methods have also been investigated empirically in the context of visualization of large graphs [13, 16]. However, graph samples computed by random sampling techniques often fail to preserve the connectivity and important structure of the original graph [16].

2.2 Graph Sampling Quality Metrics

There are a number of quality metrics for graph sampling [8,9,10]; here we explain some of the most popular ones, used in [18].

(i) Degree Correlation (Degree), also known as degree assortativity, is a basic structural metric [11]. It measures the tendency of vertices to link to other vertices of similar degree; a positive value is called positive degree correlation.

(ii) Closeness Centrality (Closeness) is a centrality measure of a vertex based on the sum of the lengths of the shortest paths between the vertex and all other vertices in the graph; the closeness of a vertex is the reciprocal of this sum [5].

(iii) Largest Connected Component (LCC) is the size of the largest connected component of a graph. It measures how the fraction of vertices in the largest connected component changes as links are removed [7].

(iv) Average Neighbor Degree (AND) is the average degree of the neighbors of a vertex [1]. For a vertex \(v_i\), it is defined as \(\frac{1}{|N(v_i)|} \sum _{v_j \in N(v_i)} d(v_j)\), where \(N(v_i)\) is the set of neighbors of \(v_i\) and \(d(v_j)\) is the degree of vertex \(v_j\).
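The four metrics can be sketched in plain Python on an adjacency map. This is an illustrative version under stated assumptions, not the implementation used in [18]: closeness is normalized as (k - 1) divided by the total distance to the k vertices reachable from v (including v), and degree assortativity is computed as the Pearson correlation of the degrees at the two ends of each edge, counting every edge in both directions.

```python
from collections import deque


def adjacency(edges):
    """Build an undirected adjacency map {vertex: set of neighbours}."""
    adj = {}
    for u, v in edges:
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)
    return adj


def bfs_distances(adj, source):
    """Hop distances from source to every vertex reachable from it."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for w in adj[u]:
            if w not in dist:
                dist[w] = dist[u] + 1
                queue.append(w)
    return dist


def closeness(adj, v):
    """Normalized closeness: (k - 1) / (sum of distances to reachable vertices)."""
    dist = bfs_distances(adj, v)
    total = sum(dist.values())
    return (len(dist) - 1) / total if total else 0.0


def lcc_fraction(adj):
    """Fraction of vertices in the largest connected component."""
    seen, largest = set(), 0
    for v in adj:
        if v not in seen:
            component = bfs_distances(adj, v)
            seen.update(component)
            largest = max(largest, len(component))
    return largest / len(adj) if adj else 0.0


def average_neighbor_degree(adj, v):
    """AND(v) = (1/|N(v)|) * sum of d(w) over neighbours w of v."""
    nbrs = adj[v]
    return sum(len(adj[w]) for w in nbrs) / len(nbrs) if nbrs else 0.0


def degree_assortativity(adj):
    """Pearson correlation of endpoint degrees over both directions of each edge."""
    pairs = [(len(adj[u]), len(adj[w])) for u in adj for w in adj[u]]
    if not pairs:
        return float("nan")
    xs = [x for x, _ in pairs]
    ys = [y for _, y in pairs]
    mean_x, mean_y = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in pairs)
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return float("nan")  # undefined, e.g. for regular graphs
    return cov / (var_x * var_y) ** 0.5
```

For example, a star graph is perfectly disassortative (its hub links only to degree-1 leaves), so `degree_assortativity` returns -1 for it.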

2.3 Shape-Based Metrics for Large Graph Visualization

The shape-based metric [4], denoted Q in this paper, is a quality metric specifically designed for big graph visualization. It measures how well the shape of a visualization represents the graph. The shape of a drawing is captured by a shape graph; examples of shape graphs are proximity graphs such as the Euclidean minimum spanning tree, the relative neighbourhood graph, and the Gabriel graph.

More specifically, the metric computes the Jaccard similarity between a graph G and the proximity graph P computed from the vertex locations in a drawing D(G) of G. The shape-based metrics have been shown to be effective for comparing different visualizations of big graphs; see [4].
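To make the idea concrete, here is a small sketch under two assumptions of ours: the proximity graph is the Gabriel graph (one of the examples listed above), and the Jaccard similarity is taken per vertex, between its neighbourhood in G and in P, then averaged over all vertices. This is our reading of the approach; [4] should be consulted for the exact definition and the choice of shape graph.

```python
from itertools import combinations


def gabriel_graph(pos):
    """Gabriel graph of a drawing pos = {vertex: (x, y)}: p and q are adjacent
    iff no third point lies strictly inside the circle with diameter pq.
    Brute force, O(n^3): suitable for small examples only."""
    def d2(a, b):
        return (pos[a][0] - pos[b][0]) ** 2 + (pos[a][1] - pos[b][1]) ** 2

    adj = {v: set() for v in pos}
    for p, q in combinations(pos, 2):
        # r lies strictly inside the circle iff d2(p, r) + d2(q, r) < d2(p, q).
        if all(d2(p, r) + d2(q, r) >= d2(p, q) for r in pos if r not in (p, q)):
            adj[p].add(q)
            adj[q].add(p)
    return adj


def shape_metric(graph_adj, shape_adj):
    """Mean over vertices of the Jaccard similarity between a vertex's
    neighbourhood in G and its neighbourhood in the shape (proximity) graph."""
    total = 0.0
    for v, nbrs in graph_adj.items():
        shape_nbrs = shape_adj.get(v, set())
        union = nbrs | shape_nbrs
        # Convention (assumption): two empty neighbourhoods count as identical.
        total += len(nbrs & shape_nbrs) / len(union) if union else 1.0
    return total / len(graph_adj)
```

For three collinear points drawn at (0, 0), (1, 0), and (2, 0), the Gabriel graph is the path a-b-c, so a path graph scores Q = 1 while a triangle scores Q = 2/3: the drawing hides the edge between the two end points.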

3 Spectral Vertex Sampling

In this section, we introduce a new method for computing graph samples using spectral vertex (SV) sampling; in effect, we sample vertices with high effective resistance values [15]. Let \(G=(V, E)\) be a graph with vertex set V (\(n=|V|\)) and edge set E (\(m=|E|\)). The adjacency matrix of G is the \(n \times n\) matrix A, indexed by V, such that \(A_{uv} = 1\) if \((u,v) \in E\) and \(A_{uv} = 0\) otherwise. The degree matrix D of G is the diagonal matrix in which \(D_{uu}\) is the degree of vertex u. The Laplacian of G is \(L = D - A\). The spectrum of G is the list \(\lambda _1, \lambda _2, \ldots , \lambda _n\) of eigenvalues of L.
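A small sketch of the Laplacian \(L = D - A\) as a dense matrix, assuming vertices are labelled 0..n-1 (an illustrative convention). It also checks the standard identity \(x^{T} L x = \sum _{(u,v) \in E} (x_u - x_v)^2\), which shows that L is positive semidefinite (all \(\lambda _i \ge 0\)) and that the all-ones vector gives 0, so the smallest eigenvalue is 0.

```python
def laplacian(n, edges):
    """Dense Laplacian L = D - A of an undirected simple graph on 0..n-1."""
    L = [[0] * n for _ in range(n)]
    for u, v in edges:
        L[u][v] -= 1  # off-diagonal entries: -A_uv
        L[v][u] -= 1
        L[u][u] += 1  # diagonal entries: D_uu = deg(u)
        L[v][v] += 1
    return L


def quadratic_form(L, x):
    """Compute x^T L x."""
    n = len(L)
    return sum(x[i] * L[i][j] * x[j] for i in range(n) for j in range(n))


if __name__ == "__main__":
    edges = [(0, 1), (1, 2), (0, 2), (2, 3)]  # triangle with a pendant vertex
    L = laplacian(4, edges)
    x = [1, 2, 4, 7]
    # Both values agree: x^T L x equals the sum over edges of (x_u - x_v)^2.
    print(quadratic_form(L, x), sum((x[u] - x[v]) ** 2 for u, v in edges))
```

Every row of L sums to zero, which is another way to see that the constant vector lies in its kernel.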

Suppose that we regard G as an electrical network in which each edge e is a 1-\(\varOmega \) resistor. The effective resistance r(e) of an edge \(e=(u,v)\) is the voltage drop between u and v when a unit current is injected at u and extracted at v. Effective resistance values can be computed from the Moore-Penrose pseudoinverse \(L^{+}\) of the Laplacian as \(r(e) = (\chi _u - \chi _v)^{T} L^{+} (\chi _u - \chi _v)\), where \(\chi _u\) is the indicator vector of vertex u [15].
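The text computes effective resistances from the Moore-Penrose inverse of the Laplacian. The sketch below swaps in an equivalent formulation for connected graphs: ground vertex v (potential 0), inject a unit current at u, solve the reduced (grounded) Laplacian system exactly over the rationals, and read off the potential at u. It is only meant for small illustrative graphs on vertices 0..n-1 (our assumption); big graphs require the near-linear-time approximation methods of [15].

```python
from fractions import Fraction


def solve_exact(M, b):
    """Solve M x = b exactly (Gauss-Jordan over rationals); M must be nonsingular."""
    n = len(M)
    A = [[Fraction(a) for a in row] + [Fraction(bi)] for row, bi in zip(M, b)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if A[r][col] != 0)
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(n):
            if r != col and A[r][col] != 0:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * c for a, c in zip(A[r], A[col])]
    return [A[i][n] / A[i][i] for i in range(n)]


def effective_resistance(n, edges, u, v):
    """Effective resistance between u and v in a connected graph whose edges
    are 1-ohm resistors, via the grounded Laplacian (vertex v grounded)."""
    if u == v:
        return Fraction(0)
    L = [[0] * n for _ in range(n)]
    for a, b in edges:
        L[a][b] -= 1
        L[b][a] -= 1
        L[a][a] += 1
        L[b][b] += 1
    keep = [i for i in range(n) if i != v]        # drop the grounded vertex
    reduced = [[L[i][j] for j in keep] for i in keep]
    current = [1 if i == u else 0 for i in keep]  # unit current injected at u
    potentials = solve_exact(reduced, current)
    return potentials[keep.index(u)]


def edge_resistances(n, edges):
    """Map every edge e to its effective resistance r(e)."""
    return {(a, b): effective_resistance(n, edges, a, b) for a, b in edges}
```

For a triangle with a pendant vertex attached, each triangle edge has r(e) = 2/3 and the pendant edge has r(e) = 1. As a sanity check, the edge resistances of a connected graph always sum to n - 1 (Foster's theorem); here 3 × 2/3 + 1 = 3.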

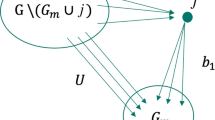

We now describe our new method SV (Spectral Vertex) for computing a spectral sample \(G'=(V', E')\) of \(G=(V, E)\). More specifically, we define two vertex-level variants of the effective resistance, r(v) and \(r_2(v)\), used by Algorithms SV and SV2 below. Let deg(v) denote the degree of a vertex v (i.e., the number of edges incident to v), and let \(E_v\) denote the set of edges incident to v.

1. Algorithm SV:
   (a) Compute \(r(v) = \sum _{e\in {E_v}}r(e)\).
   (b) Let \(V'\) consist of the \(n'\) vertices with the largest values of r(v).
   (c) Then \(G'\) is the subgraph of G induced by \(V'\).

2. Algorithm SV2:
   (a) Compute \(r_2(v) = \frac{\sum _{e\in {E_v}}r(e)}{deg(v)}\).
   (b) Let \(V'\) consist of the \(n'\) vertices with the largest values of \(r_2(v)\).
   (c) Then \(G'\) is the subgraph of G induced by \(V'\).

The running time of both algorithms is dominated by computing the effective resistance values, which can be approximated in near-linear time [15].
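Algorithms SV and SV2 can be sketched as follows, assuming the effective resistances r(e) are supplied as a dictionary keyed by the edge tuples in `edges` (computing them is a separate step). The tie-breaking rule (smaller vertex label first) and the score 0 for isolated vertices in SV2 are our assumptions; the algorithm description does not specify them.

```python
from collections import defaultdict
from fractions import Fraction


def spectral_vertex_sample(vertices, edges, resistance, n_prime, averaged=False):
    """SV (averaged=False) or SV2 (averaged=True) vertex sampling.

    Returns (V', E'), where E' is the edge set of the subgraph induced by V'.
    """
    r = defaultdict(int)    # r(v): sum of r(e) over the edges incident to v
    deg = defaultdict(int)  # deg(v)
    for e in edges:
        u, v = e
        r[u] += resistance[e]
        r[v] += resistance[e]
        deg[u] += 1
        deg[v] += 1

    def score(v):
        if not averaged:
            return r[v]
        # r_2(v) = r(v) / deg(v); isolated vertices score 0 (assumption).
        return r[v] / deg[v] if deg[v] else 0

    # Largest scores first; ties broken by vertex label (assumption).
    ranked = sorted(vertices, key=lambda v: (-score(v), v))
    kept = set(ranked[:n_prime])
    induced = [(u, v) for (u, v) in edges if u in kept and v in kept]
    return kept, induced


if __name__ == "__main__":
    edges = [(0, 1), (1, 2), (0, 2), (2, 3)]  # triangle with a pendant vertex
    # Effective resistances of this graph: 2/3 on triangle edges, 1 on the pendant edge.
    res = {(0, 1): Fraction(2, 3), (1, 2): Fraction(2, 3),
           (0, 2): Fraction(2, 3), (2, 3): Fraction(1)}
    print(spectral_vertex_sample(range(4), edges, res, 2))                 # SV
    print(spectral_vertex_sample(range(4), edges, res, 2, averaged=True))  # SV2
```

In this small example, SV ranks the degree-3 vertex 2 first (r = 7/3), whereas SV2 ranks the pendant vertex 3 first (r_2 = 1 versus 7/9 for vertex 2). Dividing by the degree thus pushes hubs down the ranking, which is consistent with the behaviour of SV2 reported in Sect. 4.1.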

4 Comparison with Random Vertex Sampling

The main hypothesis of our experiment is that the SV method performs better than the RV method in three ways: better sampling quality metrics, better shape-based metrics, and better visual preservation of the structure of the original graph. In particular, we expect SV to outperform RV by a large margin, especially at small sampling ratios.

To test this hypothesis, we implemented the two spectral vertex sampling algorithms SV and SV2, the random vertex (RV) sampling method, and the sampling quality metrics in Python. The Jaccard similarity index and the shape-based metric Q were implemented in C++. For graph layouts, we used the Visone Backbone layout [2, 12] and the yEd Organic layout [17]. We ran the experiments on a 13-inch MacBook Pro laptop with a 2.4 GHz Intel Core i5, 16 GB memory, and macOS High Sierra.

Table 1 shows the details of the data sets. The first data set consists of Benchmark graphs of two types (scale-free graphs and grid graphs); these are real-world graphs from Hachul's library [3] and the network repository [14]. The second is the GION data set, which consists of RNA sequence graphs with distinctive shapes [4]. The third is the Black-hole data set, which consists of synthetic, locally dense graphs that are difficult to sample.

4.1 Graph Sampling Quality Metrics

We used the popular quality metrics from [18]: Degree correlation (Degree), Closeness centrality (Closeness), Largest Connected Component (LCC), and Average Neighbor Degree (AND). To compare distributions, we used the Kolmogorov-Smirnov (KS) distance, the maximum absolute difference between two Cumulative Distribution Functions (CDFs) [6]. The KS distance lies between 0 and 1; a value closer to 0 indicates higher similarity between the CDFs, i.e., a better result.
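The two-sample KS distance between empirical CDFs can be sketched as follows. How the two samples are formed (for example, per-vertex metric values in the original graph versus in the graph sample) is an assumption of this illustration.

```python
from bisect import bisect_right


def ks_distance(sample_a, sample_b):
    """Two-sample Kolmogorov-Smirnov distance: the largest absolute gap
    between the empirical CDFs of the two samples (a value in [0, 1])."""
    a, b = sorted(sample_a), sorted(sample_b)

    def cdf(sorted_vals, x):
        # Fraction of the values that are <= x.
        return bisect_right(sorted_vals, x) / len(sorted_vals)

    # Both empirical CDFs are step functions that only jump at observed
    # values, so the supremum is attained at one of them.
    return max(abs(cdf(a, x) - cdf(b, x)) for x in a + b)
```

Identical samples give 0, completely separated samples give 1, and, for instance, `ks_distance([1, 2, 3, 4], [3, 4, 5, 6])` gives 0.5.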

We computed the KS distance values to analyze how well graph samples computed by SV and RV preserve the four sampling metrics (Closeness, AND, Degree, and LCC). Specifically, we averaged the KS distance values of the four metrics over each of the three data sets, for sampling ratios from 5% to 90%.

Figure 2(a) shows the average KS distance values (for each data set) of graph samples computed by SV (red), SV2 (blue), and RV (yellow), for the four sampling quality metrics (Closeness, AND, Degree, and LCC) and sampling ratios from 5% to 90%. As expected, the SV method consistently performed best, especially for the Benchmark graphs.

Unexpectedly, the SV2 method performed slightly worse than RV. This is because many real-world graphs have important high-degree vertices (i.e., hubs), and SV2 divides the resistance sum of each vertex by its degree; hubs therefore receive low \(r_2\) values and are not selected. For the rest of our experiments, we therefore mainly compare the SV and RV methods.

Fig. 2. (a) Comparison of sampling quality metrics: KS values of the sampling quality metrics (Closeness, AND, Degree, and LCC) for graph samples computed by SV (red), SV2 (blue), and RV (yellow), averaged over each data set for sampling ratios from 5% to 90%; lower KS values are better. (b) Comparison of the improvement: improvement of SV over RV, computed as \((KS(SV)/KS(RV))-1\) on the average KS distance for each sampling metric over all data sets; more negative values indicate that SV performs better than RV.

For a detailed per-data-set analysis, we computed the improvement of the SV method over the RV method as \((KS(SV)/KS(RV))-1\), i.e., the relative difference of the average KS distances, for each sampling metric. A more negative value indicates a larger advantage of SV over RV.
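The improvement measure is simple arithmetic on averaged KS distances; a minimal sketch follows. The input lists (one KS value per sampling ratio, for one metric and one data set) and the numbers in the usage line are hypothetical.

```python
def improvement(ks_sv, ks_rv):
    """Relative change (mean KS(SV) / mean KS(RV)) - 1.

    ks_sv, ks_rv: KS distances for the same metric and data set, one value
    per sampling ratio. Negative results mean SV has the smaller (better)
    average KS distance."""
    mean_sv = sum(ks_sv) / len(ks_sv)
    mean_rv = sum(ks_rv) / len(ks_rv)
    return mean_sv / mean_rv - 1


if __name__ == "__main__":
    # Hypothetical values: SV averages 0.2, RV averages 0.4.
    print(f"{improvement([0.1, 0.3], [0.4, 0.4]):+.0%}")
```

A value of -0.5 means that the average KS distance of SV is half that of RV, i.e., a 50% improvement.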

Figure 2(b) shows the detailed performance improvement of the SV method over the RV method for each data set (Benchmark, GION, and Black-hole graphs). Overall, for most data sets and most sampling quality metrics, SV consistently performs better than RV, confirming our hypothesis. More specifically, for the Benchmark data set, SV produces significantly better results than RV, with around 35% improvement for all metrics (up to 70% for Closeness). Similarly, for the GION data set, SV shows around 30% improvement over RV for all metrics (up to 50% for Closeness). For the Black-hole data set, SV shows around 25% improvement for all metrics (up to 80% for Closeness).

Overall, SV gives the largest improvement on the sampling metrics for Benchmark graphs, especially for scale-free graphs. For the GION and Black-hole graphs, SV also shows significant improvement on all sampling metrics. In summary, our experimental results with sampling metrics confirm that SV achieves a significant improvement (around 30%) over RV, confirming our hypothesis.

4.2 Visual Comparison

We experimented with a number of graph layouts available in various graph layout tools, including yEd [17] and Visone [2]. We found that many graph layouts gave similar results for our data sets, except the Backbone layout from Visone, which was designed by Nocaj et al. [12] specifically to untangle hairball drawings of large complex networks. Therefore, we report two graph layouts that gave different shapes: the Backbone layout from Visone, and the Organic layout from yEd, which gave shapes similar to the other layouts we considered. We further observed that the Backbone layout shows better structure for Benchmark graphs (i.e., real-world graphs), especially scale-free graphs, while the Organic layout produces better shapes for Black-hole graphs (i.e., synthetic graphs).

We conducted a visual comparison of graph samples computed by the SV and RV methods for all data sets, using both the Organic and Backbone layouts. Figures 3 and 4 show the visual comparison of graph samples computed by SV and RV. We used the Backbone layout for Benchmark graphs, and the Organic layout for the GION and Black-hole graphs. Overall, SV produces graph samples with significantly better connectivity and a structure more similar to the original graph than RV.

In summary, visual comparison of samples computed by SV and RV using the Backbone and Organic layouts confirms that SV produces samples with significantly better connectivity and a visual structure closer to that of the original graph than RV.

4.3 Shape-Based Metrics Comparison

To compare the quality of sampling for visualization, we computed the shape-based metric Q of graph samples computed by SV (\(Q_{SV}\)) and RV (\(Q_{RV}\)) on the three data sets. We expect the shape-based metric values to increase as the sampling ratio increases.

To better compare the shape-based metrics of SV and RV, we use the ratio \(Q_{SV}/Q_{RV}\); values above 1 mean that SV performs better than RV.

Figure 5 shows the average shape-based metric ratio \(Q_{SV}/Q_{RV}\) per data set, using the Backbone (red) and Organic (blue) layouts. The x-axis shows the sampling ratio from 5% to 90%, and the y-axis shows the value of the ratio. As expected, for most data sets the ratio is significantly above 1, especially for sampling ratios from 5% to 25%. Overall, the Backbone layout shows better performance than the Organic layout, consistently across all data sets (Benchmark, GION, and Black-hole graphs).

In summary, SV performs significantly better than RV in shape-based metrics, especially at low sampling ratios, confirming our hypothesis. The improvement in shape-based metrics by SV over RV is significantly larger with the Backbone layout than with the Organic layout.

5 Comparison with Degree Centrality Based Sampling

In this section, we compare the SV method with the Degree Centrality based sampling method (DC), using shape-based metrics and visual comparison of graph samples.

Degree centrality, introduced by Freeman [5], is one of the simplest centrality measures in network analysis. For DC sampling, we compute the degree centrality of each vertex and select the vertices with the largest degree centrality values to form the graph sample.
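A minimal sketch of DC sampling. It assumes, by analogy with SV, that the sample is the subgraph induced by the selected vertices, normalizes degree centrality as deg(v)/(n - 1) (normalization does not change the ranking), and breaks ties by vertex label; these choices are ours, not taken from the text.

```python
from collections import Counter


def degree_centrality_sample(vertices, edges, n_prime):
    """DC sampling: keep the n_prime vertices with the largest degree
    centrality deg(v) / (n - 1) and return the induced subgraph."""
    vertices = list(vertices)
    n = len(vertices)
    deg = Counter()
    for u, v in edges:
        deg[u] += 1
        deg[v] += 1
    centrality = {v: deg[v] / (n - 1) if n > 1 else 0.0 for v in vertices}
    # Highest centrality first; ties broken by vertex label (assumption).
    ranked = sorted(vertices, key=lambda v: (-centrality[v], v))
    kept = set(ranked[:n_prime])
    return kept, [(u, v) for (u, v) in edges if u in kept and v in kept]
```

Because DC looks only at degrees, it tends to pick hubs and their dense surroundings, which matches the locally dense samples described in the visual comparison.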

The main hypothesis of this investigation is that the SV method performs better than the DC method in two ways: better shape-based metrics, and better visual preservation of the structure of the original graph. In particular, we expect SV to outperform DC by a large margin, especially at small sampling ratios.

5.1 Visual Comparison

We conducted a visual comparison of graph samples computed by the SV and the DC methods for all data sets, using both Organic and Backbone layouts. We used the Backbone layout for Benchmark graphs and the Organic layout for the GION graphs and the Black-hole graphs.

Figures 6 and 7 show the visual comparison of graph samples computed by SV and DC with sampling ratios of 25% and 30%. Overall, SV produces graph samples with significantly better connectivity and a global structure more similar to the original graph than DC. In contrast, DC produces graph samples that capture locally dense parts of the original graph rather than its global structure, especially for the Black-hole and GION graphs.

In summary, visual comparison of graph samples computed by SV and DC on the Benchmark, GION, and Black-hole data sets, using the Backbone and Organic layouts, confirms that SV produces graph samples with significantly better connectivity and a global visual structure closer to that of the original graph than DC.

5.2 Shape-Based Metrics Comparison: SV, DC and RV

We now present the overall comparison of the SV, RV, and DC methods using the shape-based metrics. Figure 8 summarizes the average shape-based metric ratios \(Q_{SV}/Q_{RV}\) (red) and \(Q_{DC}/Q_{RV}\) (blue) per data set, using the Backbone layout. Values above 1 mean that SV (resp., DC) performs better than RV.

Overall, SV performs significantly better than DC, consistently across all data sets, especially at small sampling ratios. DC performs slightly better than RV for the Benchmark graphs when the sampling ratio is small, but mostly worse than RV for the GION and Black-hole graphs.

In summary, experiments with shape-based metrics show that SV outperforms both DC and RV, and that DC performs slightly worse than RV overall.

6 Conclusion and Future Work

In this paper, we presented a new spectral vertex sampling method, SV, for drawing large graphs, based on the effective resistance values of the vertices.

Our extensive experimental results using the graph sampling quality metrics on both benchmark real-world graphs and synthetic graphs show a significant improvement (around 30%) by the SV method over Random Vertex (RV) sampling. Visual comparison using the Backbone and Organic layouts shows that SV preserves the structure of the original graph significantly better, with better connectivity, than both RV and Degree Centrality (DC) based sampling, especially at low sampling ratios. Our experiments using shape-based metrics also confirm a significant improvement by SV over the RV and DC methods, especially for scale-free graphs.

For future work, we plan to improve the performance of the SV method, by integrating graph decomposition techniques and network analysis methods.

References

[1] Barrat, A., Barthelemy, M., Pastor-Satorras, R., Vespignani, A.: The architecture of complex weighted networks. Proc. Natl. Acad. Sci. USA 101(11), 3747–3752 (2004)

[2] Brandes, U., Wagner, D.: Analysis and visualization of social networks. In: Graph Drawing Software, pp. 321–340 (2004)

[3] Davis, T.A., Hu, Y.: The University of Florida sparse matrix collection. ACM Trans. Math. Softw. 38(1), 1–25 (2011)

[4] Eades, P., Hong, S.-H., Nguyen, A., Klein, K.: Shape-based quality metrics for large graph visualization. J. Graph Algorithms Appl. 21(1), 29–53 (2017)

[5] Freeman, L.C.: Centrality in social networks conceptual clarification. Soc. Netw. 1(3), 215–239 (1978)

[6] Gammon, J., Chakravarti, I.M., Laha, R.G., Roy, J.: Handbook of Methods of Applied Statistics (1967)

[7] Hernández, J.M., Van Mieghem, P.: Classification of graph metrics. Delft University of Technology: Mekelweg, The Netherlands, pp. 1–20 (2011)

[8] Hu, P., Lau, W.C.: A survey and taxonomy of graph sampling. arXiv preprint arXiv:1308.5865 (2013)

[9] Lee, S.H., Kim, P.-J., Jeong, H.: Statistical properties of sampled networks. Phys. Rev. E 73(1), 016102 (2006)

[10] Leskovec, J., Faloutsos, C.: Sampling from large graphs. In: Proceedings of the 12th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp. 631–636 (2006)

[11] Newman, M.E.J.: Mixing patterns in networks. Phys. Rev. E 67(2), 026126 (2003)

[12] Nocaj, A., Ortmann, M., Brandes, U.: Untangling the hairballs of multi-centered, small-world online social media networks. J. Graph Algorithms Appl. 19(2), 595–618 (2015)

[13] Rafiei, D.: Effectively visualizing large networks through sampling. In: VIS 05. IEEE Visualization, pp. 375–382 (2005)

[14] Rossi, R.A., Ahmed, N.K.: The network data repository with interactive graph analytics and visualization. In: AAAI 2015 Proceedings of the Twenty-Ninth AAAI Conference on Artificial Intelligence, pp. 4292–4293 (2015)

[15] Spielman, D.A., Teng, S.-H.: Spectral sparsification of graphs. SIAM J. Comput. 40(4), 981–1025 (2011)

[16] Wu, Y., Cao, N., Archambault, D.W., Shen, Q., Qu, H., Cui, W.: Evaluation of graph sampling: a visualization perspective. IEEE Trans. Visual Comput. Graph. 23(1), 401–410 (2017)

[17] yWorks GmbH: yEd graph editor (2018). https://www.yworks.com/yed

[18] Zhang, F., Zhang, S., Wong, P.C., Edward Swan II, J., Jankun-Kelly, T.: A visual and statistical benchmark for graph sampling methods. In: Proceedings IEEE Visual Exploring Graphs Scale, October 2015


Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Hu, J., Hong, SH., Eades, P. (2020). Spectral Vertex Sampling for Big Complex Graphs. In: Cherifi, H., Gaito, S., Mendes, J., Moro, E., Rocha, L. (eds) Complex Networks and Their Applications VIII. COMPLEX NETWORKS 2019. Studies in Computational Intelligence, vol 882. Springer, Cham. https://doi.org/10.1007/978-3-030-36683-4_18


DOI: https://doi.org/10.1007/978-3-030-36683-4_18


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-36682-7

Online ISBN: 978-3-030-36683-4

eBook Packages: Intelligent Technologies and Robotics (R0)