Abstract

Herbicide use is rising globally to enhance crop yield and meet ever-increasing food demand, with adverse impacts on the environment and the biosphere. To rationalize herbicide use, variable rate application based on weed density mapping is a promising technique. Estimation of weed densities depends on precise detection and mapping of weeds in the field. Recently, semantic segmentation has been studied in precision agriculture because of its ability to detect and segment objects in images. However, its application is limited because labelling the pixels in agricultural images is extremely difficult and time consuming. To accelerate the labelling process for semantic segmentation, a two-step manual labelling procedure is proposed in this paper. The proposed method is tested on oat field imagery. It shows improved intersection over union values because the semantic segmentation models are trained on a comparatively larger labelled real dataset. The method achieves an intersection over union of 81.28% for weeds and a mean intersection over union of 90.445%.

This work is supported by a Mitacs Accelerate grant entitled “Weed Classification and Density Estimation for Variable Rate Herbicide Prescription”. We would like to thank CropPro Consulting (https://www.croppro.ca), our industry partner, for their financial and in-kind support.



1 Introduction

Approximately one third of all pest-related agricultural production losses are attributed to weeds [1]. Weeds reduce crop yield by competing with host plants for nutrients, moisture and sunlight in an adaptive and competitive process [2]. Herbicide application is a common agricultural practice for mitigating the impact of weeds on crop yield. In the USA, herbicides constitute two thirds of all chemicals applied to agricultural fields [3]. The increasing trend in chemical application has raised environmental, biological and sustainability concerns, and recent studies have shown detrimental effects on human health [4]. To reduce the harmful effects of chemicals while ensuring the profitability of farmers, precision agriculture proposes site-specific variable rate application of herbicides, which requires accurate mapping of weed densities [5, 6]. Weed mapping on a large scale is a challenging task because of the spectral similarity of weeds and host plants.

Weed mapping techniques can be classified into two broad categories: interline and intraline. The former assumes that host plants are planted in rows and that everything outside the plant rows is weed [7]. This technique has the inherent flaw of misclassifying intra-row weeds as host plants and inter-row host plants as weeds. Intraline approaches attempt to address these flaws by extracting shape features of plants and classifying them into host plants and weeds. With the advent of deep learning techniques, image classification tasks have become easier because feature extraction is automated. In precision agriculture, different deep learning based classification techniques are being employed. Semantic segmentation is a promising pixel-level classification technique for weed density mapping. The bottleneck for this technique is the labelling of data at pixel level, which is time consuming. Recent works have concentrated on training semantic segmentation models on synthetic data and then applying them to real data. However, models trained on synthetic data do not generalize well to real datasets.

In this paper, semantic segmentation is applied to images acquired from oat fields in Saskatchewan for weed density estimation. The paper makes the following contributions:

1. It proposes a two-step manual labelling procedure for pixels in agricultural images.

2. Semantic segmentation is employed on real oat field imagery for both training and testing.

The proposed methodology achieves an Intersection Over Union (IOU) value of 81.28% for weeds and a Mean Intersection Over Union (MIOU) value of 90.445%. The remainder of the paper is organized as follows: Sect. 2 surveys related work, Sect. 3 explains the methodology, Sect. 4 discusses the results and Sect. 5 concludes the paper.

2 Related Work

The distribution of weeds in a field is not uniform. Their patchy character motivates site-specific weed management. Garibay et al. study site-specific weed control by thresholding weed density for herbicide spraying [8]. Site-specific weed control has not been readily adopted by farmers because of accuracy concerns, the unavailability of a robust weed recognition system and the limitations of spraying machinery [9]. Castaldi et al. use Unmanned Aerial Vehicle (UAV) imagery to explore the economic potential of patch spraying and its effects on crop yield [10]. Korres et al. study the relationship between soil properties and weed types with a focus on weeds along highways [11]. Metcalfe et al. demonstrate a correlation between weeds and soil properties and predict weed patches in a wheat field with the objective of making site-specific weed control more effective [12].

Apart from weed patch prediction based on soil properties, weed detection using computer vision techniques is also widely studied. Traditionally, weed detection involves the following four steps [13]:

1. RGB or multispectral image acquisition through UAV or ground moving equipment.

2. Background and foreground (vegetation) segmentation.

3. Extraction of features such as shape and colour from the images.

4. Classification of images based on the extracted features.

Saari et al. study UAV and ground equipment mounted sensors for higher resolution imagery [14]. For background segmentation, numerous techniques such as Otsu adaptive thresholding, clustering algorithms and principal component analysis are employed to separate vegetation from soil [5, 15, 16]. These colour-based segmentation techniques do not perform well under varying sunlight, weather conditions and shadows. Feature extraction and classification techniques can be further categorized into two main classes: interline and intraline approaches. Bah et al. implement the interline approach using a normalized Hough transform to detect crop rows [17]. This approach has the disadvantage of misclassifying interline crop plants as weeds and intraline weeds as host plants. In contrast, the intraline approach assumes that weeds can be both interline and intraline [18]. For this purpose, extra features such as texture and shape are extracted from weeds and host plants to classify images [19]. Lastly, different machine learning techniques such as support vector machines and artificial neural networks are used to classify images based on the extracted features [20].

Deep learning has emerged as a powerful machine learning tool in the field of computer vision because of its ability to extract features automatically [21]. Dyrmann et al. detect the location of monocot and dicot weeds in cereal field images using Convolutional Neural Networks (CNN) [22]. Yu et al. apply deep convolutional networks such as VGGNet, GoogLeNet and DetectNet to detect weeds in turfgrass [23]. Semantic segmentation techniques are also being implemented. The bottleneck in semantic segmentation is pixel-wise labelling of images. Dyrmann et al. overcome this problem by synthesizing training images and labels, in which weeds and host plants are placed in randomly overlapping and non-overlapping configurations [24]. Potena et al. use a small representative dataset to label a large dataset for semantic segmentation [25]. To compensate for the unavailability of large labelled datasets for semantic segmentation, Milioto et al. feed vegetation indices as additional inputs to the segmentation model [26]. These studies lack real images that are fully labelled at pixel level for semantic segmentation, which is the focus of this work.

3 Methodology

The objective of the study is to estimate weed density for crops grown in the Canadian Prairies. The weed density map will be used for variable rate herbicide application. The approach adopted in this paper can be summarized in three steps. The first step is the acquisition of images, and the second is labelling the pixels in a two-step procedure. The third step is to train a semantic segmentation model for automating weed mapping and weed density calculation. The following subsections give details about these steps.

3.1 Two Step Manual Labelling

For deep learning applications in precision agriculture, large numbers of labelled agricultural images are not available [27]. Semantic segmentation requires images to be labelled at pixel level, which is time consuming. In this study, the focus is on developing an efficient and effective way of labelling RGB images. A two-step manual labelling procedure is proposed as follows.

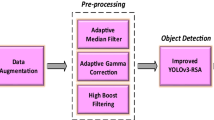

Background Removal Using Maximum Likelihood Segmentation. In the first step, images are preprocessed by segmenting background and foreground using Maximum Likelihood Segmentation (MLS) [28]. Background removal is performed for two reasons: first, to label background pixels, and second, to facilitate manual labelling of weeds, since against a highly varied background some weed plants are likely to be missed during labelling. ArcGIS is used as the tool for this purpose. Rather than applying a single rule-based scheme to all images, we group similar images into batches and train MLS on each batch separately for background removal. MLS is applied in batches because the RGB images vary in leaf colour, light conditions, soil colour, soil moisture content and the mix of dead plants, and some images contain the shadow of the sensing equipment. Figure 1 shows instances of these variations in the images.
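The idea of per-batch maximum likelihood background removal can be sketched as follows. This is a minimal NumPy illustration, not the ArcGIS tool used in the study: it assumes one Gaussian colour model per class with equal priors, and the function names and the toy soil and vegetation colours are hypothetical.

```python
import numpy as np

def fit_gaussian(pixels):
    """Estimate the mean and covariance of RGB samples with shape (n, 3)."""
    mean = pixels.mean(axis=0)
    # A small ridge term keeps the covariance invertible for near-uniform samples.
    cov = np.cov(pixels, rowvar=False) + 1e-6 * np.eye(pixels.shape[1])
    return mean, cov

def log_likelihood(pixels, mean, cov):
    """Gaussian log-likelihood of each pixel, up to a constant shared by all classes."""
    diff = pixels - mean
    inv = np.linalg.inv(cov)
    mahalanobis = np.einsum("ij,jk,ik->i", diff, inv, diff)
    _, logdet = np.linalg.slogdet(cov)
    return -0.5 * (mahalanobis + logdet)

def vegetation_mask(image, soil_samples, veg_samples):
    """Boolean mask that is True where vegetation is more likely than soil."""
    flat = image.reshape(-1, 3).astype(float)
    ll_soil = log_likelihood(flat, *fit_gaussian(soil_samples))
    ll_veg = log_likelihood(flat, *fit_gaussian(veg_samples))
    return (ll_veg > ll_soil).reshape(image.shape[:2])

# Toy batch: training samples drawn around assumed soil and vegetation colours.
rng = np.random.default_rng(0)
soil = rng.normal([120, 90, 60], 10, size=(200, 3))
veg = rng.normal([60, 140, 50], 10, size=(200, 3))

# Toy image: left half soil-coloured, right half vegetation-coloured.
image = np.zeros((4, 4, 3))
image[:, :2] = [120, 90, 60]
image[:, 2:] = [60, 140, 50]
mask = vegetation_mask(image, soil, veg)
```

Fitting the two class models again for every batch of similar images, rather than once for the whole dataset, is what lets the classifier follow changes in soil colour, moisture and lighting.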

Manual Labelling. In the second step, minority class pixels are manually labelled using the Labelme software package [29]. Instead of labelling both crop and weeds, only weeds are labelled, on the assumption that they are the minority class in the images. The crop pixels are zeroed out like the background pixels in the first step. Minority class labelling dramatically reduces the time needed for manual labelling of pixels. Figure 2 shows an example of a manually labelled image.
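How the two steps combine into a two-class label mask can be sketched as below. The sketch assumes that each weed annotation is available as a list of (x, y) polygon vertices, as polygon labelling tools typically export, and that the vegetation mask from the first step is a boolean array. The helper names are hypothetical, and the rasterizer is a plain even-odd rule written for illustration.

```python
import numpy as np

def polygon_to_mask(points, height, width):
    """Rasterize a polygon given as (x, y) vertices using the even-odd rule."""
    ys, xs = np.mgrid[0:height, 0:width]
    px, py = xs + 0.5, ys + 0.5  # test pixel centres
    inside = np.zeros((height, width), dtype=bool)
    n = len(points)
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]
        # True where the edge crosses the horizontal line through the pixel centre.
        crosses = (y1 > py) != (y2 > py)
        with np.errstate(divide="ignore", invalid="ignore"):
            x_at = x1 + (py - y1) * (x2 - x1) / (y2 - y1)
        # Each crossing to the right of the pixel centre toggles inside/outside.
        inside ^= crosses & (px < x_at)
    return inside

def two_class_label(vegetation, weed_polygons):
    """Label weed pixels as 1; crop and background pixels both stay 0."""
    height, width = vegetation.shape
    label = np.zeros((height, width), dtype=np.uint8)
    for polygon in weed_polygons:
        label[polygon_to_mask(polygon, height, width) & vegetation] = 1
    return label

# Toy example: a 4x4 image that is all vegetation except one soil pixel.
vegetation = np.ones((4, 4), dtype=bool)
vegetation[1, 1] = False
square = [(1, 1), (3, 1), (3, 3), (1, 3)]  # hypothetical weed outline
label = two_class_label(vegetation, [square])
```

Intersecting each polygon with the vegetation mask means a loosely drawn outline does not turn soil pixels into weed labels, which is one reason coarse polygons around the minority class can be enough.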

3.2 Semantic Segmentation

Semantic segmentation has seen great progress in recent years thanks to the advent of deep learning techniques. Deep learning based semantic segmentation consists of encoding and decoding blocks. The encoding block downsamples the image and extracts features from it, and the decoding block upsamples to the target mask size. The network architecture of the encoder and decoder blocks is determined by a meta-architecture scheme such as UNET [30] or SegNet [31]. This paper compares UNET and SegNet on the given dataset. In UNET, the whole feature map is transferred from the encoder block to the decoder block, while in SegNet only the pooling indices are transferred. In both UNET and SegNet, the decoding blocks mirror the encoding blocks. Phased upsampling in UNET and SegNet improves the accuracy of the network [32].
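The difference between the two ways of passing information from encoder to decoder can be illustrated on a single feature map. The NumPy sketch below is a simplification with no learned layers: the SegNet-style path reuses the recorded max pooling positions for upsampling, while the UNET-style path stacks the full encoder feature map onto an upsampled map. Nearest-neighbour repetition stands in for the learned upsampling of a real network, and all function names are hypothetical.

```python
import numpy as np

def max_pool_with_indices(x):
    """2x2 max pooling of an (H, W) map; also return the argmax position in each window."""
    h, w = x.shape
    windows = x.reshape(h // 2, 2, w // 2, 2).transpose(0, 2, 1, 3)
    windows = windows.reshape(h // 2, w // 2, 4)
    return windows.max(axis=-1), windows.argmax(axis=-1)

def unpool_with_indices(pooled, indices):
    """SegNet-style upsampling: put each value back at its recorded position, zeros elsewhere."""
    ph, pw = pooled.shape
    windows = np.zeros((ph, pw, 4), dtype=pooled.dtype)
    rows, cols = np.indices((ph, pw))
    windows[rows, cols, indices] = pooled
    return windows.reshape(ph, pw, 2, 2).transpose(0, 2, 1, 3).reshape(ph * 2, pw * 2)

encoder_map = np.array([[1, 5, 2, 0],
                        [3, 4, 1, 7],
                        [9, 0, 6, 2],
                        [1, 2, 3, 8]], dtype=float)

pooled, indices = max_pool_with_indices(encoder_map)

# SegNet-style: only the pooling indices cross from encoder to decoder.
segnet_up = unpool_with_indices(pooled, indices)

# UNET-style: the whole encoder feature map is stacked with the upsampled map.
unet_up = np.stack([pooled.repeat(2, axis=0).repeat(2, axis=1), encoder_map])
```

The SegNet path carries one small integer per pooled value, whereas the UNET path carries the whole feature map, which costs more memory but preserves finer detail for the decoder.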

After semantic segmentation is performed on the images, weed density is estimated as the fraction of image pixels classified as weed:

\[ w_{d} = \frac{\text{weed pixels}}{\text{total pixels}} \]

Crop pixels are not separately classified because the objective of the study is to estimate weed density (\(w_{d}\)) for variable rate herbicide application. However, crop density (\(c_{d}\)) can be estimated by subtracting weed density from the background-segmented vegetation density (\(v_{d}\)), as given by the following equation:

\[ c_{d} = v_{d} - w_{d} \]

where \(v_d\) is the vegetation density and \(c_d\) is the crop density in the image.
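A short sketch of the density calculation is given below. It assumes that each density is the fraction of image pixels belonging to the class, that the vegetation mask comes from background removal and that the weed mask is the segmentation output. The function and variable names are hypothetical.

```python
import numpy as np

def densities(vegetation_mask, weed_mask):
    """Return (w_d, v_d, c_d) as fractions of the total number of pixels."""
    total = vegetation_mask.size
    v_d = vegetation_mask.sum() / total  # vegetation density
    w_d = weed_mask.sum() / total        # weed density
    c_d = v_d - w_d                      # crop density by subtraction
    return w_d, v_d, c_d

# Toy 4x5 image: 8 vegetation pixels, 2 of which are weed.
vegetation = np.zeros((4, 5), dtype=bool)
vegetation[:2, :4] = True
weed = np.zeros((4, 5), dtype=bool)
weed[0, :2] = True

w_d, v_d, c_d = densities(vegetation, weed)
```

If the predicted weed mask contains pixels outside the vegetation mask, the subtraction can underestimate crop density, so intersecting the two masks first may be preferable in practice.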

4 Results and Discussion

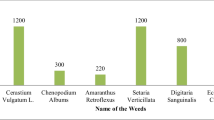

The study is conducted in collaboration with CropPro Consulting, Canada. RGB images are collected from three oat fields at an early growth stage using a quad-mounted Sony DSC-RX100M2 camera. A total of 2109 images are collected in a grid pattern of 60 ft by 80 ft. The dataset is augmented to 4702 images using different combinations of flipping, rotation, shearing, scaling, noise addition, colour variation and blurring. The original images are divided into four tiles of 800 \(\times \) 544 to deal with memory constraints, as downsampling would remove details from the images.
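Tiling can be sketched as follows. The text does not state the size of the frame that the four tiles are cut from, so the sketch assumes a 1600 \(\times \) 1088 image split into a 2 by 2 grid of tiles that are 800 pixels wide and 544 pixels high. The function name is hypothetical.

```python
import numpy as np

def split_into_tiles(image, tile_h=544, tile_w=800):
    """Split an (H, W, C) image into non-overlapping tiles in row-major order."""
    h, w = image.shape[:2]
    if h % tile_h or w % tile_w:
        raise ValueError("image size must be a multiple of the tile size")
    return [image[r:r + tile_h, c:c + tile_w]
            for r in range(0, h, tile_h)
            for c in range(0, w, tile_w)]

# Assumed frame size: 1088 rows by 1600 columns, three colour channels.
frame = np.zeros((1088, 1600, 3), dtype=np.uint8)
tiles = split_into_tiles(frame)
```

Unlike downsampling, slicing keeps every original pixel, so small weed leaves retain their shape in the tiles.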

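For semantic segmentation, UNET and SegNet are used with VGG16 and ResNet-50 as base models. To evaluate and fine-tune the models, the dataset is divided into training, validation and test sets with a split ratio of 70%, 15% and 15% respectively. It is then augmented to reduce overfitting and improve generalization. The trained models are evaluated on accuracy, precision, recall, F1 score, IOU, MIOU and Frequency Weighted Intersection Over Union (FWIOU). F1 score, IOU, MIOU and FWIOU are given by the following equations:

\[ F1 = \frac{2 \times \text{precision} \times \text{recall}}{\text{precision} + \text{recall}} \]

\[ IOU = \frac{TP}{TP + FP + FN} \]

\[ MIOU = \frac{1}{k}\sum_{i=1}^{k} IOU_{i} \]

\[ FWIOU = \frac{\sum_{i=1}^{k} w_{i}\, IOU_{i}}{\sum_{j=1}^{k} w_{j}} \]

where TP, FP and FN are the numbers of true positive, false positive and false negative pixels for a class, \(w_{i}\) and \(w_{j}\) are the weights of each class and k is the number of pixel classes.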
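These metrics can all be computed from a pixel-level confusion matrix. The sketch below uses the standard definitions and assumes that the class weights in FWIOU are the ground-truth pixel frequencies of each class. The function name and the toy counts are hypothetical.

```python
import numpy as np

def segmentation_metrics(conf):
    """conf[i, j] = number of pixels of true class i predicted as class j."""
    conf = np.asarray(conf, dtype=float)
    tp = np.diag(conf)
    fp = conf.sum(axis=0) - tp  # predicted as the class but belonging to another
    fn = conf.sum(axis=1) - tp  # belonging to the class but predicted as another
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    iou = tp / (tp + fp + fn)
    weights = conf.sum(axis=1) / conf.sum()  # class frequencies in the ground truth
    return {
        "accuracy": tp.sum() / conf.sum(),
        "f1": 2 * precision * recall / (precision + recall),
        "iou": iou,
        "miou": iou.mean(),
        "fwiou": (weights * iou).sum(),
    }

# Toy two-class example: class 0 = crop-background, class 1 = weed.
metrics = segmentation_metrics([[90, 2],
                                [3, 5]])
```

In this toy case accuracy is 0.95 even though the weed IOU is only 0.5, which shows why IOU-based metrics are more informative than accuracy when one class dominates the image.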

Table 1 summarizes the evaluation metrics on the test dataset. For comparison, the accuracy of a majority class classifier (MCC) is calculated to be 98.27%. The accuracy of the UNET model exceeds this by 1.30%, while that of the SegNet model exceeds it by 1.37%. SegNet performs comparatively better than UNET. The IOU for the weed class is 81.28% for the SegNet model, and its MIOU and FWIOU values are 90.445% and 99.29% respectively.
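The reported SegNet figures can be cross-checked with a few lines of arithmetic. The check assumes two pixel classes, that the 98.27% majority class accuracy equals the share of crop-background pixels in the test set, and that FWIOU weights each class IOU by its pixel frequency. The crop-background IOU derived here is implied by these assumptions rather than quoted from the text.

```python
# Values reported for the SegNet model.
miou = 0.90445
iou_weed = 0.8128
majority_share = 0.9827  # accuracy of the majority class classifier

k = 2  # assumed number of classes: crop-background and weed
# MIOU is the mean of the two class IOUs, so the other class IOU follows.
iou_crop_background = k * miou - iou_weed

# Frequency-weighted IOU under the assumed class frequencies.
fwiou = majority_share * iou_crop_background + (1 - majority_share) * iou_weed

print(f"implied crop-background IOU: {iou_crop_background:.4f}")
print(f"implied FWIOU: {fwiou:.4f}")
```

Under these assumptions the implied FWIOU rounds to 99.29%, which agrees with the reported value.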

In the developed methodology, models are trained so that crop pixels and background pixels are classified into one class and weed pixels into the other. This means that the semantic segmentation models should ideally learn the shape features of the crop and the spectral properties of the background and group them together into one class, while labelling the remaining pixels as weeds. There is no direct means of ascertaining what a model is actually learning, apart from the clues obtained by testing it on various images. If the model is learning something close to the ideal scenario, it should be able to map new types of weeds that were not included in the training data. To evaluate model performance on new types of weeds, it is tested on images of oat crop containing new weeds. Figure 3a contains a new weed type called Horsetail (highlighted), which the model has not seen before. The trained SegNet model successfully detects and maps this weed, as shown in Fig. 3b.

There are some cases where the models confuse the weed and crop-background classes. In blurry images, oat plants are mapped as weeds. The models fail to identify crop plants because of their indistinct shapes, so all vegetation in the image is labelled as weed. At the image preprocessing stage, training images were blurred to improve model performance on blurry images. However, when the model is confronted with blurry images like Fig. 4, it fails to distinguish crop and weed pixels.

5 Conclusion and Future Recommendations

Accurate mapping of weed and crop densities in the field provides the basis for variable rate herbicide application. Semantic segmentation is a promising technique for estimating these densities. Using the two-step manual labelling procedure, a relatively larger set of images can be labelled for model training, resulting in better MIOU and accuracy values. In the proposed methodology, the trained model eliminates crop pixels along with background pixels, and the remaining pixels are labelled as weed pixels. This has the advantage of detecting new weeds that were not seen by the model during training. In the performance comparison of UNET and SegNet, SegNet outperforms UNET. In future work, we plan to group different density zones to provide a basis for variable rate herbicide quantification.

References

Oerke, E.: Crop losses to pests. J. Agric. Sci. 144(1), 31–43 (2006)

Guo, L., Qiu, J., Li, L.F., Lu, B., Olsen, K., Fan, L.: Genomic clues for crop-weed interactions and evolution. Trends Plant Sci. 23, 1102–1115 (2018)

Osteen, C.D., Fernandez-Cornejo, J., et al.: Herbicide use trends: a backgrounder. Choices 31(4), 1–7 (2016)

Myers, J.P., et al.: Concerns over use of glyphosate-based herbicides and risks associated with exposures: a consensus statement. Environ. Health 15(1), 19 (2016)

Bah, M.D., Hafiane, A., Canals, R.: Deep learning with unsupervised data labeling for weeds detection on UAV images (2018)

Plant, R., Pettygrove, G., Reinert, W., et al.: Precision agriculture can increase profits and limit environmental impacts. Calif. Agric. 54(4), 66–71 (2000)

Slaughter, D., Giles, D.K., Downey, D.: Autonomous robotic weed control systems: a review. Comput. Electron. Agric. 61(1), 63–78 (2008)

Garibay, S.V., et al.: Extent and implications of weed spatial variability in arable crop fields. Plant Prod. Sci. 4(4), 259–269 (2001)

Christensen, S., et al.: Site-specific weed control technologies. Weed Res. 49(3), 233–241 (2009)

Castaldi, F., Pelosi, F., Pascucci, S., Casa, R.: Assessing the potential of images from unmanned aerial vehicles (UAV) to support herbicide patch spraying in maize. Precision Agric. 18(1), 76–94 (2017)

Korres, N., Norsworthy, J., Brye, K., Skinner Jr., V., Mauromoustakos, A.: Relationships between soil properties and the occurrence of the most agronomically important weed species in the field margins of eastern Arkansas-implications for weed management in field margins. Weed Res. 57(3), 159–171 (2017)

Metcalfe, H., Milne, A., Coleman, K., Murdoch, A., Storkey, J.: Modelling the effect of spatially variable soil properties on the distribution of weeds. Ecol. Model. 396, 1–11 (2019)

Oerke, E.C., Gerhards, R., Menz, G., Sikora, R.A.: Precision Crop Protection-the Challenge and Use of Heterogeneity, vol. 5. Springer, Heidelberg (2010). https://doi.org/10.1007/978-90-481-9277-9

Saari, H., et al.: Unmanned aerial vehicle (UAV) operated spectral camera system for forest and agriculture applications. In: Remote Sensing for Agriculture, Ecosystems, and Hydrology XIII, vol. 8174. International Society for Optics and Photonics (2011) 81740H

Rusu, R.B.: Clustering and segmentation. In: Rusu, R.B. (ed.) Semantic 3D Object Maps for Everyday Robot Manipulation, pp. 75–85. Springer, Heidelberg (2013). https://doi.org/10.1007/978-3-642-35479-3_6

Valiente-Gonzalez, J.M., Andreu-García, G., Potter, P., Rodas-Jorda, A.: Automatic corn (Zea mays) kernel inspection system using novelty detection based on principal component analysis. Biosyst. Eng. 117, 94–103 (2014)

Bah, M.D., Hafiane, A., Canals, R.: Deep learning with unsupervised data labeling for weed detection in line crops in UAV images. Remote Sens. 10(11), 1690 (2018)

García-Santillán, I.D., Pajares, G.: On-line crop/weed discrimination through the Mahalanobis distance from images in maize fields. Biosyst. Eng. 166, 28–43 (2018)

Wendel, A., Underwood, J.: Self-supervised weed detection in vegetable crops using ground based hyperspectral imaging. In: 2016 IEEE International Conference on Robotics and Automation (ICRA), pp. 5128–5135. IEEE (2016)

Pantazi, X.E., Tamouridou, A.A., Alexandridis, T., Lagopodi, A.L., Kashefi, J., Moshou, D.: Evaluation of hierarchical self-organising maps for weed mapping using uas multispectral imagery. Comput. Electron. Agric. 139, 224–230 (2017)

Greenspan, H., Van Ginneken, B., Summers, R.M.: Guest editorial deep learning in medical imaging: overview and future promise of an exciting new technique. IEEE Trans. Med. Imaging 35(5), 1153–1159 (2016)

Dyrmann, M., Skovsen, S., Laursen, M.S., Jørgensen, R.N.: Using a fully convolutional neural network for detecting locations of weeds in images from cereal fields. In: International Conference on Precision Agriculture. International Society of Precision Agriculture (2018)

Yu, J., Sharpe, S.M., Schumann, A.W., Boyd, N.S.: Deep learning for image-based weed detection in turfgrass. Eur. J. Agron. 104, 78–84 (2019)

Dyrmann, M., Mortensen, A.K., Midtiby, H.S., Jørgensen, R.N., et al.: Pixel-wise classification of weeds and crops in images by using a fully convolutional neural network. In: Proceedings of the International Conference on Agricultural Engineering, pp. 26–29, Aarhus, Denmark (2016)

Potena, C., Nardi, D., Pretto, A.: Fast and accurate crop and weed identification with summarized train sets for precision agriculture. In: Chen, W., Hosoda, K., Menegatti, E., Shimizu, M., Wang, H. (eds.) IAS 2016. AISC, vol. 531, pp. 105–121. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-48036-7_9

Milioto, A., Lottes, P., Stachniss, C.: Real-time semantic segmentation of crop and weed for precision agriculture robots leveraging background knowledge in CNNs. In: 2018 IEEE International Conference on Robotics and Automation (ICRA), pp. 2229–2235. IEEE (2018)

Kamilaris, A., Prenafeta-Boldú, F.: A review of the use of convolutional neural networks in agriculture. J. Agric. Sci. 156(3), 312–322 (2018)

Sharma, A., Boroevich, K.A., Shigemizu, D., Kamatani, Y., Kubo, M., Tsunoda, T.: Hierarchical maximum likelihood clustering approach. IEEE Trans. Biomed. Eng. 64(1), 112–122 (2016)

MIT: LabelMe (2019)

Ronneberger, O., Fischer, P., Brox, T.: U-Net: convolutional networks for biomedical image segmentation. In: Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F. (eds.) MICCAI 2015. LNCS, vol. 9351, pp. 234–241. Springer, Cham (2015). https://doi.org/10.1007/978-3-319-24574-4_28

Badrinarayanan, V., Kendall, A., Cipolla, R.: Segnet: a deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 39(12), 2481–2495 (2017)

Siam, M., Gamal, M., Abdel-Razek, M., Yogamani, S., Jagersand, M.: RTSeg: real-time semantic segmentation comparative study. In: 2018 25th IEEE International Conference on Image Processing (ICIP), pp. 1603–1607. IEEE (2018)


© 2020 Springer Nature Switzerland AG

Cite this paper

Asad, M.H., Bais, A. (2020). Weed Density Estimation Using Semantic Segmentation. In: Dabrowski, J., Rahman, A., Paul, M. (eds) Image and Video Technology. PSIVT 2019. Lecture Notes in Computer Science(), vol 11994. Springer, Cham. https://doi.org/10.1007/978-3-030-39770-8_13


DOI: https://doi.org/10.1007/978-3-030-39770-8_13


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-39769-2

Online ISBN: 978-3-030-39770-8

eBook Packages: Computer Science (R0)