Abstract

This paper presents an Extended Delay Learning based Remote Supervised Method, called EDL, which extends DL-ReSuMe, a learning method previously proposed by the authors for mapping spatio-temporal input spiking patterns into desired spike trains. EDL combines the weight adjustment of STDP and anti-STDP with a delay shift method similar to that of DL-ReSuMe, and also introduces the following distinct features to improve learning performance. Firstly, EDL adjusts each synaptic delay more than once to find a more precise value for it. Secondly, by initialising the delays at a value higher than zero at the start of learning, EDL can either increase or decrease the current value of a delay during a learning epoch. Thirdly, EDL adjusts the delays of a group of inputs instead of a single input. Being able to change each delay several times, and to adjust a group of delays together, helps EDL find more appropriate delay values for producing a desired spike train. Finally, EDL is not restricted to adjusting only one type of input (inhibitory or excitatory) at each learning time. Instead, it trains the delays of both inhibitory and excitatory inputs cooperatively to enhance learning performance.


1 Introduction

It is broadly agreed that spikes (a.k.a. pulses or action potentials), which are short and sudden increases in the voltage of a neuron, are used to transfer information between neurons. How information is encoded in spikes, however, is a controversial matter. Previously, it was assumed that the brain encodes information through spike rates [1]. However, neurobiological findings have revealed processing speeds in the brain that cannot be achieved by a rate coding scheme alone [2]. It has been shown that human visual processing can perform a recognition task in less than 100 ms using neurons in multiple layers (from the retina to the temporal lobe), with each neuron taking about 10 ms of processing time. This time window is too short for rate coding to operate [2, 3]. Such high speed processing can instead be performed using the precise timing of spikes [3, 4]. The ability to capture the temporal information of input signals makes spiking neurons more powerful than their non-spiking predecessors. However, the intrinsic complexity of spiking neurons calls for the development of robust and biologically more plausible learning rules [3, 5].

SpikeProp [6] is one of the first supervised learning methods for spiking neurons; it is inspired by the classical back-propagation algorithm. The multi-spike learning algorithm [7] is another example of a gradient-based method, i.e. one that relies on estimating the gradient of an error function. Gradient-based methods suffer from the silent neuron problem and from local minima. In [8], a supervised method based on an evolutionary strategy is proposed. Unlike the gradient-based methods, it is a derivative-free optimization method, and it has achieved better performance than SpikeProp. However, it is time consuming due to the iterative nature of evolutionary algorithms.

ReSuMe is a biologically plausible supervised learning algorithm for spiking neurons that can train a neuron to produce multiple spikes. It uses spike-timing-dependent plasticity (STDP) and anti-STDP to adjust synaptic weights [9]. STDP is driven by a remote teacher spike train, which potentiates the appropriate synaptic weights so that the neuron is forced to fire at the desired times. Using remote supervised teacher spikes enables ReSuMe to overcome the silent neuron problem of gradient-based learning methods such as SpikeProp. On-line processing and locality are two remarkable properties of ReSuMe, which works by adjusting synaptic weights.

Delay Learning Remote Supervised Method (DL-ReSuMe) [4] integrates the weight adjustment method with a delay shift approach to improve learning performance. DL-ReSuMe uses the STDP and anti-STDP of synapses to adjust synaptic weights, similar to ReSuMe [9]. DL-ReSuMe learns the precise timing of a desired spike train in a shorter time and with a higher accuracy than the well-known ReSuMe algorithm. ReSuMe has difficulty when a silent window, i.e. a short time window of the input spatiotemporal pattern without any input spikes, occurs around a desired spike. DL-ReSuMe, by contrast, uses delay adjustment to shift nearby input spikes to the appropriate time inside the silent window at the desired spike. An extended version of DL-ReSuMe, called Multi-DL-ReSuMe, has been proposed in [10] to train multiple neurons for the classification of spatiotemporal input patterns.

We have shown in our previous work [4] that the performance of DL-ReSuMe drops for high values of the total simulation time \( T_{t} \) and for comparatively high frequencies of the input or desired spike trains. This is because the numbers of input spikes and desired output spikes grow as \( T_{t} \) increases, which in turn increases the negative effect that a delay learned for one desired spike has on the other desired spikes. In DL-ReSuMe, each delay is adjusted only once, by considering one of the desired spikes and the input spikes shortly before it. The adjusted delay could have negative effects on the other, already learned, desired spikes. DL-ReSuMe has no mechanism to compensate for the negative effect of a delay adjustment by considering several desired spikes, nor to refine a delay through multiple adjustments. When the number of spikes is increased by increasing \( T_{t} \), this negative effect also increases and the performance of DL-ReSuMe drops, as shown in [4].

In this paper, we extend DL-ReSuMe by adding several new characteristics. The extended version of DL-ReSuMe, called EDL, can achieve higher performance than DL-ReSuMe and ReSuMe for different values of \( T_{t} \).

2 Synaptic Weight and Delay Learning in EDL

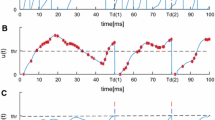

The structure of a neuron in EDL is shown in Fig. 1. Like DL-ReSuMe, EDL trains both the synaptic delays and weights. It is a heuristic method which trains a spiking neuron to map a spatiotemporal input pattern into a desired output spike train. The delay training is performed at the time of a desired spike and at the time of an undesired actual output spike.

EDL adjusts the delays so as to generate a set of desired spikes by increasing the neuron PSP at the instants of the desired spikes. To increase the PSP at a desired time, EDL increases the delays of excitatory inputs that have input spikes shortly before that time. The increment in delay brings the positive PSP produced by an excitatory input closer to the time of the desired spike, which raises the total PSP of the neuron at that time and may cause the neuron to reach the firing threshold and fire at the desired time. Additionally, EDL reduces the delays of inhibitory inputs that have input spikes shortly before the desired times. Reducing these delays increases the distance between the negative PSPs produced by the inhibitory inputs and the desired times. Thus the total PSP is increased at the desired time, which helps the neuron produce the desired spike.

EDL also tries to remove undesired actual output spikes by reducing the neuron PSP at the instants of those spikes. This is done by reducing the delays of excitatory inputs that have spikes shortly before an undesired output spike. The delay reduction lowers the PSP produced by these excitatory inputs at the time of the undesired output spike, which makes the neuron less likely to reach the firing threshold and hence cancels the undesired spike. Furthermore, EDL appropriately increases the delays of inhibitory inputs that have spikes shortly before an undesired output spike, to reduce the neuron PSP at that time. The delay adjustment continues over a number of learning epochs, and a delay can be adjusted many times to train the neuron to fire at the desired times.

Similar to DL-ReSuMe, EDL uses STDP to increase the neuron PSP at the times of desired spikes, i.e. it increases the weights of the (excitatory and inhibitory) input synapses that have spikes shortly before a desired time. EDL also uses anti-STDP at the time of an undesired output spike to reduce the weights of input synapses that have spikes shortly before it.
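The directions of the delay and weight changes described above can be summarised compactly. The following Python sketch only encodes the signs stated in the text; the names `Event`, `delay_direction` and `weight_direction` are hypothetical and are not taken from the authors' implementation.

```python
from enum import Enum


class Event(Enum):
    DESIRED = "desired"   # a desired spike time t^fd
    ACTUAL = "actual"     # an actual output spike time t^fa (undesired unless it matches t^fd)


def delay_direction(excitatory: bool, event: Event) -> int:
    """Direction of the delay change for an input that spiked shortly before the event.

    +1: delay increased (its PSP moves later, towards the event time).
    -1: delay decreased (its PSP moves earlier, away from the event time).
    """
    if event is Event.DESIRED:
        # Raise the PSP at a desired time.
        return 1 if excitatory else -1
    # Lower the PSP at an actual output time.
    return -1 if excitatory else 1


def weight_direction(event: Event) -> int:
    """STDP (+1) at desired times, anti-STDP (-1) at actual output times, for all inputs."""
    return 1 if event is Event.DESIRED else -1
```

For either synapse type the two events have opposite signs, so a desired spike and an actual output spike at the same instant cancel; this is the stopping condition discussed for (3) and (4).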

A local variable called the spike trace is used to calculate the value of a delay adjustment. The trace represents the effect of an input spike train on a synapse over a time interval. The trace of the \( i^{th} \) input spike train, \( s_{i} \left( t \right) \), is denoted \( x_{i} \left( t \right) \) and is described in (1). \( x_{i} \left( t \right) \) jumps to a saturated value \( A \) whenever a presynaptic spike arrives and then decreases exponentially according to the saturated model [4, 11]:

where \( \tau_{x} \) is the time constant of the exponential decay, the amplitude \( A \) is a constant, and \( del_{i} \) is the delay of the \( i^{th} \) input. The level of \( x_{i} \left( t \right) \) at the moment \( t \) can be used to determine the time of the last preceding input spike. \( x_{i} \left( t \right) \) can also be used to find the input synapse that has the nearest spike before the current time \( t \). Suppose that the \( m^{th} \) synapse (\( m \in \{1, 2, \ldots, N\} \)) has the nearest spike before the current time \( t \); consequently, out of all input synapses, it has the maximum trace at time \( t \), \( x_{m} \left( t \right) \), and this trace is less than \( A \) because the spike occurred before the current time. The delay \( dt_{m} \) that must be applied to synapse \( m \) to shift the effect of its spike to time \( t \) can be calculated using the inverse of the trace function given in (1). The result is shown in (2).

where \( t_{m}^{f} \) is the firing time of the \( f^{th} \) spike of input \( m \) before the current time \( t \), and \( del_{m} \) is the delay of the \( m^{th} \) input. The delays are adjusted at the times of desired spikes and actual output spikes, in proportion to the time interval between the closest input spike and the desired or actual output spike that follows it.
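As a sketch of how the trace is used, assume that the saturated model in (1) gives \( x_{i}(t) = A\,e^{-(t - t_{i}^{f} - del_{i})/\tau_{x}} \) after the most recent delayed arrival \( t_{i}^{f} + del_{i} \le t \) (and zero before any arrival), so that inverting it as in (2) yields \( dt_{m} = \tau_{x}\ln\left(A/x_{m}(t)\right) = t - t_{m}^{f} - del_{m} \). The Python below implements this assumed form; the function names are hypothetical and times are in ms.

```python
import math
from typing import List, Optional, Sequence


def trace(t: float, spike_times: Sequence[float], delay: float,
          tau_x: float, amp: float) -> float:
    """Assumed saturated trace x_i(t) of one input at time t (ms)."""
    arrivals = [s + delay for s in spike_times if s + delay <= t]
    if not arrivals:
        return 0.0  # no delayed spike has arrived yet
    # Saturation: each arrival resets the trace to amp, so only the latest one matters.
    return amp * math.exp(-(t - max(arrivals)) / tau_x)


def gap_from_trace(x: float, tau_x: float, amp: float) -> float:
    """Invert the trace: time between the last delayed arrival and t, i.e. dt_m in (2)."""
    if not 0.0 < x <= amp:
        raise ValueError("trace value must lie in (0, amp]")
    return tau_x * math.log(amp / x)


def closest_input(t: float, trains: Sequence[Sequence[float]], delays: Sequence[float],
                  tau_x: float, amp: float) -> Optional[int]:
    """Index m of the input whose delayed spike arrived most recently at or before t."""
    traces: List[float] = [trace(t, s, d, tau_x, amp) for s, d in zip(trains, delays)]
    if not traces or max(traces) == 0.0:
        return None
    return max(range(len(traces)), key=traces.__getitem__)
```

For example, with \( \tau_{x} = 5 \) ms and \( A = 1 \), inputs firing at 10 ms and 14 ms with zero delay have traces \( e^{-2} \) and \( e^{-1.2} \) at \( t = 20 \) ms, so the second input is selected and `gap_from_trace` returns 6 ms; adding that amount to its delay would make its spike arrive exactly at \( t \).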

The delay of the \( i^{th} \) input is adjusted at the instants of desired spikes, \( t^{fd} \), and at the instants of the neuron's actual output spikes, \( t^{fa} \). Suppose that the \( i^{th} \) input synapse is excitatory. The adjustment of the delay of the \( i^{th} \) excitatory input, \( dte_{i} \left( t \right) \), is calculated by (3).

where \( t^{fd} \) is the firing time of the \( fd^{th} \) spike in the desired spike train (\( fd = 1, 2, \ldots, N_{d} \), where \( N_{d} \) is the number of spikes in the desired spike train), and \( t^{fa} \) is the firing time of the \( fa^{th} \) spike in the neuron's actual output spike train (\( fa = 1, 2, \ldots, N_{a} \), where \( N_{a} \) is the number of spikes in the actual output spike train). \( xe_{m} \left( t^{fd} \right) \) is the trace of the excitatory input that has the closest input spike before the desired spike at \( t^{fd} \). The time interval between this closest input spike and the desired spike, \( dte_{m} \left( t^{fd} \right) \), can be calculated by substituting \( xe_{m} \left( t^{fd} \right) \) into (2). The positive delay adjustment at the desired time (\( t = t^{fd} \)) in (3) brings the excitatory PSP produced by the \( i^{th} \) input closer to the desired spike, which increases the total PSP at the desired time and can produce an actual output spike there. Similarly, the negative adjustment of the delays of excitatory inputs in (3) at \( t = t^{fa} \) moves the excitatory PSPs away from the output spike and tries to cancel it. When the learning algorithm has trained the neuron to produce an actual output spike at the time of a desired spike, i.e. \( t = t^{fd} = t^{fa} \), the increase and the decrease of the delay in (3) are equal, and the net delay adjustment is zero. Therefore, the delay adjustment stops when the neuron has learned to fire the desired spike train.
Equation (3) also shows that the maximum delay adjustment belongs to the input that has the closest spike before time \( t \); it equals \( dte_{m} \left( t \right) \) (when \( x_{i} \left( t \right) = xe_{m} \left( t \right) \)), and the delays of the other inputs are adjusted in proportion to their distance from time \( t \) (depending on \( x_{i} \left( t \right) \)).
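A minimal Python sketch of one reading of (3) at a single event time follows. It assumes that each excitatory input receives \( dte_{m}(t)\,x_{i}(t)/xe_{m}(t) \), added at a desired spike and subtracted at an actual output spike. This matches the proportionality described above but is not necessarily the authors' exact expression; the trace is taken to be the saturated form with amplitude `amp` and time constant `tau_x`, and the function name is hypothetical.

```python
import math
from typing import List, Sequence


def excitatory_delay_change(traces: Sequence[float], tau_x: float, amp: float,
                            at_desired: bool, at_actual: bool) -> List[float]:
    """One assumed reading of (3) at an event time t, for the excitatory inputs only.

    traces[i] is x_i(t) (0.0 if input i has no arrival before t). At a desired
    spike every delay is increased, at an actual output spike decreased, each by
    dte_m(t) * x_i(t) / xe_m(t), so the closest input moves by the full dte_m(t).
    """
    xe_m = max(traces, default=0.0)
    if xe_m <= 0.0:
        return [0.0] * len(traces)
    dte_m = tau_x * math.log(amp / xe_m)          # (2) applied to the closest input
    scaled = [dte_m * x / xe_m for x in traces]   # proportional to each input's trace
    net = float(at_desired) - float(at_actual)    # +1, -1, or 0 when t = t^fd = t^fa
    return [net * s for s in scaled]
```

With \( \tau_{x} = 5 \) ms, the input whose spike arrived 6 ms before a desired spike gets the full +6 ms, an input whose spike is older gets proportionally less, and calling the function with both `at_desired` and `at_actual` set returns all zeros, reproducing the cancellation at \( t = t^{fd} = t^{fa} \).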

If the \( i^{th} \) input synapse is inhibitory, its delay is reduced at the times of the desired spikes, so that the distance between each desired time and the inhibitory PSPs produced by the input spikes before it is increased. Conversely, the delay is increased at the times of actual output spikes to bring the inhibitory PSPs produced by the \( i^{th} \) input closer to the actual output times, according to (4).

where \( dti_{i} \left( t \right) \) is the delay adjustment of the \( i^{th} \) inhibitory input at time \( t \), and \( xi_{m} \left( t \right) \) is the trace of the \( m^{th} \) inhibitory input synapse, i.e. the one that has the closest input spike before time \( t \) among all inhibitory inputs with spikes before \( t \). \( dti_{m} \left( t \right) \) is the time distance between this closest inhibitory input spike and time \( t \), calculated by substituting \( xi_{m} \left( t \right) \) into (2). Equation (4) shows that the delay adjustment of the \( i^{th} \) input becomes smaller as \( x_{i} \left( t \right) \) decreases, i.e. as the time distance between its input spike and time \( t \) increases. The delay increment and decrement at time \( t \) are equal and cancel each other when an actual output spike is produced at the time of a desired spike, i.e. \( t = t^{fd} = t^{fa} \); in this situation the delay adjustment is stabilized. The delay adjustments are limited to a maximum amount \( dtMax \).
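The inhibitory case can be sketched the same way, with the signs reversed. In this Python sketch the proportional scaling \( x_{i}(t)/xi_{m}(t) \) is the same assumption as for the excitatory case, and the limit \( dtMax \) is interpreted, as an assumption, as clipping each individual adjustment to \( [-dtMax, dtMax] \); the function name is hypothetical.

```python
import math
from typing import List, Sequence


def inhibitory_delay_change(traces: Sequence[float], tau_x: float, amp: float,
                            at_desired: bool, at_actual: bool,
                            dt_max: float) -> List[float]:
    """One assumed reading of (4) at an event time t, for the inhibitory inputs only.

    Signs are the reverse of the excitatory case: delays shrink at a desired
    spike and grow at an actual output spike. Each change is scaled by
    x_i(t) / xi_m(t) and, as an assumption, clipped to [-dt_max, dt_max].
    """
    xi_m = max(traces, default=0.0)
    if xi_m <= 0.0:
        return [0.0] * len(traces)
    dti_m = tau_x * math.log(amp / xi_m)          # (2) for the closest inhibitory input
    net = float(at_actual) - float(at_desired)    # -1 at t^fd, +1 at t^fa, 0 if both
    changes = [net * dti_m * x / xi_m for x in traces]
    return [max(-dt_max, min(dt_max, c)) for c in changes]
```

With \( \tau_{x} = 5 \) ms, an inhibitory spike 20 ms before a desired time would call for a 20 ms reduction, which the clip limits to 15 ms when \( dtMax = 15 \) ms.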

3 Experimental Setup

The learning task is to train a neuron to fire a desired spike train in response to a spatiotemporal input pattern. The proposed algorithm trains the neuron over a number of learning epochs. The input and desired spikes are generated by a Poisson process, and once an input and output pair has been generated it is frozen for the duration of a learning run. In each learning run, which consists of a number of learning epochs, the learning parameters (synaptic weights and delays) are initialised at the start and then continuously updated to train the neuron to produce the desired spike train. Each run uses a different pair of a spatiotemporal input pattern and a single desired output spike train, drawn from random Poisson processes, and the average performance over a number of runs is reported. At the start of each learning run, the weights are initialised randomly and the delays are initialised to \( dtMax/2 \).
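The data generation and initialisation steps can be sketched with Python's standard `random` module. Poisson spike trains are drawn from exponential inter-spike intervals. The default sizes and rates follow Sect. 4 (400 inputs at 5 Hz, a 100 Hz desired train, \( dtMax \) = 15 ms) and the 20 %/80 % inhibitory/excitatory split follows Sect. 3, while the 500 ms duration, the uniform weight range and the choice of which synapses are inhibitory are hypothetical placeholders.

```python
import random
from dataclasses import dataclass
from typing import List


def poisson_train(rate_hz: float, duration_ms: float, rng: random.Random) -> List[float]:
    """Homogeneous Poisson spike train on [0, duration_ms), times in ms."""
    rate_per_ms = rate_hz / 1000.0
    times: List[float] = []
    t = rng.expovariate(rate_per_ms)  # exponential inter-spike intervals
    while t < duration_ms:
        times.append(t)
        t += rng.expovariate(rate_per_ms)
    return times


@dataclass
class LearningRun:
    inputs: List[List[float]]   # frozen spatiotemporal input pattern
    desired: List[float]        # frozen desired spike train
    excitatory: List[bool]      # synapse type per input
    weights: List[float]
    delays: List[float]


def new_run(n_inputs: int = 400, in_hz: float = 5.0, out_hz: float = 100.0,
            t_total_ms: float = 500.0, dt_max: float = 15.0,
            inhibitory_fraction: float = 0.2, seed: int = 0) -> LearningRun:
    rng = random.Random(seed)
    inputs = [poisson_train(in_hz, t_total_ms, rng) for _ in range(n_inputs)]
    desired = poisson_train(out_hz, t_total_ms, rng)
    n_inh = round(inhibitory_fraction * n_inputs)
    excitatory = [i >= n_inh for i in range(n_inputs)]          # hypothetical: first 20 % inhibitory
    weights = [rng.uniform(0.0, 1.0) for _ in range(n_inputs)]  # hypothetical init range
    delays = [dt_max / 2.0] * n_inputs                          # all delays start at dtMax/2
    return LearningRun(inputs, desired, excitatory, weights, delays)
```

Calling `new_run(seed=k)` for different `k` gives each learning run its own frozen input/desired pair, matching the per-run reset described above.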

During a learning epoch the delay adjustments of synapse i which are calculated by (3) or (4) are accumulated and the accumulated delay adjustment is added to the \( i^{th} \) input delay, \( del_{i} \). The new delay should be within [0, \( dtMax \)].
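The epoch-level delay update can be sketched as follows: the per-event changes from (3) or (4) are summed per synapse, added to \( del_{i} \), and the result is kept in \( [0, dtMax] \). Enforcing the range by clamping (rather than, for example, rejecting an out-of-range update) is an assumption, and the function name is hypothetical.

```python
from typing import List, Sequence


def apply_epoch_delay_updates(delays: Sequence[float],
                              per_event_changes: Sequence[Sequence[float]],
                              dt_max: float) -> List[float]:
    """Accumulate one epoch's delay changes per synapse, then update and clamp.

    per_event_changes holds one list of per-synapse changes for every desired or
    actual output spike time in the epoch, e.g. the outputs of (3) or (4).
    """
    totals = [0.0] * len(delays)
    for changes in per_event_changes:
        for i, change in enumerate(changes):
            totals[i] += change
    # Assumed enforcement of the [0, dtMax] range: clamp the updated delay.
    return [min(dt_max, max(0.0, d + total)) for d, total in zip(delays, totals)]
```

Starting from \( dtMax/2 \) leaves room for both increases and decreases, which is the motivation given in the abstract for initialising the delays above zero.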

The weights are updated by a delayed version of the ReSuMe rule, given in (5), in which the delays \( del_{i} \) are applied to the ReSuMe weight update.

where \( del_{i} \) is the delay of input \( i \). In the delayed version of ReSuMe, the term \( s_{i} \left( t - del_{i} - s \right) \) is used instead of the term \( s_{i} \left( t - s \right) \) used in ReSuMe. At the start of the training procedure, 20 % of the synapses are set as inhibitory and 80 % as excitatory, as in [12].
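The effect of the \( s_{i}(t - del_{i} - s) \) substitution can be illustrated with an event-driven ReSuMe-style update. This Python sketch assumes an exponential learning window \( a + A_{w}\,e^{-s/\tau_{w}} \) (parameters `a`, `amp` and `tau` are hypothetical) applied with a positive sign at desired spike times and a negative sign at actual output spike times; it is not a transcription of (5).

```python
import math
from typing import Sequence


def delayed_resume_dw(input_spikes: Sequence[float], delay: float,
                      desired: Sequence[float], actual: Sequence[float],
                      a: float = 0.0, amp: float = 1.0, tau: float = 5.0) -> float:
    """Epoch weight change of one synapse under an assumed delayed ReSuMe-style rule.

    An input spike at time s only counts once it arrives at s + delay, which is the
    s_i(t - del_i - s) substitution. a, amp and tau are hypothetical parameters of an
    exponential learning window a + amp * exp(-gap / tau).
    """
    def window(t: float) -> float:
        return a + sum(amp * math.exp(-(t - s - delay) / tau)
                       for s in input_spikes if s + delay <= t)

    potentiation = sum(window(t) for t in desired)  # STDP at desired spike times
    depression = sum(window(t) for t in actual)     # anti-STDP at actual output times
    return potentiation - depression
```

For an input spike at 10 ms and a desired spike at 20 ms, a 5 ms delay raises the potentiation from \( e^{-2} \) to \( e^{-1} \), while a 12 ms delay pushes the arrival past the desired time and removes the contribution entirely.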

4 Results and Discussion

The performances of EDL, DL-ReSuMe and ReSuMe are compared in Fig. 2. A spatiotemporal input pattern with 400 spike trains is used. Each input spike train is generated by a Poisson process, and the mean frequency of the input pattern is 5 Hz. The desired spike train is also produced by a Poisson process, with a mean frequency of 100 Hz. The total duration \( T_{t} \) of the input and output spike trains is varied from 100 to 1000 ms. The simulation is repeated for 20 independent runs, and the median over the 20 runs is shown in Fig. 2.

Fig. 2. Comparison of the performances of EDL, DL-ReSuMe and ReSuMe for different values of \( T_{t} \). The input spatiotemporal pattern and the desired spike train are produced by Poisson processes with frequencies of 5 Hz and 100 Hz, respectively. \( dtMax \) is set to 15 ms for both EDL and DL-ReSuMe. The synaptic delays are initialised to \( dtMax/2 = 7.5 \) ms for EDL and to 0 ms for DL-ReSuMe.

The results show that EDL outperforms ReSuMe for all values of \( T_{t} \) (up to 1000 ms). In addition, EDL and DL-ReSuMe perform identically when the simulation time is shorter than 500 ms, while EDL performs better for \( T_{t} \) longer than 500 ms. As with DL-ReSuMe, the performance of EDL drops beyond 500 ms, but less sharply. A possible explanation lies in the interaction between weight and delay learning. The weights are adjusted by STDP and anti-STDP, in which the timing of an input spike is important. For example, during STDP, if an input spike occurs shortly before a desired spike time, the corresponding weight is strongly potentiated. When a delay is applied to that input after the weight adjustment, the PSP produced by the input spike is delayed. A large enough delay can shift this PSP beyond the desired time, and because of the input's high weight (resulting from the earlier weight learning) it may cause an undesired output spike after the desired time; this can be considered a negative effect of delay adjustment on weight learning. A high value of \( T_{t} \) means that each delay adjustment affects a larger number of input spikes, which increases this negative effect and can contribute to the decrease in learning performance shown in Fig. 2.

5 Conclusion and Future Work

In this paper, DL-ReSuMe is extended to improve learning performance, resulting in the proposed EDL (Extended Delay Learning) method. EDL trains a neuron to fire at desired times in response to an input spatiotemporal spiking pattern, using the synergy between weight and delay training. This interplay between weights and delays helps the neuron fire at desired times while cancelling the spikes it fires at undesired times. Unlike in DL-ReSuMe, delays are changed for both inhibitory and excitatory inputs in EDL, and a synaptic delay can be changed several times. Multiple adjustments of a delay at various times give EDL the ability to assess the effect of a delay on various desired and actual output spikes, and then to reconcile these effects by finding an appropriate delay adjustment.

The learning performance of EDL was compared against DL-ReSuMe, developed by the authors, and the well-established ReSuMe method; the results show an overall increase in accuracy for different durations of the Poisson input spatiotemporal spiking pattern and desired spike train.

Future work will explore the application of EDL to a network of spiking neurons and its viability in learning classes of spatiotemporal spiking patterns.

References

1. Masquelier, T., Deco, G.: Learning and coding in neural networks. In: Principles of Neural Coding, pp. 513–526. CRC Press, Boca Raton (2013)

2. Thorpe, S., Delorme, A., Van Rullen, R.: Spike-based strategies for rapid processing. Neural Netw. 14, 715–725 (2001)

3. Vreeken, J.: Spiking neural networks, an introduction. Technical Report UU-CS, pp. 1–5 (2003)

4. Taherkhani, A., Belatreche, A., Li, Y., Maguire, L.P.: DL-ReSuMe: a delay learning based remote supervised method for spiking neurons. IEEE Trans. Neural Netw. Learn. Syst. PP, 1 (2015)

5. Taherkhani, A., Belatreche, A., Li, Y., Maguire, L.: A new biologically plausible supervised learning method for spiking neurons. In: Proceedings of ESANN, Bruges (Belgium) (2014)

6. Bohte, S.M., Kok, J.N., La Poutre, H.: Error-backpropagation in temporally encoded networks of spiking neurons. Neurocomputing 48, 17–37 (2002)

7. Xu, Y., Zeng, X., Han, L., Yang, J.: A supervised multi-spike learning algorithm based on gradient descent for spiking neural networks. Neural Netw. 43, 99–113 (2013)

8. Belatreche, A., Maguire, L., McGinnity, M.: Advances in design and application of spiking neural networks. Soft Comput. Fusion of Found. Methodol. Appl. 11, 239–248 (2007)

9. Ponulak, F., Kasiński, A.: Supervised learning in spiking neural networks with ReSuMe: sequence learning, classification, and spike shifting. Neural Comput. 22, 467–510 (2010)

10. Taherkhani, A., Belatreche, A., Li, Y., Maguire, L.P.: Multi-DL-ReSuMe: multiple neurons delay learning remote supervised method. In: The 2015 International Joint Conference on Neural Networks (IJCNN), Killarney, Ireland (2015)

11. Morrison, A., Diesmann, M., Gerstner, W.: Phenomenological models of synaptic plasticity based on spike timing. Biol. Cybern. 98, 459–478 (2008)

12. Izhikevich, E.M.: Polychronization: computation with spikes. Neural Comput. 18, 245–282 (2006)

Copyright information

© 2015 Springer International Publishing Switzerland

About this paper

Cite this paper

Taherkhani, A., Belatreche, A., Li, Y., Maguire, L.P. (2015). EDL: An Extended Delay Learning Based Remote Supervised Method for Spiking Neurons. In: Arik, S., Huang, T., Lai, W., Liu, Q. (eds) Neural Information Processing. ICONIP 2015. Lecture Notes in Computer Science, vol 9490. Springer, Cham. https://doi.org/10.1007/978-3-319-26535-3_22


DOI: https://doi.org/10.1007/978-3-319-26535-3_22


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-26534-6

Online ISBN: 978-3-319-26535-3

eBook Packages: Computer Science, Computer Science (R0)