Abstract

In this paper, we deal with the classification of visually similar objects, also known as fine-grained recognition. We consider both rigid and non-rigid types of objects. We investigate the classification performance of different combinations of bag-of-visual-words models to find a generalized set of visual words for different types of fine-grained classification. We combine the feature sets using a multi-class multiple kernel learning algorithm. We evaluate the models on two datasets: for the non-rigid, deformable object category, we choose the Oxford 102-class flower dataset, and for the rigid category, we select the 17-class make and model recognition car dataset. Results show that our combination of vocabulary sets provides reasonable accuracies of 81.05 % and 96.76 % on the flower and car datasets, respectively.

1 Introduction

Object recognition is one of the most fundamental problems in computer vision. Most research in this area addresses classification among different categories, such as cars, airplanes and animals, as in the PASCAL [2] and Caltech [3] datasets. Rather than differentiating objects of different categories, we investigate the recognition problem within a single basic category. This is referred to as fine-grained recognition, and in most cases it requires domain knowledge that very few people have. Recent studies show that combining heterogeneous features covering texture, shape and color is successful in fine-grained recognition, since heterogeneous feature sets exploit subtle object properties in a complementary way. Hence, in this paper, we address this problem in a generalized way, examining different heterogeneous feature combinations to find a general set of bag-of-features that does not depend on expert-level domain knowledge. For our experiments, we consider both rigid and non-rigid types of objects: in the non-rigid category, we choose the problem of flower classification, and in the rigid category, we consider the make and model recognition of cars.

The problem of flower classification appears to be more challenging than multi-category object classification for several reasons. One reason is the large inter-species similarity in shape and color. Moreover, the overall shape of a flower is mostly quite uninformative because of the high degree of deformation of non-rigid flower petals. It is therefore very difficult for laypeople to identify the species of a given flower from a color image by visual inspection. Given only the image, it is sometimes impossible even for botanists or flora experts to differentiate among visually similar flower categories.

Recognizing the make and model of cars poses the same kind of problems. Anyone familiar with brand logos can easily identify the brand simply by looking at the logo on a car. However, a logo only provides information about the brand, or make, of the car; it carries no information about the model. Given only an image of the front of a car, identifying both its make and model is impossible for a person who is not very familiar with that specific model of that specific brand, because most models look similar, especially models from the same brand.

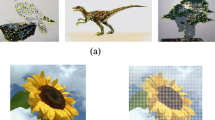

Fig. 1 shows sample images from the flower and car datasets, in which the high level of visual similarity within each category is evident.

In this paper, we investigate a general combination of color, shape and texture features using the multiple kernel learning algorithm of Tang et al. [6]. Our selected bag-of-features model provides high recognition accuracies on both the flower and car datasets. The next section reviews the most relevant literature. The methodology is described in Sect. 3. Section 4 briefly describes both datasets and presents the experimental results on them. Section 5 contains discussion and future work.

2 Related Work

Object recognition is a major field of computer vision, with hundreds of papers published every year. Here, we cover only the work most relevant to ours.

Nilsback and Zisserman [7–11] carried out the first extensive work on flower classification. They use a bag-of-words model combining HSV color, internal and boundary SIFT [4] and HOG [12] features with a non-linear multiple kernel support vector machine [5]. Later, they also propose geometric layout features [7] to normalize all the images to the same size and orientation. Our work can be regarded as a modification, improvement and generalization of their work.

Chai et al. [13] propose two iterative bi-level co-segmentation algorithms (BiCoS and BiCoS-MT) based on SVM classification and GrabCut [14]. They use high-dimensional descriptors stacked from standard sub-descriptors, such as color distribution, SIFT [4], size, location within the image and shape, all extracted from superpixels [15] of a single image as well as of multiple images from multiple classes. Finally, for recognition, they use a linear SVM on concatenated bag-of-words histograms of LLC [16] quantized Lab color and three different SIFT descriptors extracted from the foreground region.

Ito and Kubota [17] use co-occurrence features for recognition. They propose three heterogeneous co-occurrence features, namely color-CoHOG, which consists of multiple co-occurrence histograms of oriented gradients including color matching information; CoHED, the co-occurrence of edge orientation and color difference; and CoHD, the co-occurrence of a pair of color differences. They also use one homogeneous feature, the color histogram [10].

Angelova and Zhu [18] use RGB-intensity-based pixel affinity for segmentation, and max pooling of LLC [16] encoded HOG features [12] at multiple scales with a linear SVM for classification.

In the domain of make and model recognition (MMR), there are two types of approaches: feature-based and appearance-based. Since our approach belongs to the former category, we review only the relevant approaches in that category.

Petrovic and Cootes [19] first analyze different features, including pixel intensities, edge response and orientation, normalized gradients and phase information, extracted from the frontal view of vehicles, with a k-nearest neighbor (kNN) classifier for recognition. Another type of feature-based approach in this domain uses local descriptors such as the scale-invariant feature transform (SIFT) [4] and speeded-up robust features (SURF) [20]. Dlagnekov [21] investigated SIFT-based image matching along with two other methods, Eigencars and shape context matching; his experiments demonstrated the promising performance of SIFT compared to the others. Baran et al. [1] examined the performance of two approaches on the same MMR dataset that we use. The first is a SURF bag-of-features with SVM, and the second is image matching based on different distance metrics, using a weighted combination of edge histogram (EH) descriptors [22], SIFT and SURF.

3 Method

Many object recognition studies use segmentation as a pre-processing step for recognition [1, 7, 13, 18, 23]. Since our focus in this paper is to experiment with combinations of bag-of-features using multiple kernel learning, we use simple GrabCut [24] and Haar-like feature detectors [25, 26] to segment the flower and car images, respectively, before applying our recognition framework.

3.1 Features

To exploit the color, shape and texture properties of the objects, we extract four low-level features from the foreground (object) regions: HSV color, SIFT, multi-scale dense SIFT and non-overlapping HOG.

HSV Color. The HSV colorspace is chosen over RGB, Lab and other spaces because it is less sensitive to illumination variation [7]. The descriptors are the averages of non-overlapping M × M pixel blocks over the entire image. All 3-D descriptors from the training images are then grouped into N clusters by estimating N cluster centroids, or visual words, using K-means [27]. The values of the parameters M and N are chosen experimentally to avoid both high bias and high variance.
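The HSV vocabulary construction described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' code: images are nested lists of (H, S, V) tuples, partial blocks at the border are dropped, hue is averaged linearly (ignoring its circular wrap-around), and plain Lloyd's K-means with random initialization stands in for whatever K-means implementation was actually used. The names `block_average_descriptors` and `kmeans` are hypothetical.

```python
import random
from typing import List, Sequence, Tuple

HSV = Tuple[float, float, float]


def block_average_descriptors(img: Sequence[Sequence[HSV]], m: int) -> List[HSV]:
    """Average each non-overlapping m x m block of an HSV image into one 3-D descriptor.

    Partial blocks at the right/bottom border are dropped, and hue is averaged
    linearly (its circular wrap-around is ignored); both are simplifications.
    """
    rows, cols = len(img), len(img[0])
    descriptors: List[HSV] = []
    for r0 in range(0, rows - m + 1, m):
        for c0 in range(0, cols - m + 1, m):
            block = [img[r][c] for r in range(r0, r0 + m) for c in range(c0, c0 + m)]
            n = len(block)
            descriptors.append(tuple(sum(p[k] for p in block) / n for k in range(3)))
    return descriptors


def kmeans(points: List[HSV], k: int, iters: int = 20, seed: int = 0) -> List[HSV]:
    """Plain Lloyd's K-means; the returned centroids serve as the visual words."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # random initialisation from the data
    for _ in range(iters):
        clusters: List[List[HSV]] = [[] for _ in range(k)]
        for p in points:
            # assign p to its nearest centroid (squared Euclidean distance)
            j = min(range(k), key=lambda i: sum((a - b) ** 2 for a, b in zip(p, centroids[i])))
            clusters[j].append(p)
        # move each centroid to the mean of its cluster; keep it if the cluster is empty
        centroids = [
            tuple(sum(q[d] for q in cl) / len(cl) for d in range(3)) if cl else centroids[i]
            for i, cl in enumerate(clusters)
        ]
    return centroids
```

In practice the descriptors of all training images would be pooled before calling `kmeans`, with k set to the vocabulary size N.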

SIFT. SIFT descriptors capture the local shape and texture properties of the objects in the segmented images. However, the original scale estimation procedure of Lowe [4] has serious drawbacks. Objects naturally appear in images at arbitrary scales. In natural images these scales are usually unknown, so multiple scales should be considered for each feature point. One typical approach is to seek a stable, characteristic scale for each feature point, both to reduce the computational complexity of higher-level visual systems and to improve their performance by focusing on more relevant information. Lowe followed this approach in the original SIFT paper [4]. However, this method produces only a small set of interest points, located near corner structures in the image. Mikolajczyk [28] shows that, under a scale change by a factor of 4.4, the DoG detector detects a scale for as few as 38 % of the pixels, and the detected scale is correct in only 10.6 % of cases. To overcome this limitation of scale estimation, instead of using the original SIFT point localization method, we extract SIFT descriptors on a regular grid of spacing M with circular patches of fixed radius R [7, 30]. Nowak et al. [29] have already shown that such regular sampling strategies outperform systems that use invariant features computed at stable coordinates and scales. According to [29], this gain comes mainly from computing descriptors at many more pixels than the few keypoints obtained with Lowe's method.
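To make the dense sampling concrete, the sketch below enumerates patch centres on a regular grid. It is a minimal illustration, not the authors' implementation: the hypothetical function `dense_grid` keeps only centres whose whole patch of radius R lies inside the image, which is just one of several possible border conventions, and it computes no descriptors itself.

```python
from typing import List, Tuple


def dense_grid(height: int, width: int, spacing: int, radius: int) -> List[Tuple[int, int]]:
    """(row, col) centres of fixed-radius patches on a regular grid.

    A centre is kept only if its patch [c - radius, c + radius] fits inside the
    image in both directions (an assumed border rule).
    """
    rows = range(radius, height - radius, spacing)
    cols = range(radius, width - radius, spacing)
    return [(y, x) for y in rows for x in cols]
```

With the settings reported in Sect. 4.2 (grid spacing and radius of 5 pixels), a 500 × 667 image yields 12,936 sampling sites under this border rule.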

Multi-scale Dense SIFT. SIFT descriptors of a fixed size at regular grid points cannot handle large scale differences. To address this, we incorporate the pyramid histogram of visual words (PHOW) descriptor [30, 31], a variant of multi-scale dense SIFT. At each point of a regular grid of spacing M, SIFT descriptors are computed over four circular support patches with four fixed radii, so each point is represented by four SIFT descriptors. Finally, all the descriptors are vector-quantized into N words using K-means.
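The multi-scale sampling and the vector quantization step can be sketched as follows. This is a hedged illustration: descriptors are plain tuples rather than real 128-D SIFT vectors, and each descriptor is hard-assigned to its nearest visual word, with counts L1-normalized into a histogram (a common bag-of-words choice that the text does not spell out). The default sizes 4, 6, 8 and 10 are the scales reported in Sect. 4.2; the names `multiscale_sites`, `nearest_word` and `bow_histogram` are hypothetical.

```python
from typing import List, Sequence, Tuple


def multiscale_sites(points: Sequence[Tuple[int, int]],
                     sizes: Sequence[int] = (4, 6, 8, 10)) -> List[Tuple[int, int, int]]:
    """Pair every grid point with each descriptor size: len(sizes) descriptors per point."""
    return [(y, x, s) for (y, x) in points for s in sizes]


def nearest_word(desc: Sequence[float], vocabulary: Sequence[Sequence[float]]) -> int:
    """Index of the visual word closest to desc (squared Euclidean distance)."""
    return min(range(len(vocabulary)),
               key=lambda i: sum((a - b) ** 2 for a, b in zip(desc, vocabulary[i])))


def bow_histogram(descriptors: Sequence[Sequence[float]],
                  vocabulary: Sequence[Sequence[float]]) -> List[float]:
    """L1-normalised histogram of hard visual-word assignments for one image."""
    counts = [0] * len(vocabulary)
    for d in descriptors:
        counts[nearest_word(d, vocabulary)] += 1
    total = sum(counts)
    return [c / total for c in counts] if total else [0.0] * len(vocabulary)
```

One such histogram per feature and per image is what the kernel-based classifier of Sect. 3.2 would consume.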

Non-overlapping HOG. HOG features [12] are normalized histograms of oriented gradients computed over a grid of cells (small blocks of pixels), with overlap between neighboring cells. The number of bins B equals the number of orientations in the histogram. In our computation, however, we use no overlap between the cells. As with SIFT, we treat each B-bin normalized histogram as a B-dimensional descriptor, and, as for the other three features, we quantize these descriptors into N visual words using K-means.
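The non-overlapping HOG variant can be sketched in plain Python. This is a simplified sketch under stated assumptions: the input is a grayscale image as nested lists, gradients use central differences (taken as zero on the image border), each pixel votes its gradient magnitude into one of B signed-orientation bins spanning -180 to +180 degrees without interpolation, and each cell histogram is L2-normalized on its own. The text does not give B, so `bins=9` is only a placeholder; the 8 × 8 cell size follows Sect. 4.2. The function name `cell_hog` is hypothetical.

```python
import math
from typing import List, Sequence


def cell_hog(gray: Sequence[Sequence[float]], cell: int = 8, bins: int = 9) -> List[List[float]]:
    """One B-bin orientation histogram per non-overlapping cell x cell block.

    Orientations are signed and span -180..+180 degrees; each histogram is
    L2-normalised on its own because cells do not share pixels.
    """
    h, w = len(gray), len(gray[0])
    descriptors = []
    for r0 in range(0, h - cell + 1, cell):
        for c0 in range(0, w - cell + 1, cell):
            hist = [0.0] * bins
            for r in range(r0, r0 + cell):
                for c in range(c0, c0 + cell):
                    # central differences; gradient is taken as zero on the image border
                    gx = gray[r][c + 1] - gray[r][c - 1] if 0 < c < w - 1 else 0.0
                    gy = gray[r + 1][c] - gray[r - 1][c] if 0 < r < h - 1 else 0.0
                    mag = math.hypot(gx, gy)
                    if mag == 0.0:
                        continue
                    angle = math.degrees(math.atan2(gy, gx))  # in [-180, 180]
                    # hard-assign to one of `bins` equal sectors; +180 and -180 share bin 0
                    b = int((angle + 180.0) / 360.0 * bins) % bins
                    hist[b] += mag
            norm = math.sqrt(sum(v * v for v in hist))
            descriptors.append([v / norm for v in hist] if norm > 0 else hist)
    return descriptors
```

Because the cells are disjoint, every pixel contributes to exactly one descriptor, which keeps the descriptor count per image equal to the number of cells.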

3.2 Classifier

We use the multiple kernel learning approach of Tang et al. [6]. This approach uses an SVM as its base classifier and a weighted linear combination of kernels, each kernel corresponding to one feature. We use the normalized chi-square distance to compute the similarity matrix, with the same kernel for each feature. Following [6], a semi-infinite linear program (SILP) [32] is used for large-scale kernel learning. Figure 2 shows the block diagram of our general approach.
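The kernel construction can be sketched as follows. This sketch assumes that "normalized chi-square distance" means the chi-square distance divided by its mean over all pairs of distinct images inside an exponential kernel, a common choice for bag-of-words histograms that the text does not confirm. It also assumes the usual convex-combination constraint on the kernel weights. The weights themselves are taken as given: learning them with SILP [32], as in [6], is outside the scope of this sketch. All function names are hypothetical.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def chi2_distance(x: Sequence[float], y: Sequence[float], eps: float = 1e-12) -> float:
    """Chi-square distance 0.5 * sum((x_i - y_i)^2 / (x_i + y_i)) between two histograms."""
    return 0.5 * sum((a - b) ** 2 / (a + b + eps) for a, b in zip(x, y))


def chi2_kernel_matrix(hists: Sequence[Sequence[float]]) -> Matrix:
    """Gram matrix K_ij = exp(-d_ij / mean d) for one feature's bag-of-words histograms.

    Dividing by the mean off-diagonal distance is the assumed normalisation.
    """
    n = len(hists)
    d = [[chi2_distance(hists[i], hists[j]) for j in range(n)] for i in range(n)]
    off = [d[i][j] for i in range(n) for j in range(n) if i != j]
    mean = sum(off) / len(off) if off and sum(off) > 0 else 1.0
    return [[math.exp(-d[i][j] / mean) for j in range(n)] for i in range(n)]


def combine_kernels(kernels: Sequence[Matrix], weights: Sequence[float]) -> Matrix:
    """Weighted linear combination K = sum_f w_f K_f, with w_f >= 0 and sum w_f = 1."""
    if any(w < 0 for w in weights) or abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("kernel weights must be non-negative and sum to 1")
    n = len(kernels[0])
    return [[sum(w * k[i][j] for w, k in zip(weights, kernels)) for j in range(n)]
            for i in range(n)]
```

The combined Gram matrix can then be passed to any SVM implementation that accepts precomputed kernels.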

4 Experiment

In this section, we present the experimental results of our proposed feature combination using multiple kernel learning on two fine-grained recognition benchmarks: Oxford 102 flowers [7] and MMR 17 cars [1].

4.1 Datasets

Oxford 102 Flowers. The Oxford 102 flowers dataset was introduced by Nilsback and Zisserman [7]. It contains 8189 images of 102 flower species, with between 40 and 250 images per category. The species were chosen to be flowers commonly found in the United Kingdom. Most of the images were collected from the web, while a few were acquired by taking pictures. The dataset is divided into a training set, a validation set and a test set. The training and validation sets each consist of 10 images per class, 1020 images each. The remaining 6149 images form the test set.

MMR 17 Cars. This dataset was proposed by Baran et al. [1]. It contains 17 car models and is divided into two separate subsets, one for training and the other for testing. The images were collected under various lighting conditions over a period of 12 months. All the images show the front of the cars, taken “en face” or at a slight angle (less than 30 degrees). The training set contains 1360 images, 80 per class, half taken outdoors and half downloaded from the Internet. The test set consists of 2499 images, with between 65 and 237 images per category. Like the training images, the test images were both taken outdoors and downloaded from the Internet; for the test set, however, less attention was paid to the quality and size of the collected images.
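The split sizes quoted above are mutually consistent, as a quick arithmetic check shows. The total number of car images is derived here from the stated training and test sizes rather than quoted from [1].

```python
# Oxford 102 flowers [7]: 10 training and 10 validation images per class
FLOWER_CLASSES, FLOWER_TOTAL = 102, 8189
flower_train = flower_val = 10 * FLOWER_CLASSES
flower_test = FLOWER_TOTAL - flower_train - flower_val

# MMR 17 cars [1]: 80 training images per class and a separate 2499-image test set
CAR_CLASSES, CAR_TEST = 17, 2499
car_train = 80 * CAR_CLASSES
car_total = car_train + CAR_TEST

print(flower_train, flower_val, flower_test)  # 1020 1020 6149
print(car_train, car_total)                   # 1360 3859
```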

4.2 Results

For the Oxford 102 flower dataset, all images are first cropped to the smallest bounding box enclosing the foreground segmentation and then rescaled so that their smaller dimension is 500 pixels, before feature extraction. Table 1 shows the recognition performance of different features and their combinations on this dataset, and Table 2 compares the accuracy with other recent methods. To keep the same baseline, we compare our method only with those that use segmentation prior to recognition. On this baseline, our method provides a slightly better recognition rate than the recent method of Angelova and Zhu [18].
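The cropping and rescaling step can be sketched as below. This is an illustration under assumptions: the foreground mask is a nested list of 0/1 values of the same size as the image, the bounding box is inclusive, and the new size is rounded to the nearest pixel; the actual resampling (for example, bilinear interpolation) is left to an image library. The function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (top, left, bottom, right), all inclusive


def foreground_bbox(mask: Sequence[Sequence[int]]) -> Box:
    """Smallest box enclosing all nonzero pixels of a binary foreground mask."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    if not rows:
        raise ValueError("empty foreground mask")
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    return rows[0], cols[0], rows[-1], cols[-1]


def crop(img: Sequence[Sequence], box: Box) -> List[list]:
    """Cut an image (nested lists) to an inclusive bounding box."""
    top, left, bottom, right = box
    return [list(row[left:right + 1]) for row in img[top:bottom + 1]]


def rescaled_size(height: int, width: int, target: int = 500) -> Tuple[int, int]:
    """Output (height, width) whose smaller side equals target, preserving aspect ratio."""
    scale = target / min(height, width)
    return round(height * scale), round(width * scale)
```

For example, a 1000 × 750 crop would be resized to 667 × 500 pixels.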

Table 3 lists the recognition rates of different features and their combinations on the MMR 17 dataset. Since a car of any model can have an arbitrary color, color cannot be considered an object property for make and model recognition; we therefore exclude HSV color from the feature set for this dataset. Among the single features listed in Tables 1 and 3, multi-scale dense SIFT (MSD-SIFT) performs best. Table 4 compares our method with the two approaches recently published by Baran et al. [1]. Our method outperforms their SURF-BoW+SVM approach but falls slightly behind their weighted combination of SURF, SIFT and EH. However, the authors of [1] clearly state that the latter method carries a significant computational burden from exhaustive matching of high-dimensional descriptors, so despite its higher accuracy it is infeasible for real-time implementation. Therefore, from the perspective of feasibility in real-time applications, our method improves on the recent SURF-BoW+SVM approach.

Parameters. The optimal number of visual words is 900 for the HSV feature on the Oxford dataset. For HOG it is 1500, and for both SIFT and MSD-SIFT it is 3000, on both datasets. The block size for averaging in the HSV colorspace is 3 × 3. For HOG, 8 × 8 square cells are used on both datasets, with gradient orientations ranging from -180 to +180 degrees. A regular grid spacing of 5 pixels is used for both SIFT and MSD-SIFT on both datasets. The SIFT descriptor radius is 5 pixels, and the magnification factor for MSD-SIFT is 6. Scales of 4, 6, 8 and 10 are used in the MSD-SIFT computation.
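For reference, the settings above can be collected into a single configuration. The key names and layout are illustrative only; the values are those stated in this section, and the per-dataset feature lists follow the exclusion of HSV color for the MMR 17 cars dataset. The HOG, SIFT and MSD-SIFT vocabulary sizes are assumed to apply to both datasets.

```python
# Hypothetical layout; values are the parameters reported in Sect. 4.2.
PARAMS = {
    "hsv": {"block_size": 3, "vocab_size": 900},  # used on Oxford 102 flowers only
    "hog": {"cell_size": 8, "orientation_range_deg": (-180, 180), "vocab_size": 1500},
    "sift": {"grid_spacing": 5, "radius": 5, "vocab_size": 3000},
    "msd_sift": {"grid_spacing": 5, "sizes": (4, 6, 8, 10), "magnification": 6,
                 "vocab_size": 3000},
}

# HSV color is excluded for cars, since a car's color does not identify its model.
FEATURES = {
    "oxford102": ["hsv", "sift", "msd_sift", "hog"],
    "mmr17": ["sift", "msd_sift", "hog"],
}
```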

5 Discussion and Future Work

In this paper, we experiment with different combinations of feature sets to find one that is effective across different domains of fine-grained recognition, using one non-rigid and one rigid dataset for evaluation. Although our feature set provides reasonable accuracies on both datasets compared to the state of the art, it is much less accurate in the non-rigid (deformable) domain than in the rigid one. Hence, one of the main future challenges is to find an approach that is almost equally effective in both deformable and rigid domains. Future work also includes developing similar approaches for large-scale datasets.

References

1. Baran, R., Glowacz, A., Matiolanski, A.: The efficient real- and non-real-time make and model recognition of cars. Multimedia Tools Appl. 74, 4269–4288 (2015)

2. Everingham, M., Van Gool, L.J., Williams, C.K.I., Winn, J.M., Zisserman, A.: The PASCAL visual object classes (VOC) challenge. Int. J. Comput. Vis. 88(2), 303–338 (2010)

3. Li, F.-F., Fergus, R., Perona, P.: One-shot learning of object categories. IEEE Trans. Pattern Anal. Mach. Intell. 28(5), 594–611 (2006)

4. Lowe, D.G.: Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 60(2), 91–110 (2004)

5. Schölkopf, B., Smola, A.: Learning with Kernels. MIT Press, Cambridge (2002)

6. Tang, L., Chen, J., Ye, J.: On multiple kernel learning with multiple labels. In: 21st International Joint Conference on Artificial Intelligence, Pasadena, California, USA, pp. 1255–1260 (2009)

7. Nilsback, M.-E., Zisserman, A.: Automated flower classification over a large number of classes. In: 6th Indian Conference on Computer Vision, Graphics & Image Processing, Bhubaneswar, India, pp. 722–729 (2008)

8. Nilsback, M.-E., Zisserman, A.: Delving into the whorl of flower segmentation. In: Proceedings of the British Machine Vision Conference, Warwick, UK, pp. 1–10 (2007)

9. Nilsback, M.-E., Zisserman, A.: Delving deeper into the whorl of flower segmentation. Image Vis. Comput. 28(6), 1049–1062 (2010)

10. Nilsback, M.-E., Zisserman, A.: A visual vocabulary for flower classification. In: IEEE Computer Society Conference on Computer Vision and Pattern Recognition, New York, USA, pp. 1447–1454 (2006)

11. Nilsback, M.-E.: An automatic visual flora: segmentation and classification of flower images. D.Phil. thesis, University of Oxford (2009)

12. Dalal, N., Triggs, B.: Histograms of oriented gradients for human detection. In: 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2005), San Diego, California, USA, pp. 886–893 (2005)

13. Chai, Y., Lempitsky, V.S., Zisserman, A.: BiCoS: a bi-level co-segmentation method for image classification. In: IEEE International Conference on Computer Vision, Barcelona, Spain, pp. 2579–2586 (2011)

14. Rother, C., Kolmogorov, V., Blake, A.: “GrabCut”: interactive foreground extraction using iterated graph cuts. ACM Trans. Graph. 23(3), 309–314 (2004)

15. Felzenszwalb, P.F., Huttenlocher, D.P.: Efficient graph-based image segmentation. Int. J. Comput. Vis. 59(2), 167–181 (2004)

16. Wang, J., Yang, J., Yu, K., Lv, F., Huang, T.S., Gong, Y.: Locality-constrained linear coding for image classification. In: The Twenty-Third IEEE Conference on Computer Vision and Pattern Recognition, San Francisco, California, USA, pp. 3360–3367 (2010)

17. Ito, S., Kubota, S.: Object classification using heterogeneous co-occurrence features. In: 11th European Conference on Computer Vision, Heraklion, Crete, Greece, pp. 209–222 (2010)

18. Angelova, A., Zhu, S.: Efficient object detection and segmentation for fine-grained recognition. In: IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, pp. 811–818 (2013)

19. Petrovic, V.S., Cootes, T.F.: Analysis of features for rigid structure vehicle type recognition. In: British Machine Vision Conference, Kingston, UK, pp. 1–10 (2004)

20. Bay, H., Tuytelaars, T., Van Gool, L.J.: SURF: speeded up robust features. In: 9th European Conference on Computer Vision, Graz, Austria, pp. 404–417 (2006)

21. Dlagnekov, L.: Video-based car surveillance: license plate, make and model recognition. Master’s thesis, University of California, San Diego, USA (2005)

22. Park, D.K., Jeon, Y.S., Won, C.S.: Efficient use of local edge histogram descriptor. In: ACM Multimedia 2000 Workshops, Los Angeles, California, USA, pp. 51–54 (2000)

23. Rabinovich, A., Vedaldi, A., Belongie, S.: Does image segmentation improve object categorization? UCSD CSE Technical report, no. CS2007-090, USA (2007)

24. Boykov, Y., Jolly, M.-P.: Interactive graph cuts for optimal boundary and region segmentation of objects in N-D images. In: International Conference on Computer Vision, Princeton, NJ, USA, pp. 105–112 (2001)

25. Andrzej, M., Piotr, G.: Automated optimization of object detection classifier using genetic algorithm. In: Dziech, A., Czyżewski, A. (eds.) Multimedia Communications, Services and Security. Communications in Computer and Information Science, vol. 149, pp. 158–164. Springer, Berlin, Heidelberg, Germany (2011)

26. Viola, P.A., Jones, M.J.: Rapid object detection using a boosted cascade of simple features. In: IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Kauai, HI, USA, pp. 511–518 (2001)

27. Csurka, G., Dance, C.R., Fan, L., Willamowski, J., Bray, C.: Visual categorization with bags of keypoints. In: 8th European Conference on Computer Vision, Prague, Czech Republic, pp. 1–22 (2004)

28. Mikolajczyk, K.: Detection of local features invariant to affine transformations. Ph.D. thesis, Institut National Polytechnique de Grenoble, France (2002)

29. Nowak, E., Jurie, F., Triggs, B.: Sampling strategies for bag-of-features image classification. In: 9th European Conference on Computer Vision, Graz, Austria, pp. 490–503 (2006)

30. Vedaldi, A., Fulkerson, B.: VLFeat: an open and portable library of computer vision algorithms. In: International Conference on Multimedia, Firenze, Italy, pp. 1469–1472 (2010)

31. Bosch, A., Zisserman, A., Muñoz, X.: Image classification using random forests and ferns. In: International Conference on Computer Vision, Rio de Janeiro, Brazil, pp. 1–8 (2007)

32. Sonnenburg, S., Rätsch, G., Schäfer, C., Schölkopf, B.: Large scale multiple kernel learning. J. Mach. Learn. Res. 7, 1531–1565 (2006)

Acknowledgment

This research is financially supported by the Ministry of Education, Science and Technology (MEST) and National Research Foundation of Korea (NRF) through the Human Resource Training Project for Regional Innovation.

Copyright information

© 2016 Springer International Publishing Switzerland

About this paper

Cite this paper

Aich, S., Lee, CW. (2016). A General Vocabulary Based Approach for Fine-Grained Object Recognition. In: Bräunl, T., McCane, B., Rivera, M., Yu, X. (eds) Image and Video Technology. PSIVT 2015. Lecture Notes in Computer Science, vol 9431. Springer, Cham. https://doi.org/10.1007/978-3-319-29451-3_45

DOI: https://doi.org/10.1007/978-3-319-29451-3_45

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-29450-6

Online ISBN: 978-3-319-29451-3

eBook Packages: Computer Science, Computer Science (R0)