Abstract

We propose an approach using DBM-DNNs for i-vector based audio-visual person identification. Two Deep Boltzmann Machines, DBM\(_{\text {speech}}\) and DBM\(_\text {face}\), are trained in an unsupervised manner using unlabeled audio and visual data from a set of background subjects. The DBMs are then used to initialize two corresponding DNNs for classification, referred to as DBM-DNN\(_{\text {speech}}\) and DBM-DNN\(_{\text {face}}\) in this paper. The DBM-DNNs are discriminatively fine-tuned using back-propagation on a set of training data and evaluated on a set of test data from the target subjects. We compared their performance with the cosine distance (cosDist) classifier and the state-of-the-art DBN-DNN classifier. We also tested three different configurations of the DBM-DNNs. We show that DBM-DNNs with two hidden layers and 800 units in each hidden layer achieved the best identification performance with 400-dimensional i-vectors as input. Our experiments were carried out on the challenging MOBIO dataset.

1 Introduction

The emergence of smart devices is opening doors to a range of applications such as the e-lodgment of service requests, e-transfer of payments, and e-banking. The viability of such applications, however, requires a robust and error-free biometric system to identify the end users. This is a challenging task due to the significant session variabilities that may be contained in the captured data [1, 2]. Recently, audio-visual person recognition on mobile phones has gained significant attention. For example, two evaluation competitions were organized in 2013 for speaker [3] and face [4] recognition on the MOBIO [5] dataset. In those competitions, the state-of-the-art face and speaker recognition techniques were evaluated. A majority of the speaker recognition systems in [3] used Total Variability Modeling (TVM) [6] to learn a total variability matrix (T), which was then used to extract the i-vectors. More recently, in [7, 8], TVM was used for both speaker and face verification and achieved some of the top-performing results. This motivates the use of i-vectors as features for audio-visual identification.

In addition, learning high-level representations using a deep architecture with multiple layers of non-linear information processing has recently gained popularity in the areas of image, audio and speech processing [9, 10]. For example, as shown in the right panel of Fig. 1, a Deep Belief Network (DBN) is a generative architecture built by stacking multiple layers of restricted Boltzmann machines (RBMs) [11]. A DBN can be converted into a discriminative network, which is referred to as a DBN-DNN in [9], by adding a top label layer and using the standard back-propagation algorithm. Although it has been used extensively for speech recognition [9], the DBN-DNN has also been used for speaker recognition [12]. In [13], a DBN was used as a pseudo-ivector extractor and Probabilistic Linear Discriminant Analysis (PLDA) [14, 15] was then used for classification. Such greedy layer-wise learning of DBNs, however, limits the network to a single bottom-up pass. It also ignores the top-down influence during the inference process, which may lead to failures in the modeling of variabilities in the case of ambiguous inputs. This motivates the use of Deep Boltzmann Machines (DBMs) as an alternative to DBNs.

A DBM is a variant of the Boltzmann machine which not only retains the multi-layer architecture but also incorporates top-down feedback (Fig. 1 left panel). Hence, a DBM has the potential to learn complex internal representations and to deal more robustly with ambiguous inputs (e.g., image or speech) [16]. Similar to the DBN-DNN, a DBM can be converted into a discriminative network, which is referred to as a DBM-DNN [17]. Although DBMs have been used for a variety of classification tasks (e.g., handwritten digit recognition and object recognition [16], query detection [18], phone recognition [17], and multi-modal learning [19]), multi-modal person identification using DBMs has not been well studied. In this paper, we propose to use DBM-DNNs for i-vector based audio-visual person identification on mobile phone data (details in Sect. 4). As opposed to the DBM-DNN in [17] (used for speech recognition), the DBM-DNNs presented in this paper do not use the hidden representations as additional input. Rather than using DBMs for learning hierarchical representations, we use them to learn a set of initial parameters (weights and biases) of the DNNs.

In summary, our contributions in this paper can be listed as follows: (a) We use DBM-DNNs for i-vector based audio-visual person identification. To the best of our knowledge, this is the first application of DBM-DNNs with i-vectors as inputs. (b) We show that a higher accuracy can be achieved with the DBM-DNN than with the cosine distance classifier [6], commonly used in the literature to evaluate i-vector based systems (see Fig. 2), and with the state-of-the-art DBN-DNN. (c) We study three configurations of the DBM-DNN. Our experimental results show that two hidden layers having 800 units each achieved the best accuracy with 400-dimensional i-vectors.

2 Background

In this section, we briefly present the theoretical background of DBMs and the i-vector extraction using TVM.

2.1 Deep Boltzmann Machines

A deep Boltzmann machine is formed by stacking multiple layers of Boltzmann machines as shown in Fig. 1 left panel. In a DBM, each layer captures higher-order correlations between the activities of hidden units in the layer below. In [16], some key aspects of DBMs were mentioned: (i) they have the potential to learn complex internal representations, (ii) high-level representations can be built from a large supply of unlabeled data, and a very small number of labeled data can then be used to slightly fine-tune the model, and (iii) they deal more robustly with ambiguous inputs (e.g., image and speech). Therefore, DBMs are considered a promising tool for solving object and speech/speaker recognition problems [20, 21].

Consider a two-layer DBM with no within-layer connections, Gaussian visible units (e.g., speech, image) \(v \in \mathbb {R}^D \) and binary hidden units \(h^n \in \{0,1\}^{P_n}\). Then, the energy for the state \(\{v,h^1,h^2\}\) can be defined, in the standard Gaussian-Bernoulli form, as:

\[E(v,h^1,h^2 \mid \theta ) = \sum _{i=1}^{D} \frac{(v_i-b_i)^2}{2\sigma _i^2} - \sum _{i=1}^{D}\sum _{j=1}^{P_1} \frac{v_i}{\sigma _i^2} W^1_{ij} h^1_j - \sum _{j=1}^{P_1} c^1_j h^1_j - \sum _{j=1}^{P_1}\sum _{k=1}^{P_2} h^1_j W^2_{jk} h^2_k - \sum _{k=1}^{P_2} c^2_k h^2_k\]

where b and \(c^n\) represent the biases of the visible and n-th hidden layer, respectively; \(\sigma _i\) is the standard deviation of the i-th visible unit; \(W^n\) represents the synaptic connection weights between the n-th hidden layer and the previous layer; and D and \(P_n\) represent the number of units in the visible layer and in the n-th hidden layer, respectively. Here, \(\theta =\{b,c,W^1,W^2\}\) represents the set of parameters.

DBMs can be trained by stochastic maximization of the log-likelihood function \(\mathcal {L}\). The partial derivative of the log-likelihood function with respect to the parameters is:

\[\frac{\partial \mathcal {L}}{\partial \theta } = \left\langle -\frac{\partial E(v_{(t)},h \mid \theta )}{\partial \theta }\right\rangle _{data} - \left\langle -\frac{\partial E(v,h \mid \theta )}{\partial \theta }\right\rangle _{model}\]

where \(\langle .\rangle _{data}\) and \(\langle .\rangle _{model}\) denote the expectations over the data distribution \(P(h|\{v_{(t)}\},\theta )\) and the model distribution \(P(v,h | \theta )\), respectively. The training set \(\{v_{(t)}\}_{t = 1,\ldots ,T}\) contains T samples. Although the update rules are well defined, computing them exactly is intractable. A variational approximation is commonly used to compute the expectation over the data distribution, and different persistent sampling methods (e.g., [16, 22, 23]) are used to compute the expectation over the model distribution. A greedy layer-wise approach [16] or a two-stage pre-training algorithm [24] can be used to initialize the parameters of a DBM.

2.2 Total Variability Modeling (TVM)

Inter-session variability (ISV) [25] and joint factor analysis (JFA) [26] are two session variability modeling techniques widely used for session compensation. Total Variability Modeling (TVM) [6] overcomes the high-dimensionality issue of ISV and JFA. In TVM, each sample in the training set is treated as if it comes from a distinct subject. TVM utilizes factor analysis as a front-end processing step and extracts a low-dimensional i-vector. The TVM training process assumes that the j-th sample of subject i can be represented by the Gaussian Mixture Model (GMM) mean super-vector

\[\mu _{i,j} = m + T w_{i,j}\]

where m is the speaker- and session-independent mean super-vector obtained from a Universal Background Model (UBM), T is the low-rank total variability matrix, and \(w_{i,j}\) is the i-vector representation.

The factor analysis process in TVM is used to extract a low-dimensional representation of each sample, known as an i-vector. An i-vector in its raw form captures the subject-specific information needed for discrimination as well as detrimental session variability. Hence, session compensation (e.g., whitening and i-vector length normalization) and scoring (e.g., PLDA or cosine distance) are performed as separate processes (see Fig. 2).
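As a minimal sketch of the length normalization and cosine scoring mentioned above, the following Python scores a test i-vector against one model i-vector per enrolled subject. The function names, the toy 3-dimensional vectors, and the one-model-per-subject layout are assumptions made for illustration; whitening is omitted, and this is not the toolbox code used in the experiments.

```python
import math

def length_normalize(w):
    """Scale an i-vector to unit Euclidean length."""
    norm = math.sqrt(sum(x * x for x in w))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in w]

def cosine_score(w_a, w_b):
    """Cosine similarity between two i-vectors (higher means more similar)."""
    a = length_normalize(w_a)
    b = length_normalize(w_b)
    return sum(x * y for x, y in zip(a, b))

def identify(test_ivector, enrolled):
    """Return the enrolled subject whose model i-vector scores highest.

    `enrolled` maps a subject label to one model i-vector (hypothetical layout).
    """
    return max(enrolled, key=lambda s: cosine_score(test_ivector, enrolled[s]))

# Toy example with 3-dimensional vectors (the i-vectors in this paper are 400-dimensional).
enrolled = {"subject_a": [1.0, 0.0, 0.0], "subject_b": [0.0, 1.0, 1.0]}
best = identify([0.1, 0.9, 0.8], enrolled)
```

Because both vectors are length-normalized first, the score reduces to a plain dot product, which is why length normalization and cosine scoring are often paired.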

3 DBM-DNN Classification

In this section, we present the DBM-DNNs for audio-visual person identification. We train two DBMs (i.e., DBM\(_\text {speech}\) and DBM\(_\text {face}\)) as shown in Fig. 3, in an unsupervised fashion using the raw i-vectors extracted from the unlabeled samples of the background subjects. The steps followed in our proposed framework are: (i) DBM pre-training, (ii) DBM fine-tuning, (iii) discriminative training of the DBM-DNNs for classification, and (iv) fusion.

In the first step, we use the two-stage (Stage 1 and 2) pre-training algorithm presented in [24]. In Stage 1, the even-numbered layers of a DBM are trained as RBMs stacked on top of one another. This is common practice when a DBN is trained. In Stage 2, a model that has the predictive power of the variational parameters given the visible vector is trained. This is done by learning a joint distribution over the visible and hidden vectors using an RBM. In the second step, the initial set of parameters is fine-tuned using a layer-by-layer approach. This is similar to the one in [16] except that the visible units at the bottom layer and the hidden units at the top layer are not repeated. This allows the DBMs to adjust the parameters of all the layers (both even and odd) in one go.

In the third step, we use the learned DBM parameters to initialize deep neural networks (DBM-DNNs) with a top label layer. The top label layer of a DBM-DNN has as many units as the number of enrolled subjects. The bottom layers have exactly the same architecture as their corresponding DBM. Here, the connection weights between the top layer and the one immediately below are randomly initialized. After the initialization, the networks are discriminatively fine-tuned using a small set of labeled training data and the standard back-propagation algorithm. Finally, in the fourth step, we combine the outputs of the DBM-DNNs using sum fusion, which for an identity j is given by:

\[f_j = \sum _{m=1}^{2} p_m(v_m,j)\]

where m is the modality assignment (in our case, \(m=1\) represents DBM-DNN\(_{\text {speech}}\) and \(m=2\) represents DBM-DNN\(_{\text {face}}\)) and \(p_m(v_m,j)\) represents the probability of the input \(v_m\) belonging to person j (i.e., the value assigned by the j-th output node of the m-th DBM-DNN for \(v_m\)). For a given set of observation vectors \(o = \{v_1,v_2\}\), a decision is given in favor of the j-th identity if \(f_j\) is maximum in the fused score vector \(f = [f_1, f_2, \ldots , f_N]\), where N is the number of target subjects.
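The sum rule and the maximum-score decision described above can be sketched in a few lines of Python. The function names and the toy posterior values are hypothetical; the only thing taken from the text is that the two networks' outputs for each identity are added with equal weight and the identity with the largest fused score wins.

```python
def sum_fusion(p_speech, p_face):
    """Element-wise sum of the two posterior vectors, one fused score per identity."""
    if len(p_speech) != len(p_face):
        raise ValueError("both networks must score the same N target subjects")
    return [ps + pf for ps, pf in zip(p_speech, p_face)]

def decide(fused):
    """Return the index j of the maximum fused score."""
    return max(range(len(fused)), key=lambda j: fused[j])

# Toy posteriors over N = 3 subjects (made-up values).
p_speech = [0.5, 0.4, 0.1]
p_face = [0.2, 0.7, 0.1]
fused = sum_fusion(p_speech, p_face)
identity = decide(fused)
```

In this toy case the speech network alone would pick subject 0, but the face network's stronger evidence for subject 1 dominates after fusion.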

DBM-DNN\(_\text {speech}\) (left) and DBM-DNN\(_\text {face}\) (right) are initialized with the generative weights of the DBM\(_\text {speech}\) and DBM\(_\text {face}\), respectively, and discriminatively fine-tuned using the standard back-propagation algorithm. The output scores are fused using the sum rule of fusion.
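To make the initialization concrete, here is a small pure-Python sketch of a network whose hidden layers copy pretrained weights and whose label layer is randomly initialized. The sigmoid hidden units, the softmax label layer, the Gaussian initialization with standard deviation 0.01, and all names are assumptions for illustration; back-propagation fine-tuning is left out, and the pretrained weights here are random stand-ins rather than real DBM parameters.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def softmax(z):
    m = max(z)                      # subtract the max for numerical stability
    e = [math.exp(x - m) for x in z]
    s = sum(e)
    return [x / s for x in e]

def affine(W, b, x):
    """Compute x W + b, with W stored as [n_inputs][n_outputs]."""
    return [sum(x[i] * W[i][j] for i in range(len(x))) + b[j] for j in range(len(b))]

def init_dnn(pretrained_layers, n_subjects, seed=0):
    """Copy pretrained (W, bias) pairs and append a randomly initialized label layer."""
    rng = random.Random(seed)
    layers = [([row[:] for row in W], c[:]) for W, c in pretrained_layers]
    n_top = len(layers[-1][1])
    W_out = [[rng.gauss(0.0, 0.01) for _ in range(n_subjects)] for _ in range(n_top)]
    layers.append((W_out, [0.0] * n_subjects))
    return layers

def forward(layers, x):
    """Sigmoid hidden layers followed by a softmax label layer."""
    for W, b in layers[:-1]:
        x = [sigmoid(a) for a in affine(W, b, x)]
    W_out, b_out = layers[-1]
    return softmax(affine(W_out, b_out, x))

# Toy sizes: 4 inputs, two hidden layers of 3 units, 5 subjects.
rng = random.Random(1)
pre = [([[rng.gauss(0, 0.1) for _ in range(3)] for _ in range(4)], [0.0] * 3),
       ([[rng.gauss(0, 0.1) for _ in range(3)] for _ in range(3)], [0.0] * 3)]
net = init_dnn(pre, n_subjects=5)
posteriors = forward(net, [0.2, -0.1, 0.4, 0.0])
```

The softmax outputs sum to one, so they can be read as the per-identity scores that the fusion step consumes.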

4 Database and Features

In our experiments, we used the MOBIO dataset, which is a collection of videos with speech captured using mobile devices. There are videos from 150 subjects (50 females and 100 males) captured in 12 different sessions over a one-and-a-half-year period. Each session contains 11–21 videos with significant pose and illumination variations (see Fig. 5) as well as different environmental noise. We divided the subjects into two sets: (a) background (50 subjects: 37 males and 13 females picked at random, to retain the gender ratio of the dataset) and (b) target (the remaining 100 subjects). This random split was repeated 10 times so that the experimental results would not depend on a single partition of the subjects. Therefore, each experimental result presented in this paper represents the mean of 10 evaluations. In each evaluation, we used the audio and visual data from the background subjects for: (a) building a UBM to learn the total variability matrix, and (b) the unsupervised training of a DBM. For every target subject, we picked 5 samples from each of 6 randomly selected sessions (out of 12 sessions) as the training data. Similarly, we used 5 samples from each of the remaining 6 sessions as the test data.
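The partitioning protocol can be sketched as follows. The subject identifiers, function names, and use of a per-round seed are hypothetical; only the counts (37 + 13 background subjects, 100 targets, 6 training and 6 test sessions, 10 rounds) come from the description above.

```python
import random

def split_subjects(males, females, n_bg_males=37, n_bg_females=13, seed=None):
    """Randomly pick background subjects by gender; the rest become targets."""
    rng = random.Random(seed)
    bg = set(rng.sample(males, n_bg_males)) | set(rng.sample(females, n_bg_females))
    background = sorted(bg)
    target = sorted(s for s in males + females if s not in bg)
    return background, target

def split_sessions(n_sessions=12, n_train=6, seed=None):
    """Randomly assign half of the sessions to training and the rest to testing."""
    rng = random.Random(seed)
    train = sorted(rng.sample(range(1, n_sessions + 1), n_train))
    test = [s for s in range(1, n_sessions + 1) if s not in train]
    return train, test

# Made-up subject IDs: 100 males and 50 females, matching the dataset description.
males = [f"m{i:03d}" for i in range(100)]
females = [f"f{i:03d}" for i in range(50)]

# Ten independent subject splits, one per evaluation round.
splits = [split_subjects(males, females, seed=r) for r in range(10)]
train_sessions, test_sessions = split_sessions(seed=0)
```

Under this reading, each round yields 100 x 6 x 5 = 3000 training samples and the same number of test samples per modality.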

4.1 Speech Features

We used the speech enhancement and voice activity detection algorithms in the VOICEBOX toolbox [27] for preprocessing the speech signals. Then, frames were extracted from each silence-removed speech signal with a window size of 20 ms and a frame shift of 10 ms. Next, 12 cepstral coefficients were derived and augmented with the log energy, forming a 13-dimensional static feature vector. The delta and acceleration coefficients were appended to form the final 13 static + 13 delta + 13 acceleration = 39-dimensional mel frequency cepstral coefficient (MFCC) feature vector per frame.
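The assembly of the 39-dimensional vector can be illustrated with a short Python sketch. The central-difference delta with edge replication used here is an assumption (the text does not state how the deltas were computed, and toolkits often use a wider regression window); the function names and toy frames are hypothetical.

```python
def deltas(frames):
    """Central difference over time with edge replication: d[t] = (x[t+1] - x[t-1]) / 2."""
    n_frames = len(frames)
    out = []
    for t in range(n_frames):
        prev = frames[max(t - 1, 0)]
        nxt = frames[min(t + 1, n_frames - 1)]
        out.append([(n - p) / 2.0 for n, p in zip(nxt, prev)])
    return out

def add_dynamic_features(static):
    """Append delta and acceleration coefficients to each static frame."""
    d = deltas(static)
    a = deltas(d)   # acceleration is the delta of the delta
    return [s + di + ai for s, di, ai in zip(static, d, a)]

# Toy input: 5 frames of 13 static coefficients (12 cepstra + log energy).
static = [[float(t + k) for k in range(13)] for t in range(5)]
features = add_dynamic_features(static)
```

Each output frame holds the 13 static values first, then 13 deltas, then 13 accelerations.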

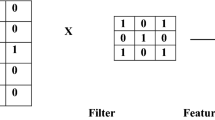

4.2 Visual Features

Each image is rotated, scaled and cropped to a size of 64\(\times \)80 pixels. This is done in such a way that the eyes are 16 pixels from the top and separated by 33 pixels. Then, each cropped image is photometrically normalized using the Tan-Triggs algorithm [28]. We extracted 12\(\times \)12 pixel blocks from a preprocessed image using an exhaustive overlap which led to 3657 blocks per image. Then, the 44 lowest frequency 2D discrete cosine transform (2D-DCT) coefficients [29] excluding the zero frequency coefficient were extracted from each normalized (zero mean and unit variance) image block. The resulting 2D-DCT feature vectors were also normalized to zero mean and unit variance in each dimension with respect to other feature vectors of the image.
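The block count quoted above follows from sliding a 12 x 12 window one pixel at a time: (64 - 12 + 1) x (80 - 12 + 1) = 53 x 69 = 3657. The sketch below checks that arithmetic and lists which coefficient positions a zig-zag scan would keep. Reading "exhaustive overlap" as a 1-pixel step and selecting the lowest frequencies by zig-zag order are assumptions; the function names are hypothetical.

```python
def count_blocks(height, width, block=12, step=1):
    """Number of block positions when sliding a block x block window by `step` pixels."""
    rows = (height - block) // step + 1
    cols = (width - block) // step + 1
    return rows * cols

def zigzag_indices(n):
    """(row, col) positions of an n x n coefficient grid in zig-zag (low to high frequency) order."""
    order = []
    for s in range(2 * n - 1):
        diag = [(u, s - u) for u in range(n) if 0 <= s - u < n]
        # Alternate the traversal direction on each anti-diagonal.
        order.extend(diag if s % 2 else reversed(diag))
    return order

n_blocks = count_blocks(80, 64)      # 64 x 80 pixel face image
kept = zigzag_indices(12)[1:45]      # skip the zero-frequency term, keep the next 44
```

With 44 coefficients per block, each image is then described by 3657 feature vectors of dimension 44.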

5 Results and Analysis

In this section, we present the implementation details, experimental results and analysis. We carried out our experiments on the MOBIO dataset and compared the performance of the DBM-DNNs with the DBN-DNN and the cosine distance classifier.

5.1 Implementation

In this section, we evaluate the identification accuracy of the DBM-DNNs and compare it with that of state-of-the-art classifiers, such as the cosine distance classifier [6] and the DBN-DNN. In our experiments, i-vectors were extracted using the MSR Identity Toolbox [30]. We learned a 512-mixture gender-independent UBM for each modality. The rank of the TVM subspace was set to 400 (commonly used in the literature) and 5 iterations of total variability modeling were carried out. The DBM-DNNs and DBN-DNNs presented in this paper were implemented using the Deepmat toolbox [31] with the same set of learning parameters. The input data was subdivided into mini-batches and the connection weights between the units of two layers were updated after each mini-batch. During the pre-training phase, the parameters of the RBMs were learned using contrastive divergence (CD), the adaptive learning rate and the enhanced gradient techniques as in [32]. The learning rates for Stage 1 and 2 were set to 0.05 and 0.01, respectively. Then, persistent contrastive divergence (PCD) and the enhanced gradient were used to fine-tune the DBM parameters with a learning rate of 0.001. In each step, the model was trained for 50 epochs with a mini-batch size of 100.
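For reference, the settings listed above can be collected in one place. The following Python dataclass is only a bookkeeping sketch: the field names are hypothetical and do not correspond to Deepmat or MSR Identity Toolbox options, and the sample count in the usage line is made up.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class TrainingConfig:
    ubm_mixtures: int = 512          # Gaussians in each gender-independent UBM
    ivector_dim: int = 400           # rank of the TVM subspace
    tvm_iterations: int = 5
    lr_stage1: float = 0.05          # pre-training, Stage 1
    lr_stage2: float = 0.01          # pre-training, Stage 2
    lr_dbm_finetune: float = 0.001   # PCD fine-tuning of the DBM
    epochs_per_step: int = 50
    minibatch_size: int = 100

def minibatches_per_epoch(n_samples, batch_size):
    """Number of weight updates in one epoch (the last batch may be smaller)."""
    return -(-n_samples // batch_size)   # ceiling division

cfg = TrainingConfig()
updates = minibatches_per_epoch(1050, cfg.minibatch_size)   # 1050 is a made-up sample count
```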

We evaluated the performance of the cosine distance classifier, DBN-DNNs (two hidden layers with 400 units each) and three configurations of the DBM-DNNs, all with two hidden layers: 400-400, 800-800, and 1200-1200, where the numbers give the units in each hidden layer. Their rank-1 identification rates are reported in Table 1. The overall rank-1 identification rate obtained using the cosine distance classifier is 0.946, which is significantly better than the identification rates of the individual modalities (i.e., 0.775 for speech and 0.733 for face). The results in Table 1 also show that the deep learning methods significantly improved the identification accuracy. Among the DBM-DNNs, using 800 units in each hidden layer gave better individual and overall identification rates than using 400 or 1200 units.
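When comparing the three configurations, it may help to see how many trainable parameters each one implies. The sketch below counts weights and biases for a fully connected network, assuming a 400-dimensional input and a 100-unit label layer (one unit per target subject); the function name is hypothetical and the count ignores any toolbox-specific extras.

```python
def dnn_parameter_count(n_in, hidden, n_out):
    """Weights plus biases of a fully connected feed-forward network."""
    sizes = [n_in] + list(hidden) + [n_out]
    return sum(a * b + b for a, b in zip(sizes, sizes[1:]))

# Parameter counts for the 400-400, 800-800 and 1200-1200 configurations.
counts = {h: dnn_parameter_count(400, (h, h), 100) for h in (400, 800, 1200)}
```

Under these assumptions the counts are 360,900, 1,041,700 and 2,042,500 parameters, respectively.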

In Fig. 6, we also report the Cumulative Match Characteristics (CMC) curves for the individual modalities and their fusion performed using the equally weighted sum rule. The CMC curves for the best DBM-DNN (i.e., 800–800) as shown in Table 1 are reported. It can be seen that the DBM-DNN consistently outperformed the cosine distance classifier and the DBN-DNN. This is because the posteriors obtained using the DBM-DNNs were able to discriminate between the target subjects better than the DBN-DNNs and the cosine distance classifier.
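A CMC curve of the kind reported here can be computed from per-probe score vectors as sketched below; its first point is the rank-1 identification rate. The function names and the toy scores are hypothetical, and ties are resolved in favor of the true identity, which is an assumption.

```python
def rank_of_true_identity(scores, true_index):
    """1-based rank of the true identity when scores are sorted in descending order."""
    true_score = scores[true_index]
    return 1 + sum(1 for s in scores if s > true_score)

def cmc_curve(score_lists, true_indices, max_rank):
    """Fraction of probes whose true identity is within the top k, for k = 1..max_rank."""
    ranks = [rank_of_true_identity(s, t) for s, t in zip(score_lists, true_indices)]
    n = len(ranks)
    return [sum(1 for r in ranks if r <= k) / n for k in range(1, max_rank + 1)]

# Toy example: 4 probes scored against 3 subjects (made-up fused scores).
scores = [[0.9, 0.05, 0.05], [0.2, 0.5, 0.3], [0.4, 0.35, 0.25], [0.1, 0.3, 0.6]]
truth = [0, 1, 1, 0]
cmc = cmc_curve(scores, truth, max_rank=3)
```

In this toy case two of the four probes are correct at rank 1, three within rank 2, and all within rank 3, so the curve is non-decreasing and reaches 1.0.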

6 Conclusion

In this paper, we presented the first use of DBM-DNNs for i-vector based audio-visual person identification. We compared the performance of DBM-DNNs with the state-of-the-art DBN-DNNs and the cosine distance classifier commonly used in the literature to evaluate i-vector based systems. Our experiments were carried out on the challenging MOBIO dataset. Experimental results show that DBM-DNNs achieved higher accuracies than the DBN-DNNs and the cosine distance classifier. We also studied three different configurations of the DBM-DNNs. Our results show that, with 400-dimensional i-vectors as input, a DBM-DNN with two hidden layers of 800 units each was more accurate than the other configurations. The fact that DBMs incorporate top-down feedback in the learning process enables them to learn a good generative model of the underlying data. The performance of the DBM-DNNs and DBN-DNNs under various environmental noise conditions will be an interesting study.

References

Alam, M.R., Bennamoun, M., Togneri, R., Sohel, F.: An efficient reliability estimation technique for audio-visual person identification. In: 2013 8th IEEE Conference on Industrial Electronics and Applications (ICIEA), pp. 1631–1635. IEEE (2013)

Alam, M.R., Bennamoun, M., Togneri, R., Sohel, F.: A confidence-based late fusion framework for audio-visual biometric identification. Pattern Recogn. Lett. 52, 65–71 (2015)

Khoury, E., Vesnicer, B., Franco-Pedroso, J., Violato, R., Boulkenafet, Z., Mazaira Fernandez, L.M., Diez, M., Kosmala, J., Khemiri, H., Cipr, T., et al.: The 2013 speaker recognition evaluation in mobile environment. In: Proceedings of the 2013 International Conference on Biometrics (ICB), pp. 1–8. IEEE (2013)

Gunther, M., Costa-Pazo, A., Ding, C., Boutellaa, E., Chiachia, G., Zhang, H., de Assis Angeloni, M., Struc, V., Khoury, E., Vazquez-Fernandez, E., et al.: The 2013 face recognition evaluation in mobile environment. In: Proceedings of the 2013 International Conference on Biometrics (ICB), pp. 1–7. IEEE (2013)

McCool, C., Marcel, S., Hadid, A., Pietikainen, M., Matejka, P., Cernocky, J., Poh, N., Kittler, J., Larcher, A., Levy, C., et al.: Bi-modal person recognition on a mobile phone: using mobile phone data. In: Proceedings of the 2012 IEEE International Conference on Multimedia and Expo Workshops (ICMEW), pp. 635–640. IEEE (2012)

Dehak, N., Kenny, P., Dehak, R., Dumouchel, P., Ouellet, P.: Front-end factor analysis for speaker verification. IEEE Trans. Audio Speech Lang. Process. 19(4), 788–798 (2011)

Wallace, R., McLaren, M.: Total variability modelling for face verification. IET Biometrics 1(4), 188–199 (2012)

Khoury, E., El Shafey, L., McCool, C., Günther, M., Marcel, S.: Bi-modal biometric authentication on mobile phones in challenging conditions. Image Vis. Comput. 32(12), 1147–1160 (2014)

Hinton, G., Deng, L., Yu, D., Dahl, G.E., Mohamed, A.R., Jaitly, N., Senior, A., Vanhoucke, V., Nguyen, P., Sainath, T.N., et al.: Deep neural networks for acoustic modeling in speech recognition: The shared views of four research groups. IEEE Sig. Process. Mag. 29(6), 82–97 (2012)

Yu, D., Deng, L.: Deep learning and its applications to signal and information processing [exploratory dsp]. IEEE Sig. Process. Mag. 28(1), 145–154 (2011)

Hinton, G.E., Salakhutdinov, R.R.: Reducing the dimensionality of data with neural networks. Science 313(5786), 504–507 (2006)

Ghahabi, O., Hernando, J.: Deep belief networks for i-vector based speaker recognition. In: Proceedings of the 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 1700–1704. IEEE (2014)

Vasilakakis, V., Cumani, S., Laface, P.: Speaker recognition by means of deep belief networks. In: Proceedings of the Biometric Technologies in Forensic Science (BTFS) (2013)

Brümmer, N., De Villiers, E.: The speaker partitioning problem. In: Odyssey, p. 34 (2010)

Kenny, P.: Bayesian speaker verification with heavy-tailed priors. In: Odyssey, p. 14 (2010)

Salakhutdinov, R., Hinton, G.E.: Deep boltzmann machines. In: Proceedings of the 2009 International Conference on Artificial Intelligence and Statistics, pp. 448–455 (2009)

You, Z., Wang, X., Xu, B.: Investigation of deep boltzmann machines for phone recognition. In: 2013 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 7600–7603. IEEE (2013)

Zhang, Y., Salakhutdinov, R., Chang, H.A., Glass, J.: Resource configurable spoken query detection using deep boltzmann machines. In: 2012 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 5161–5164. IEEE (2012)

Srivastava, N., Salakhutdinov, R.: Multimodal learning with deep boltzmann machines. In: Advances in Neural Information Processing Systems, pp. 2222–2230 (2012)

Senoussaoui, M., Dehak, N., Kenny, P., Dehak, R., Dumouchel, P.: First attempt of boltzmann machines for speaker verification. In: Proceedings of the Odyssey 2012-The Speaker and Language Recognition Workshop (2012)

Stafylakis, T., Kenny, P., Senoussaoui, M., Dumouchel, P.: Preliminary investigation of boltzmann machine classifiers for speaker recognition. In: Proceedings of the 2012 Odyssey Speaker and Language Recognition Workshop (2012)

Salakhutdinov, R.: Learning deep boltzmann machines using adaptive mcmc. In: Proceedings of the 27th International Conference on Machine Learning (ICML), pp. 943–950 (2010)

Salakhutdinov, R., Hinton, G.: An efficient learning procedure for deep boltzmann machines. Neural Comput. 24(8), 1967–2006 (2012)

Cho, K.H., Raiko, T., Ilin, A., Karhunen, J.: A two-stage pretraining algorithm for deep boltzmann machines. In: Mladenov, V., Koprinkova-Hristova, P., Palm, G., Villa, A.E.P., Appollini, B., Kasabov, N. (eds.) ICANN 2013. LNCS, vol. 8131, pp. 106–113. Springer, Heidelberg (2013)

Vogt, R., Sridharan, S.: Explicit modelling of session variability for speaker verification. Comput. Speech Lang. 22(1), 17–38 (2008)

Kenny, P., Boulianne, G., Ouellet, P., Dumouchel, P.: Joint factor analysis versus eigenchannels in speaker recognition. IEEE Trans. Audio Speech Lang. Process. 15(4), 1435–1447 (2007)

Brookes, M., et al.: Voicebox: Speech processing toolbox for matlab. Software (1997). http://www.ee.ic.ac.uk/hp/staff/dmb/voicebox/voicebox.html. March 2011

Tan, X., Triggs, B.: Enhanced local texture feature sets for face recognition under difficult lighting conditions. IEEE Trans. Image Process. 19(6), 1635–1650 (2010)

Sanderson, C., Paliwal, K.K.: Fast features for face authentication under illumination direction changes. Pattern Recog. Lett. 24(14), 2409–2419 (2003)

Sadjadi, S.O., Slaney, M., Heck, L.: MSR identity toolbox v1.0: A matlab toolbox for speaker recognition research. In: Speech and Language Processing Technical Committee Newsletter (2013)

Cho, K.: (2013). https://github.com/kyunghyuncho/deepmat

Cho, K., Raiko, T., Ilin, A.: Enhanced gradient and adaptive learning rate for training restricted boltzmann machines. In: Proceedings of the 28th International Conference on Machine Learning (ICML), pp. 105–112 (2011)

Acknowledgment

This research is supported by Australian Research Council grants DP110103336 and DE120102960.

© 2016 Springer International Publishing Switzerland

About this paper

Cite this paper

Alam, M.R., Bennamoun, M., Togneri, R., Sohel, F. (2016). Deep Boltzmann Machines for i-Vector Based Audio-Visual Person Identification. In: Bräunl, T., McCane, B., Rivera, M., Yu, X. (eds) Image and Video Technology. PSIVT 2015. Lecture Notes in Computer Science(), vol 9431. Springer, Cham. https://doi.org/10.1007/978-3-319-29451-3_50

DOI: https://doi.org/10.1007/978-3-319-29451-3_50

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-29450-6

Online ISBN: 978-3-319-29451-3

eBook Packages: Computer Science (R0)