Abstract

This paper reports a study of the compression achieved on document images with different image formats, including PNG, GIF, PBM (zipped), JBIG and JBIG2. It also examines the perceptual quality of bi-level document images in these formats, and analyzes the impact of a common pre-processing step, namely adaptive thresholding, on compression ratio and perceptual image quality. We conclude that adaptive thresholding improves the compression ratio for common image formats such as PNG and GIF, making them comparable to JBIG/JBIG2 encoding, and that it also significantly improves perceptual image quality. We also observe that this simple pre-processing step prevents perceptual information loss in JBIG/JBIG2 encoding in certain situations.

Keywords

- Image compression

- Adaptive thresholding

- Utilization of Image formats

- Scanned document size optimization

1 Introduction

Digitization of paper documents is necessary for several reasons, such as supporting computerized workflows and convenient long-term archival. Many business processes and archival systems need to handle a very large number of documents, often in the order of millions. Optimizing document image size while maintaining readability is important in these systems, both to reduce storage and to reduce handling time when documents are transported over networks. In particular, large file sizes are a major hindrance to the consumption of documents over mobile networks. While several compression techniques have been proposed and several image representation formats have evolved to optimize document image sizes, their blind use often results in loss of readability. Confronted with such a situation, we experimented with several image compression schemes and some pre-processing algorithms. We present our key findings in this paper.

Generally, commercial scanners produce color or gray-level document images that are stored in JPEG (or PDF-encapsulated JPEG) format. In many documents, the content is characterized solely by the text and graphics therein, and color or texture does not contribute to its information value. Examples of such documents include business mail, doctors’ prescriptions, various forms and answer-books from examinations. The use of bi-level images drastically reduces the number of bits per pixel and is a pragmatic option for such documents. Various lossy and lossless techniques available for image compression are described in [3]. The image formats that use these compression techniques are explained in detail in [2, 4], which also describe scenarios where certain image formats are more useful than others. We also evaluate the proposed approach with the JBIG [6, 7] and JBIG2 [8–10] standards, which were designed specifically for bi-level images.

While JBIG/JBIG2 provide excellent compression on document images, degradation in the perceptual quality of text and graphics is often observed with such encoding, especially when an image segment contains both. Our experiments show that a pre-processing step such as binarization with an adaptive threshold helps in retaining quality. We also show that this simple pre-processing technique can significantly reduce the file size in other common document formats, such as PNG and GIF, and make their sizes almost comparable to those of the JBIG/JBIG2 formats. Moreover, the pre-processing step often results in a significant improvement in the perceptual quality of the document.

The rest of the paper is organized as follows. Section 2 gives an overview of the factors contributing to the large size of scanned documents. Section 3 summarizes various compression techniques and their use in image formats. The proposed approach and the experimental results with discussion are elaborated in Sects. 4 and 5, respectively. Section 6 concludes the paper with the findings and recommendations from the proposed approach.

2 Contributing Factors to Large Scanned Document Size

The possible reasons for the large size of scanned documents are:

- High resolution while scanning: The resolution selected at the time of scanning adds to the size of the scanned document. A resolution of 300 dpi is sufficient for printing, while 150–200 dpi is good enough for screen reading.

- Scan mode: Black-and-white text pages can be scanned in line art mode, while black-and-white photos can be scanned in gray tones. In a line art scan, aliasing is very pronounced at the edges; it can be smoothed by increasing the resolution at the time of scanning, but doing so forfeits the main benefit of line art scans, namely their small size. A gray-scale scan of a document has a larger file size than a line art scan of the same document.

- Physical dimension of scanned document: A legal-size scan will be larger than a letter-size scan, with all other factors being equal.

- Compression techniques: The compression technique used on the scanned document largely determines the file size.

- Scanning noise: The principal source of noise in digital images arises during image acquisition. The performance of imaging sensors is affected by a variety of factors, such as environmental conditions during image acquisition and the quality of the sensing elements themselves.

We aim to reduce the size of scanned documents while enhancing the image clarity to simplify the process of upload/download of these documents.
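To make the interplay of resolution, scan mode and physical dimension concrete, the following sketch estimates the uncompressed raster size of a page. The function name `raw_scan_bytes` and the US letter page size (8.5 × 11 in) are illustrative assumptions and are not values taken from the experiments in this paper.

```python
import math


def raw_scan_bytes(width_in, height_in, dpi, bits_per_pixel):
    """Uncompressed raster size in bytes, with each row padded to a whole byte."""
    width_px = round(width_in * dpi)
    height_px = round(height_in * dpi)
    bytes_per_row = math.ceil(width_px * bits_per_pixel / 8)
    return bytes_per_row * height_px


# Assumption: a US letter page (8.5 x 11 inches), chosen only for illustration.
letter = (8.5, 11.0)
for dpi in (150, 200, 300):
    gray = raw_scan_bytes(*letter, dpi, 8)      # gray-tone scan, 8 bits per pixel
    line_art = raw_scan_bytes(*letter, dpi, 1)  # line art scan, 1 bit per pixel
    print(f"{dpi} dpi: gray = {gray:,} B, line art = {line_art:,} B")
```

Doubling the resolution quadruples the raw size, and a gray-scale scan is roughly eight times larger than a line art scan at the same resolution, which is why scan mode and resolution dominate the size before any compression is applied.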

3 Compression and Image Formats

The image format used for saving the scanned images can be interpreted as the compression technique used for scanned documents. The following sub-sections give a brief description of the broad categorization of compression techniques along with some commonly used image formats and the compression techniques used by them.

3.1 Compression Techniques

-

1.

Lossy Compression: Lossy compression is the class of data encoding methods that uses inexact approximations (or partial data discarding) for representing the content that has been encoded. Lossy compression algorithms preserve a representation of the original uncompressed image that may appear to be a perfect copy, but it is not a perfect copy. Often lossy compression is able to achieve smaller file sizes than lossless compression.

-

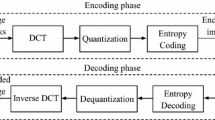

DCT (Discrete Cosine Transform): The discrete cosine transform (DCT) helps separate the image into parts (or spectral sub-bands) of differing importance (with respect to the image’s visual quality). The DCT is similar to the discrete Fourier transform: it transforms a signal or image from the spatial domain to the frequency domain.

-

Vector Quantization: Vector quantization (VQ) is a classical quantization technique from signal processing which allows the modeling of probability density functions by the distribution of prototype vectors. It works by dividing a large set of points (vectors) into groups having approximately the same number of points closest to them. Each group is represented by its centroid point, as in k-means and some other clustering algorithms.

-

-

2.

Lossless Compression: Lossless data compression is a class of data compression algorithms that allows the original data to be perfectly reconstructed from the compressed data.

-

Run Length Encoding: Run-length encoding (RLE) is a very simple form of data compression in which runs of data (that is, sequences in which the same data value occurs in many consecutive data elements) are stored as a single data value and count, rather than as the original run. There are a number of variants of run-length encoding. Image data is normally run-length encoded in a sequential process that treats the image data as a 1D stream, rather than as a 2D map of data.

-

LZW: The LZW Compression Algorithm can summarized as a technique that encodes sequences of 8-bit data as fixed-length 12-bit codes. The codes from 0 to 255 represent 1-character sequences consisting of the corresponding 8-bit character, and the codes 256 through 4095 are created in a dictionary for sequences encountered in the data as it is encoded. At each stage in compression, input bytes are gathered into a sequence until the next character would make a sequence for which there is no code yet in the dictionary. The code for the sequence (without that character) is added to the output, and a new code (for the sequence with that character) is added to the dictionary.

-

Zlib: zlib is a software library used for data compression. Zlib is an abstraction of the DEFLATE compression algorithm used in their gzip file compression program. Deflate is a data compression algorithm that uses a combination of the LZ77 algorithm and Huffman coding.

-

Huffman Coding: Huffman coding is based on the frequency of occurrence of a data item (pixel in images). The principle is to use a lower number of bits to encode the data that occurs more frequently. Codes are stored in a Code Book which may be constructed for each image or a set of images. In all cases the code book plus encoded data must be transmitted to enable decoding.

-
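Run-length encoding, the simplest of the lossless techniques listed above, can be sketched in a few lines. The example treats one scanline of a bi-level image as a 1D stream; the function names `rle_encode` and `rle_decode` and the pixel convention (1 = white, 0 = black) are illustrative assumptions, and the sketch does not reproduce any particular file format's RLE variant.

```python
from itertools import groupby


def rle_encode(pixels):
    """Collapse a 1-D pixel sequence into (value, run_length) pairs."""
    return [(value, sum(1 for _ in run)) for value, run in groupby(pixels)]


def rle_decode(runs):
    """Expand (value, run_length) pairs back into the original sequence."""
    out = []
    for value, length in runs:
        out.extend([value] * length)
    return out


# One scanline of a bi-level image (assumed convention: 1 = white, 0 = black ink).
row = [1] * 12 + [0] * 3 + [1] * 5 + [0] * 2 + [1] * 10
runs = rle_encode(row)
print(runs)  # [(1, 12), (0, 3), (1, 5), (0, 2), (1, 10)]
assert rle_decode(runs) == row  # lossless: the scanline is recovered exactly
```

The 32-pixel scanline is represented by five runs. Noise pixels scattered over the background break long runs into many short ones, which is one reason a noisy scan compresses poorly.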

3.2 Image Formats

There are a number of image formats available that use either a lossless, or lossy form of compression (or their variation). Given below are a few image formats that use either of the forms of compression.

PNM: A Netpbm format is any graphics format used and defined by the Netpbm project. The portable pixmap format (PPM), the portable graymap format (PGM) and the portable bitmap format (PBM) are image file formats designed to be easily exchanged between platforms. They are also sometimes referred to collectively as the portable anymap format (PNM). The ASCII formats allow for human readability and easy transfer to other platforms (so long as those platforms understand ASCII), while the binary formats are more efficient both in file size and in ease of parsing, due to the absence of white space. In the binary formats, PBM uses 1 bit per pixel, PGM uses 8 bits per pixel, and PPM uses 24 bits per pixel: 8 for red, 8 for green, 8 for blue. PNM itself does not compress the encoded data, but combining a PNM file with a general-purpose compressor (e.g. gzip, giving a .gz file) can greatly reduce the file size.
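The combination of binary PBM with a general-purpose compressor can be sketched with the Python standard library alone. The helper name `pbm_p4_bytes` and the synthetic page are assumptions made for illustration; the sketch writes the binary PBM variant (magic number P4, pixels packed eight per byte, 1 = black) and is not the tool chain used in the experiments.

```python
import gzip


def pbm_p4_bytes(rows):
    """Serialize a bi-level image (list of rows, 1 = black, 0 = white) as binary PBM (P4)."""
    height = len(rows)
    width = len(rows[0])
    data = bytearray(f"P4\n{width} {height}\n".encode("ascii"))
    for row in rows:
        # Pack 8 pixels per byte, most significant bit first;
        # the last byte of each row is padded with zero bits.
        for start in range(0, width, 8):
            chunk = row[start:start + 8]
            byte = 0
            for bit in chunk:
                byte = (byte << 1) | bit
            byte <<= 8 - len(chunk)
            data.append(byte)
    return bytes(data)


# Synthetic page (assumption): white background with thin black horizontal strokes.
width, height = 640, 480
page = [[1 if (y % 40 < 2 and 50 <= x < 590) else 0 for x in range(width)]
        for y in range(height)]

raw = pbm_p4_bytes(page)
zipped = gzip.compress(raw)
print(f"raw PBM: {len(raw)} bytes, gzipped: {len(zipped)} bytes")
```

The raw file size depends only on the page dimensions, whereas the gzipped size depends on how regular the pixel data is, so a clean bi-level page benefits most from the second stage.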

JPEG: JPEG supports about 16.8 million colors, which makes it the right choice for complex images and photographs. For ordinary documents that contain no complex drawings or images, only text and line drawings, JPEG is not a suitable choice. JPEG is a lossy format that uses the DCT to compress files. JPEG images are full-color images that dedicate at least 24 bits of memory to each pixel, which is what allows them to incorporate about 16.8 million colors. The image therefore takes more bits per pixel to store, resulting in a larger file size. The JPEG still picture compression standard is explained in detail in [12].

PNG: Portable Network Graphics (PNG) is a raster graphics file format that supports lossless data compression [11]. PNG supports palette-based images (with palettes of 24-bit RGB or 32-bit RGBA colors), gray-scale images (with or without alpha channel), and full-color non-palette-based RGB[A] images (with or without alpha channel). PNG uses a 2-stage compression process: (1) pre-compression: filtering (prediction), (2) compression: DEFLATE. PNG uses a non-patented lossless data compression method known as DEFLATE, which is the same algorithm used in the zlib compression library. When storing images that contain text, line art, or graphics – images with sharp transitions and large areas of solid color – the PNG format can compress image data more than JPEG can.
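The two-stage idea behind PNG, prediction filtering followed by DEFLATE, can be illustrated with a minimal sketch. It implements only the Sub filter for 8-bit gray-scale data (one byte per pixel) and omits the per-row filter-type byte and the chunk structure of a real PNG file; the function names and the synthetic gray ramp are assumptions for illustration.

```python
import zlib


def sub_filter(row):
    """PNG 'Sub' filter at 1 byte per pixel: each byte minus its left neighbour, modulo 256."""
    return bytes((row[i] - (row[i - 1] if i else 0)) % 256 for i in range(len(row)))


def sub_unfilter(filtered):
    """Invert the Sub filter, recovering the original row exactly."""
    out = bytearray()
    for i, delta in enumerate(filtered):
        out.append((delta + (out[i - 1] if i else 0)) % 256)
    return bytes(out)


# Synthetic data (assumption): 64 rows of a smooth gray ramp, 256 pixels wide.
rows = [bytes((x + y) % 256 for x in range(256)) for y in range(64)]

plain = zlib.compress(b"".join(rows), 9)
filtered = zlib.compress(b"".join(sub_filter(r) for r in rows), 9)
print(f"DEFLATE only: {len(plain)} bytes, Sub filter + DEFLATE: {len(filtered)} bytes")

# The filter is lossless: every row is recovered exactly.
assert all(sub_unfilter(sub_filter(r)) == r for r in rows)
```

On smooth data the filter turns each row into a long run of identical small differences, which DEFLATE then encodes very compactly. On a clean bi-level page the raw rows are already highly repetitive, so DEFLATE does most of the work.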

GIF: The Graphics Interchange Format is a bitmap format. The format supports up to 8 bits per pixel for each image, allowing a single image to reference its own palette of up to 256 different colors chosen from the 24-bit RGB color space. GIF images are compressed using the Lempel-Ziv-Welch (LZW) lossless data compression technique (LZW compression technique is more efficient than run length encoding) to reduce the file size without degrading the visual quality. PNG files can be much larger than GIF files in situations where a GIF and a PNG file were created from the same high-quality image source, as PNG is capable of storing more color depth and transparency information than GIF. GIF is a good choice for storing line drawings, text, and iconic graphics at a small file size.
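The LZW scheme used by GIF follows the dictionary-building procedure summarized in Sect. 3.1. The sketch below is a textbook version that emits integer codes and caps the dictionary at 4096 entries (12-bit codes); it omits the variable-width code packing and the clear and end-of-information codes of the actual GIF format. The function names are illustrative assumptions.

```python
def lzw_encode(data, max_code=4095):
    """Textbook LZW encoder: returns a list of integer codes (no bit packing)."""
    dictionary = {bytes([i]): i for i in range(256)}  # codes 0..255: single bytes
    next_code = 256
    sequence = b""
    codes = []
    for value in data:
        candidate = sequence + bytes([value])
        if candidate in dictionary:
            sequence = candidate  # keep gathering while the sequence is known
        else:
            codes.append(dictionary[sequence])  # emit code for the known prefix
            if next_code <= max_code:
                dictionary[candidate] = next_code  # learn the extended sequence
                next_code += 1
            sequence = bytes([value])
    if sequence:
        codes.append(dictionary[sequence])
    return codes


def lzw_decode(codes, max_code=4095):
    """Inverse of lzw_encode; rebuilds the same dictionary while decoding."""
    if not codes:
        return b""
    dictionary = {i: bytes([i]) for i in range(256)}
    next_code = 256
    previous = dictionary[codes[0]]
    out = bytearray(previous)
    for code in codes[1:]:
        if code in dictionary:
            entry = dictionary[code]
        elif code == next_code:
            # The encoder used the entry it had just created.
            entry = previous + previous[:1]
        else:
            raise ValueError("invalid LZW code")
        out += entry
        if next_code <= max_code:
            dictionary[next_code] = previous + entry[:1]
            next_code += 1
        previous = entry
    return bytes(out)


# Synthetic scanlines (assumption): long white runs with short black runs.
scanline = bytes([255] * 40 + [0] * 8) * 20
codes = lzw_encode(scanline)
assert lzw_decode(codes) == scanline
print(len(scanline), "input bytes ->", len(codes), "codes")
```

Because the dictionary keeps learning longer sequences, repeated patterns such as uniform background runs are covered by progressively fewer codes.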

JBIG: JBIG is a lossless image compression standard from the Joint Bi-level Image Experts Group, standardized as ISO/IEC standard 11544 and as ITU-T recommendation T.82 [6, 7]. JBIG is based on a form of arithmetic coding patented by IBM, known as the Q-coder, with a minor modification patented by Mitsubishi, resulting in what became known as the QM-coder. It bases the probability of each bit on the previous bits and the previous lines of the picture. To allow images to be compressed and decompressed in scanning order, it does not reference future bits. JBIG also supports progressive transmission with a small overhead (around 5 %).

JBIG2: JBIG2 is an International Telecommunication Union format that represents a revolutionary breakthrough in captured document technology [8–10]. Using JBIG2 encoding, a scanned image can be compressed up to 10 times smaller than with TIFF G4. This facilitates creating, for the very first time, scanned image documents whose file size is the same as OCR-converted text files. The power behind JBIG2 technology is its ability to support both lossless and perceptually lossless black and white image compression.

Scanned textual documents cannot rely much on lossy compression, as it may lead to loss of information. Lossless compression techniques, on the other hand, can be useful for such documents. Our study of a few commonly used image formats leads us to evaluate the proposed approach with the .png/ .gif/ .pbm/ .jbg/ .jb2 formats. JPEG is a lossy image format that is more suitable for complex pictures, as it removes high-frequency values. PNG and GIF can be more efficient and useful for saving line art, text and graphics, while PBM is a binary format that gives a large file size when used on its own. Applying gzip compression to PBM files gives high compression. JBIG and JBIG2, the comparatively new standards, are specially suited for bi-level images. We therefore evaluate the proposed approach with these formats to achieve files of reduced size.

4 Approach

Based on the study of the factors contributing to the large file size of scanned documents in Sect. 2, we note that one has no control, at acquisition time, over the scanning noise or the physical dimensions of the document being scanned. The minimal resolution required for clear, alias-free reading depends on the resolution and scan mode chosen at the time of scanning, which limits the control over these factors as well. On the other hand, the acquisition of textual document images through scanning is accompanied by the inclusion of irrelevant data points due to environmental factors, which act as scanning noise. We therefore aim to reduce this scanning noise after acquisition in order to achieve greater compression. Our approach to size optimization is directed towards binarization of the scanned documents, which not only reduces file size but also increases legibility. For a scanned textual document, color is usually of little importance, so converting it into a binary image is appropriate. Noise, one of the factors contributing to the large size of scanned documents, is also removed by the process of binarization. The output images of adaptive thresholding are anti-aliased bi-level images that are saved in different image formats to utilize the compression techniques supported by them. The resultant images are therefore clear to read and have an optimal file size.

The novelty of this paper lies in the use of adaptive thresholding to binarize a given image, which not only reduces the size of scanned textual documents but also enhances document clarity. Figure 1 shows an overview of the proposed approach. Assuming that the input is a gray-scale scan of a document, the approach to reducing the size of scanned text documents is as follows:

- Binarization and Cleaning: The fewer colors an input image contains, the smaller its file size. For any given document, we can convert the value of each pixel to either 0 or 1, i.e., black or white, reducing the number of colors in the image to only two. Adaptive thresholding is applied to the input image to convert it into a bi-level image.

Unlike simple (global) binary thresholding, where a fixed threshold is used for all the pixels in the image, adaptive thresholding uses a local threshold for every pixel in an image. The threshold value is calculated based on the intensities of the neighbouring pixels. Adaptive thresholding has an edge over global thresholding in scenarios where there is large variation in background intensity, which is true for scanned document images (because of noise).

Adaptive thresholding typically takes a gray-scale or color image as input and, in the simplest implementation, outputs a binary image representing the segmentation. For each pixel in the image, a threshold has to be calculated. If the pixel value is below the threshold it is set to the background value, otherwise it assumes the foreground value. Thresholds are calculated in regions of size block-size surrounding each pixel (i.e. local neighborhoods). Each threshold value is the weighted sum of the local neighborhood minus an offset value [1]. While we have used an open-source implementation of the standard adaptive thresholding algorithm, our contribution lies in deciding an optimal neighbourhood size and an optimal offset value, which together determine the performance of adaptive thresholding. These parameters are decided based on extensive experimentation over a large set of documents (see Note 1).

The conversion of scanned gray-scale documents to binary cleans the image, making it more legible. Adaptive thresholding acts as a cleaning agent: only pixels that are darker than their local threshold are kept as ink, while all other pixels, including faint background noise, are set to white.

- Compression Technique: The image is saved in an appropriate image format (see Sect. 3) to obtain a suitable level of compression on the scanned document while maintaining image quality.

The reason for binarizing the input image is not only to convert the image to bi-level but also to remove noise. Noise, being the unwanted data in the input image, is removed by the process of adaptive thresholding, leading to a reduction in file size. Binarization together with noise removal greatly reduces the size of the input image when saved in the .png/ .gif/ .pbm (zipped)/ .jbg/ .jb2 formats, owing to their suitability for this type of content and its bi-level nature.
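The binarization step can be sketched in pure Python as follows. This is a minimal illustration of mean-based adaptive thresholding, in which the weighted sum is taken with equal weights and image borders are handled by edge replication. It is not the open-source implementation used in our experiments, and the `block_size` and `offset` values shown are placeholders rather than the tuned parameters.

```python
def adaptive_threshold_mean(gray, block_size=15, offset=10, max_value=255):
    """Binarize a gray-scale image given as a list of rows of 0..255 integers.

    A pixel becomes max_value (white) if it is brighter than the mean of its
    block_size x block_size neighbourhood minus offset; otherwise it becomes 0.
    """
    if block_size < 3 or block_size % 2 == 0:
        raise ValueError("block_size must be an odd number >= 3")
    height, width = len(gray), len(gray[0])
    radius = block_size // 2
    padded_h, padded_w = height + 2 * radius, width + 2 * radius

    def padded_pixel(py, px):
        # Edge replication: coordinates outside the image are clamped to the border.
        y = min(max(py - radius, 0), height - 1)
        x = min(max(px - radius, 0), width - 1)
        return gray[y][x]

    # Summed-area table of the padded image, so each window sum costs O(1).
    integral = [[0] * (padded_w + 1) for _ in range(padded_h + 1)]
    for py in range(padded_h):
        row_sum = 0
        for px in range(padded_w):
            row_sum += padded_pixel(py, px)
            integral[py + 1][px + 1] = integral[py][px + 1] + row_sum

    area = block_size * block_size
    result = []
    for y in range(height):
        out_row = []
        for x in range(width):
            # In padded coordinates the window centred on (y, x) starts at (y, x).
            y1, x1 = y + block_size, x + block_size
            total = (integral[y1][x1] - integral[y][x1]
                     - integral[y1][x] + integral[y][x])
            threshold = total / area - offset
            out_row.append(max_value if gray[y][x] > threshold else 0)
        result.append(out_row)
    return result


# Synthetic strip (assumption): background fading from 230 to 112, three ink columns.
def background(x):
    return 230 - 2 * x

ink_columns = {10, 30, 50}
strip = [[background(x) - 80 if x in ink_columns else background(x)
          for x in range(60)] for _ in range(20)]

binary = adaptive_threshold_mean(strip, block_size=11, offset=10)
black_columns = sorted(x for x in range(60) if binary[0][x] == 0)
print(black_columns)  # [10, 30, 50]
```

Despite the uneven illumination, only the three ink columns are classified as black, because each pixel is compared with its own neighbourhood rather than with a single page-wide value.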

Binarization of the image is followed by saving it in an appropriate format to obtain the desired compression. Image formats use different algorithms to encode a given image, so the choice of format depends on the type of image and its content. Every image format has its own pros and cons, since each was created for a specific and different purpose. Section 3 describes various image formats, the compression techniques they use and their utility in various scenarios.

5 Results and Discussions

We evaluated the proposed algorithm on 8 data sets containing a total of about 224 gray-scale scanned images. The images were scanned at 150–200 ppi, with pixel dimensions ranging from 1240\(\times \)1753 to 2136\(\times \)1700. The images in the data sets mostly contained handwritten text with graphs and figures. The compression percentage varied from 52 % to 87 % across the image formats used to save the processed images. The compression achieved depends not only on the encoding format but also on the encoder used. The experiments were performed using the OpenCV library and the ImageMagick tool. The encoder used for saving JBIG2 files is [5].

Table 1 summarizes the compression achieved with the state-of-the-art methods and with our implementation of the proposed approach. The various formats used to save the binarized images may each have their utility in different areas of application. The Compression % reported in Table 1 is defined as follows: if a given image has an original scanned size of x, and its size changes to y after the application of the proposed methodology, then

\[ \text{Compression \%} = \frac{x - y}{x} \times 100 \]

The gray-scale scanned document images form the set of input images. There are two classes of image formats: (1) the JPEG family, designed for optimizing natural images, and (2) the PNG, GIF, JBIG family, etc., which are more generic and work better for bi-level document images. Given the nature of the input images, we experimented with the latter set of image formats. The scanned input images are therefore encoded in the .png/ .gif/ .pbm (zipped)/ .jbig/ .jbig2 formats for experimentation. We have compared our results with publicly available encoders for these formats, as well as a publicly available encoder for a standard lossless compression technique, .gz. The results in Table 1 are shown in two parts: the upper rows indicate the compression achieved by state-of-the-art methods, and the lower rows indicate the compression achieved with the proposed approach. We observed that, for all formats except .jbig2, we get much better compression with the pre-processed images than when the encoding methods are applied directly. For .jbig2, we find a slight degradation in compression performance with the pre-processing step. In all the image formats (including .jbig2), the perceptual quality of the document images is much better when they are pre-processed than when they are directly encoded. This is illustrated in Fig. 2.

The scanned input images encoded directly in the .png/ .gif formats do not appear to suit the compression needs of the documents. On the other hand, the images processed with adaptive thresholding and then encoded in .png/ .gif format show a significant reduction in file size. The compression achieved in the latter case is due to the type of content in the scanned documents, which fits well with the lossless compression techniques used by these formats.

The PBM files generated by either method are large, but applying gzip (a lossless compression format) to the PBM-encoded images gives a significant reduction in file size. The input images encoded as raw .pbm files and then compressed with .gz, without the pre-processing step of adaptive thresholding, give a reasonable reduction in file size. It is important to note, however, that PBM is a binary format, so encoding gray-scale images directly as .pbm files can potentially ruin the image completely. To encode a scanned file as .pbm directly (without adaptive thresholding), some thresholding is therefore required. Such a thresholding, known as global thresholding, might not be feasible for all scenarios. To measure the effect of the state-of-the-art method in this case, we applied a global threshold (220) to the input images to obtain the result images. Adaptive thresholding, on the other hand, binarizes the input images as part of the proposed approach, and the resulting images can be directly encoded in .pbm format followed by gzip to get compressed images. The compression achieved with PBM (zipped) in the latter case is comparatively better than in the former, with the additional benefit that adaptive thresholding adapts to the image and is feasible in all scenarios.
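The limitation of a fixed global threshold can be seen on a synthetic example. The sketch below applies the global threshold of 220 used in our baseline to a strip whose background brightness falls off from left to right; the strip itself and the function name `global_threshold` are assumptions made for illustration.

```python
def global_threshold(gray, threshold=220, max_value=255):
    """Binarize with one fixed threshold for every pixel of the image."""
    return [[max_value if value > threshold else 0 for value in row] for row in gray]


# Synthetic strip (assumption): background fades from 230 at the left edge
# to 112 at the right edge, with three darker ink columns.
def background(x):
    return 230 - 2 * x

ink_columns = {10, 30, 50}
strip = [[background(x) - 80 if x in ink_columns else background(x)
          for x in range(60)] for _ in range(4)]

binary = global_threshold(strip, threshold=220)
white = sum(value == 255 for value in binary[0])
print(f"{white} of {len(binary[0])} pixels per row remain white")
```

Only the few brightest pixels at the left edge stay white; the rest of the background and the ink both become black, so the text is no longer separable from the background. A locally computed threshold avoids this failure mode.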

JBIG and JBIG2 give a good compression percentage with both the state-of-the-art method and the proposed approach. JBIG2 separates textual, halftone and generic regions of the image and applies a different encoding technique to each, which results in higher compression than JBIG. The proposed approach of adaptive thresholding followed by JBIG encoding gives higher compression than the state-of-the-art method. For JBIG2, on the other hand, the experimental results show that direct encoding of the input image achieves higher compression than encoding after adaptive thresholding.

Apart from the reduction in file size, another crucial aspect that cannot be neglected is the legibility of the images after compression. A group of people were shown images from both the state-of-the-art method and the proposed approach, for each of the image formats considered in the experimental setup, and asked to rate the quality of the images. The perceptual quality of the images was assessed from their collective feedback. Adaptive thresholding of the scanned input images not only binarizes them but also cleans them, thereby improving image quality. For PNG and GIF, the encoded images are of perceptually similar quality in both cases. For PBM (zipped), there is a gap in legibility between the images produced by the state-of-the-art method and those produced by the proposed approach: the PBM files generated after adaptive thresholding are smudge-free and clearer to read. Thus, binarizing the scanned documents through adaptive thresholding followed by PBM (zipped) encoding (Fig. 2(d)) not only makes the approach more reliable and robust but also gives higher legibility than the state-of-the-art method (Fig. 2(a)).

The JBIG and JBIG2 standards were developed mainly for bi-level images but can be used on other types of images as well. Figure 2(b) and (c) show the input image encoded directly in JBIG and JBIG2 format, while Fig. 2(e) and (f) show the input image after adaptive thresholding followed by JBIG and JBIG2 encoding. The perceptual quality of the image encoded with JBIG after adaptive thresholding is better than that of the directly encoded JBIG image.

It can be seen that the image directly encoded as JBIG in Fig. 2(b) has faded horizontal rules compared to Fig. 2(e). Also, the higher compression achieved by JBIG2 encoding without the pre-processing step comes at the cost of perceptual degradation of image quality. Figure 2(c) shows that the horizontal lines in the text are lost (whereas they are still present in the JBIG2 file after adaptive thresholding in Fig. 2(f)), which indicates that encoding a gray-scale or color input image directly may lead to loss of legibility in a few cases. JBIG2, when used in lossy mode, can potentially alter text in a way that is not discernible as corruption. This is in contrast to some other algorithms, which simply degrade into a blur, making the compression artifacts obvious. Since JBIG2 tries to match similar-looking symbols, the digits ‘6’ and ‘8’, for example, may be substituted for one another.
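The substitution risk can be illustrated with a toy model of symbol matching. The sketch is not the JBIG2 algorithm or any real encoder's matching rule: the 3 × 5 glyph bitmaps, the Hamming-distance criterion and the names `hamming` and `build_symbol_dictionary` are all assumptions chosen to show how a tolerant match can merge two different characters.

```python
# Hypothetical 3x5 glyph bitmaps; "1" marks an ink pixel.
SIX = ["111",
       "100",
       "111",
       "101",
       "111"]
EIGHT = ["111",
         "101",
         "111",
         "101",
         "111"]


def hamming(a, b):
    """Number of differing pixels between two equally sized glyph bitmaps."""
    return sum(pa != pb for ra, rb in zip(a, b) for pa, pb in zip(ra, rb))


def build_symbol_dictionary(glyphs, max_mismatch):
    """Greedy matching: reuse the first stored symbol within max_mismatch pixels."""
    dictionary, indices = [], []
    for glyph in glyphs:
        for index, symbol in enumerate(dictionary):
            if hamming(glyph, symbol) <= max_mismatch:
                indices.append(index)  # glyph is replaced by the stored symbol
                break
        else:
            dictionary.append(glyph)   # no close match: store a new symbol
            indices.append(len(dictionary) - 1)
    return dictionary, indices


page = [SIX, EIGHT, SIX]
print(hamming(SIX, EIGHT))                    # 1
print(build_symbol_dictionary(page, 0)[1])    # [0, 1, 0]  exact matching keeps both digits
print(build_symbol_dictionary(page, 1)[1])    # [0, 0, 0]  tolerant matching renders the 8 as a 6
```

With a tolerance of a single pixel, the two digits share one dictionary entry and the decoded page shows a crisp but wrong character, which is why such errors are hard to notice.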

PBM, JBIG and JBIG2 are bi-level formats. Although saving an image in one of these formats is itself sufficient to binarize it, the quality of the saved image is not necessarily satisfactory in all cases. Adaptive thresholding of gray-scale images, in accordance with the proposed approach, not only binarizes the image at good quality without any perceptual degradation but also helps in optimizing the compression achieved by the various image formats. The different image formats used to save the binarized files may have different system or software requirements at the time of use. For example, the JBIG2 format, despite giving the highest compression after the proposed algorithm, is a fairly new format, and not many image viewers incorporate a decoder for it. The inference drawn from these results and discussions is that file size can be greatly reduced by using a suitable file format on noise-free bi-level images.

6 Conclusion

In this paper, we have presented a simple but reliable and efficient approach to reducing the size of scanned textual documents. Since the different methods give different results, the choice among them can be made on the basis of the requirements of the operational units.

Our contribution lies in introducing the step of adaptive thresholding, which removes noise from the input images. Binarizing a given gray-scale document through adaptive thresholding reduces the required number of bits per pixel without any information loss or degradation of image quality. Noise removal by the proposed approach leads to better compression and clarity of document images. The steps of binarization, noise removal and use of a suitable image format work together to achieve good compression that not only reduces file size but also enhances image clarity and maintains data integrity. Thus, the approach presented in this paper can be used as an effective and cost-efficient methodology in any organization to achieve high image compression for scanned documents.

Notes

1. The block size and offset for adaptive thresholding are selected on the basis of experimental evaluation of images.

References

1. Miscellaneous Image Transformations: AdaptiveThreshold

2. Compressed Image File Formats. Addison-Wesley Professional (1999)

3. Digital Image Processing, Chap. 8, pp. 564–626. Pearson Education (2010)

4. Aguilera, P.: Comparison of different image compression formats. Technical report, University of Wisconsin-Madison (2006)

5. GitHub: jbig2enc. Technical report, GitHub

6. Gutierre: JBIG compression

7. Kyrki, V.: JBIG image compression standard

8. Joint Bi-level Image Experts Group: Jbig2.com: An introduction to JBIG2. Technical report

9. Ono, F., Rucklidge, W., Arps, R.: JBIG2-the ultimate bi-level image coding standard. In: 2000 International Conference on Image Processing, Proceedings, vol. 1 (2011)

10. Howard, P.G., Kossentini, F., Martins, B., Forchhammer, S., Rucklidge, W.J., Ono, F.: The emerging JBIG2 standard. In: IEEE Transactions on Circuits and Systems for Video Technology

11. Roelofs, G.: History of the portable network graphics (PNG) format. In: Linux Gazette

12. Wallace, G.K.: The JPEG still picture compression standard. In: IEEE Transactions on Consumer Electronics

Copyright information

© 2016 Springer International Publishing Switzerland

About this paper

Cite this paper

Saraswat, N., Ghosh, H. (2016). A Study on Size Optimization of Scanned Textual Documents. In: Bräunl, T., McCane, B., Rivera, M., Yu, X. (eds) Image and Video Technology. PSIVT 2015. Lecture Notes in Computer Science(), vol 9431. Springer, Cham. https://doi.org/10.1007/978-3-319-29451-3_7

DOI: https://doi.org/10.1007/978-3-319-29451-3_7

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-29450-6

Online ISBN: 978-3-319-29451-3

eBook Packages: Computer Science, Computer Science (R0)