Abstract

Hyperspectral images (HSIs) can facilitate extensive computer vision applications with the extra spectra information. However, HSIs often suffer from noise corruption during the practical imaging procedure. Though it has been testified that intrinsic correlation across spectrum and spatial similarity (i.e., local similarity in locally smooth areas and non-local similarity among recurrent patterns) in HSIs are useful for denoising, how to fully exploit them together to obtain a good denoising model is seldom studied. In this study, we present an effective cluster sparsity field based HSIs denoising (CSFHD) method by exploiting those two characteristics simultaneously. Firstly, a novel Markov random field prior, named cluster sparsity field (CSF), is proposed for the sparse representation of an HSI. By grouping pixels into several clusters with spectral similarity, the CSF prior defines both a structured sparsity potential and a graph structure potential on each cluster to model the correlation across spectrum and spatial similarity in the HSI, respectively. Then, the CSF prior learning and the image denoising are unified into a variational framework for optimization, where all unknown variables are learned directly from the noisy observation. This guarantees to learn a data-dependent image model, thus producing satisfying denoising results. Plenty experiments on denoising synthetic and real noisy HSIs validated that the proposed CSFHD outperforms several state-of-the-art methods.

L. Zhang’s contribution was made when visiting The University of Adelaide.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Hyperspectral images (HSIs) contain both spectral and spatial information. The spectra represent the reflectance of a real scene across multiple narrow-width bands, which can be used to identify and characterize a particular feature of the scene. HSI is thus widely used for extensive applications such as scene classification [1], surveillance [2] and disease diagnosis [3], etc. However, during the imaging process, HSIs are inevitably affected by noise [4]. Since the noise corruption increases the difficulty of applications, e.g., classification, HSIs denoising becomes a crucial step for HSIs based systems [5–7].

Lots of HSIs denoising methods have been proposed, such as wavelet shrinkage based method [8], multi-linear algebra based methods [5, 6], etc., among which the following two consensuses have been testified useful to obtain a good denoising result. (1) Since continuous spectrum implies high correlation, exploiting the correlation across spectrum will improve the denoising quality. (2) HSIs especially for natural scene HSIs contain abundant locally smooth areas and recurrent patterns, which result in the local and non-local spatial similarity, respectively, thus exploiting such spatial similarity (i.e., both the local and non-local similarity) in HSIs is also beneficial to denoising.

Based on these two consensuses, many effective methods have been proposed for HSIs denoising. Since spectrum can be sparsely represented in a specific domain, sparse representation model has been widely used to model the correlation across spectrum in HSIs [9, 10]. However, these methods cannot fully exploit the desired correlation, because \(\ell _1\) norm cannot depict the structure in each sparse signal. To address this problem, Zhang et al. [11] proposed a reweighted Laplace prior to explore the structured sparsity of spectra. Nevertheless, this method models each spectrum independently without considering the spatial similarity in HSIs, which limits its performance in HSIs denoising. To model the spatial similarity in HSIs, Qian et al. [12] and Maggioni et al. [13] adopted small 3D cubes instead of 2D patches in the classical 2D image denosing methods (e.g., NLM [14] and BM3D [15]), to consider the non-local spatial similarity in HSIs. However, they neglected the correlation across spectrum [7]. Considering an HSI as a \(3^\mathrm {rd}\) tensor, Renard et al. [5] employed the low rank tensor approximation to explore the correlation across spectrum as well as the local spatial similarity. Liu et al. [6] proposed a parallel factor analysis model to further refine the low rank tensor approximation. However, they do not consider the non-local spatial similarity in HSIs. To address this problem, Peng et al. [7] proposed a tensor dictionary learning model to depict the non-local spatial similarity in HSIs. Qian et al. [16] introduced the non-local similarity into spectral-spatial structure based sparse representation model. Nevertheless, none of those two methods explicitly consider the local similarity within the spatially locally smooth areas of HSIs [17]. Recently, Fu et al. [17] further explored the local similarity by learning a spectral-spatial dictionary, however it only fits the uniformly distributed noise across bands. Therefore, how to fully exploit the correlation across spectrum and spatial similarity together for an effective denoising model is still challenging.

In this study, we integrate those two characteristics into a novel cluster sparsity field based HSIs denoising (CSFHD) method. Firstly, a novel Markov random field prior, named cluster sparsity field (CSF), is proposed for the sparse representation of an HSI. By grouping pixels into several spatial clusters with spectral similarity, two different potentials in CSF are defined on each cluster to explore the structures in the HSI. The structured sparsity potential implicitly depicts the correlation over spectrum by exploring the structure within sparse representation, while the graph structure potential adopts the intra-cluster spectral similarity to depict the spatial similarity. Then, proper hyperpriors are employed to regularize the prior parameters, which allow the CSF prior to be suitable for the data-dependent structure as well as to avoid over-fitting in prior learning. Furthermore, we integrate the CSF prior learning and image denoising into a variational optimization framework, where the CSF prior, noise level and the latent clean image are jointly learned from the noisy observation. This guarantees to learn a data-dependent image model and thus producing satisfying denoising results.

In summary, the proposed method has three key benefits: (1) The CSF prior jointly models the correlation across spectrum and spatial similarity of an HSI with the sparse representation model. (2) The CSF prior is data-specific and well regularized. (3) The proposed method can learn the data-dependent structures and outperforms several state-of-the-art methods in extensive experiments.

2 Cluster Sparsity Field (CSF)

To jointly model the correlation across spectrum and spatial similarity into an image prior, we define a novel Markov random field, named cluster sparsity field (CSF), on the sparse representation of an HSI with a given dictionary. Specifically, the 3D HSI is rearranged into a 2D matrix \(X=[{\varvec{x}}_1,...,{\varvec{x}}_{n_p}]\in {\mathbb {R}^{n_b\times {n_p}}}\) for convenience, where \(n_b\) indicates the number of bands and \(n_p\) is the number of pixels. The 2D image on each band is vectorized as a row of X, while each column \({\varvec{x}}_{i}\) of X denotes the spectrum of one pixel. Provided that the imaging scene contains K homogeneous areas, pixels in X thus can be grouped into K spatial clusters based on spectral similarity. Let \(X_k\in {\mathbb {R}^{n_b \times n_k}}\) denote the pixels in the kth cluster, where \(k= 1,...,K\) and \(n_k\) is the number of pixels in this cluster. Given a proper spectra dictionary \(\mathrm {\Phi }\in {{\mathbb {R}}^{n_b \times n_d}}\), \(X_k\) can be sparsely represented as \(X_k = \mathrm {\Phi } Y_k\), where \(Y_k = [{\varvec{y}}^1_k,...,{\varvec{y}}^{n_k}_k]\in {\mathbb {R}^{n_d \times {n_k}}}\) is the sparse representation matrix. Given \(Y = [Y_1,...,Y_K]\), \(X = \mathrm {\Phi } Y\) with a permutation on columns. Let sparse vectors \({\varvec{y}}^{i}_k\) be denoted by nodes V in a graph \(G=(V,E)\), where E represents edges connecting such nodes. The probability distribution of Y can be characterized by a Gibbs distribution as

where \(E_{\mathrm {csf}}\) is the potential function defined on each cluster and Z is a normalization term. In this study, \(E_{\mathrm {csf}}(Y_k)\) is defined by tow potentials \(\varphi \left( Y_k\right) \) and \(\psi (Y_k)\), which models the correlation across spectrum and spatial similarity in an HSI, respectively.

2.1 Structured Sparsity Potential

Natural signals (e.g., spectra in HSIs) often produce approximately sparse representation vectors on the given dictionary [18], each of which contains many entries close to but not equal to zero. Moreover, the correlation among signal entries is reflected by the specific structure in each sparse vector [11], e.g., the tree structure of natural signal in wavelet domain. Therefore, it is essential to depict the structured sparsity in \(Y_k\) to model the correlation across spectrum in HSIs. Recently developed reweighted Laplace prior [11] specializes in depicting the structure in approximately sparse signals. Hence, we impose the reweighted Laplace prior on each \(Y_k\). First, we define \(\varphi \left( Y_k\right) \) as

where \(\Vert Y_k\Vert _{\varGamma _k} = \sqrt{\mathbf {tr}(Y^T_k\varGamma ^{-1}_kY_k)}\) denotes a weighted trace norm on \(Y_k\) and \({\varvec{\gamma }}_k = [\gamma _{1k},...,\gamma _{n_dk}]^T\). Then, a Gamma distribution is imposed on \({\varvec{\gamma }}_k\) as

where \({\varvec{\varpi }}_k=[\varpi _{1k},...,\varpi _{n_dk}]^T\). When each \(\psi \left( Y_k\right) = 0\), the CSF prior in Eq. (1) degenerates to a product of K matrix normal distributions. By integrating \({\varvec{\gamma }}_k\), the hierarchical CSF prior in Eqs. (1), (3) amounts to a joint reweighted Laplace prior for each cluster as

where \(\varOmega _k = \mathbf {diag}(\hat{\varvec{\varpi }}_k)\) and \(\hat{\varvec{\varpi }}_k =[\sqrt{\varpi _{1k}},...,\sqrt{\varpi _{n_dk}}]^T\). In contrast to [11] without clustering, the hierarchical CSF prior tries to capture different structures for various clusters. Moreover, the product operation in Eq. (4) forces all clusters to fit their corresponding joint structured sparsity simultaneously.

2.2 Graph Structure Potential

HSIs especially for natural scene HSIs often contain locally smooth areas and recurrent patterns in the spatial domain, which result in abundant spatial similarity in HSIs. Owing to the fact that spatially similar pixels often show similar spectra [19], after spectral similarity based clustering, pixels from locally smooth areas or recurrent patterns fall into the same cluster. Thus, the spatial (i.e., both local and non-local) similarity can be naturally modelled by the intra-cluster spectral similarity. In each cluster, high similarity guarantees that a considered spectrum can be well reconstructed by others. With a given dictionary, such relationship is trivially preserved in the corresponding sparse representation of spectra. Therefore, we introduce a graph structure potential as

to describe the desired intra-cluster similarity, where each node \({\varvec{y}}^{i}_k\) is linearly represented in terms of other nodes in the same cluster. \(\varSigma _{k} = \mathbf {diag}({\varvec{\eta }}_k)\), where \({\varvec{\eta }}_k = [\eta _{1k},...,\eta _{n_kk}]^T\) denotes the variance of the representation error. \(W_k=[{\varvec{w}}^1_k,...,{\varvec{w}}^{n_k}_{k}]\in {\mathbb {R}^{n_k \times n_k}}\) is the representation weight matrix with \(\mathbf {diag}(W_k)=\mathbf {0}\), which implies the node itself is excluded in its representation. Due to the high intra-cluster spectral similarity, representation error \(\psi \left( Y_k\right) \) could be quite small and sparse. To model the sparse error, a Gamma distribution similar to Eq. (3) is imposed on \({\varvec{\eta }}_k\) as

where \({\varvec{\nu }}_k = [\nu _{1k},...,\nu _{n_kk}]^T\). When \(\varphi \left( Y_k\right) = 0\), this hierarchical prior in Eqs. (1), (6) results in a sparse prior on the representation error as Eq. (4).

The self-expressiveness relation [20] in Eq. (5) implicitly defines a graph structure with \(W_k\). Specifically, each node \({\varvec{y}}^{i}_k\) is fully connected with other nodes in \(Y_k\), and the edges are defined by the weight matrix \(W_k\). When entries of \(W_k\) exactly equal to zero, the corresponding edges are pruned. In other words, the specific graph structure on \(Y_k\) is defined by \(W_k\). To learn the graph structure in each cluster flexibly, the normal distribution is imposed on each weight vector in \(W_k\) independently as

where \({\mathbf {I}}\) is an identity matrix with proper size and \(\epsilon \) is a predefined scalar. This \(\epsilon \)-parametrized normal distribution constrains the \(\ell _2\) norm of each weight vector \({\varvec{w}}^{i}_k\) to avoid over-fitting in graph structure learning.

Substituting those two potentials \(\varphi \left( Y_k\right) \) and \(\psi \left( Y_k\right) \) into Eq. (1), we obtain the whole graphic model of Y as

where \(\varphi \left( Y_k\right) \) and \(\psi \left( Y_k\right) \) act as the unary term and the high-order term, respectively. With those corresponding hyperpriors \(p\left( {\varvec{\gamma }}_k|{\varvec{\varpi }}_k\right) \), \(p\left( {\varvec{\eta }}_k|{\varvec{\nu }}_k\right) \) and \(p(W_k|\epsilon )\), the whole hierarchical CSF prior for the sparse representation Y can be written as

where \(\mathbf {\Theta } = \left\{ {\varvec{\gamma }}_k,{\varvec{\varpi }}_k,{\varvec{\eta }}_k, {\varvec{\nu }}_k, W_k\right\} ^{K}_{k=1}\). This prior models the spectral correlation and the spatial similarity in HSIs simultaneously by defining the structured sparsity and graph structure based intra-cluster similarity within the sparse representation model.

To employ this prior for HSIs denoising, we have to determine the unknown parameters \(\mathbf {\Theta }\). Most previous graphic models learn parameters with a set of training examples [21, 22]. However, training examples often show different clustering results, thus learning parameters from training examples is infeasible for the data-specific CSF prior. To address this problem and capture the data-dependent structure of HSIs, we learn the prior parameters directly from the noisy observation in the following section.

3 HSIs Denoising with CSF

Similar as many previous works [5, 6], we adopt the noisy observation model for HSIs as \(F = X + N\), where \(F\in {\mathbb {R}^{{n_b}\times {n_p}}}\) is the noisy observation and \(N\in {\mathbb {R}^{{n_b}\times {n_p}}}\) represents the noise. In this study, we mainly focus on the signal-independent noise model, where the noise can be well modelled by Gaussian white noise. In practical hyperspectral imaging, various bands of HSIs often bear different levels of noise [23]. Therefore, we assume noise N comes from a matrix normal distribution \(\mathcal {MN}\left( \mathbf {0},\varSigma _n,\mathbf {I}\right) \), where \(\varSigma _n = \mathbf {diag}({\varvec{\lambda }})\in {{\mathbb {R}}^{n_b \times n_b}}\) and \({\varvec{\lambda }}=\left[ \lambda _1,...,\lambda _{n_b}\right] ^T\) controls the various noise levels in \(n_b\) bands. With a proper spectrum dictionary \(\mathrm {\Phi }\), we have the following likelihood

Given the CSF prior \(p_{\mathrm{csf}}(Y|\mathbf {\Theta })\), we can infer Y by the maximum a posteriori (MAP) estimation as

Then, \(X^{opt}=\mathrm{Phi} Y^{opt}\). To this end, we learn the parameter \(\mathbf {\Theta }\) and noise level \({\varvec{\lambda }}\) directly from the noisy observation in advance by solving the following problem

Due to the coupled variables in \(p_\mathrm{csf}(Y|\mathbf{\Theta })\), it is intractable to solve Eq. (12) directly. To address this issue, we first give an approximation to \(p(Y|\{{\varvec{\gamma }}_k,{\varvec{\eta }}_k, W_k\}^K_{k=1})\) in \(p_{\mathrm {csf}}(Y|\mathbf {\Theta })\), then simplify this problem to a tractable regularized regression model.

3.1 Approximated CSF and Inference

To approximate \(p(Y|\{{\varvec{\gamma }}_k,{\varvec{\eta }}_k, W_k\}^K_{k=1})\), we first replace \(Y_kW_k\) in Eq. (8) with \(M_k = Y'_kW_k\), where \(Y'_k\) is the sparse representation of \(X_k\) from the previous iteration. Since the representation error \(Y_k - M_k\) and \(Y_k\) are almost independent [24], we then approximate \(p(Y|\{{\varvec{\gamma }}_k,{\varvec{\eta }}_k, W_k\}^K_{k=1})\) as

When the independence assumption stands and \(M_k = Y_kW_k\) (i.e., \(Y'_k=Y_k\)), the equality of Eq. (13) holds. This approximation simplifies the subsequent optimization and performs well in extensive experiments shown in Sect. 4.

Based on this approximation, the MAP inference in Eq. (11) and prior learning in Eq. (12) can be integrated into a unified regularized regression model as

where \(F_k\) is the observation of \(X_k\) and \(\mathbf{\Lambda }_k = (\varGamma ^{-1}_k + \varSigma ^{-1}_k + \mathrm{\Phi }^T\varSigma ^{-1}_n\mathrm{\Phi })^{-1}\). \(\Vert \cdot \Vert _F\) is the Frobenius norm. Equation (14) describes the relation between all unknown variables and the observation. Moreover, we can learn the data-dependent CSF prior, noise level and the desired sparse representation simultaneously by solving Eq. (14).

3.2 Optimization Procedure

In this study, the alternative minimization scheme [11, 24] is adopted to optimize Eq. (14). This scheme reduces original problem into several simpler subproblems, which are then alternatively optimized in each iteration until convergence. To start, we conduct K-meansFootnote 1 on an initialized HSI \(\hat{X}\) (the initialization will be illustrated in Sect. 4) to group the pixels of \(\hat{X}\) into K clusters based on the spectrum similarity.

A. Graph structure estimation. Given \(X_k\) and \({\Phi }\), we can estimate the weight matrix \(W_k\) in the kth cluster from Eq. (14) asFootnote 2

This least square regression based subspace clustering problem can be effectively solved by the algorithm in [25]. Given \(W_k\) and \(Y_k\), \(M_k\) can be obtained as \(M_k = Y_kW_k\).

B. Sparse representation reconstruction. With the updated \(M_k\) for each cluster, the subproblem for Y simplifies to

where \(\mathbf {\Theta }\) and \(\varvec{\lambda }\) are the auxiliary variables for optimizing Y. This minimization problem can be divided into three subproblems, namely \(Y_k\)-subproblem, \(\mathbf {\Theta }\)-subproblem and \(\varvec{\lambda }\)-subproblem. Firstly, \(Y_k\)-subproblem can be written as

which gives a closed-form solution as \(Y_k = \mathbf{\Lambda }_k({\Phi }^T\varSigma ^{-1}_nF_k + \varSigma ^{-1}_kM_k)\). Then, given \(Y_k\), we can obtain \(\mathbf{\Theta }\)-subproblem as

which can be solved effectively (see footnote 2) by employing alternative minimization on each variable in \(\mathbf {\Theta }\) as [11, 26]. Finally, \(\varvec{\lambda }\)-subproblem can be formulated as

which produces a closed-form solution (see footnote 2) as [11].

C. Dictionary learning and clustering. Currently, the commonly used dictionary is either a universe one (e.g., DCT dictionary) or learned from training examples (e.g., K-SVD [27]). However, they mainly capture the common characteristics over different images rather than the data-dependent characteristics of the desired HSI. To address this problem, we learn a PCA spectra dictionary as \({\Phi }\) from the denoised HSI at each iteration, then K-means (see footnote 1) are conducted to group the pixels in the denoised image into K clusters. At the first iteration, the PCA dictionary is learned from the initialized HSI. Algorithm 1 describes the whole procedure of CSF prior based HSIs denoising. The alternative minimization scheme decreases the cost function at each iteration, thus guaranteeing algorithm converges as [11, 26].

4 Experiments and Analysis

4.1 Experiments on Synthetic Data

In this section, we evaluate CSFHD on the CAVE dataset [28]Footnote 3. This dataset consists of 32 HSIs on real-world scenes. Each image contains \(512 \times 512\) pixels and 31 bands which are captured at a 10 nm wavelength interval in the range from 400 nm to 700 nm. In experiments, we crop subimages of \(200 \times 200\) pixels from all HSIs as the experimental data and each data is normalized into [0, 1] in advance. To simulate the various levels of noise across bands, \(n_b\) levels of Gaussian white noise with standard deviation uniformly sampled in the range of \([\sigma _n - \sigma _n/2, \sigma _n + \sigma _n/2]\) are added into \(n_b\) bands of the HSI X to generate the noisy observation F. \(\sigma _n\) denotes the average standard deviation across different bands, which indicates the level of noise corruption on the whole X. Larger \(\sigma _n\) denotes higher level of noise corruption. In experiments, we choose \(\sigma _n\) from 0.1 to 0.35 at a 0.05 interval to simulate different levels of noise corruption on the whole HSI.

In the denoising procedure, to demonstrate the superiority of the proposed method, 5 state-of-the-art hyperspectral denoising methods are employed for comparison, including VBM4D [29], LRTA [5], PARAFAC [6], TDL [7] and TDLNP [7]. Only VBM4D and TDL require \(\sigma _n\) as input parameter. TDLNP is the special version of TDL without given \(\sigma _n\). To further illustrate the effectiveness of the structured sparsity potential and the graph structure potential in the proposed method, we implemented two special versions of CSFHD, namely CSFHD_S and CSFHD_G. CSFHD_S only considers the structured sparsity in spectral domain, while CSFHD_G only considers the graph structure based spectral similarity in spatial domain. For CSFHD, the denoised result of TDLNP is utilized as the initialization \(\hat{X}\). \(T = 4\), \(K = 30\) are adopted for Algorithm 1. Same parameters are adopted by CSFHD_S and CSFHD_G. Given the noisy F, all methods are conducted to recover the clean HSIs.

To assess the performance of different methods quantitatively, three evaluation measures are adopted in this study, including peak signal-to-noise ratio (PSNR), structure similarity (SSIM) and spectral angle map (SAM). PSNR measures the numerical difference between the target image and the reference one, while SSIM calculates their spatial structure similarity. Different with PSNR and SSIM, SAM reflects the performance of spectrum denoising by measuring the average spectrum angles between the spectra from the target image and the reference one. In comparison, larger PSNR, SSIM and smaller SAM denote better performance. For each method, the average results of those three measures are obtained on the whole CAVE dataset.

Effectiveness of different potentials: The comparison of average performance among CSFHD_S, CSFHD_G and CSFHD is shown as the bar charts in Fig. 1, and the detailed numerical comparison is provided in Table 1. We can observe that CSFHD produces significantly superior performance over CSFHD_S. Specifically, when \(\sigma _n = 0.2\), compared with CSFHD_S, the PSNR of CSFHD increases by 12 db, SSIM increases by 0.6 and SAM decreases by 27 degree. This demonstrates that only considering the spectral structured sparsity in CSFHD_S is not sufficient. To further clarify this point, the visual comparison on the denoising results of ‘Toy’ image from the CAVE dataset is provided in Fig. 2, where two areas of interest are zoomed for easy observation. We find that the restored result of CSFHD_S contains a lot of noise and the spatial smoothness in the homogeneous areas are damaged. Similar situations occur on other bands. Therefore, only considering the spectral structured sparsity in CSFHD_S fails to preserve the spatial similarity in HSIs. By contrast, with introducing the intra-cluster spectral similarity based graph structure potential, CSFHD_G and CSFHD both preserve the spatial similarity well and produces smooth homogeneous areas. Therefore, the proposed graph structure potential is effective in representing the spectral similarity in spatial domain. Additional, CSFHD shows obvious superiority over CSFHD_G for denoising. For example, when \(\sigma _n=0.2\), the PSNR of CSFHD is higher than that of CSFHD_G by 1.2 db, and the SAM reduces by 2.8 degree. Moreover, in the visual comparison on ‘Toy’ image, CSFHD gives more clear and sharper result than CSFHD_G. This in turn demonstrates the importance of modelling the correlation across spectrum in HSIs with the structured sparsity potential. Based on the above analysis, we can conclude that the structured sparsity potential and the graph structure potential are effective in representing the correlation across spectrum and the spatial similarity, and thus are beneficial to HSIs denoising.

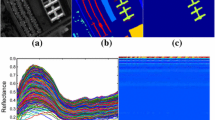

Superiority of the proposed method: The average PSNR, SSIM and SAM of denoised results on the CAVE dataset from different methods are listed in Table 1. Under different levels of noise, CSFHD gives the highest PSNR, while VBM4D is the second best method. When \(\sigma _n = 0.2\), the average PNSR of CSFHD is 0.9 db higher than VBM4D and 5.5 db higher than its initialization TDLNP. In addition, CSFHD exhibits superior performance on preserving the spatial structures. With different levels of noise, it always obtains higher SSIM than other methods. When \(\sigma _n = 0.1\), only CSFHD gives SSIM larger than 0.9. To make this point more clear, the visual comparison among the denoised results from all methods are given in Fig. 2. Compared with the results from other methods which contain noise corruption or blur at some extent, CSFHD produces more clear and sharper result. In the respect of spectrum, CSFHD produces the smallest SAM among all comparison methods. For example, compared with other methods, the SAM of CSFHD decreases by at least 1.7 degree when \(\sigma _n = 0.2\). To further illustrate this point, we plot the spectral reflectance difference curves of all comparison methods in Fig. 3. The curve of each method is interpolated by those discrete spectral reflectance difference across all bands between the denoised HSI and the reference one at a give spatial position. It is clear that CSFHD gives the smallest difference at all three chosen positions. Based on those above results, we can conclude that the proposed CSFHD method shows stable superiority on HSIs denoising over 5 state-of-the-art methods.

Effect of the cluster number K : In this part, we conduct experiments to explore the effect of K on the performance of HSIs denoising. For different scenes, the resulted HSIs often have different clustering results. Thus, two images ‘Flower’ and ‘Toy’ are selected from the CAVE dataset as the experimental data. Each image is corrupted with two different levels of noise with \(\sigma _n = 0.1\) and \(\sigma _n = 0.3\) as previous experiments. CSFHD is employed to denoise those two images with different K, which is selected in the range of [1, 5, 10, 15, ..., 400] at a 5 interval. The curves of PSNR, SSIM and SAM versus K are plotted in Fig. 4. First row gives the results on the ‘Flower’ image and the results on ‘Toy’ images are provided in the second row. First, we find that there is the best \(K_{opt}\) for each image. When \(K < K_{opt}\), pixels from different categories are grouped into the same cluster. Spectra difference from different categories cannot guarantee that each node in the cluster is well represented by others with sparse representation error. Furthermore, the uniform structured sparsity potential on one cluster is not appropriate for spectra from different categories. Therefore, the performance decreases. When \(K > K_{opt}\), although each node in the cluster can be well represented by the uniform structured sparsity potential, the similar spectra are grouped into different small clusters. Too small amount of similar spectra will reduce the representation precision of each node. For example, in the extreme case with \(K = n_p\), each pixel is grouped into an individual cluster, which corresponds to ignoring the spectral similarity in spatial domain. Hence, when K is too large, the performance is also reduced. In addition, we find something interesting that the trend of curves and \(K_{opt}\) are similar under different levels of noise for the same image. Moreover, \(K_{opt}\) varies in different images, e.g., \(K_{opt} = 30\) in ‘Flower’ while \(K_{opt} = 50\) in ‘Toy’. For simplicity, we set \(K=30\) for the whole CAVE dataset.

Effect of approximation: We investigate the effect of approximation in Eq. (13) by denosing the ‘Flower’ image from the CAVE dataset when the image is corrupted with band-various noise with the average \(\sigma _n = 0.2\) as previous experiments. Since the representation error \(Y_k - M_k\) and the sparse signal \(Y_k\) are often likely independent [24], we only need to check the difference of sparse representation matrices in two successive iterations as \(Y'_k - Y_k\) for all k. For each cluster, we define the error at each node as the \(\ell _2\) norm of the difference of sparse representation vectors from two successive iteration, which is denoted as \(\Vert {\varvec{y}}'^i_k - {\varvec{y}}^i_k\Vert _2\) (\({\varvec{y}}'^i_k\) is the corresponding node in \(Y'_k\)). After calculating the error at each node defined in the whole image, we can obtain a error map shown as the first row in Fig. 5. From those error maps, we can obviously find that the error is constantly reduced with the increase of iteration, viz., \(Y'_k\) is more and more close to \(Y_k\) for all k. This implies the approximation in Eq. (13) is more and more accurate and the algorithm converges well, both of which guarantee to produce the constantly refined denoising result shown as the second row in Fig. 5.

4.2 Experiment on Real Data

In this section, we further test the proposed method on the real noisy HSI dataset, INDIANAFootnote 4. This image is of size \(145\times 145\) with 10 m spatial resolution and consists of 220 bands covering the wavelength in the range from 400 nm to 2500 nm by 10 nm spectral resolution. Before denoising, we remove the atmospheric and water absorption bands from bands 150–163 from the original HSI [30]. As a result, there are only 206 bands used in the following experiment. This image contains different levels of noise corrupted bands, including heavy noise corruption bands, light noise corruption bands and nearly noise-free bands, respectively. In the denoising procedure, CSFHD is given the same parameters as previous experiments. VBM4D, LRTA, PARAFAC, TDLNP, CSFHD_S and CSFHD_G are employed as the comparison methods. Since the real \(\sigma _n\) is unknown, here we only select the non-parameter version TDLNP of TDL. VBM4D is also tuned to be the noise estimation mode.

To easily observe the superior performance of the proposed method on denoising the real HSI dataset, we provide the denoised 204 band of the INDIANA dataset from all comparison methods in Fig. 6, where two areas of interest are zoomed for easy observation. It is clear that the proposed CSFHD not only appropriately removes the noise corruption but also preserves the spatial structure well. In contrast to CSFHD, VBM4D, PARAFAC and CSFHD_G remove a certain amount of noise corruption but fail to preserve the spatial structure. The edges in their denoising results are blurred, especially in PARAFAC. While the denoising results of LRTA, TDLNP and CSFHD_S still contain obvious noise corruption.

5 Conclusion

In this study, we present an effective HSIs denoising method, where a novel CSF prior is proposed for the sparse representation of an HSI to better exploit its intrinsic correlation across spectrum and spatial similarity simultaneously. In specific, by grouping the pixels of an HSI into several clusters with spectral similarity, the CSF prior defines two different potentials on each cluster. Among them, the structured sparsity potential models the correlation across spectrum in the HSI by exploring the structure in the sparse representation, while the graph structure potential defines the intra-cluster spectral similarity to model the spatial similarity in the HSI. With a proper approximation, we integrate the CSF prior learning and MAP inference in image denoising into a variational framework for optimization, where the data-dependent structure in the CSF prior can be learned directly from the noisy observation without any training examples. With the learned prior, the correlation across spectrum and spatial similarity are properly preserved in the denoising results. Extensive experiments on synthetic and real noisy HSIs demonstrate the effectiveness of the proposed method in HSIs denoising.

Notes

- 1.

It should be noted that other clustering methods could also be used instead of the K-means.

- 2.

Detailed derivation can be found in the supplementary material.

- 3.

- 4.

References

Wang, Z., Nasrabadi, N.M., Huang, T.S.: Semisupervised hyperspectral classification using task-driven dictionary learning with Laplacian regularization. IEEE Trans. Geosci. Remote Sens. 53(3), 1161–1173 (2015)

Van Nguyen, H., Banerjee, A., Chellappa, R.: Tracking via object reflectance using a hyperspectral video camera. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, pp. 44–51. IEEE (2010)

Akbari, H., Kosugi, Y., Kojima, K., Tanaka, N.: Detection and analysis of the intestinal Ischemia using visible and invisible hyperspectral imaging. IEEE Trans. Biomed. Eng. 57(8), 2011–2017 (2010)

Kerekes, J.P., Baum, J.E.: Full-spectrum spectral imaging system analytical model. IEEE Trans. Geosci. Remote Sens. 43(3), 571–580 (2005)

Renard, N., Bourennane, S., Blanc-Talon, J.: Denoising and dimensionality reduction using multilinear tools for hyperspectral images. IEEE Geosci. Remote Sens. Lett. 5(2), 138–142 (2008)

Liu, X., Bourennane, S., Fossati, C.: Denoising of hyperspectral images using the PARAFAC model and statistical performance analysis. IEEE Trans. Geosci. Remote Sens. 50(10), 3717–3724 (2012)

Peng, Y., Meng, D., Xu, Z., Gao, C., Yang, Y., Zhang, B.: Decomposable nonlocal tensor dictionary learning for multispectral image denoising. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 2949–2956. IEEE (2014)

Othman, H., Qian, S.E.: Noise reduction of hyperspectral imagery using hybrid spatial-spectral derivative-domain wavelet shrinkage. IEEE Trans. Geosci. Remote Sens. 44(2), 397–408 (2006)

Greer, J.B.: Sparse demixing of hyperspectral images. IEEE Trans. Image Process. 21(1), 219–228 (2012)

Rasti, B., Sveinsson, J.R., Ulfarsson, M.O., Benediktsson, J.A.: Hyperspectral image denoising using a new linear model and sparse regularization. In: IEEE International Geoscience and Remote Sensing Symposium, pp. 457–460. IEEE (2013)

Zhang, L., Wei, W., Zhang, Y., Tian, C., Li, F.: Reweighted Laplace prior based hyperspectral compressive sensing for unknown sparsity. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 2274–2281. IEEE (2015)

Qian, Y., Shen, Y., Ye, M., Wang, Q.: 3-D nonlocal means filter with noise estimation for hyperspectral imagery denoising. In: Proceedings of the IEEE International Geoscience and Remote Sensing Symposium, pp. 1345–1348. IEEE (2012)

Maggioni, M., Boracchi, G., Foi, A., Egiazarian, K.: Video denoising, deblocking, and enhancement through separable 4-D nonlocal spatiotemporal transforms. IEEE Trans. Image Process. 21(9), 3952–3966 (2012)

Buades, A., Coll, B., Morel, J.M.: A non-local algorithm for image denoising. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, vol. 2, pp. 60–65. IEEE (2005)

Dabov, K., Foi, A., Katkovnik, V., Egiazarian, K.: Image denoising by sparse 3-D transform-domain collaborative filtering. IEEE Trans. Image Process. 16(8), 2080–2095 (2007)

Qian, Y., Ye, M.: Hyperspectral imagery restoration using nonlocal spectral-spatial structured sparse representation with noise estimation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 6(2), 499–515 (2013)

Fu, Y., Lam, A., Sato, I., Sato, Y.: Adaptive spatial-spectral dictionary learning for hyperspectral image denoising. In: Proceedings of the IEEE Conference on Computer Vision, pp. 343–351 (2015)

Baraniuk, R.G., Cevher, V., Wakin, M.B.: Low-dimensional models for dimensionality reduction and signal recovery: a geometric perspective. Proc. IEEE 98(6), 959–971 (2010)

Camps-Valls, G., Tuia, D., Bruzzone, L., Atli Benediktsson, J.: Advances in hyperspectral image classification: earth monitoring with statistical learning methods. IEEE Signal Process. Mag. 31(1), 45–54 (2014)

Li, B., Zhang, Y., Lin, Z., Lu, H., Center, C.M.I.: Subspace clustering by mixture of Gaussian regression. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 2094–2102 (2015)

Lin, D., Fisher, J.: Manifold guided composite of Markov random fields for image modeling. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 2176–2183. IEEE (2012)

Schmidt, U., Roth, S.: Shrinkage fields for effective image restoration. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 2774–2781. IEEE (2014)

Zhang, H., He, W., Zhang, L., Shen, H., Yuan, Q.: Hyperspectral image restoration using low-rank matrix recovery. IEEE Trans. Geosci. Remote Sens. 52(8), 4729–4743 (2014)

Dong, W., Zhang, D., Shi, G.: Centralized sparse representation for image restoration. In: Proceedings of the IEEE Conference on Computer Vision, pp. 1259–1266. IEEE (2011)

Lu, C.-Y., Min, H., Zhao, Z.-Q., Zhu, L., Huang, D.-S., Yan, S.: Robust and efficient subspace segmentation via least squares regression. In: Fitzgibbon, A., Lazebnik, S., Perona, P., Sato, Y., Schmid, C. (eds.) ECCV 2012. LNCS, vol. 7578, pp. 347–360. Springer, Heidelberg (2012). doi:10.1007/978-3-642-33786-4_26

Wipf, D.P., Rao, B.D., Nagarajan, S.: Latent variable Bayesian models for promoting sparsity. IEEE Trans. Inf. Theory 57(9), 6236–6255 (2011)

Aharon, M., Elad, M., Bruckstein, A.: K-SVD: an algorithm for designing overcomplete dictionaries for sparse representation. IEEE Trans. Signal Process. 54(11), 4311–4322 (2006)

Yasuma, F., Mitsunaga, T., Iso, D., Nayar, S.K.: Generalized assorted pixel camera: postcapture control of resolution, dynamic range, and spectrum. IEEE Trans. Image Process. 19(9), 2241–2253 (2010)

Maggioni, M., Katkovnik, V., Egiazarian, K., Foi, A.: Nonlocal transform-domain filter for volumetric data denoising and reconstruction. IEEE Trans. Image Process. 22(1), 119–133 (2013)

Yuan, Q., Zhang, L., Shen, H.: Hyperspectral image denoising employing a spectral-spatial adaptive total variation model. IEEE Trans. Geosci. Remote Sens. 50(10), 3660–3677 (2012)

Acknowledgements

This work is in part supported by National Natural Science Foundation of China (No. 61231016, 61301192 and 61571354), Fundamental Research Funds for the Central Universities (No. 3102015JSJ0006), Innovation Foundation for Doctoral Dissertation of Northwestern Polytechnical University (No. CX201521) and Australian Research Council grants (DP140102270, DP160100703, FT120100969).

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

1 Electronic supplementary material

Below is the link to the electronic supplementary material.

Rights and permissions

Copyright information

© 2016 Springer International Publishing AG

About this paper

Cite this paper

Zhang, L., Wei, W., Zhang, Y., Shen, C., van den Hengel, A., Shi, Q. (2016). Cluster Sparsity Field for Hyperspectral Imagery Denoising. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds) Computer Vision – ECCV 2016. ECCV 2016. Lecture Notes in Computer Science(), vol 9909. Springer, Cham. https://doi.org/10.1007/978-3-319-46454-1_38

Download citation

DOI: https://doi.org/10.1007/978-3-319-46454-1_38

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-46453-4

Online ISBN: 978-3-319-46454-1

eBook Packages: Computer ScienceComputer Science (R0)