Abstract

Centroid index is the only measure that evaluates cluster level differences between two clustering results. It outputs an integer value of how many clusters are differently allocated. In this paper, we apply this index to other clustering models that do not use centroid as prototype. We apply it to centroid model, Gaussian mixture model, and arbitrary-shape clusters.

Similar content being viewed by others

Keywords

1 Introduction

Clustering aims at partitioning a data set of n points into k clusters. External index measures how similar a clustering solution is to a given ground truth (if available), or how similar two clustering solutions are. This kind of measure is needed in clustering ensemble, measuring stability and evaluating performance of clustering algorithms.

In clustering algorithms, one of the main challenges is to solve the global allocation of the clusters instead of just tuning the partition borders locally. Despite of this, most external cluster validity indexes calculate only point-level differences without any direct information about how similar the cluster-level structures are.

Rand index (RI) [1] and Adjusted Rand index (ARI) [2] count the number of pairs of data points that are partitioned consistently in both clustering solutions (A and B); if a pair of points is allocated in the same cluster in A, they should be allocated into the same cluster also in B. This provides estimation of point-level similarity but does not give much information about the similarity at cluster level.

More sophisticated methods operate at the cluster level. Mutual information (MI) [3] and Normalized mutual information (NMI) [4] measure the amount of information (conditional entropy) that can be obtained from a cluster in A using the clusters in B. Set-matching based measures include Normalized van Dongen (NVD) [5] and Criterion-H (CH) [6]. They match the clusters between A and B, and measure the amount of overlap between the clusters, see Fig. 1. However, all of them measure point-level differences. What is missing is a simple structural cluster-level measure.

Figure 2 demonstrates the situation with three clustering results: k-means (KM) [7], random swap (RS) [8] and agglomerative clustering (AC) [9]. The clustering structure of RS and AC is roughly the same, and their differences come mostly from the minor inaccuracies of the centroids in the agglomerative clustering. The result of the k-means has a more significant, structural difference: one centroid is missing at the top, and there are too many centroids at the bottom.

Some indexes (ARI, NVD, CH) indicate that RS-vs-AC in Fig. 2 are more similar (ARI = 0.91; NVD = 0.05; CH = 0.05) than RS-vs-KM (ARI = 0.88; NVD = 0.07; CH = 0.10), or AC-vs-KM (ARI = 0.82; NVD = 0.10; CH = 0.14) but the numbers do not tell that their global structure is the same.

In a recent work [10], we introduced a cluster level index called centroid index (CI) to cope with this problem by measuring the number of clusters allocated differently. For each centroid in A, the method finds its nearest centroid in B. Then it calculates the indegree-values for each centroid in B; how many times it was selected as the nearest. An orphan centroid (indegree = 0) indicates that a cluster is differently located in the clustering structure. The index value is the count of these orphans so that CI-value indicates the number of cluster-level differences. Value CI = 0 indicates that the clustering structures are identical, CI = 1 that one cluster mismatch, and so on.

The measure is somewhat rough as it ignores the point-level differences. A simple point-level extension called centroid similarity index (CSI) was therefore also considered. However, the main idea to measure the cluster level differences is best captured by the raw CI-value, or relative to the number of clusters: CI/k. In Fig. 2, CI-value clearly tells that RS and AC have similar clustering structure (CI = 0), and that k-means has one difference (CI = 1).

A limitation of CI is that we must have the centroids. It is possible to use other clustering models like k-medoids [11] and k-modes [12] by finding the nearest medoid/mode using the distances in the feature space. However, in more complex clustering models like Gaussian mixture model (GMM), density-based or arbitrary-shape clustering, it is not as simple.

In this paper, we show how to apply centroid index by using partitions instead of the centroids. For every cluster in A, we first find its most similar cluster in B by calculating the amount of overlap by Jaccard coefficient, which is the number of shared points divided by the total number of distinctive data points in the two clusters:

where A i and B j are the matching (most similar) clusters.

However, we do not sum up the overlaps but we further analyze the nearest neighbor mapping. The cluster-to-cluster similarity is now determined at point level, but the overall value is still measured at the cluster level. The measure is calculated as the number of orphan clusters (indegree = 0) as in the original definition of centroid index. Thus, the method now generalizes from the centroid-model to other clustering models independent on how the cluster is represented: centroid, medoid, mode, or even no prototype used at all.

For example, in Fig. 1, the topmost clusters are mapped to each other, and the bottom-most clusters to each other. This results in mapping where all indegree = 1 for both clusters, and CI-value becomes 0. Therefore, these two solutions have the same clustering structure and they differ only at the point level.

2 External Cluster Validity

Existing cluster validity indexes can be divided into three categories: pair-counting, information theoretic and set-matching based measures:

-

Pair-counting measures

-

Information theoretic measures

-

Set-matching based measures

We next briefly recall the idea of set-matching based methods [13]. The clusters are first either paired (NVD) or matched (CH and CI). In pairing, best pair of the clusters in A and B are found by minimizing the sum of the similarities of the paired clusters. Hungarian [13], or greedy algorithm [6, 14] has been used to solve it.

In matching, nearest neighbour is searched. This does not always result in bijective mapping where exactly two clusters are paired, but several clusters in A can be mapped to the same cluster in B (or vice versa), see Fig. 3. The mapping is not symmetric, and is usually done in both ways: A→B and B→A.

The similarities of the matched/paired clusters are then calculated by summing up the total overlap. In [13], the values are first normalized by the maximum cluster size. In NVD, CH and CSI, the normalization is performed after the summation by dividing the total number of shared points by the total number of points:

where NN(A i ) is the cluster in B that A i is matched/paired with. Centroid index (CI) finds the nearest centroid without any use of the partitions:

where c[A i ] and c[B j ] are the centroids of A and B. The other difference is that CI does not use the point-level measure (2). Instead, it calculates the number of mappings (indegree) made for each cluster B j, and then sums up the number of clusters in B that has not been mapped at all (indegree = 0). These are called orphans:

where Oprhan(B) has value 1 if no cluster in A is mapped to it:

In order to have symmetric index, we perform mappings in both ways: A→B and B→A. The CI-value is then defined as the maximum of these two:

To sum up, the index is easy to calculate, and the result has clear interpretation: how many clusters are differently allocated. An example is shown in Fig. 4.

3 Centroid Index Using Different Clustering Models

The main limitation of the centroid index is that it requires centroids, which may not exist in other clustering models such as k-medoids [11], k-modes [12], Gaussian mixture model (GMM), density-based and arbitrary-shape clustering. To apply the index to other models, we consider the following alternative approaches:

-

Prototype similarity

-

Partition similarity

-

Model similarity

3.1 Prototype vs. Partition Similarity

K-medoids and k-modes clustering operate also in a feature space (usually Euclidean), so generalization to them is trivial using the prototype similarity approach. We just find the nearest prototype in the other solution using the distance in the feature space. It does not matter whether the prototype is centroid, medoid or mode.

The second approach uses the partitions of the two clustering solutions. The matching of each cluster is done by finding the most similar cluster in the other solution using (1). This applies to any partition-based clustering and it can be calculated from the contingency table in O(N·k 2) time [2]. Contingency table tells how many shared points two clusters A i and B j have. See Table 1 for an example.

This partition similarity approach applies also to centroid-based clustering but it may be slower to calculate. Finding the most similar prototype takes only O(dk 2), where d is the number of dimensions. This is expected to be faster than the O(N) + O(k 2) of the partition similarity; unless if the dimensionality or the number of clusters is very high. For S1–S4 data (http://cs.uef.fi/sipu/datasets) the estimated numbers are 450 distance calculations for the prototype similarity, and 4352 counter additions for the partition similarity. However, observed values in Table 2 show that the calculation of the squared distance takes so much longer that the speed benefit is practically lost. Thus, the partition-based variant is virtually as fast already when d = 2.

It is also possible that the two approaches provide different mappings. Even then, the resulting CI-value is expected to be mostly the same. Table 2 also reports the number of times the two approaches give different CI-value.

The third approach is to derive the similarity of clusters directly from the probability density function of the model. Next we study this for Gaussian mixture model.

3.2 Model Similarity

Gaussian mixture model (GMM) represents every cluster by its centroid and covariance matrix. This increases the size of the model from O(1) to O(d 2) per cluster. However, there is often not enough data to estimate the covariances reliably. A simplified variant therefore considers the diagonal of the covariance matrix, thus, reducing the model size to O(d). We also use this simplified variant here.

Expectation maximization (EM) [16] algorithm optimizes GMM analogous to k-means. It iterates Expectation and Maximization steps in turn to optimize loglikelihood. It also suffers the same problem as k-means: stucks into a local optimum. Better variants include split-and-merge [17], genetic algorithm [18] and random swap [19].

For comparing two clusters (mixtures), we can use any of the three approaches. Prototype similarity approach ignores the covariance and just finds the nearest centroid in the other solution. Partition similarity performs hard partition by assigning each point into the cluster with maximum likelihood, after which the partition similarity can be calculated using the contingency table.

For the model similarity, we use here Bhattacharyya coefficient. It measures the similarity between two probability distributions p and q, and is calculated as follows:

For two multivariate normal distributions, it can be written as:

where c[A i ] and c[B j ] are the means (centroids), Σ1 and Σ2 are the covariance matrices of the two clusters A i and B j. and Σ is the average of Σ1 and Σ2. The first term in (8) represents the Mahalonobis distance, which is a special case of Bhattacharyya when the covariance matrixes of the two distributions are the same as is the case in GMM.

Figure 5 shows an example of two GMM models by SMEM [17] and RSEM [19] algorithms. We compare the results by calculating the various indexes from the resulting partitions. The result of CI was calculated by all the three approaches; all resulted into the same mapping, and gave exactly the same value CI = 2.

3.3 Arbitrary Partitions

Arbitrary-shape clustering differs from the model-based clustering in that there is no model or prototype for the clusters. This kind of clustering problem is often solved based on connectivity. For example, single link algorithm [20] results in a minimum spanning forest where each cluster is represented by the minimum spanning tree of the points in the cluster.

To compare such clustering results by CI, we use the partition similarity because it is independent on the chosen clustering model. All what is required is that we can access the partitions. However, in on-line clustering where huge amount of data is processed, the original data points might not be stored but deleted immediately when their contribution to the model is calculated. In this case, the partition-based similarity cannot be applied but in most offline applications, we do have access to partitions.

Examples of data with arbitrary-shape clusters is shown in Fig. 6 when clustered by k-means (left) and single link (right). K-means misses the two smaller clusters, and divides the bigger ones in the middle and on the top. Single link makes three mistakes in total, by merging three real clusters and by creating three false clusters of size one.

4 Experiments

Here we provide numerical comparison of the centroid index (CI) against the selected existing indexes. We use the following data sets:

-

S1–S4 [15]: 5000 points in 15 clusters.

-

Unbalanced [13]: 6500 points in 8 clusters

-

Birch2 [23]: 100,000 points in 100 clusters

-

Aggregate [24]: 788 points in 7 clusters

-

Compound [25]: 399 points in 6 clusters

We apply the following algorithms: K-means (KM), K-means++ (KM++) [26], Random Swap (RS) [8], Genetic Algorithm (GA) [27] for the data with spherical clusters; Single Link (SL) [21], DBSCAN [21], Split-and-Merge (SAM) [22] for data with arbitrary-shape clusters. K-means is applied for both.

Same clustering results with the corresponding validity index values are given in Tables 3. Unbalanced is rather easy to cluster by good algorithm but k-means fails because random initialization tends to select all centroids from the bigger clusters to the left, and only one centroid will move to cover the five small clusters, see Fig. 7. Thus, leaving four other clusters empty, which results in CI = 4. Most other indexes react to this but their exact values tell very little about how severe the error is, whereas the CI-value tells that half (4/8) of the clusters are wrongly allocated.

The results for the data with arbitrary-shaped clusters are similar. DBSCAN and SAM work well for the Aggregate providing perfect clustering structure (CI = 0) although DBSCAN leaves out few points as outliers. Compound is more challenging and all the methods make 2 or 3 errors in the clustering structure, usually merging the leftmost clusters and creating too many on the right.

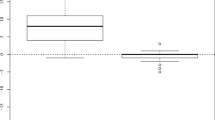

Finally, we study how the indexes react when randomness is added increasingly to artificially created partitions (for details see [13]). Figure 8 shows that the centroid index does not react at all for these point-level changes as long as most points keeps in the original cluster. The values of the set-based measures (NVD, CH, Purity) decrease linearly, which shows that they are most appropriate to measure point-level changes.

5 Conclusions

Centroid Index (CI) is the only validity index that provides cluster level measure. It tells exactly how many clusters are differently allocated, which is more useful information than counting point-level differences. In this paper, we applied it to other clustering models such as Gaussian mixture and data with arbitrary-shaped clusters. Its main advantage is that the significance of the index value can be trivially concluded: value CI > 0 indicate that there is a significant difference in the clustering structure.

References

Rand, W.M.: Objective criteria for the evaluation of clustering methods. J. Am. Stat. Assoc. 66(336), 846–850 (1971)

Hubert, L., Arabie, P.: Comparing partitions. J. Classif. 2, 193–218 (1985)

Vinh, N.X., Epps, J., Bailey, J.: Information theoretic measures for clusterings comparison: variants, properties, normalization and correction for chance. J. Mach. Learn. Res. 11, 2837–2854 (2010)

Kvalseth, T.O.: Entropy and correlation: some comments. IEEE Trans. Syst. Man Cybern. 17(3), 517–519 (1987)

Dongen, S.V.: Performance criteria for graph clustering and Markov cluster experiments. Technical report INSR0012, Centrum voor Wiskunde en Informatica (2000)

Meila, M., Heckerman, D.: An experimental comparison of model based clustering methods. Mach. Learn. 41(1–2), 9–29 (2001)

MacKay, D.: An example inference task: clustering. In: MacKay, D. (ed.) Information Theory, Inference and Learning Algorithms, pp. 284–292. Cambridge University Press, Cambridge (2003)

Fränti, P., Kivijärvi, J.: Randomised local search algorithm for the clustering problem. Pattern Anal. Appl. 3(4), 358–369 (2000)

Fränti, P., Virmajoki, O., Hautamäki, V.: Fast agglomerative clustering using a k-nearest neighbor graph. IEEE TPAMI 28(11), 1875–1881 (2006)

Fränti, P., Rezaei, M., Zhao, Q.: Centroid index: cluster level similarity measure. Pattern Recogn. 47(9), 3034–3045 (2014)

Kaufman, L., Rousseeuw, P.J.: Finding Groups in Data: An Introduction to Cluster Analysis. Wiley, New York (1990)

Huang, Z.: A fast clustering algorithm to cluster very large categorical data sets in data mining. Data Min. Knowl. Disc. 2(3), 283–304 (1998)

Rezaei, M., Fränti, P.: Set matching measures for external cluster validity. IEEE Trans. Knowl. Data Eng. 28(8), 2173–2186 (2016)

Zhao, Q., Fränti, P.: Centroid ratio for pairwise random swap clustering algorithm. IEEE Trans. Knowl. Data Eng. 26(5), 1090–1101 (2014)

Fränti, P., Virmajoki, O.: Iterative shrinking method for clustering problems. Pattern Recogn. 39(5), 761–765 (2006)

Dempster, A., Laird, N., Rubin, D.: Maximum likelihood from incomplete data via the EM algorithm. J. Roy. Stat. Soc. B 39, 1–38 (1977)

Udea, N., Nakano, R., Gharhamani, Z., Hinton, G.: SMEM algorithm for mixture models. Neural Comput. 12, 2109–2128 (2000)

Pernkopf, F., Bouchaffra, D.: Genetic-based em algorithm for learning Gaussian mixture models. IEEE Trans. Pattern Anal. Mach. Intell. 27(8), 1344–1348 (2005)

Zhao, Q., Hautamäki, V., Kärkkäinen, I., Fränti, P.: Random swap EM algorithm for Gaussian mixture models. Pattern Recogn. Lett. 33, 2120–2126 (2012)

Jain, A.K., Dubes, R.C.: Algorithms for Clustering Data. Prentice-Hall Inc., Upper Saddle River (1988)

Ester, M., Kriegel, H.P., Sander, J., Xu, X.: A density-based algorithm for discovering clusters in large spatial databases with noise. In: KDDM, pp. 226–231 (1996)

Zhong, C., Miao, D., Fränti, P.: Minimum spanning tree based split-and-merge: a hierarchical clustering method. Inf. Sci. 181, 3397–3410 (2011)

Zhang, T., et al.: BIRCH: a new data clustering algorithm and its applications. Data Min. Knowl. Disc. 1(2), 141–182 (1997)

Gionis, A., Mannila, H., Tsaparas, P.: Clustering aggregation. ACM Trans. Knowl. Disc. Data (TKDD) 1(1), 1–30 (2007)

Zahn, C.T.: Graph-theoretical methods for detecting and describing gestalt clusters. IEEE Trans. Comput. 100(1), 68–86 (1971)

Arthur, D., Vassilvitskii, S.: K-means ++: the advantages of careful seeding. In: ACM-SIAM Symposium on Discrete Algorithms (SODA 2007), pp. 1027–1035, January 2007

Fränti, P.: Genetic algorithm with deterministic crossover for vector quantization. Pattern Recogn. Lett. 21(1), 61–68 (2000)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2016 Springer International Publishing AG

About this paper

Cite this paper

Fränti, P., Rezaei, M. (2016). Generalizing Centroid Index to Different Clustering Models. In: Robles-Kelly, A., Loog, M., Biggio, B., Escolano, F., Wilson, R. (eds) Structural, Syntactic, and Statistical Pattern Recognition. S+SSPR 2016. Lecture Notes in Computer Science(), vol 10029. Springer, Cham. https://doi.org/10.1007/978-3-319-49055-7_26

Download citation

DOI: https://doi.org/10.1007/978-3-319-49055-7_26

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-49054-0

Online ISBN: 978-3-319-49055-7

eBook Packages: Computer ScienceComputer Science (R0)