Abstract

Face recognition has been widely applied to identification/authentication systems [1,2,3,4,5,6]. However, a considerable drawback of conventional face recognition, when used alone, is its limited ability to distinguish between a living human user and 2D photos or pre-recorded videos of the user’s face. To address the risk of these systems being bypassed with photos or recordings of users, our study proposes an interactive authentication system that requires users to follow a pattern displayed onscreen with their gaze. The system uses the subject’s eye movement and facial features during viewing for user authentication, while randomly generating the displayed pattern in real time so that no prepared video can fake user authentication. Because gaze movement is an inseparable part of the face, our system ensures that the facial and eye features belong to the same live human following the displayed pattern. To demonstrate its deployment in an eye-controlled system, we developed a gaze-controlled game that relies on the user’s eye movement for input and applied the authentication system to the game.


1 Literature Review

Face recognition applications [7] have been widely deployed in security systems. With the ever-accelerating development of face recognition technology, more and more identification work is carried out by face recognition. Common applications include [8] access control systems for classified departments, login systems for laptops, and unlocking systems for mobile phones. Owing to its convenience, efficiency and user-friendliness, face recognition has become one of the most important authentication methods.

The biggest criticism of face recognition is that such systems are unable to determine the liveness of a face, that is, to tell apart a real person from photos or videos of that person [9]. Common attacks on face recognition systems are based on users’ photos or video recordings. In fact, even when a user is unconscious or asleep, their face can still be used to pass authentication. As demonstrated at the Black Hat security conference, a widely recognized face recognition authentication system was easily bypassed with a color image of a user [10].

To overcome this limitation, various adaptations of existing face recognition technology have been proposed. Li [11] proposed a Fourier spectrum analysis method to evaluate face liveness based on two observations: (1) a face in a photo has fewer high-frequency components than a real human face, and (2) the temporal variation of the frequency spectrum of a face photo is subtle. Kollreider [13] applied a motion-based method over successive frames, which assumes that real human faces have a distinct 3D structure and therefore produce a characteristic 2D motion pattern on the image plane when they move. One main feature of this pattern is that the inner regions of the face move over a wider range than the outer regions. Kollreider [14] also used mouth movement to carry out liveness authentication. Pan [12] presented a liveness detection method based on the different stages of eye blinks. Bao [15] applied optical flow vectors of the face region computed from video sequences, which prevented bypass with face images. Nevertheless, a pre-recorded face video with movement of the facial organs can still bypass Bao’s system. In another approach, Kim [16] proposed an infrared face recognition system using 685 nm and 850 nm infrared light. The light intensity information from the face (RGB value and brightness) is projected into a 2D space, where real human faces and masked faces are more easily distinguished using Linear Discriminant Analysis (LDA). However, Kim’s system needs two conditions for better accuracy: no occluding objects on the forehead and a constant distance between the camera and the user. In a more recent study, Tronci [17] implemented a liveness detection algorithm combining motion information with pattern information (i.e., the locations of feature points and the movement of these points), using the feature points to carry out verification and the movement information to carry out liveness authentication.
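To make the first cue in Li [11] concrete, the NumPy sketch below computes the share of an image’s spectral energy that lies above a radial frequency cutoff; a flat print of a face would be expected to score lower than a live capture. The function name `high_frequency_ratio` and the `cutoff` value are illustrative assumptions, not the descriptor or threshold defined by Li et al., and the temporal cue (2) is not modeled.

```python
import numpy as np


def high_frequency_ratio(gray: np.ndarray, cutoff: float = 0.25) -> float:
    """Share of spectral energy above a normalized radial frequency cutoff.

    Hypothetical helper: `cutoff` is a fraction of the Nyquist frequency
    (1.0 = Nyquist along one axis); the default 0.25 is an assumption.
    """
    img = gray.astype(np.float64)
    img = img - img.mean()  # drop the DC term so overall brightness does not dominate
    power = np.abs(np.fft.fft2(img)) ** 2
    h, w = img.shape
    fy = np.fft.fftfreq(h)[:, None]  # cycles per pixel, same layout as fft2 output
    fx = np.fft.fftfreq(w)[None, :]
    radius = np.sqrt(fx ** 2 + fy ** 2) / 0.5  # normalize so that Nyquist = 1.0
    total = power.sum()
    if total == 0.0:
        return 0.0  # a perfectly flat image carries no non-DC energy
    return float(power[radius > cutoff].sum() / total)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    noise = rng.random((64, 64))  # rich in high frequencies
    x = np.arange(64)
    smooth = 100 + np.outer(np.sin(2 * np.pi * x / 64), np.ones(64))  # one slow cycle
    print(high_frequency_ratio(noise) > high_frequency_ratio(smooth))  # True
```

In practice such a ratio would only be one feature; a threshold separating photos from live faces would have to be learned from real captures.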

Following the direction of these developments, we compare the user’s eye movement with an interactive visual pattern display, which is unpredictable and therefore cannot be prepared for in advance, to achieve high-precision aliveness detection together with face recognition. Motivated by the goal of constructing a gaze-controlled system, we first applied the live gaze-based authentication to an eye-controlled game.

2 Methods

2.1 Construction of the Whole System

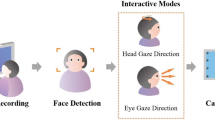

We used eye tracking for two applications: live authentication and gaze-controlled gameplay. The whole system works as shown in Fig. 1. During the live authentication stage, the user is instructed to follow with their eyes a white dot moving along a circular trajectory. At the same time, our program uses the video stream to compute the locations of the user’s pupil centers and compares their movement with the trajectory of the displayed moving dot. If the user’s eye movement forms a circle-like or ellipse-like ring, the user is judged ‘Alive’; if not, access is denied immediately. In addition, several images are captured for face recognition at random time points while the user follows the moving dot. We employed OpenCV [20] to detect faces and the Eigenfaces algorithm to recognize them. The five most similar pictures from the video frames of each person were saved in a results folder along with their similarity scores, and the recognition results were also stored in a log file. Users had to pass both the eye-movement trajectory match and face recognition to be authenticated before proceeding to gameplay.
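The decision rule described above can be sketched in Python as follows. The function names, the uniform random choice of capture frames, and the rule that every captured frame must match the same enrolled identity are assumptions made for illustration; the face matcher itself (OpenCV plus Eigenfaces in this work) is abstracted into a list of per-frame recognition results.

```python
import random
from typing import List, Optional, Sequence


def pick_capture_frames(n_frames: int, n_captures: int,
                        seed: Optional[int] = None) -> List[int]:
    """Pick distinct frame indices at random times during the dot display.

    Hypothetical helper: uniform random selection is an assumption.
    """
    rng = random.Random(seed)
    return sorted(rng.sample(range(n_frames), n_captures))


def authenticate(is_alive: bool,
                 capture_ids: Sequence[Optional[str]]) -> Optional[str]:
    """Return the recognized user ID, or None if access is denied.

    Access requires (a) a passed liveness check and (b) every randomly
    captured frame being recognized as the same enrolled user
    (None means that frame matched nobody in the database).
    """
    if not is_alive or not capture_ids:
        return None
    first = capture_ids[0]
    if first is None or any(cid != first for cid in capture_ids):
        return None
    return first


if __name__ == "__main__":
    # About 7 s of pattern display at 10 frames per second gives 70 frames.
    frames = pick_capture_frames(n_frames=70, n_captures=5, seed=1)
    print(frames)
    print(authenticate(True, ["user3", "user3", "user3"]))  # user3
    print(authenticate(True, ["user3", None, "user3"]))     # None
```

Requiring agreement across frames taken at unpredictable moments is what ties the recognized face to the eyes that are tracing the pattern.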

For our second application, we deployed Nightmare, a game that runs on Unity3D with the EyeTribe eye tracker and the EyeTribe SDK. The SDK records the trajectory of the eye movements of users in front of the screen. Users were randomly assigned to start with either the eye or the mouse controller condition and then switched to the other controller in a counterbalanced sequence. Users played the role of city defenders eliminating zombies in a 3D gaming environment. In the mouse condition, the weapon fired after a mouse click, while in the gaze condition, the weapon fired following the user’s gaze fixation. We recorded Survival Time and the number of zombies eliminated (Hit count) during play sessions in both conditions.
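A minimal sketch of random, counterbalanced assignment of the starting controller follows. It assumes users are split as evenly as possible between mouse-first and gaze-first orders; the text does not specify how the split was made, and the function name is hypothetical.

```python
import random
from typing import Dict, Iterable, Optional, Tuple


def counterbalanced_orders(user_ids: Iterable[str],
                           seed: Optional[int] = None) -> Dict[str, Tuple[str, str]]:
    """Randomly split users between the two starting conditions.

    After shuffling, users alternate between mouse-first and gaze-first,
    so the two groups differ in size by at most one; every user then
    switches to the other controller.
    """
    rng = random.Random(seed)
    ids = list(user_ids)
    rng.shuffle(ids)
    return {
        uid: ("mouse", "gaze") if k % 2 == 0 else ("gaze", "mouse")
        for k, uid in enumerate(ids)
    }


if __name__ == "__main__":
    for uid, order in counterbalanced_orders(["u1", "u2", "u3", "u4", "u5"]).items():
        print(uid, " -> ".join(order))
```

Balancing the starting condition keeps practice effects from favoring one controller systematically.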

Authentication and gameplay are both important parts of our interactive system. The interactive pattern display requires an instant response from the user; in other words, no video can be prepared beforehand because the pattern is unpredictable. In this way, the authentication prevents bypass attempts such as access with pre-recorded photos or videos. In addition, face images are captured at randomly selected times while the gaze movement is monitored, which ensures that the face and the gaze movement come from the same person. Combining live authentication with face recognition makes the aliveness authentication more practical and robust.

The game portion of the current development gives an example of an eye-controlled system, providing an interesting potential application field for video games. The goal of this work is to construct a gaze-controlled gaming system that can be authorized in a novel and secure way.

2.2 Face Recognition and Living Authentication

Equipment and Setup.

EyeTribe is an eye tracking device shaped like a slim bar, with infrared illuminators on each side and an infrared camera in the center, as shown in Fig. 2. After turning on the EyeTribe camera, the illuminators and its data server, we used the videoGrabber example of the openFrameworks (Ofx) project to access the EyeTribe camera on a Mac computer and capture the video stream directly from it.

When the EyeTribe UI is open, the left area indicates the eye detection status: green when the eyes are detected and red when they are not. If the eyes are not detected, the user needs to change their head angle and position until the area turns green. This works as a quality-monitoring feature.

The ‘calibrate’ button (Fig. 3) starts the calibration procedure, during which several points (up to 16) appear on the screen. The user needs to focus on these points to complete the calibration. Calibration quality is rated on five levels: Perfect, Good, Acceptable, Poor and Re-Calibration. Once the user obtains an Acceptable (or better) rating, the calibration step is complete. We performed calibration through the EyeTribe UI before gameplay.

Computing the Location of the Eyes.

During the live authentication stage, users were instructed to follow with their eyes a white dot moving along a trajectory, in this example a circle. The screen used in this experiment is 15 inches with a resolution of 1366 × 768. The radius of the circle is set to half of the screen height, and the dot moves at 240 degrees/second. The trajectory is shown in Fig. 4(A).
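The stated parameters fix the timing: with a radius of 768/2 = 384 px and an angular speed of 240°/s, one revolution takes 360/240 = 1.5 s, which is about 15 frames of a 10 frame-per-second camera stream, and a circle centred on the screen just touches its top and bottom edges. The sketch below generates the dot positions under the assumption that the circle is centred on the screen. The abstract calls for a pattern generated at random in real time, while this experiment used a fixed circle, so the random start angle and direction in `random_pattern` are a hypothetical illustration only.

```python
import math
import random
from typing import Optional, Tuple

SCREEN_W, SCREEN_H = 1366, 768   # resolution from the text
RADIUS = SCREEN_H / 2            # half the screen height = 384 px
SPEED_DEG_PER_S = 240.0          # angular speed from the text


def dot_position(t: float, start_deg: float = 0.0,
                 direction: int = 1) -> Tuple[float, float]:
    """Screen position (x, y) of the moving dot t seconds after it starts.

    Assumptions: the circle is centred on the screen and y grows downward,
    so direction=+1 moves clockwise on screen and -1 counter-clockwise.
    """
    cx, cy = SCREEN_W / 2, SCREEN_H / 2
    theta = math.radians(start_deg + direction * SPEED_DEG_PER_S * t)
    return cx + RADIUS * math.cos(theta), cy + RADIUS * math.sin(theta)


def random_pattern(seed: Optional[int] = None) -> Tuple[float, int]:
    """Hypothetical randomization: a random start angle and direction."""
    rng = random.Random(seed)
    return rng.uniform(0.0, 360.0), rng.choice((1, -1))


if __name__ == "__main__":
    period = 360.0 / SPEED_DEG_PER_S  # 1.5 s per revolution
    start_deg, direction = random_pattern()
    # Sample the dot at 10 frames per second for one revolution.
    for k in range(int(period * 10)):
        x, y = dot_position(k / 10, start_deg, direction)
        print(f"t={k / 10:.1f}s  x={x:.0f}  y={y:.0f}")
```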

While the main program runs in the background, several images were captured for face recognition at random time points as the user followed the moving dot. The EyeTribe was used as an imaging unit to output images of the user’s face directly (Fig. 4(B)). The image sequences from the EyeTribe are grayscale, with a resolution of 1280 × 768 at 10 frames per second. These images are used for face recognition as well as for computing the eye locations from the video stream.

In the meantime, several outputs are extracted from the image sequences and recorded:

- Start point of Eye Boxes (SB): the boxes are the regions of interest, i.e., the eye regions (red rectangles in Fig. 4). SB is the location of the top-left corner of a box in the image. In this experiment, the boxes are used to compute the center of the eyeball so that the track of the eye movement is recorded.
- Glints Center (GC): GC is the center of the glint points.
- Pupil Center (PC): PC is the center of the pupil (yellow points in Fig. 4(C)).

GC and SB are calculated with the eye detection algorithm, and PC is calculated with the Starburst algorithm.

Eye Detection and Tracking.

Under ideal lighting conditions, there should be two glints inside the eye region, one from each illuminator. However, under different environmental lighting, more glints are sometimes observed, which can reduce tracking accuracy. We used a chinrest to reduce head movement.

We applied the SmartGaze program to compute the geometric center of the glints and then cropped a 100 × 80 pixel box around this geometric center. Before computing the pupil center, the glints are filled by setting their gray level to 0, so that the pupil area becomes a dark continuous region without bright spots. The pupil center (PC) is then identified with the Starburst algorithm: starting from an appropriate point inside the pupil area, the intensity gradient along rays cast outward is used to detect the pupil edge, and more edge points are detected iteratively. Finally, the pupil edge is fitted with an ellipse, and the center of the ellipse is taken as the center of the pupil.
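The steps above can be sketched in NumPy as follows. This is a simplified stand-in, not the SmartGaze or original Starburst code: glints are found with a fixed brightness threshold, the starting point is the glint center (assumed to lie inside the pupil), rays are cast only from the current start point (which is then moved to the mean of the detected edge points) without the secondary rays of the published algorithm, and the ellipse is fitted by plain algebraic least squares instead of the RANSAC fit used in the published Starburst algorithm. All function names and thresholds (`glint_thresh`, `grad_thresh`, `n_rays`) are hypothetical.

```python
from typing import Optional, Tuple

import numpy as np

Point = Tuple[float, float]


def glint_center(eye: np.ndarray, glint_thresh: int = 240) -> Optional[Point]:
    """Geometric center (x, y) of all pixels bright enough to be glints."""
    ys, xs = np.nonzero(eye >= glint_thresh)
    if xs.size == 0:
        return None
    return float(xs.mean()), float(ys.mean())


def crop_box(frame: np.ndarray, center: Point, w: int = 100, h: int = 80):
    """Crop a w x h eye box around `center`; also return its top-left corner (SB)."""
    x0 = max(int(round(center[0])) - w // 2, 0)
    y0 = max(int(round(center[1])) - h // 2, 0)
    return frame[y0:y0 + h, x0:x0 + w], (x0, y0)


def fill_glints(eye: np.ndarray, glint_thresh: int = 240) -> np.ndarray:
    """Set glint pixels to gray level 0 so the pupil becomes one dark region."""
    out = eye.copy()
    out[out >= glint_thresh] = 0
    return out


def starburst_edges(eye: np.ndarray, start: Point, n_rays: int = 36,
                    grad_thresh: float = 40.0, max_r: int = 60) -> np.ndarray:
    """Walk outward along rays from `start`; keep the first dark-to-bright jump."""
    img = eye.astype(np.float64)
    h, w = img.shape
    pts = []
    for ang in np.linspace(0.0, 2 * np.pi, n_rays, endpoint=False):
        dx, dy = np.cos(ang), np.sin(ang)
        prev = img[int(round(start[1])), int(round(start[0]))]
        for r in range(1, max_r):
            x, y = int(round(start[0] + r * dx)), int(round(start[1] + r * dy))
            if not (0 <= x < w and 0 <= y < h):
                break
            if img[y, x] - prev > grad_thresh:  # pupil (dark) -> iris (brighter)
                pts.append((x, y))
                break
            prev = img[y, x]
    return np.array(pts, dtype=np.float64)


def ellipse_center(pts: np.ndarray) -> Point:
    """Least-squares conic a x^2 + b xy + c y^2 + d x + e y + f = 0; return its center."""
    x, y = pts[:, 0], pts[:, 1]
    design = np.column_stack([x * x, x * y, y * y, x, y, np.ones_like(x)])
    a, b, c, d, e, _ = np.linalg.svd(design)[2][-1]  # smallest singular vector
    # The center is where both partial derivatives of the conic vanish.
    cx, cy = np.linalg.solve([[2 * a, b], [b, 2 * c]], [-d, -e])
    return float(cx), float(cy)


def pupil_center(eye: np.ndarray, n_iter: int = 3) -> Optional[Point]:
    """Glint center -> fill glints -> iterative ray casting -> ellipse center."""
    start = glint_center(eye)  # assumption: the glints lie inside the pupil
    if start is None:
        return None
    filled = fill_glints(eye)
    pts = np.empty((0, 2))
    for _ in range(n_iter):
        pts = starburst_edges(filled, start)
        if len(pts) < 5:
            return None
        start = (float(pts[:, 0].mean()), float(pts[:, 1].mean()))  # re-center
    return ellipse_center(pts)
```

Returning the crop origin alongside the box keeps the SB coordinates needed to map the pupil center back into the full camera frame.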

Aliveness Authentication.

In parallel with the gaze tracking function, our program compares the eye movement with the trajectory of the displayed moving dot. Figure 5 shows the location of the left eye when a person is following the movement of the white dot (A) and when they are not (B).

A Monte Carlo simulation [18] based method was then applied to compare pattern similarity. As shown in Fig. 5(C), the similarity comparison process is as follows:

First, the center of all the eye location points is calculated and taken as the origin O of a rectangular coordinate system. The points are divided into four quadrants, I, II, III and IV, and the number of points in each quadrant is counted as S1, S2, S3 and S4. The percentage of points in quadrant i is then obtained through Eq. (1):

$$\mathrm{Percentage}(i) = \frac{S_i}{S_1 + S_2 + S_3 + S_4}, \qquad i = 1, 2, 3, 4 \qquad (1)$$

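For a ring traced around O, the points are spread roughly evenly over the four quadrants, so each Percentage(i) is close to one quarter. If any Percentage(i) exceeds a threshold value, the trace of the user’s eye movement is judged not to be circle-like or ellipse-like. Otherwise, the program continues.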

Second, the distance from every point to the center O is computed, and the nearest point A1near and the distance OA1near are found (to avoid bias from points that are too close to O, OA1near must be longer than a threshold value). Then, taking O as the center and OA1near as the radius, we obtain the red circle in Fig. 5(C). If the number of points inside the red circle is larger than a threshold percentage of all the points, the trace of the user’s eye movement is judged not to be circle-like or ellipse-like. Otherwise, the identification continues.

Finally, if the user’s eye movement forms a circle-like or ellipse-like ring, the user is judged ‘Alive’; if not, access is denied immediately. The captured images were then used for face recognition with the Eigenface algorithm [19].
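The two checks can be sketched as follows, with the thresholds `quad_max`, `d_min` and `inner_max` as hypothetical placeholders because the text does not give their values. The sketch implements only the deterministic quadrant and inner-circle tests described above and does not reproduce any Monte Carlo component. Note that, as described, the red circle’s radius OA1near is the smallest distance above the `d_min` threshold, so the points counted inside it are exactly those lying within `d_min` of O. The test therefore rejects traces with too many samples clustered at the center, as happens when the eyes stay still.

```python
from typing import Sequence, Tuple

import numpy as np


def is_ring_like(points: Sequence[Tuple[float, float]], quad_max: float = 0.4,
                 d_min: float = 10.0, inner_max: float = 0.2) -> bool:
    """Return True if the eye-location trace looks like a circle or ellipse.

    Thresholds are hypothetical: quad_max is the largest allowed
    Percentage(i), d_min the minimum admissible OA1near (pixels), and
    inner_max the largest allowed fraction of points inside the circle.
    """
    pts = np.asarray(points, dtype=np.float64)
    if len(pts) == 0:
        return False
    o = pts.mean(axis=0)  # step 1: center O of all eye locations
    dx, dy = pts[:, 0] - o[0], pts[:, 1] - o[1]
    counts = np.array([
        np.sum((dx >= 0) & (dy >= 0)),  # S1
        np.sum((dx < 0) & (dy >= 0)),   # S2
        np.sum((dx < 0) & (dy < 0)),    # S3
        np.sum((dx >= 0) & (dy < 0)),   # S4
    ])
    percentage = counts / len(pts)  # Eq. (1), as fractions
    if percentage.max() > quad_max:
        return False
    dist = np.hypot(dx, dy)  # step 2: distances to O
    far = dist[dist > d_min]
    if far.size == 0:
        return False  # no point is far enough from O to define OA1near
    r_near = far.min()  # OA1near
    inside = np.sum(dist < r_near)
    return bool(inside / len(pts) <= inner_max)


if __name__ == "__main__":
    angles = np.deg2rad(np.arange(3, 360, 6))
    circle = np.column_stack([300 + 100 * np.cos(angles), 200 + 100 * np.sin(angles)])
    print(is_ring_like(circle))  # True
```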

Eigenface Algorithm for Face Recognition.

The idea of the Eigenface algorithm is to project face images from the original pixel space into a lower-dimensional “face space” spanned by the principal components (eigenfaces) of the training images, and to carry out the similarity comparison in this new space.

The dataset combined the AT&T face database (40 persons with 10 pictures each, 400 pictures in total, 40.5 MB) and the users’ faces. The training set is 72 MB in total, and the validation accuracy was 95.5%. Figure 6 shows the average face of each person in the face dataset. The last five people (in the red rectangle) are the participants in our experiment. In this experiment, we used 40 principal components.
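A minimal NumPy sketch of the Eigenface pipeline follows, assuming flattened grayscale images of equal size and nearest-neighbour matching in the projected space. It keeps up to 40 principal components, as in this experiment, and returns the distance to the closest training image, which can serve as the kind of similarity score that was logged (smaller means more similar). The class and method names are hypothetical; this is not the OpenCV implementation used in the experiment.

```python
from typing import List, Sequence, Tuple

import numpy as np


class EigenfaceModel:
    """Eigenfaces: PCA on flattened face images plus nearest-neighbour matching."""

    def __init__(self, n_components: int = 40):
        self.n_components = n_components

    def fit(self, faces: np.ndarray, labels: Sequence[str]) -> "EigenfaceModel":
        X = np.asarray(faces, dtype=np.float64)  # shape (n_images, n_pixels)
        self.mean_ = X.mean(axis=0)              # mean of all training faces
        centered = X - self.mean_
        # Rows of vt are the principal axes (eigenfaces) of the training set.
        _, _, vt = np.linalg.svd(centered, full_matrices=False)
        self.components_ = vt[: min(self.n_components, vt.shape[0])]
        self.train_weights_ = centered @ self.components_.T  # faces in face space
        self.labels_: List[str] = list(labels)
        return self

    def predict(self, face: np.ndarray) -> Tuple[str, float]:
        """Return (closest label, Euclidean distance in face space)."""
        w = (np.asarray(face, dtype=np.float64) - self.mean_) @ self.components_.T
        dists = np.linalg.norm(self.train_weights_ - w, axis=1)
        best = int(np.argmin(dists))
        return self.labels_[best], float(dists[best])
```

With fewer training images than requested components, the sketch simply keeps every available component, since a centered set of n images spans at most n − 1 meaningful directions.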

2.3 Eye-Controlled Gaming

Before the game begins, calibration is strongly recommended for a better experience and higher accuracy. The game starts with the user’s character shown in a corner of the map. All movements are made with the arrow keys on the keyboard. When the character reaches a certain spot, zombies start appearing and moving toward the user’s character (Fig. 7).

In the mouse condition, the weapon fires after a mouse click, while in the gaze condition, the weapon fires following the user’s gaze fixation. Survival time depends on the character’s health points (HP; the initial HP is 100): once the character has been touched by zombies more than 3 times, the user loses all HP and the game ends. We recorded Survival Time and the number of zombies eliminated (Hit count) for both conditions in the game’s log files.
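The text does not say how a fixation is detected before the weapon fires. One common choice is a dispersion-threshold (I-DT) test; the streaming sketch below assumes it, checking whether the most recent gaze samples stay within a small spatial spread and, if so, returning their centroid as the firing point. The window length and dispersion limit are hypothetical values that would need tuning to the tracker’s sampling rate and screen geometry, and the function name is illustrative.

```python
from typing import Optional, Sequence, Tuple

Point = Tuple[float, float]


def fixation_target(samples: Sequence[Point], window: int = 6,
                    max_dispersion: float = 40.0) -> Optional[Point]:
    """Return the fixation centroid if the last `window` gaze samples form a
    fixation under a dispersion threshold, else None (do not fire).

    Dispersion follows the I-DT definition (max x - min x) + (max y - min y),
    in screen pixels.
    """
    if window <= 0 or len(samples) < window:
        return None
    recent = samples[-window:]
    xs = [p[0] for p in recent]
    ys = [p[1] for p in recent]
    dispersion = (max(xs) - min(xs)) + (max(ys) - min(ys))
    if dispersion > max_dispersion:
        return None
    return sum(xs) / window, sum(ys) / window


if __name__ == "__main__":
    steady = [(500, 300), (502, 301), (499, 299), (501, 300), (500, 302), (498, 298)]
    sweep = [(100 + 50 * i, 300) for i in range(6)]
    print(fixation_target(steady))  # (500.0, 300.0)
    print(fixation_target(sweep))   # None
```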

3 Results

3.1 Live Authentication Results

For the first tests of the authentication system, the screen was covered so that users could not see the displayed pattern. All users were classified as ‘Not Alive’ and were denied access. Results are listed in Table 1.

The screen was then uncovered for the next tests so that the moving dot was clearly displayed, both before and after the users’ faces had been entered into our face database. Users were able to follow the dot and passed the live authentication. Interactive authentication results are listed in Table 2 (identification failed because the faces were not yet in the database) and Table 3 (passed).

3.2 Eye-Controlled Gaming

After being authenticated and gaining access, each user played the game 10 times: 5 in mouse mode and 5 in eye-controller mode. Gameplay data are listed in Table 4.

Average survival time was comparable in mouse mode (93.6 ± 18.1) and gaze mode (82.6 ± 14.6) (t-test: t(5) = 3.52, p = 0.06). The average hit count was higher with the mouse (34.7 ± 3.5) than with gaze (24.6 ± 5.2), but the difference was not significant (t(5) = 3.67, p = 0.06).

4 Conclusion and Discussions

The goal of this work is to construct a gaze-controlled gaming system that can be authorized in a novel and secure way. Gaze interaction was applied in both applications: live authentication and gaze-controlled gameplay. Live authentication with gaze tracking serves as an attractive and natural feature for the eye-controlled game. In the future, we plan to implement more functionality in this gaming system by including more gaze-controlled interactions.

Preliminary data showed that our system authorized only users who passed both face recognition and the aliveness test. Given that the participants had far more experience with the mouse as a controller than with the relatively novel gaze controller, the results suggest considerable potential for gaze-controlled gameplay.

Several factors affect the function of live authentication. Accuracy is an important factor in judging the liveness authentication result. The current pupil detection is unstable under different lighting conditions, especially for users wearing glasses. Frequent blinks also reduced the accuracy of pupil detection, causing an offset of about 2% from the actual location. The biggest limitation is that eye tracking is sensitive to head movement, so we used a chinrest to reduce head movement. In a future study, we will try to achieve stable pupil detection accuracy without the help of the chinrest. Given that most liveness authentication systems are used in online applications, efficiency is considered one of the most critical factors in their design. In our experiment, liveness authentication with the pattern display takes about 7 s, which is longer than most static face recognition systems. Moreover, we currently use only a circle as the interactive pattern. Random patterns and instant judgement are needed to improve the robustness of the whole system. As a next step, random patterns generated with a more advanced algorithm will be implemented in the liveness authentication to make the pattern unpredictable. One of our next goals is to add different types of displayed patterns and shorten the pattern display time, while continuing to improve accuracy.

For face recognition, we used Eigenface as a classical method; however, recent deep learning methods such as the Incremental Convolutional Neural Network (ICNN) have shown high efficiency in face recognition. Implementing a deep learning method is therefore a good option for our future work.

This was our first exploration of live gaze-based authentication and its application in a gaze-controlled system. The current gameplay still requires a keyboard for navigation. Eventually, we would like to construct a system that includes live gaze-based authentication and various keyboard-free, gaze-controlled games.

References

[1] Daugman, J.G.: Biometric personal identification system based on iris analysis. U.S. Patent 5,291,560, 1 March 1994

[2] Piosenka, G.V., Chandos, R.V.: Unforgeable personal identification system. U.S. Patent 4,993,068, 12 February 1991

[3] Lanitis, A., Taylor, C.J., Cootes, T.F.: Automatic face identification system using flexible appearance models. Image Vis. Comput. 13(5), 393–401 (1995)

[4] Miller, S.P., Neuman, B.C., Schiller, J.I., et al.: Kerberos authentication and authorization system. In: Project Athena Technical Plan (1987)

[5] Alfieri, R., Cecchini, R., Ciaschini, V., et al.: VOMS, an authorization system for virtual organizations. In: Grid Computing, pp. 33–40. Springer, Berlin (2004)

[6] Russell, E.A.: Authorization system for obtaining in single step both identification and access rights of client to server directly from encrypted authorization ticket. U.S. Patent 5,455,953, 3 October 1995

[7] Ahonen, T., Hadid, A., Pietikainen, M.: Face description with local binary patterns: application to face recognition. IEEE Trans. Pattern Anal. Mach. Intell. 28(12), 2037–2041 (2006)

[8] Yu, H., Yang, J.: A direct LDA algorithm for high-dimensional data—with application to face recognition. Pattern Recognit. 34(10), 2067–2070 (2001)

[9] Kim, Y., Na, J., Yoon, S., Yi, J.: Masked fake face detection using radiance measurements. JOSA A 26(4), 760–766 (2009)

[10] Määttä, J., Hadid, A., Pietikäinen, M.: Face spoofing detection from single images using micro-texture analysis. In: International Joint Conference on Biometrics (IJCB), pp. 1–7. IEEE (2011)

[11] Li, J., Wang, Y., Tan, T., Jain, A.K.: Live face detection based on the analysis of Fourier spectra. In: Defense and Security, pp. 296–303. International Society for Optics and Photonics (2004)

[12] Pan, G., Sun, L., Wu, Z., Lao, S.: Eyeblink-based anti-spoofing in face recognition from a generic webcamera. In: IEEE 11th International Conference on Computer Vision (ICCV 2007), pp. 1–8. IEEE (2007)

[13] Kollreider, K., Fronthaler, H., Bigun, J.: Evaluating liveness by face images and the structure tensor. In: Fourth IEEE Workshop on Automatic Identification Advanced Technologies, pp. 75–80. IEEE (2005)

[14] Kollreider, K., Fronthaler, H., Faraj, M.I., Bigun, J.: Real-time face detection and motion analysis with application in “liveness” assessment. IEEE Trans. Inf. Forensics Secur. 2(3), 548–558 (2007)

[15] Bao, W., Li, H., Li, N., Jiang, W.: A liveness detection method for face recognition based on optical flow field. In: International Conference on Image Analysis and Signal Processing (IASP 2009), pp. 233–236. IEEE (2009)

[16] Kim, Y., Na, J., Yoon, S., Yi, J.: Masked fake face detection using radiance measurements. JOSA A 26(4), 760–766 (2009)

[17] Tronci, R., Muntoni, D., Fadda, G., Pili, M., Sirena, N., Murgia, G., Ristori, M., Ricerche, S., Roli, F.: Fusion of multiple clues for photo-attack detection in face recognition systems. In: 2011 International Joint Conference on Biometrics (IJCB), pp. 1–6. IEEE (2011)

[18] Mooney, C.Z.: Monte Carlo Simulation. SAGE Publications, Thousand Oaks (1997)

[19] Turk, M., Pentland, A.: Eigenfaces for recognition. J. Cognit. Neurosci. 3(1), 71–86 (1991)

[20] OpenCV: OpenCV version 2.4.13 (released 19 May 2016). http://opencv.org/. Accessed 1 Nov 2016


Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Wang, Q., Deng, L., Cheng, H., Fan, H., Du, X., Yang, Q. (2017). Live Gaze-Based Authentication and Gaming System. In: Tryfonas, T. (eds) Human Aspects of Information Security, Privacy and Trust. HAS 2017. Lecture Notes in Computer Science, vol. 10292. Springer, Cham. https://doi.org/10.1007/978-3-319-58460-7_16


DOI: https://doi.org/10.1007/978-3-319-58460-7_16


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-58459-1

Online ISBN: 978-3-319-58460-7

eBook Packages: Computer Science, Computer Science (R0)