Abstract

In this paper we propose a feasible method based on projections using a curvilinear search for solving optimization problems with orthogonality constraints. Our algorithm computes the SVD decomposition in each iteration in order to preserve feasibility. Additionally, we present some convergence results. Finally, we perform numerical experiments with simulated problems; and analyze the performance of the proposed methods compared with state-of-the-art algorithms.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

- Constrained optimization

- Orthogonality constraints

- Non-monotone algorithm

- Stiefel manifold

- Optimization on manifolds

1 Introduction

In this paper we consider the following optimization problem with orthogonality constraints:

where \({\mathcal {F}}: \mathbb {R}^{n\times p}\rightarrow \mathbb {R}\) is a differentiable function and \(I_p\in \mathbb {R}^{p\times p}\) represents the identity matrix. The feasible set \(Stf (n,p) :=\{ X\in \mathbb {R}^{n\times p} | X^{\top }X=I \} \) is known as the “Stiefel Manifold”. This manifold is simplified to the unit sphere when \(p=1\) and in the case \(p=n\) is called “Orthogonal group”. The Stiefel manifold can be seen as an embedded sub-manifold of \(\mathbb {R}^{n\times p}\) with dimension equals to \(np- \frac{1}{2}p(p+1)\), see [1].

Problem (1) admits many applications such as, linear eigenvalue problem [14], sparse principal component analysis [4], Kohn-Sham total energy minimization [16], orthogonal procrustes problem [5], weighted orthogonal procrustes problem [6], nearest low-rank correlation matrix problem [7, 12], joint diagonalization (blind source separation) [8], among others. In addition, some problems such as PCA, LDA, multidimensional scaling, orthogonal neighborhood preserving projection can be formulated as problem (1) [9].

On the other hand, the Stiefel manifold is a compact set, which ensures that (1) has a global optimum at least. However, this manifold is not a convex set, which transforms (1) in a hard optimization problem. For example, the quadratic assignment problem (QAP) and the leakage interference minimization are NP-hard [10].

In this paper we propose a new method based on projections onto the Stiefel manifold. In particular, we study two algorithms to solve problem (1). At each iteration of the algorithms, we project the corresponding update onto the Stiefel manifold using the singular value decomposition (SVD) which guarantees to obtain a feasible sequence. Although, the SVD decomposition is computationally expensive, this is less expensive than building a geodesic. In the literature, we can find other feasible methods that solve problem (1), for example, the ones based on retractions methods use projections that involve QR factorization, polar decomposition, Gram-Schmidt process or SVD decomposition [1].

This paper is organized as follows. In Subsect. 2.1 we present some standard notation and in Subsect. 2.2 we give the optimality conditions of the problem (1), Subsect. 2.3 describes the proposed update scheme, where we present a linear search monotone algorithm and a globally convergent non-monotone algorithm for solving problem (1), Subsect. 2.4 shows different strategies to choose the step size according to Armijo-Wolfe like condition, and a non-monotone search using the Barzilai Borwein step size. Some theoretical results are presented in Sect. 3. Section 4 is dedicated to numerical experiments in order to demonstrate the efficiency and robustness of the proposed algorithms.

2 Algorithms

In the first two subsections, we introduce some standard notation and the optimality conditions of problem (1) respectively. Next subsections are devoted to introduce our proposed method.

2.1 Notation

We say that a matrix \(W\in \mathbb {R}^{n\times n}\) is skew-symmetric if \(W = -W^{\top }\). The trace of X is defined as the sum its diagonal elements, and we will denote by Tr[X]. The Euclidean inner product of two matrices \(A,B\in \mathbb {R}^{m\times n}\) is defined as \(\langle A, B\rangle : = \sum _{i,j}A_{i,j}B_{i,j} = Tr[A^{\top }B]\). The Frobenius norm is defined using the previous inner product, i.e., \(||A||_F = \sqrt{\langle A,A\rangle }\). Let \(\mathcal {F}:\mathbb {R}^{n\times p} \rightarrow \mathbb {R}\) be a differentiable function, then the derivative of \(\mathcal {F}\) with respect to X is denoted as \( G := \mathcal {DF}(X):=( \frac{\partial \mathcal {F}(X)}{\partial X_{ij}} )\) and the derivative of the function \(\mathcal {F}\) in X in the direction Z is defined as:

2.2 Optimality Conditions

The Lagrangian function associated to the optimization problem (1) is given by:

where \(I_p\) is the identity matrix and \(\varLambda \) is the Lagrange multipliers matrix, which is symmetric due to the matrix \(X^{\top }X\) is also symmetric. The Lagrangian function leads to the first order optimality conditions for problem (1):

Lemma 1

(cf. Wen and Yin [15]). Suppose that X is a local minimizer of problem (1). Then X satisfies the first order optimality conditions (4a) and (4b) with the associated Lagrangian multiplier \(\varLambda = G^{\top }X\). Defining \(\nabla \mathcal {F}(X) :=G-XG^{\top }X\) and \(A:=GX^{\top }-XG^{\top }\). Then \(\nabla \mathcal {F}(X) = AX\). Moreover, \(\nabla \mathcal {F} = 0\) if and only if \(A=0\).

Proof

See [15].

The Lemma 1 establishes an equivalence to the (4a) and (4b) conditions, i.e., if \(X\in Stf (n,p) \) satisfies that \(\nabla \mathcal {F}(X) = 0\) then X also satisfies (4a) and (4b), so we can use this result as a stopping criterion for our algorithms.

2.3 Update Schemes

In this subsection we present a linear combination based algorithm. As the new iterated of our proposals does not necessarily belong to the Stiefel Manifold, we use a projection operator, in order to force the feasibility of the new iterated. Specifically, we use the classical projection operator which is defined as \(\pi (X) := \text {arg} \min _{Q\in Stf (n,p)} ||X - Q||_F^{2}\), it is known that the solution of this problem is given by \(\pi (X) = UI_{n,p}V^{\top }\) where \(X = U\varSigma V^{\top }\) is the SVD decomposition of X, for details of the demonstration of this result see [11].

In our updating formula, we use the previous result for obtaining a new point that satisfies the constraints of the problem (1). For example, if \( Y_k(\tau )\) is obtained from our proposal, i.e., the linear combination scheme, then the new test point is:

In the next subsections we explain in more detail our updating formula \(Y_k(\tau )\).

A Scheme Based on a Linear Combination. Our proposal uses the following update formula:

where \(G_k = \mathcal {DF}(X_k)\), \(B_k = G_kL^{\top } - LG_k^{\top }\), \(C_k = G_kR^{\top } - RG_k^{\top }\), \(L,R\in \mathbb {R}^{n\times p}\), \(\tau \) is the step size and (\(\lambda \), \(\mu \)) are any two scalars satisfying:

The following lemma shows that the curve \(Y^{CL}_{k}(\cdot )\) defined by Eq. (6) is a descent curve at \(\tau =0\).

Lemma 2

Let \(Y^{CL}_{k}(\tau )\) be defined by Eq. (6), then \(Y^{CL}_{k}(\tau )\) is a descent curve at \(\tau = 0\), i.e.,

Proof

The proof is straightforward, and it can be obtained by using trace properties and using Eq. (2).

Remark 1

Note that in the updating formula (6), we can select any matrix L or R, in particular one can use matrices L, R with random entries. The parameters (\(\lambda ,\mu \)) can appropriately selected, for example, we can choose both positive. This ensures that the method will descent and may eventually converge to a local minimum. In our implementation, we select \(L = X_k\), \(R = X_{k-1}\) and \((\lambda ,\mu ) = (2/3,1/3)\).

2.4 Strategies to Select the Step Size

From now on, \(Y_k(\tau )\) represents our proposal, i.e., the based on the linear combination method.

A Descent Condition. In our method, we will choose the biggest step size \(\tau \) that satisfies the following condition:

with \(0<\sigma <1.\)

Note that Eq. (8) is not exactly the classic “Armijo condition”, since we use \(\dot{Y}_k(0)\) instead of \(\dot{Z}_k(0)\). However, if we only use the condition (8) for computing the step size, it ensures the descent of the objective function as long as the directional derivative \(Tr[\mathcal {DF}(X_k)^{\top }\dot{Y}_k(0)]\) is negative. In this work, we also study the behavior of our algorithms calculating the step size as satisfying (8).

Nonmonotone Search with Barzilai Borwein Step Size. It is known that the Barzilai-Borwein (BB) step size, see [2], can sometimes improve the performance of linear search algorithms such as the steepest descent method without adding too much computational cost. This technique considers the classic steepest descent method and proposes to use any of the following step sizes:

where \(S_k = X_{k+1}-X_k\), \(R_k = \mathcal {DF}(X_{k+1}) - \mathcal {DF}(X_k)\) and the matrix \(B(\alpha ) = (\alpha I)^{-1}\), is considered an approximation of the Hessian of the objective function. For more details see [2, 13].

Since the quantities \(\alpha _k^{BB1}\), \(\alpha _k^{BB2}\) could be negatives, the absolute value of these step sizes is usually considered. On the other hand, the BB-steps do not necessarily guarantee the descent of the objective function at each iteration, this may imply that the method does not converge. In order to solve this problematic, we use a technique that guarantees global convergence, see Refs. [3, 13] for details. In particular, we use a non-monotone line search algorithm, see [17], combined with the BB-step in order to select the step size, see Algorithm 1.

Note that when \(\eta =0\), Algorithm 1 is reduced to a monotonous algorithm which generates points satisfying the descent condition (8).

3 Theoretical Results

In this section we prove some convergence results of our Algorithm 1 when it’s use with \(\eta = 0\).

Lemma 3

Let \(\{X_k\}\) be an infinite sequence generated by Algorithm 1. Then \(\{\mathcal {F}(X_k)\}\) is a convergent sequence. Moreover any accumulation point \(X_{*}\) of \(\{X_k\}\) is feasible, i.e., \(X_{*}^{\top }X_{*} = I\).

Proof

By construction of the Algorithm 1 we have,

or equivalently,

so, \(\{\mathcal {F}(X_k)\}\) is a monotonically decreasing sequence. Now, since Stiefel manifold is a compact set and \(\mathcal {F}\) is a continuous function, we obtain that \(\mathcal {F}\) has maximum and minimum on Stf(n,p). Therefore, \(\{\mathcal {F}(X_k)\}\) is bounded, and then \(\{\mathcal {F}(X_k)\}\) is a convergent sequence.

On the other hand, let \(\{X_k\}_{k\in \mathcal {K}}\) be a convergent subsequence of \(\{X_k\}\) and suppose that this subsequence converges to \(X_{*}\), that is \(\lim _{k\in \mathcal {K}}X_k = X_{*}\), since \(X_k\) is a feasible point for all \(k\in \mathcal {K}\) and Stf(n,p) is a compact set, then we have \(X_{*}\in Stf(n,p) \), i.e.,

therefore every accumulation point is feasible.

Theorem 1

Let \(\{X_k\}\) be an infinite sequence generated by Algorithm 1. Then any accumulation point \(X_{*}\) of \(\{X_k\}\) satisfies the the first order optimality conditions.

The proof of Theorem 1 is obtained by following the ideas of the demonstration of Theorem 4.3.1 in [1] except for slight adaptations.

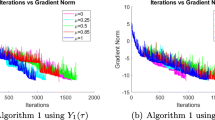

4 Numerical Experiments

In this section we analyze the performance of our method by solving several simulated experiments with the format of the problem (1), for different objective functions and different sizes of problems. We also make comparisons between some state of the art methods and our proposal, in order to measure the performance and efficiency of our algorithms.

4.1 Implementation Details

All our experiments were performed using Matlab R2013a on an Intel processor i3-380M, 2.53 GHz CPU with 500 Gb HD and 8 Gb of Ram. For the different parameters of our two algorithms, we use the following values: initial step size \(\tau =\) 1e−2, \(\sigma = \) 1e−4, \(\eta = 0\text {.}85\), \(\delta = 0\text {.}1\). Moreover, as the convergence of the first-order methods (methods using the first derivative of the objective function) can be very slow we will use several stop criteria:

and a maximum of K iterations, where

In the experiments, we used the following default values: \(xtol = \) 1e−6, \(ftol = \) 1e−12, \(T = 5\) and \(\epsilon = \) 1e−4.

In all experiments presented in the following subsections we use the following notation:

-

Nfe: The number of evaluations of the objective function.

-

Nitr: The number of iterations performed by the algorithm to convergence.

-

Time: The time (in seconds) used by the algorithm to converge.

-

NrmG: The gradient norm of the Lagrangian function with respect to primal variables evaluated at the estimated “optimal”.

-

Fval: Evaluation of the objective function at the estimated “optimal”.

-

Feasi: Corresponds to the following error \(|| \hat{X}^{\top }\hat{X} - I_p ||_F\), where \(\hat{X}\) denotes the “optimal” estimated by the algorithm.

In addition, we denote by the Steepest Descent Steep-Dest, the Trust-Region method Trust-Reg and the Conjugate Gradient method Conj-Grad from “manopt” toolboxFootnote 1, and PGST the algorithm presented in [6]. On the other hand, Linear-Co denote our Algorithm 1.

4.2 Weighted Orthogonal Procrustes Problem (WOPP)

Let \(X\in \mathbb {R}^{m\times n}\), \(A\in \mathbb {R}^{p\times m}\), \(B\in \mathbb {R}^{p\times q}\) and \(C\in \mathbb {R}^{n\times q}\). The Weighted Orthogonal Procrustes Problem (WOPP) consists in solving the following constrained optimization problem:

When C is the identity matrix with appropriate dimensions, this problem is known as Unbalanced Orthogonal Procrustes Problem (UOPP), for more details see [1].

Experiments with WOPP Problems. The problems in this subsection were taken from [18]. In particular, we considered \(n = q\), \(p = m\), \(A = PSR^{\top }\) and \(C = Q \varLambda Q^{\top }\), where P, Q and R are orthogonal matrices generated randomly with \(Q \in \mathbb {R}^{n\times n}\), \(R,P\in \mathbb {R}^{m \times m}\), \(\varLambda \in \mathbb {R}^{n\times n}\) is a diagonal matrix with entries generated from a uniform distribution in the range \([\frac{1}{2},2]\) and S is a diagonal matrix defined for each type of problem, see below for details. As a starting point \(X_0\in \mathbb {R}^{m\times n}\), we generated random matrices on the Stiefel manifold. When not specified, the entries of the matrix were generated using a standard Gaussian distribution.

For comparison purposes, we created problems with a known solution \(Q_{*}\in \mathbb {R}^{m\times n}\) randomly selected on the Stiefel manifold. Then, we built the matrix B as \(B = AQ_{*}C \). Finally, for the different tested problems the diagonal matrix S is described below.

Problem 1: The diagonal elements of S were generated by a normal distribution in the interval [10,12].

Problem 2: The diagonal of S is given by \(S_{ii} = i + 2r_i\), where \(r_i\) was a random number uniformly distributed in the interval [0, 1].

For each experiment, a total of 300 WOOP’s problems were built with the matrix S generated according to problems Problem 1 and Problem 2 respectively. The maximum number of iterations, for all methods, was \(K=8000\).

The results of the previous experiments are presented in Tables 1 and 2. We denote by Error to the standard error with respect to the global solution \(Q_{*}\), i.e., \(|| \hat{X} - Q_{*}||_F\) where \( \hat{X}\) is the optimum estimated by the algorithms. Furthermore, min, mean, max denote the minimum, maximum and average obtained by each algorithm in the 300 runs.

According to Table 1 for well-conditioned problems, i.e., Problem 1, all the algorithms present similar results. Note that PGST obtained a lower number of iterations. In general, all the methods presented a similar performance for this type of problems. On the other hand, for ill-conditioned problems, i.e., Problem 2, we observe that all the method arrived to the solution \(Q_{*}\), according to NrmG, Fval and Error measures. Moreover, our Linear-Co procedure obtained similar results compared with the PGST algorithm when \(n<m\), and when \(m=n\) Linear-Co method achieved better results that the PGST, see Table 2.

5 Conclusions

In this paper we proposed a feasible method for solving optimization problems with orthogonality constraints. This method is very general and was based on a linear combination of descent directions and using the same manifold framework. We are currently exploring several variants of this procedure. In order to preserve feasibility, our proposal requires to project onto the Stiefel manifold. In particular, we used the SVD decomposition in each iteration. In this work, we also presented some convergence results. Finally, in numerical experiments, the proposed algorithms obtained a competitive performance compared with some state of the art algorithms.

Notes

- 1.

The tool-box manopt is available in http://www.manopt.org/.

References

Absil, P.A., Mahony, R., Sepulchre, R.: Optimization Algorithms on Matrix Manifolds. Princeton University Press, Princeton (2009)

Barzilai, J., Borwein, J.M.: Two-point step size gradient methods. IMA J. Numer. Anal. 8(1), 141–148 (1988)

Dai, Y.H., Fletcher, R.: Projected barzilai-borwein methods for large-scale box-constrained quadratic programming. Numerische Mathematik 100(1), 21–47 (2005)

d’Aspremont, A., Ghaoui, L., Jordan, M.I., Lanckriet, G.R.: A direct formulation for sparse PCA using semidefinite programming. SIAM Rev. 49(3), 434–448 (2007)

Eldén, L., Park, H.: A procrustes problem on the stiefel manifold. Numerische Mathematik 82(4), 599–619 (1999)

Francisco, J., Martini, T.: Spectral projected gradient method for the procrustes problem. TEMA (São Carlos) 15(1), 83–96 (2014)

Grubisi, I., Pietersz, R.: Efficient rank reduction of correlation matrices. Linear Algebra Appl. 422(2), 629–653 (2007)

Joho, M., Mathis, H.: Joint diagonalization of correlation matrices by using gradient methods with application to blind signal separation. In: Sensor Array and Multichannel Signal Processing Workshop Proceedings, pp. 273–277. IEEE (2002)

Kokiopoulou, E., Chen, J., Saad, Y.: Trace optimization and eigenproblems in dimension reduction methods. Numer. Linear Algebra Appl. 18(3), 565–602 (2011)

Liu, Y.F., Dai, Y.H., Luo, Z.Q.: On the complexity of leakage interference minimization for interference alignment. In: 2011 IEEE 12th International Workshop on Signal Processing Advances in Wireless Communications (SPAWC), pp. 471–475. IEEE (2011)

Manton, J.H.: Optimization algorithms exploiting unitary constraints. IEEE Trans. Signal Process. 50(3), 635–650 (2002)

Pietersz, R., Groenen, P.J.: Rank reduction of correlation matrices by majorization. Quant. Fin. 4(6), 649–662 (2004)

Raydan, M.: The Barzilai and Borwein gradient method for the large scale unconstrained minimization problem. SIAM J. Optim. 7(1), 26–33 (1997)

Saad, Y.: Numerical Methods for Large Eigenvalue Problems, vol. 158. SIAM, Manchester (1992)

Wen, Z., Yin, W.: A feasible method for optimization with orthogonality constraints. Math. Program. 142(1–2), 397–434 (2013)

Yang, C., Meza, J.C., Lee, B., Wang, L.W.: KSSOLVoa MATLAB toolbox for solving the Kohn-Sham equations. ACM Trans. Math. Softw. (TOMS) 36(2), 10 (2009)

Zhang, H., Hager, W.W.: A nonmonotone line search technique and its application to unconstrained optimization. SIAM J. Optim. 14(4), 1043–1056 (2004)

Zhang, Z., Du, K.: Successive projection method for solving the unbalanced procrustes problem. Sci. China Ser. A 49(7), 971–986 (2006)

Acknowledgments

This work was supported in part by CONACYT (Mexico), Grant 258033.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Dalmau-Cedeño, O., Oviedo, H. (2017). A Projection Method for Optimization Problems on the Stiefel Manifold. In: Carrasco-Ochoa, J., Martínez-Trinidad, J., Olvera-López, J. (eds) Pattern Recognition. MCPR 2017. Lecture Notes in Computer Science(), vol 10267. Springer, Cham. https://doi.org/10.1007/978-3-319-59226-8_9

Download citation

DOI: https://doi.org/10.1007/978-3-319-59226-8_9

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-59225-1

Online ISBN: 978-3-319-59226-8

eBook Packages: Computer ScienceComputer Science (R0)