Abstract

Hyperspectral (HSI) retinal imaging is an emerging modality for the diagnosis of diseases such as diabetic retinopathy. HSI represents the retina as a 3D cube, with two spatial dimensions and one spectral dimension, so that spectral signatures associated with a disease may be identified. The quality of this hypercube influences the accuracy of automatic diagnosis. Three main artifacts may limit the hypercube’s quality: parasitic contribution (e.g. blinking or ghost), uneven illumination and blurriness. We present a method for artifact detection and quality assessment using SURF features and intensity-based analysis. Quality evaluation has a rich literature for classic fundus images. However, none of these works has tackled the challenges related to HSI. Hypercubes from volunteers recruited at an eye clinic, in reflectance (48) and fluorescence (32) imaging modes, were captured using a Metabolic Hyperspectral Retinal Camera based on a tuneable light source in the visible and near infrared spectral range (450–900 nm). Compared with the ratings of two observers, our proposed method shows encouraging results in artifact detection and quality assessment.

1 Introduction

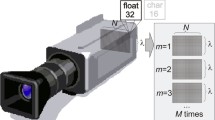

Hyperspectral imaging (HSI) is an emerging modality in medical applications with great potential in disease diagnosis and surgical guidance [1]. HSI has the advantage of depicting tissues that are invisible under white light. It captures spectral information at different wavelengths (from ultraviolet light to near infrared), resulting in a hypercube of several tens of frames. Each frame corresponds to a 2D greyscale image captured at a specific wavelength. The HSI principle is applicable to two imaging modalities that measure different optical properties: light absorption and scattering in reflectance imaging, and light emission from fluorophores in fluorescence imaging. Recent works evaluated HSI systems for the diagnosis of retinal diseases such as diabetic retinopathy [2] and suggested the potential of automatic evaluation of hypercubes for pathology detection. The accuracy of such evaluation depends on the quality of the hypercube. Several factors can influence this quality: the operator’s experience, the type of camera and the patient’s condition. In this work, we propose a new solution to detect the main artifacts that influence a hypercube’s quality: parasitic contribution (blink or ghost), defocus, uneven illumination and patient movement.

Image quality evaluation in retinal HSI is still a new topic. To the best of our knowledge, there is no existing work on the subject. On the other hand, quality evaluation of color retinal fundus images has been the topic of several studies. In [3, 4], the authors used histogram-based analysis and other statistical features within a clustering method to detect artifacts related to blur, blinking or illumination. Similar features, in addition to wavelet-based features, were used in [5] to cluster fundus images depending on their illumination and focus quality. Recently, a new method [6] applied convolutional neural networks for both feature extraction and classification of fundus images into gradable/ungradable categories. However, these works assessed color fundus images and cannot be applied to tackle the specific challenges in evaluating hypercubes.

Retinal HSI acquisitions are characterized by large variations in the global intensity and in the visible features of the images at different wavelengths. Moreover, unlike color fundus images where all frames are obtained simultaneously, our HSI system is sequential. Hence, each 2D frame has to be evaluated independently. Besides, the lack of contrast in fluorescence and in frames acquired at high wavelengths in reflectance makes them more challenging to assess. Finally, retinal HSI is a relatively new technique and no public dataset is available yet.

This work is a first iteration on the computation of image quality metrics applied to retinal HSI. We present a new technique that assesses the overall quality of the hypercube and lists all the artifacts that were detected, in a close to real time manner, to advise the operator in deciding whether a new acquisition is needed.

2 Dataset

The dataset was acquired at the eye clinic Clinique ophtalmologique 2121 (Montreal, Canada), with 80 hypercubes from 20 volunteers from the regular clinic population. Informed consent was obtained from all subjects under the National Research Council Canada (NRC) approved protocol. Each cube was acquired using a Metabolic Hyperspectral Retinal Camera (MHRC) (Optina Diagnostics, Montreal, Canada) based on a tuneable light source that acquires a hypercube of up to 91 frames sequentially within 1 s. Our database contains 48 cubes in reflectance mode within the spectral range [450, 900] nm in 5 nm steps and 32 in fluorescence within the range [450, 580] nm.

Each cube was tested and compared with the ratings of two observers who graded the cubes for the presence of artifacts: 29 cubes showed artifacts of illumination, 32 of defocus, 19 of movement and 27 of parasitic contribution. Some cubes may display more than one artifact. The observers also evaluated whether the entire cube was gradable (26) or ungradable (43). If a cube is gradable but shows some minor artifact, it is categorized as “intermediate” (11 cubes). For defocus artifact evaluation, Gaussian blur was simulated on 25 “gradable” hypercubes with a kernel size of \(15 \times 15\) and a standard deviation of 5. Moreover, four additional cubes were acquired at different focus levels using a synthetic model of the eye.

A preprocessing step is applied to all cubes. The field of view (FOV) in retinal imaging is usually depicted within a circle surrounded by a dark background. For faster and more accurate computation, the dark background is removed. To do so, the first frame of the hypercube is thresholded to get a binary mask locating the FOV. Only the square region surrounding the FOV is extracted in all the following frames of the hypercube.
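The preprocessing step can be illustrated with a minimal sketch. It assumes that frames are stored as nested lists of grey levels and that the threshold is supplied by the caller; the function names and the `threshold` parameter are hypothetical, since the text does not state how the threshold is chosen.

```python
def fov_bounding_box(first_frame, threshold):
    """Locate the FOV in the first frame by thresholding.

    Returns (top, bottom, left, right), the inclusive bounds of the
    rows and columns containing at least one pixel above `threshold`.
    """
    rows = [r for r, row in enumerate(first_frame)
            if any(value > threshold for value in row)]
    cols = [c for c in range(len(first_frame[0]))
            if any(row[c] > threshold for row in first_frame)]
    if not rows or not cols:
        raise ValueError("no pixel above threshold: FOV not found")
    return rows[0], rows[-1], cols[0], cols[-1]


def crop_cube(cube, threshold):
    """Crop every frame of the cube to the box found on the first frame."""
    top, bottom, left, right = fov_bounding_box(cube[0], threshold)
    return [[row[left:right + 1] for row in frame[top:bottom + 1]]
            for frame in cube]


# Tiny two-frame cube: the bright 2 x 2 block plays the role of the FOV.
cube = [
    [[0, 0, 0, 0, 0], [0, 5, 6, 0, 0], [0, 7, 8, 0, 0], [0, 0, 0, 0, 0]],
    [[1, 1, 1, 1, 1], [1, 2, 3, 1, 1], [1, 4, 5, 1, 1], [1, 1, 1, 1, 1]],
]
cropped = crop_cube(cube, threshold=1)
```

The mask is computed once, on the first frame only, and the same box is reused for all frames, which mirrors the description above.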

3 Method

3.1 Parasitic Signal Detection

During the acquisition of hypercubes, some frames may display parasitic contribution, due to eye blinking (Fig. 1g) or to inadequate positioning that causes the appearance of bright crescent-like regions in the image, named ghost (Fig. 1i). Such artifacts may alter the spectral signatures of regions in the hypercube. The variation in mean intensity at each wavelength, computed in hypercubes of “good” quality, can be used as a reference to detect parasitic contribution. Indeed, such an artifact usually corresponds to a frame with very bright or dark pixels in the entire image or in a large region. Therefore, a sudden increase or decrease in mean intensity variation compared to a “good” acquisition can highlight it. First, the mean intensity variation and its standard deviation are computed over 12 cubes in reflectance and 14 in fluorescence of good quality. Half of these cubes were from our dataset and the other half from a different cohort of patients. For each frame \(I_i\) of the cube, of size \(m \times n\) and acquired at wavelength i, we compute the difference of intensity \(\varDelta _i = \mid I_i - I_{i+1} \mid \) with the following frame at wavelength \(i+1\) (\(\mid . \mid \) corresponds to the absolute value operator). Then the mean value of intensity differences at wavelength i is computed:

\[ \mu _{\varDelta _i} = \frac{1}{m \times n} \sum _{x=1}^{m} \sum _{y=1}^{n} \varDelta _i(x, y) \qquad (1) \]

Knowing the \(\mu _{\varDelta _i}\) value at each wavelength, we compute \(M_i\), the mean of \(\mu _{\varDelta _i}\) over the 26 “good” hypercubes, with its corresponding standard deviation \(\sigma _i\) at each wavelength. This characterizes intensity variation in a hypercube acquired without any parasitic artifact. To detect the artifact in a new cube, we compare these values to the \(\mu _{\varDelta _i}\) of the new cube at each wavelength. A frame \(I_i\) is identified as displaying parasitic signals if the following condition is satisfied: \(\mu _{\varDelta _i} > M_i + 6 \times \sigma _i\).
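The detection rule can be sketched as follows. This is a minimal illustration, not the Matlab implementation used in this work: frames are assumed to be nested lists of intensities, the function names are hypothetical, and the use of the population standard deviation is an assumption since the text does not specify the estimator.

```python
from statistics import mean, pstdev


def mean_abs_difference(frame_a, frame_b):
    """mu_Delta_i: mean of |I_i - I_{i+1}| over all m x n pixels."""
    diffs = [abs(a - b)
             for row_a, row_b in zip(frame_a, frame_b)
             for a, b in zip(row_a, row_b)]
    return mean(diffs)


def cube_profile(cube):
    """mu_Delta_i for every pair of consecutive frames of a cube."""
    return [mean_abs_difference(cube[i], cube[i + 1])
            for i in range(len(cube) - 1)]


def reference_statistics(good_profiles):
    """M_i and sigma_i per wavelength across the profiles of 'good' cubes.

    Assumption: population standard deviation (pstdev).
    """
    per_wavelength = list(zip(*good_profiles))
    M = [mean(values) for values in per_wavelength]
    sigma = [pstdev(values) for values in per_wavelength]
    return M, sigma


def parasitic_frames(profile, M, sigma, k=6):
    """Indices i where mu_Delta_i > M_i + k * sigma_i (k = 6 in the text)."""
    return [i for i, (mu, m, s) in enumerate(zip(profile, M, sigma))
            if mu > m + k * s]


# Toy reference built from two 'good' profiles, then one new profile.
M, sigma = reference_statistics([[1.0, 2.0, 1.0], [3.0, 2.0, 1.0]])
flagged = parasitic_frames([7.0, 2.0, 5.0], M, sigma)
```

In the toy example only the last index is flagged: the first value stays below its threshold because the reference spread at that wavelength is large.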

3.2 Movement Detection

Due to the sequential nature of the acquisitions obtained with the MHRC, eye movements may occur between frames of a hypercube. We aim to capture large motions that can limit the quality of the acquisition. First, all frames of the hypercube are preprocessed by an adaptive histogram equalization and a low pass filter to enhance the contrast. Then our algorithm evaluates the distances between SURF [7] (Speeded Up Robust Features) keypoints to detect large movements from one frame \(I_{i-1}\) to the following \(I_{i}\). In retinal HSI, these keypoints are mostly located around the optic disc. A movement is flagged when the mean Euclidean distance \(D_{i-1,i}\) between corresponding keypoints is higher than an empirically selected threshold (\(=100\)). However, other situations in the acquisition process may lead to an increase in mean distances, for example when the illumination conditions are not optimal. In these acquisitions, the number of keypoints typically stays constant between frames, whereas it decreases significantly during large movements. This is because movement can alter the appearance of certain key features in the image. Therefore, two conditions are required in order to detect movement at frame \(I_i\): \(D_{i-1,i}\) must be higher than the empirically selected threshold, and the number of point correspondences must decrease by at least \(50\%\) compared to the previous frame.
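The two-condition rule can be sketched as below. The sketch deliberately leaves out SURF extraction and matching: it assumes the correspondences have already been computed and are given as pairs of point coordinates. The function names and the handling of frames without any correspondence are assumptions made for illustration.

```python
from math import dist


def mean_keypoint_distance(matches):
    """Mean Euclidean distance D between matched keypoints.

    `matches` is a list of ((x_prev, y_prev), (x_curr, y_curr)) pairs.
    """
    return sum(dist(p, q) for p, q in matches) / len(matches)


def movement_detected(prev_matches, curr_matches,
                      distance_threshold=100.0, min_drop=0.5):
    """Flag movement at frame I_i.

    prev_matches: correspondences found for the previous pair of frames.
    curr_matches: correspondences found between I_{i-1} and I_i.
    Both conditions from the text must hold: a large mean distance and
    a drop of at least `min_drop` in the number of correspondences.
    """
    if not prev_matches or not curr_matches:
        # Assumption: without correspondences the rule cannot be evaluated.
        return False
    large_distance = mean_keypoint_distance(curr_matches) > distance_threshold
    fewer_points = len(curr_matches) <= (1.0 - min_drop) * len(prev_matches)
    return large_distance and fewer_points


prev = [((i, i), (i + 1, i)) for i in range(10)]
shifted_few = [((i, i), (i + 120, i)) for i in range(4)]
shifted_many = [((i, i), (i + 120, i)) for i in range(10)]
small_few = [((i, i), (i + 3, i)) for i in range(4)]
```

Requiring both conditions is what separates a true movement (`shifted_few`) from an illumination change that increases distances without losing correspondences (`shifted_many`).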

3.3 Defocus Detection

Defocused acquisitions occur when the position of the camera is not adequate, leading to blurry images. We use a statistics-based measure, similar to [3], to evaluate focus on each frame of the hypercube. First, the image is blurred using an average filter with a \([6\times 6]\) window, and the mean gradient of the resulting blurred image is computed. The same step is applied again with an average filter window of \([10\times 10]\) to obtain a second mean gradient value. The final focus measure is computed from these two values. An unfocused image is robust to the average filter, so the difference in image gradients, and hence the focus measure, will be low. On the other hand, a focused image is more sensitive to the average filtering and its focus measure will be higher. We used an empirically selected threshold value (\(=5 \times 10^{-3}\)) to determine whether the computed focus measure corresponds to an unfocused image.

The algorithm is applied at each frame in the low wavelengths of the cube, from 450 to 600 nm, since only at these wavelengths is there enough contrast to use the focus measure. If half the frames are defocused, then it is assumed that the entire hypercube is defocused. This threshold is used to discriminate a systematic defocus from occasional blurred images due to eye movements.
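The cube-level decision can be sketched as follows, assuming the per-frame focus measure has already been computed and is available as a mapping from wavelength (in nm) to value. The function name, the data layout and the strict comparison against the threshold are assumptions made for illustration.

```python
def cube_is_defocused(focus_by_wavelength, threshold=5e-3, band=(450, 600)):
    """Decide whether a whole hypercube is defocused.

    focus_by_wavelength: dict {wavelength_nm: focus measure of that frame}.
    Only frames inside `band` are considered. A frame counts as unfocused
    when its focus measure is below `threshold` (assumed strict), and the
    cube is defocused when at least half of the considered frames are.
    """
    values = [value for wavelength, value in focus_by_wavelength.items()
              if band[0] <= wavelength <= band[1]]
    if not values:
        raise ValueError("no frame inside the evaluated wavelength band")
    unfocused = sum(1 for value in values if value < threshold)
    return 2 * unfocused >= len(values)


# The 700 nm frame is ignored because it lies outside 450-600 nm.
mostly_blurry = {450: 0.001, 455: 0.002, 460: 0.010, 465: 0.020, 700: 0.0001}
mostly_sharp = {450: 0.001, 455: 0.010, 460: 0.010, 700: 0.0}
```

Counting frames instead of averaging the focus values keeps a few isolated blurry frames, for instance caused by eye movements, from marking the whole cube as defocused.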

3.4 Illumination Detection

Inadequate acquisition conditions, such as insufficient pupil dilation, can lead to uneven illumination. This results in the presence of a dark region within the FOV that overshadows critical information in the retina such as the optic nerve head [5]. Our algorithm evaluates whether there is a dark region within the borders of the FOV, as illustrated in Fig. 1d. First, Gaussian filtering is applied to limit the effect of other dark regions such as blood vessels. Then, since most illumination artifacts occur around the border of the FOV, a region of a specific width surrounding the borders is computed depending on the size of the image. A 5-bin histogram of the image within the computed region is evaluated. If the largest bin in the histogram is the darkest one and if it forms a large connected region covering between \(20\%\) and \(70\%\) of the border region, then there is a shadowing region in the image. Images within the hypercubes generally have a thin dark circle around the FOV, which is not to be considered as an illumination artifact. Our algorithm therefore considers uneven illumination only in cases where the dark region covers \(70\%\) or less of the image’s circumference.
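The histogram test can be sketched in a simplified form. This sketch covers only the 5-bin histogram and the \(20\%\) to \(70\%\) coverage check on a flat list of border-region pixels; the Gaussian filtering, the construction of the border region and the connected-region analysis are omitted, and the function name and equal-width binning are assumptions.

```python
def darkest_bin_dominates(border_pixels, n_bins=5, low=0.20, high=0.70):
    """Simplified shadow test on the pixels of the border region.

    Builds an equal-width histogram with `n_bins` bins and returns True
    when the darkest bin is the largest one and holds between `low` and
    `high` of the border pixels. Connectivity is NOT checked here.
    """
    darkest, brightest = min(border_pixels), max(border_pixels)
    if brightest == darkest:
        return False  # flat region: no contrast, nothing to flag
    width = (brightest - darkest) / n_bins
    counts = [0] * n_bins
    for value in border_pixels:
        index = min(int((value - darkest) / width), n_bins - 1)
        counts[index] += 1
    fraction = counts[0] / len(border_pixels)
    return counts[0] == max(counts) and low <= fraction <= high


shadowed = [0] * 50 + [50] * 20 + [100] * 30   # half of the border is dark
dark_ring = [0] * 90 + [100] * 10              # dark almost everywhere
bright = [0] * 10 + [100] * 90                 # dark bin is not the largest
```

The upper bound plays the role described in the text: a dark band covering almost the whole border, such as the thin dark circle around the FOV, is not reported as uneven illumination.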

3.5 Quality Index Computation

An overall quality index is computed using the results of the aforementioned four artifact detection algorithms. Each artifact has an associated weight that was empirically selected to optimize the specificity in recognizing ungradable cubes. The values of focus, parasite, movement and illumination in Eq. (2) are binary values indicating whether the corresponding artifact was identified (1) or not (0). The weights in Eq. (2) were selected to mirror their relative potential detrimental effect on subsequent interpretation of the images. Parasitic contribution and illumination have similar weights since a hypercube will not be gradable if some information is missing. Movement artifact has less weight since it can be corrected with registration. Defocus has a similar lower weight because of the sensitivity of its threshold. The quality index is measured as:

Once q is computed, one of three levels is associated with the hypercube: “gradable” if \(q\in [0.9, 1]\), “ungradable” if \(q\in [0, 0.6]\) and “intermediate” if \(q\in (0.6, 0.9)\). The latter category is added to notify the operator of the limited quality and let him/her decide whether the cube is gradable or not.
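The mapping from detected artifacts to a quality level can be sketched as below. The weights and the form of the index used here (one minus the sum of the weights of the detected artifacts, clamped at zero) are hypothetical placeholders chosen only to respect the ordering described above; they are not the values or the expression used in this work. Only the three intervals are taken from the text.

```python
# HYPOTHETICAL weights, for illustration only: parasite and illumination
# weigh more than movement and focus, as described in the text.
HYPOTHETICAL_WEIGHTS = {
    "parasite": 0.4,
    "illumination": 0.4,
    "movement": 0.2,
    "focus": 0.2,
}


def quality_index(artifacts, weights=HYPOTHETICAL_WEIGHTS):
    """Assumed form: q = 1 - sum of weights of detected artifacts, >= 0.

    `artifacts` maps each artifact name to True (detected) or False.
    """
    penalty = sum(weights[name] for name, found in artifacts.items() if found)
    # Rounding avoids floating-point noise at the interval boundaries.
    return round(max(0.0, 1.0 - penalty), 6)


def quality_level(q):
    """Map q to the three levels defined in the text."""
    if q >= 0.9:
        return "gradable"
    if q <= 0.6:
        return "ungradable"
    return "intermediate"


clean = dict(parasite=False, illumination=False, movement=False, focus=False)
moved = dict(clean, movement=True)
ghosted = dict(clean, parasite=True)
```

With these placeholder weights a single movement artifact yields an intermediate cube, while a single parasitic artifact is enough to make it ungradable.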

4 Results and Discussion

The proposed algorithms are implemented in non-optimized Matlab code on an Intel Core CPU at 2.70 GHz. Most of the parameter values described in Sect. 3 were fixed to qualitatively agree with our visual observations. The limited size of our dataset prevented us from carrying out a more extensive study to optimize the parameters. Table 1 shows the quantitative results for each algorithm with the mean computation times per algorithm. The illumination detection algorithm was the slowest since it has to go through several validation steps. The other three algorithms are much faster, with a maximum time of 22 s/hypercube. Figure 1 shows examples of true positive (TP), false positive (FP) and false negative (FN) results for every algorithm. Indeed, one strength of the proposed algorithms is their ability not only to identify an artifact but also to specify the frame at which it was detected.

With an intensity prior defined using only 26 hypercubes, the algorithm accurately detects parasitic artifacts (as in Fig. 1g). The quantitative results illustrate this accuracy. The value of intensity variation in a cube with a parasitic contribution at one frame can be much larger than the “good” population’s intensity variations. However, the algorithm can miss cases where ghost regions are not bright enough, as in Fig. 1i. The algorithm made a false detection (Fig. 1h) only in the case of two patients with macular degeneration. This could be improved by a larger database displaying various retinal pathologies.

The high performance of the movement detection algorithm shows that the distance between SURF keypoints is an accurate indicator (Fig. 1a). Indeed, SURF features are well known in the literature for solving motion compensation problems. The method can be limited when the contrast in the hypercube is low, especially in fluorescence, as in Fig. 1b and c. In such cases, correspondences between keypoints are not retrieved as the contrast decreases from high to low wavelengths in fluorescence. This can be adjusted by enhancing the preprocessing step.

Illumination detection results are encouraging, with a tradeoff between specificity and sensitivity. The algorithm detects only large and dark regions around the image’s border. However, the algorithm can be limited when the contrast with the region opposite to the optic disc is too high, in which case the darkest region can be mistaken for uneven illumination, as illustrated in Fig. 1e. These FP are detected at a single frame of the entire cube. To overcome this limitation, we may take into account the number of frames in which the artifact was detected. Results show one FN cube (Fig. 1f) where the dark region is relatively small. Our algorithm did not detect it since it focuses only on large regions.

Defocus is the most challenging artifact to detect since the contrast value can be dramatically different from one patient to another depending on the presence of a refractive error of the eye exceeding the correction range of the imaging system, pathologies affecting the transmission of the anterior segment of the eye or the nature of the lens (natural or intraocular). The algorithm identifies cases where the defocus is evident to a trained observer (Fig. 1j). On the other hand, the algorithm still recognizes blurry frames in FN cases such as the one in Fig. 1l. However, the number of detected blurry frames was not high enough to evaluate the entire cube as defocused. Figure 1k shows a frame falsely detected as defocused due to the limited contrast in the image.

To further evaluate the performance of the focus measure, we used a dataset of simulated blur and acquisitions using a synthetic model. Using simulated data, our algorithm accurately identifies the 25 hypercubes as defocused with the same threshold value used for the original dataset. This shows that the focus measure is an accurate indicator for defocus and that the selected threshold helps detect cubes when the defocus is clearly visible to the naked eye, as illustrated in Fig. 1m. In synthetic eye acquisitions, the focus measure values were different for the focused hypercubes shown in Fig. 1n and for the defocused ones shown in Fig. 1o. This illustrates that our defocus measure is indeed affected by the level of focus in the camera and can be an accurate indicator of the artifact.

Results in Quality Index Computation

Table 2 compares the performance of our computed quality index (Sect. 3.5) with the manual grading by two operators. The sum of each column corresponds to the cubes manually classified in each category, e.g. the first column shows that 26 cubes are manually labeled gradable and each row of that column shows that our method classified them as gradable (12), intermediate (10) and ungradable (4). If a gradable or ungradable cube was classified as intermediate, we do not consider it a false positive. Indeed, the intent of our tool is to assist the operator in deciding whether the cube can be graded and to specify the nature of the detected artifacts. In that context, results show that \(60\%\) of the cubes were labeled in the exact category, only \(16\%\) of gradable cubes were misclassified as ungradable and, most importantly, only \(7\%\) of ungradable cubes were misclassified as gradable. Misclassified cubes were mostly due to the defocus detector. Indeed, the defocus measure is highly dependent on different parameters related to the presence of pathologies or the nature of the lens. More work is needed on that aspect. Nonetheless, the results are encouraging for the identification of cubes’ quality, especially for detecting ungradable ones.

5 Conclusion

This paper presents a first iteration for automatic quality assessment of retinal HSI. We defined different algorithms, using SURF and intensity features, to identify artifacts and to compute the overall quality of the hypercube. The proposed method shows encouraging results in identifying each artifact and in assessing the quality of the hypercube. Good acquisition quality is key for better automatic evaluation of pathologies in the retina. Future work will include tests on a larger dataset to represent patients with different pathologies and the application of a clustering approach for a better quality categorization. Finally, more work is needed to reduce processing times.

References

1. Lu, G., Fei, B.: Medical hyperspectral imaging: a review. J. Biomed. Opt. 19(1), 010901 (2014)

2. Desjardins, M., Sylvestre, J.-P., Jafari, R., Kulasekara, S., Rose, K., Trussart, R., Arbour, J.D., Hudson, C., Lesage, F.: Preliminary investigation of multispectral retinal tissue oximetry mapping using a hyperspectral retinal camera. Exp. Eye Res. 146, 330–340 (2016)

3. Dias, J.M.P., Oliveira, C.M., da Silva Cruz, L.A.: Retinal image quality assessment using generic image quality indicators. Inf. Fusion 19, 73–90 (2014)

4. Davis, H., Russell, S., Barriga, E., Abramoff, M., Soliz, P.: Vision-based, real-time retinal image quality assessment. In: CBMS 2009. IEEE (2009)

5. Veiga, D., Pereira, C., Ferreira, M., Gonçalves, L., Monteiro, J.: Quality evaluation of digital fundus images through combined measures. J. Med. Imaging 1(1), 014001 (2014)

6. Tennakoon, R., Mahapatra, D., Roy, P., Sedai, S., Garnavi, R.: Image quality classification for DR screening using convolutional neural networks. In: OMIA Held in Conjunction with MICCAI, Greece (2016)

7. Bay, H., Ess, A., Tuytelaars, T., Van Gool, L.: Speeded-up robust features (SURF). Comput. Vis. Image Underst. 110(3), 346–359 (2008)

© 2017 Springer International Publishing AG

Cite this paper

M’hiri, F., Chevrefils, C., Sylvestre, JP. (2017). Quality Assessment of Retinal Hyperspectral Images Using SURF and Intensity Features. In: Descoteaux, M., Maier-Hein, L., Franz, A., Jannin, P., Collins, D., Duchesne, S. (eds) Medical Image Computing and Computer-Assisted Intervention − MICCAI 2017. MICCAI 2017. Lecture Notes in Computer Science(), vol 10434. Springer, Cham. https://doi.org/10.1007/978-3-319-66185-8_14

DOI: https://doi.org/10.1007/978-3-319-66185-8_14

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-66184-1

Online ISBN: 978-3-319-66185-8

eBook Packages: Computer Science (R0)