Abstract

Non-rigid 3D objects are difficult to distinguish due to the structural transformation and noises. In this paper we develop a novel method to learn a discriminative shape descriptor for non-rigid 3D object retrieval. Compact low-level shape descriptors are designed from spectral descriptor, and the non-linear mapping of low level shape descriptors is carried out by a Siamese network. The Siamese network is trained to maximize the inter-class margin and minimize the intra-class distance. With an appropriate network hierarchy, we extract the last layer of the successfully trained network as the high-level shape descriptor. Furthermore, we successfully combine two low-level shape descriptors, based on the Heat Kernel Signature and the Wave Kernel Signature, and test the method on the benchmark dataset SHREC’14 Non-Rigid 3D Human Models. Experimental results show our method outperforms most of the existing algorithms for 3D shape retrieval.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

With the 3D objects widely used in a variety of fields, such as 3D games, movies entertainment, multimedia and so on, efficient and concise 3D shape analysis is be-coming more and more important. Retrieving non-rigid 3D objects has always been a hot and difficult topic. A large number of 3D retrieval methods have been proposed [1]. In [1] the authors present a review of the existing methods. Most of the methods represent a 3D shape as a shape descriptor. Shape descriptors are crucial for the 3D object representation. They not only need to describe the intrinsic attributes of shapes, but also need a good distinction for different models.

In the past decade, a large number of shape descriptors have been proposed, such as D2 shape distribution [2], statistical moments [3, 4], Fourier descriptor [5], Eigen-value Descriptor [6], etc. Those shape descriptors can effectively express 3D shapes, however they are relatively sensitive to non-rigid deformation and topological changes, and they are not suitable for non-rigid 3D shape retrieval.

Intrinsic descriptors with isometric invariance are proposed for non-rigid 3D models, the Laplace-Beltrami operator-based methods are the most common, includes the Global Point Signature (GPS) [7], the Heat Kernel Signature (HKS) [8], the Wave Kernel Signature (WKS) [9], the scale-invariant Heat Kernel Signature (SIHKS) [10], etc.

In the aforementioned methods, the shape descriptors are hand-crafted, rather than self-learning by data-driven. They are often not robust enough to deal with the deformation of the 3D models and geometric noises. In [11], the authors applied standard Bag-of-feature (BoF) to construct global shape descriptor. A set of HKSs of shapes are clustered using k-means to form the dictionary of words. Then they combine spatially-close words into histogram as shape descriptors. In [12], it combines the standard and spatial BoF descriptors for shape retrieval. Unlike the conventional BoF methods, the authors of [13] propose a supervised BoF approach with mean pooling to form discriminative shape descriptor. The authors of [14] adopt three levels for extracting high-level feature. They combine the SIHKS, the shape diameter function (SDF) and the averaged geodesic distance (AGD) three representative intrinsic features, then a position-independent BoF is extracted in mid-level. Finally a stack of restricted Boltzmann machines are used for high-level feature learning. [15,16,17] on the basis of histogram to optimize HKS by auto-encoder. In [14] they extract low level feature first, then neural networks are used to maximize the inter-class margin, minimize intra-class distance. [18] combines with SIHKS and WKS, the shape descriptor is obtained by the weight pooling, and linearly mapped into subspace using large margin nearest neighbor.

However, they almost have some common problems. Most high-level features are based on linear mapping. Although some methods extract high-level feature by deep learning, they have too few training samples. And the train set and test set always contain the same categories in their experiments.

2 Overview of Our Method

In this paper, we propose a novel non-rigid 3D object retrieval method. Our method improves both retrieval performance and time consumption. Inspired by mean pooling proposed in [13] and weighted pooling proposed in [18], we propose a simple and efficient method to simplify the multi-scale descriptor into a compact descriptor. We named it sum pooling descriptor. We use sum pooling instead of the weighted pooling, because the difference between the two is very small, with the increase in the number of points. This difference can be ignored when using the network optimization. So we use the simplest way to resolve this problem. This operation can be considered as the sum of the energy at each scale of each vertex on the meshes. it can describe the transformation of total energy. And we test this approach on HKS and WKS respectively.

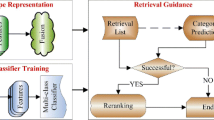

Then we train a discriminative deformation-invariant shape descriptor via a two-stream network. It is called Siamese network in the field of computer vision and image processing. Figure 1 shows the pipeline of our proposed method, where the red arrows indicate the process of network training, and the blue arrows indicate test process. It included three steps:

The detailed framework of our method. We preprocess the 3D shapes from dataset and extract the point descriptors for each shape. The sum pooling step transforms the point descriptor into the compact descriptors, which can be represented by a vector. Finally, the high level descriptor is trained by the Siamese network. (Color figure online)

-

Multi-scale feature extraction. We extract two kinds of multi-scale features respectively, HKS and WKS, from 3D models to describe each non-rigid shape.

-

Low level feature extraction. We employ the sum pooling operation on multi-scale features and get a succinct and compact representation of 3D shape. Each model can be represented as a vector.

-

High level feature extraction. The low level features are mapped into subspace by a two-stream network which we design for 3D shape descriptor.

The reasons for choosing the two-stream network architecture for high-level feature extraction are as follows:

-

The number of 3D models in one dataset is usually only a few tens or hundreds. The two-stream network architecture employ multiple inputs to increase the number of input samples of the network, it’s a large extent and improve the ability of the network to fit.

-

The low-level descriptors could be mapped into a better projection subspace in the Euclidean distance by the nonlinear mapping of the neural network.

-

By comparing the differences between the different categories of samples in the loss function, the inter-class margin could be maximized and intra-class distance could be minimized.

3 Method

In this section, we describe the details of our method. In the first part, we describe the extraction of multi-scale features, includes HKS and WKS. The sum pooling operation and the design of the Siamese network are introduced in the second and third part.

3.1 Multi-scale Features Extraction

Heat Kernel Signature.

The Heat Kernel Signature (HKS) [8] has been widely used in 3D shape analysis due to its capability of capturing significant intrinsic geometric properties, such as isometric invariance and robustness of geometric transformation. In HKS, a 3D model is represented as a Riemannian manifold \( {\text{M}} \). The heat diffusion process on \( {\text{M}} \) is governed by the heat equation

\( \Delta M \) is the Laplace-Beltrami operator (LBO) on the Riemannian manifold \( {\text{M}} \). And \( k_{t} (x,y):\Re^{ + } \times {\rm M} \times {\rm M} \to \Re \) is a function on \( M \), which is heat kernel function used to describe the heat values between point x and point y on a given time t. The eigen-decomposition of the heat kernel is

where \( \lambda_{i} \) and \( \phi_{i} \) are the i-th eigenvalue and the i-th eigenfunction of the LBO respectively. The equation \( k_{t} \left( {x,x} \right) \) can represent the heat values at point x from time \( t_{0} \) to time t, where the unit heat sources \( u_{0} \) aggregates on the 3D model surface \( S \). Thus the HKS can be represented as

Note that we no longer choose all the eigenfunctions, just intercept the top k eigenfunctions of LBO. And the different diffusion time of HKS concatenated to obtain a descriptor of point x

where the HKS(x) describes the local descriptor of point x on one model. It is showed as a T-dimensional vector. For a 3D model with n point \( X = \{ x_{1} ,x_{2} , \ldots ,x_{n} \} \), we finally get a global descriptor of size of \( n*T \).

Wave Kernel Signature.

The Wave Kernel Signature (WKS) [9] reflects the fact that the probability distribution of different energy of quantum particles. The energy of a quantum particle on a model surface is governed by the wave function \( \psi (x,t) \) which is a solution of the Schrödinger equation

Similar to HKS, WKS also depends on the LBO eigen-decomposition. We get an energy probability distribution with expectation value E. Therefore the wave equation can be written as

The probability to measure the particle at point x is \( |\psi_{E} (x,t)|^{2} \). Considering that the time parameters do not have an intuitive explanatory of shape. The WKS define as the average probability over time is

Therefor descriptor of different scales can be described by choose different distributions \( f_{E} \). The multi-scales descriptor WKS with a set of energy distributions \( E = \{ e_{1} , \ldots ,e_{q} \} \) is shown below

3.2 Sum Pooling Descriptor

For retrieval, a concise and compact descriptor to describe a shape is necessary, and there is a need for a good distinction between different no-rigid 3D shapes. Both HKS and WKS are multi-scale descriptors. They are not suitable for retrieval. In order to form a compact descriptor, we sum energy values at all points on each scale. It describes the process of the heat values changes on the shape surface over time. For a given shape \( S \) with \( V \) points, the scale size of multi-scale descriptor is \( N \). We compute a sum pooling HKS or WKS over all point descriptors \( d(x) \) computed from the points x of \( S \). Thus, our shape descriptor is defined as

where \( x \in S \) is a point from shape \( S \). \( N \) is the number of all points. The descriptor of point x is represented by \( d(x) \).

3.3 Siamese Network Architecture

As shown in Fig. 2, in order to make the descriptor map to the optimal Euclidean distance projection subspace while maximize the inter-class margin and minimize intra-class distance, we apply the two-stream network architecture to handle this part of work. Figure 3 shows that the Siamese network achieves the two-stream architecture by sharing network weights. It is proposed to be used for signature verification [19]. Later, various improvements to Siamese networks are applied in many missions, including face verification [20, 21], ground-to-aerial image matching [22], local path descriptor learning [23, 24] and stereo matching [25]. In this work, we design a novel Siamese network to learn a concise and compact descriptor for 3D shape retrieval.

Note that Siamese network is a metric learning algorithm. Our aim is to get a compact descriptor. Considering that the hierarchical structure of the neural network and the distance of two samples are measured at the last layer in the European space. Thus we extract the last layer of the network as a descriptor after the training is finished.

Network Architecture.

Figure 3 shows the architecture of our network. Here \( X_{1} \) and \( X_{2} \) are a pair of input samples of network. If they come from the same category then Y = 1, and we calls “positive pair” and otherwise Y = 0 (“negative pair”). The W is the sharing weights of network, and the function \( E_{W} \) that measures the Euclidean distance between the network outputs of \( X_{1} \) and \( X_{2} \). It is defined as

where \( G_{W} (X_{1} ) \) and \( G_{W} (X_{2} ) \) are the high-level space vector after network mapping and \( ||G_{W} (X_{1} ) - G_{W} (X_{2} )||_{2} \) is the Euclidean distance of two network’s latent representations.

Considering that the input dimension is small, we do not incorporate the regularization term into the loss function, instead apply the RELU activation function directly in the first layer of the network, not only played a role of the regularization term, but also we find it increases the speed of convergence nearly ten times.

Loss.

We apply a margin contrastive loss function consists of squared error loss function and hinge loss function as shown below

where Y is a binary label of the pair indicates whether they are the same category or not and \( \varepsilon \) is a distance margin.

4 Experiments

4.1 Dataset and Evaluation Metric

Dataset.

We evaluated our approaches on the dataset of the SHREC’14-Shape Retrieval of Non-Rigid 3D Human Model [26]. “Real” dataset contains real scans objects of 20 males and 20 females different persons altogether 40 subjects, each subject includes ten different poses a total of 400 models. “Synthetic” dataset includes 300 models with 15 groups (5 males, 5 females and 5 children). Each class has a unique shape with 20 different poses. Compared with other 3D datasets, the datasets we adopted are composed of human body models, rather than the different kinds of objects. It made these datasets particularly challenging.

Evaluation Metrics.

We chose several evaluation metrics commonly used in 3D retrieval for evaluating the performance of our proposed descriptors.

-

Nearest Neighbor (NN): The percentage of the closest matching objects and query are from the same class.

-

First Tier (FT): The percentage of shapes is from the same class of the query are in the top K.

-

Second Tier (ST): The percentage of shapes is from the same class of the query are in the top 2K.

-

E-measure (EM): The EM is obtained by the precision and recall for top 32 of retrieved results.

-

Discounted Cumulative Gain (DCG): a logarithmic statistic used to weighs correct results near the front of the ranked list and toward the end of the list.

-

The Mean Average Precision (mAP): from the PR curves the average of all precision values computed in each relevant object in the retrieved list.

4.2 Implementation Details

In this subsection, we introduce our experimental settings. The LBO is limited to the first 100 eigenfunctions. For HKS, we choose 100 time scale. For WKS, the variance of the WKS Gaussian is set to 6. The dimension of WKS was 100. We down-sample all shapes to 10K vertexes and 20K faces with Meshlab [27].

We train a Siamese network which is made up of 3 layers fully connected network with size 150-516-256-150. We over sampling the number of “positive pair” ensure the number of two input samples of network is the same. The margin of safety \( \varepsilon \) in the loss function is set to 1.0.

4.3 Experiment 1

In this experiment, we divide the dataset into the training set and the test set according to [13]. We select 4 samples of each class as the training set, and the other as the test set.

We test the sum pooling HKS (SPHKS), the sum pooling WKS (SPWKS) and the stacked sum pooling HKS and WKS (SPHWKS) three low level descriptors and their corresponding trained descriptors by network. We run each test in this paper 20 times with different training set and test set splits, and take the average as the final results.

We test the performance of those features. After combining the Siamese network, our descriptor obtained significant results (Tables 1 and 2). And in this more challenging Real dataset, our approach is getting better results. It shows our method has a better effect on a dataset with noise and topology changes. The performance of stacked descriptors was worse than HKS. However, after network mapping, the performance of the descriptors is significantly improved.

We also compare the P-R curves Fig. 4 and the mean average accuracy (mAP) shows in Table 3.

Our method compares with some state-of-the-art methods which are introduced in [30], including ISPM, DBN, HAPT, R-BIHDM, Shape Google, Unsupervised DL and supervised DL. We also compare our method with some methods that use deep learning or the recent learning methods, including CSD+LMNN [18], DLSD [28], RMVM [29]. Although our approach is not the best, but such as CSD+LMNN method, its training set and test set must have the same class which is not reasonable in the practical application.

By comparing the P-R curves and the results of the mAP, it’s clear that our algorithm decreases slowly with the increase of Recall rate, which is more stable than other approaches. And the mAP of our proposed method is superior to most existing methods.

4.4 Experiment 2

We make two more interesting tests and choose some classes as training set, the rest as test set. One of the reasons we chose a two-stream architecture network is to weaken the label, so that the network have the power to find the difference between the different classes, rather only distinguishing between known classes. It is not difficult to find that out method is more suitable for this experiment than experiment 1. In both experiments, the number of negative sample pairs has been sufficient. However, in experiment 1, the Synthetic dataset and real dataset can only get 240 and 640 positive sample pairs. In this experiment, the number of positive sample pairs is significantly more than experiment 1, for the Synthetic dataset and the Real dataset we can obtain 2800 and 2000 sample pairs.

We choose half classes (20 calsses, 200 models in “Real” dataset and 7 classes, 2800 models in “Synthetic” dataset) as training set, and the rest as test set. The experiment results in (Tables 4 and 5) show that our method is more effective in improving the dataset with noise, which just shows the advantages of the neural network.

Obviously, as shown in Tables 4 and 5, even if the test classes are not in the training set, the results of our experiments are also significant. Our method is still more effective in SHREC’14 Real dataset. The SHREC’14 Synthetic dataset has little noise. The sum pooling descriptors have enough power to distinguish shapes from different classes.

The Precision-Recall plots Fig. 5 and the mAP Table 6 show the results of different descriptor. The network-optimized descriptors are more stable. Despite the stacked descriptor with HKS and WKS did not show good performance, it get a remarkable result trained by the Siamese network.

We visualized the trained SPHWKS descriptor, from Fig. 6 we find that no trained descriptors are difficult to distinguish visually. After training, there is a significant difference between classes.

4.5 The Time Consumption

We test our method on the PC with Inter(R) Core(TM) i5-4690 CPU and 8.0 GB RAM. It takes approximate 2.7 s for each shape to compute LBO eigenvalues and eigenvectors. It takes a total of 2.3 s to finish compute the low level descriptor for one shape, where the sum pooling process only needs 0.4 s. The time consumption in the high level descriptor extraction is mainly in the training stage. It take about 15 m to finish training a network.

5 Conclusion

We proposed a novel 3D shape descriptor via Siamese network. We first extracted a compact low level descriptor. We obtained it by sum pooling the point descriptor HKS and WKS. The HKS is more sensitive to the global information, the WKS is more effective for detail information. We stacked HKS and WKS, and further optimize it through the Siamese network. The network descriptor is obtained by extracting the last layer of Siamese network. We tested our proposed method on two challenging datasets, including Real and Synthetic dataset of SHREC’14 Human dataset. The results of our experiments showed that our proposed method is very effective for containing noises and indistinguishable data. The performance of our method is more than the existing method in many aspects.

References

Lian, Z., Godil, A., Bustos, B., et al.: A comparison of methods for non-rigid 3D shape retrieval. Pattern Recogn. 46(1), 449–461 (2013)

Elad, M., Tal, A., Ar, S.: Content based retrieval of VRML objects — an iterative and interactive approach. In: Jorge, J., Correia, N., Jones, H., Kamegai, M.B. (eds.) Multimedia 2001. Eurographics, pp. 107–118. Springer, Vienna (2002). https://doi.org/10.1007/978-3-7091-6103-6_12

Vranic, D.V., Saupe, D., Richter, J.: Tools for 3D-object retrieval: Karhunen-Loeve transform and spherical harmonics. In: IEEE MMSP, pp. 293–298 (2001)

Saupe, D., Vranić, D.V.: 3D model retrieval with spherical harmonics and moments. In: Radig, B., Florczyk, S. (eds.) DAGM 2001. LNCS, vol. 2191, pp. 392–397. Springer, Heidelberg (2001). https://doi.org/10.1007/3-540-45404-7_52

Chen, D., Tian, X., Shen, Y., et al.: On visual similarity based 3D model retrieval. Comput. Graph. Forum 22(3), 223–232 (2010)

Jain, V., Zhang, H.: A spectral approach to shape-based retrieval of articulated 3D models. Comput.-Aided Des. 39(5), 398–407 (2007)

Rustamov, R.M.: Laplace-Beltrami eigenfunctions for deformation invariant shape representation. In: Eurographics Symposium on Geometry Processing, pp. 225–233 (2007)

Sun, J., Ovsjanikov, M., Guibas, L.: A concise and provably informative multi-scale signature based on heat diffusion. In: Computer Graphics Forum, vol. 28, pp. 1383–1392. Wiley Online Library (2009)

Aubry, M., Schlickewei, U., Cremers, D.: The wave kernel signature: a quantum mechanical approach to shape analysis. In: 2011 IEEE International Conference on Computer Vision Workshops (ICCV Workshops), pp. 1626–1633. IEEE (2011)

Bronstein, M.M., Kokkinos, I.: Scale-invariant heat kernel signatures for non-rigid shape recognition. In: 2010 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 1704–1711. IEEE (2010)

Bronstein, A.M., Bronstein, M.M., Guibas, L.J., et al.: Shape Google: geometric words and expressions for invariant shape retrieval. ACM Trans. Graph. 30(1), 1 (2011)

Lavoué, G.: Combination of bag-of-words descriptors for robust partial shape retrieval. Vis. Comput. 28(9), 931–942 (2012)

Litman, R., Bronstein, A.M., Bronstein, M.M., Castellani, U.: Supervised learning of bag-of-features shape descriptors using sparse coding. Comput. Graph. Forum 33(5), 127–136 (2014)

Bu, S., Liu, Z., Han, J., Wu, J., Ji, R.: Learning high-level feature by deep belief networks for 3D model retrieval and recognition. IEEE Trans. Multimedia 16(8), 2154–2167 (2013). CAESAR

Xie, J., Fang, Y., Zhu, F., et al.: Deepshape: deep learned shape descriptor for 3D shape matching and retrieval. In: Computer Vision and Pattern Recognition, pp. 1275–1283. IEEE (2015)

Fang, Y., Xie, J., Dai, G., et al.: 3D deep shape descriptor. In: Computer Vision and Pattern Recognition, pp. 2319–2328. IEEE (2015)

Zhu, Z., Wang, X., Bai, S., Yao, C., Bai, X.: Deep learning representation using autoencoder for 3D shape retrieval. In: Proceedings of 2014 International Conference on Security, Pattern Analysis, and Cybernetics (SPAC), pp. 279–284. IEEE (2014)

Chiotellis, I., Triebel, R., Windheuser, T., Cremers, D.: Non-rigid 3D shape retrieval via large margin nearest neighbor embedding. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9906, pp. 327–342. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46475-6_21

Bromley, J., Guyon, I., Lecun, Y., et al.: Signature verification using a siamese time delay neural network. In: Advances in Neural Information Processing Systems, DBLP, pp. 737–744 (1993)

Chopra, S., Hadsell, R., LeCun, Y.: Learning a similarity metric discriminatively, with application to face verification. In: CVPR (2005)

Taigman, Y., Yang, M., Ranzato, M., Wolf, L.: Deepface: closing the gap to human-level performance in face verification. In: CVPR (2014)

Lin, T.Y., Cui, Y., Belongie, S., Hays, J.: Learning deep representations for ground-to-aerial geolocalization. In: CVPR (2015)

Han, X., Leung, T., Jia, Y., Sukthankar, R., Berg, A.C.: Matchnet: unifying feature and metric learning for patch based matching. In: CVPR (2015)

Zagoruyko, S., Komodakis, N.: Learning to compare image patches via convolutional neural networks. In: CVPR (2015)

Zbontar, J., LeCun, Y.: Computing the stereo matching 藝 cost with a convolutional neural network. In: CVPR (2015)

Pickup, D., Sun, X., Rosin, P.L., et al.: Shape retrieval of non-rigid 3D human models. In: Eurographics Workshop on 3D Object Retrieval, pp. 101–110. Eurographics Association (2014)

Visual Computing Lab, ISTI-CNR: Meshlab. http://meshlab.sourceforge.net/

Dai, G., Xie, J., Zhu, F., et al.: Learning a discriminative deformation-invariant 3D shape descriptor via many-to-one encoder. Pattern Recogn. Lett. 83, 330–338 (2016)

Gasparetto, A., Torsello, A.: A statistical model of Riemannian metric variation for deformable shape analysis. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2015

Pickup, D., Sun, X., Rosin, P.L., Martin, R.R., Cheng, Z., Lian, Z., Aono, M., Ben Hamza, A., Bronstein, A., Bronstein, M., Bu, S., Castellani, U., Cheng, S., Garro, V., Giachetti, A., Godil, A., Han, J., Johan, H., Lai, L., Li, B., Li, C., Li, H., Litman, R., Liu, X., Liu, Z., Lu, Y., Tatsuma, A., Ye, J.: SHREC’14 track: shape retrieval of non-rigid 3D human models. In: Proceedings of the 7th Eurographics Workshop on 3D Object Retrieval, EG 3DOR 2014. Eurographics Association (2014)

Acknowledgements

This work is supported by National Natural Science Foundation of China under Grant 61571247, the National Natural Science Foundation of Zhejiang Province under Grant LZ16F030001, and the International Cooperation Projects of Zhejiang Province under Grant No. 2013C24027.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Shi, X., Zhao, J., Zhang, L., Ye, X. (2017). Non-rigid 3D Object Retrieval with a Learned Shape Descriptor. In: Zhao, Y., Kong, X., Taubman, D. (eds) Image and Graphics. ICIG 2017. Lecture Notes in Computer Science(), vol 10667. Springer, Cham. https://doi.org/10.1007/978-3-319-71589-6_3

Download citation

DOI: https://doi.org/10.1007/978-3-319-71589-6_3

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-71588-9

Online ISBN: 978-3-319-71589-6

eBook Packages: Computer ScienceComputer Science (R0)