Abstract

In this paper, we propose an efficient and robust model fitting method, called Hierarchical Voting scheme based Fitting (HVF), to deal with multiple-structure data. HVF starts from a hierarchical voting scheme, which simultaneously analyses the consensus information of data points and the preference information of model hypotheses. Based on the proposed hierarchical voting scheme, HVF effectively removes “bad” model hypotheses and outliers to improve the efficiency and accuracy of fitting results. Then, HVF introduces a continuous relaxation based clustering algorithm to fit and segment multiple-structure data. The proposed HVF can effectively estimate model instances from the model hypotheses generated by random sampling, which usually includes a large proportion of “bad” model hypotheses. Experimental results show that the proposed HVF method has significant superiority over several state-of-the-art fitting methods on both synthetic data and real images.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Geometric model fitting addresses the basic task of estimating the number and the parameters of model instances and it has various applications in computer vision such as camera calibration, 3D reconstruction and motion segmentation. It is particularly challenging to determine the correct model instances from multiple-structure data in the presence of severe outliers and noises. Recently, many robust model fitting methods have been proposed, including AKSWH [1], T-linkage [2], MSH [3], RansaCov [4] and SDF [5].

Conventionally, a “hypothesize-and-verify” framework has been widely adopted to fit models by firstly verifying a set of model hypotheses generated from data points and then selecting the best model hypothesis satisfying some specific criteria. The representative work of such a framework is RANSAC [6], which shows favorable robustness and simplicity for single model estimation. The variants of RANSAC (e.g., [7,8,9]) deal with multiple-structure data in a sequential manner, where one model instance can be estimated in each round and then the corresponding inliers are removed for the model estimation in the next round. However, the verifying criteria of these methods generally require to give one threshold related to the inlier noise scale, whose value is non-trivial to be determined. In addition, since the intersecting parts of different model instances are probably removed during the sequential fitting procedure, the fitting performance of these methods is constrained.

Some other robust fitting methods adopts the voting scheme. Unlike the verifying procedure, the voting scheme simultaneously select all the potential model instances. For example, HT [10] and its variant RHT [11] are the representative methods. After mapping data points into the parameter space, each data point is converted into a set of votes for the corresponding model hypotheses. However, when searching for the model hypotheses with the most number of votes as the underlying model instances, the quantization may be ambiguous and high-dimensional data may lead to prohibitive computation as well.

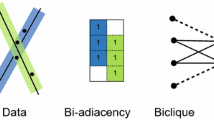

Inspired by the clustering algorithms, a “voting-and-clustering” framework is widely used in geometric model fitting recently. In terms of different voting schemes, the methods based on this framework can be divided into the consensus analysis based voting (referred to as the consensus voting) methods and the preference analysis based voting (referred to as the preference voting) methods. The methods based on the consensus voting (e.g., AKSWH and MSH) endow each model hypothesis votes coming from the corresponding inliers and then cluster the model hypotheses according to the consensus information. On the other hand, the methods based on the preference voting (e.g., KF [12], J-linkage [13] and T-linkage), the model hypotheses vote for the data points within the respective inlier noise scale, and then the data points are clustered to obtain the model instances. However, there are some limitations in these methods unilaterally based on consensus or preference analysis. For the consensus voting based methods, how to distinguish the correct model instances from the redundant and invalid model hypotheses is difficult. For the preference voting based methods, the “bad” model hypotheses may cause incorrect analysis of the data points, which leads to the fitting failure. Besides, the intersection of the model instances may be dealt with unfavorably for the preference voting based methods [4].

To benefit from the superiorities and alleviate the deficiencies of the consensus voting scheme and the preference voting scheme, we propose a Hierarchical Voting scheme based Fitting method (HVF) which effectively combines both voting schemes. Specifically, we firstly apply the consensus voting scheme as the first step of the hierarchical voting scheme to distinguish the “good” model hypotheses from the “bad” model hypotheses. Then for the second step of the hierarchical voting scheme, the model hypotheses that are prone to be the “good” model hypotheses are retained for more precise preference voting to the data points, which contributes to the gross outliers removal. Finally, the data points are clustered into the sets corresponding to the true model instances. An illustration of the hierarchical voting scheme is depicted in Fig. 1.

An illustration of the hierarchical voting scheme, where the squares represent the model hypotheses and the circles represent the data points. (a) The first step includes the consensus voting scheme: the vote \(v_c(x_i,\varvec{\hat{\theta }}_j)\) from the \(i-\)th data point \(x_i\) is given to the \(j-\)th model hypothesis \(\varvec{\hat{\theta }}_j\), which removes the “bad” model hypotheses (green squres). (b) The second step includes the preference voting scheme: the vote \(v_p(\varvec{\hat{\theta }}_j,x_i)\) from the \(j-\)th model hypothesis \(\varvec{\hat{\theta }}_j\) is given to the \(i-\)th data point \(x_i\), which removes outliers (green circles). (Color figure online)

Compared with the state-of-the-art model fitting methods, the proposed method HVF has three advantages: Firstly, HVF can simultaneously estimate the number and the parameters of multiple model instances in challenging multiple-structure data (e.g. with intersecting parts or a large number of outliers and noises). Secondly, the hierarchical voting scheme, combining the consensus voting and preference voting schemes, effectively improves the fitting accuracy and robustness by comprehensive analysis. Thirdly, HVF successfully reduces the negative influence of “bad” model hypotheses and gross outliers, which shows high computational efficiency.

The rest of the paper is organized as follows: We detail the specific two steps of the hierarchical voting scheme and present the overall HVF method in Sect. 2. We provide the extensive experimental results compared with several state-of-the-art methods on both synthetic data and real images in Sect. 3, and then draw conclusions in Sect. 4.

2 A Hierarchical Voting Scheme for Model Fitting

In this section, we describe the proposed hierarchical voting scheme at first, and then present the complete model fitting method HVF based on this voting scheme. From a set of N data points in d dimensions \(\varvec{X} = \{x_i\}_{i=1}^N \in \mathbb {R}^d\), we randomly sample M p-subsets (p is the minimum number of data points required to estimate a model hypothesis) to generate model hypotheses \(\varvec{\hat{\varTheta }} = \{\varvec{\hat{\theta }_j}\}_{j=1}^M \in \mathbb {R}^p\). Some other sampling methods [14, 15] have been proposed to produce as many all-inlier p-subsets as possible and they are easy to be employed in our method as well. Here we put emphasis on the model selection process and only use random sampling for the generation of model hypotheses for simplicity. For the i-th data point, its absolute residual set \(\varvec{r}_i = \{r_i^1, \ldots , r_i^M\}\) is measured with regard to all the model hypotheses by computing the Simpson distance, where \(r_i^j\) represents the residual of the i-th data point to the j-th model hypothesis.

2.1 The Consensus Voting of the Hierarchical Voting Scheme

The qualities of model hypotheses depend on their similarities to the true model instances. A “good” model hypothesis, similar to the corresponding true model instance, holds a larger proportion of true inliers and compacts the corresponding inliers more tightly than the other “bad” model hypotheses. The “bad” model hypotheses, however, are very likely to mislead the preference analysis. Therefore, the first step of the hierarchical voting scheme is constructed to alleviate the negative effects of the “bad” model hypotheses.

Following the idea of the conventional consensus voting schemes [1, 10, 11], each generated model hypothesis acts as a model hypothesis candidate while each data point acts as a voter. Basically, we make an inlier vote for the model hypotheses it closely relates to. In this paper, we introduce a biased measurement to assign continuous values for the consensus voting and enlarge the differences between the “good” and “bad” model hypotheses. Unlike the binary voting in RANSAC and HT/RHT, a continuous value gives detailed information about the disparities between different data points with regard to the evaluation of a model hypothesis. Furthermore, the consensus analysis scheme simply based on the number of the inliers in [6, 17] is sensitive to a threshold related to the inlier noise scale and is not discriminative enough for dichotomizing model hypotheses. Therefore, we consider the voting details from two aspects. On one hand, since the relationships between data points and model hypotheses are reflected on the distribution of data points, votes from different data points contribute to the model hypotheses in different degrees. Specifically, the closer a data point is to a model hypothesis, the greater contributions it gives to this model hypothesis in the voting. On the other hand, the model hypotheses with all the inliers in smaller deviations are more close to the underlying model instances than the others. Hence, we get these model hypotheses to accept the support from the data points in different degrees. Specifically, the model hypotheses which compact the inliers more tightly are endowed with greater acceptance of the votes.

To achieve the above idea, here we use the concept of the variable bandwidth kernel desity estimation [20, 21] to design the voting formulation. Similar to the Gaussian kernel function, we combine the density estimation of model hypotheses and the residuals of data points with respect to model hypotheses. Thus each model hypothesis is endowed with a discriminative vote value which takes the information of both model hypotheses and data points into consideration. In addition, we restrain the model hypotheses with large inlier noise scales. To this end, the consensus vote value of the j-th model hypothesis from the i-th data point is defined as:

where \(\hat{s}_j\) is the inlier noise scale of the j-th model hypotheses estimated by IKOSE [1], h is the bandwidth used in computing the variable bandwidth kernel density, and \(\alpha \) is a constant (98% of inliers of a Gaussian distribution are included when \(\alpha \) is set to 2.5).

After all the data points give their votes to the generated model hypotheses, each model hypothesis gathers the support from all the data points. Therefore, the voting score of the j-th model hypothesis from all the data points is calculated as follows:

Based on the above formulation, the “good” model hypotheses, which possesses more inliers, denser inlier distribution and smaller inlier noise scale, tend to have significantly higher voting scores than the “bad” model hypotheses. Thus, we retain the “good” model hypotheses with significantly higher voting scores as \(\varvec{\hat{\varTheta }}^*=\{\varvec{\hat{\theta }}_j^*\}_{j=1}^{m}\) (m is the number of the retained model hypotheses), and filter out the “bad” model hypotheses. Similar to [1], we adopt an adaptive model hypothesis selection method based on the information theory to decide the cut-off boundary using the voting scores.

2.2 The Preference Voting of the Hierarchical Voting Scheme

The above-mentioned consensus voting to the model hypotheses step effectively eliminates the “bad” model hypotheses. Thus the influence of “bad” model hypotheses, which often leads to the failure in the estimation of model instances, is significantly reduced. However, as for the data points, there are still a great number of gross outliers that need to be removed. As a supplementary of the first step, we also use the affinity information in the second step to handle this problem. Each data point is supported by the model hypotheses with close affinities in the form of votes. Since the retained model hypotheses generally include redundancy, which means several model hypotheses correspond to the same model instance, the true inliers receive much more votes from multiple model hypotheses (corresponding to the same model instance) than the gross outliers. In addition, in the case of the intersecting models, the inliers located in the intersection receive the votes from multiple model hypotheses (corresponding to the multiple intersecting model instances). Hence, the differences between the true inliers and the gross outliers can be easily discriminated by utilizing these observations in the preference voting. To this end, the preference vote value of the i-th data point voted by the j-th model hypothesis is defined as:

where \(\xi (x_i,\varvec{\hat{\theta }}_j^*)\) is a function that calculates the value of a valid vote from the j-th retained model hypothesis to the i-th data points based on the affinity information. Various functions can be used as the function \(\xi (x_i,\varvec{\hat{\theta }}_j^*)\), such as the Gaussian function and the exponential function. Since the consensus voting in the first step removes a great number of “bad” model hypotheses, the retained model hypotheses are relatively reliable in the preference analysis of data points and have discriminative residuals for the inliers and the outliers. For simplicity and effciency, we employ a function similar to the one used in J-linkage [2], which sets the valid vote \(\xi (x_i,\varvec{\hat{\theta }}_j^*)\) to 1. Then the voting score of a data point can be computed as the sum of all the votes from the retained model hypotheses, which can be written as follows:

The retained model hypotheses that are close to the true model instances, give plenty of votes to the inliers but only few votes to the outliers. Therefore, we can derive a set of filtered points \(\varvec{X}^*\) of which the voting scores are larger than a cut-off threshold. For the outlier-free data or the data with a small proportion of outlier, it is unlikely to wrongly remove the inliers when the cut-off threshold is fixed to 0. For the data with severe outlier contamination, the outlier removal results are more accurate when the cut-off threshold is fixed to 5%–10% of the number of the retained model hypotheses. Here we set the threshold to 0 to filter out the outliers without any support from the retained model hypotheses.

Note that the preference voting step removes most gross outliers in the given data by utilizing the retained model hypotheses. Some experiments on the performance of the outlier removal are demonstrated in Sect. 3.1.

2.3 The Complete Method

With all the components of the hierarchical voting scheme mentioned above, we present the complete proposed method HVF, summarized in Algorithm 1. The proposed hierarchical voting scheme acts as the key step of HVF to sequentially evaluate model hypotheses and data points. Specifically, the first step of HVF differentiates the “bad” model hypotheses from the “good” model hypotheses by using the consensus voting. And then HVF removes the “bad” model hypotheses. Benefiting from this step, HVF not only reduces the influence of the “bad” model hypotheses in terms of the preference analysis, but also removes the unnecessary computation on the evaluation of data points using “bad” model hypotheses. The second step of HVF utilizes the preference information to eliminate outliers, which narrows the range of clustering data points in the next step and reduces the influence of outliers. After the hierachichal voting procedure, a bottom-up clustering method similar to [2] is applied to the filtered data points using the preference information. With the help of the hierarchical voting scheme, the clustering step is carried out with more accurate preference analysis and less computational complexity. Finally, the estimated model instances can be derived from the clusters obtained on the filtered data points.

3 Experiments

In this section, we evaluate the proposed model fitting method HVF on synthetic data and real images. Four state-of-the-art model fitting methods—KF [12], AKSWH [1], J-linkage [13] and T-linkage [2] (HVF takes some inspiration from these methods and has some similarities to them in terms of voting schemes), are used for performance comparison. For fairness, we implement each experiment 50 times with the best tuned settings. We generate 5000 model hypotheses for line fitting, 10000 for homography based segmentation and two-view based motion segmentation. Intuitively, we show some fitting results obtained by HVF and list the average performance statistics obtained by the five competing methods. We conduct all the experiments in MATLAB on the Windows system with Inter Core i7-4790 CPU 3.6 GHz and 16 GB RAM. The fitting error is computed as [2, 18], which equals to the proportion of the misclassified points in all the data points. The outlier removal error is calculated as the proportion of the incorrectly dichotomized inliers and outliers in all the data points.

3.1 Line Fitting and Outlier Removal

For line fitting, the five model fitting methods are tested on several synthetic data and some datasets from [12, 19]. The gross outlier proportions of these data range from 0 to 80%. To investigate the effectiveness of the hierarchical voting scheme, we test the performance of outlier removal on the data of line fitting. As shown in Fig. 2, HVF is compared with the other three methods (AKSWH is not evaluated since it focuses on the model hypotheses selecting and clustering without explicit outlier removal) in the line fitting task in terms of the outlier removal results. These data respectively consist of 2, 4 and 6 lines. It can be seen that HVF achieves the lowest outlier removal errors among the four methods or equivalent low outlier removal errors compared with J-linkage and T-linkage under most conditions. This is due to that the number and the quality of the model hypotheses and the data points are progressively refined by using the hierarchical voting scheme. We show some outlier removal results and fitting results obtained by HVF for line fitting in Fig. 3. The gross outlier proportions of these three data are 80%. We can see that HVF successfully removes most of the outliers although the outlier proportions are high. In addition, HVF can favorably deal with the multiple-structure data for line fitting even if there exist intersections between the model instances.

Some outlier removal results and fitting results obtained by HVF on the line fitting. The top row shows the outlier removal results where the yellow dots are outliers. The bottom row shows the line fitting results where the blue dots are outliers. Each of the other colors represents a model instance. (Color figure online)

Some results obtained by HVF on 4 data for homography based segmentation. The top row and the bottom row show the ground truth (i.e., Elderhalla, Oldclassicswing, Elderhallb and Johnsona) and the fitting results obtained by HVF, respectively. The red dots represent the outliers. Each of the other colors represents a model instance. (Color figure online)

3.2 Homography Based Segmentation

In terms of homography based segmentation, we test the performance of the five model fitting methods on 10 data from the AdelaideRMF [16] dataset. The segmentation results for 4 data and the performance for the 10 data are respectively shown in Fig. 4 and Table 1. From Fig. 4 and Table 1, we can see that the proposed HVF achieves the lowest fitting errors and the least computational time on all the data among the five methods. HVF achieves better accuracy than the AKSWH and T-linkage which have already achieved quite good results in some data. The improvement of speed of HVF is quite obvious due to the proposed hierarchical voting scheme (at least equivalent to AKSWH in the “Johnsona” or at most 25 times faster than the T-linkage in the “Hartley”). T-linkage improves the accuracy of the preference analysis compared with J-linkage at the cost of prohibitive computational time. KF obtains the worst performance due to its sensitivity to the parameters.

3.3 Two-View Based Motion Segmentation

For two-view based motion segmentation, we evaluate the competing methods on 8 pairs of images in the AdelaideRMF dataset. The segmentation results for 4 data and the performance for the 8 data are respectively shown in Fig. 5 and Table 2. As we can see, the proposed HVF achieves the fastest computational speed in all the 8 data among the five competing methods, the lowest fitting errors in 6 out of the 8 data, and the second lowest fitting errors in the “Biscuitbook” and “Cubechips” data (still close to the lowest fitting errors obtained by T-linkage, which clusters all the data points with slightly higher clustering precision, but consumes much more computational cost). KF and AKSWH achieve unsatisfactory performance on some data. KF is prone to be disturbed by “bad” model hypotheses because it is difficult to generate sufficient clean subsets in the task of two-view based motion segmentation. AKSWH may wrongly remove “good” model hypotheses, which causes errors in the final estimation. J-linkage and T-linkage are based on the preference analysis, and thus the “bad” model hypotheses may affect the accuracy of the preference description and the final estimation. The improvement on speed obtained by the proposed HVF is remarkable compared with the other four methods, for the reason that the removal of “bad” model hypotheses and outliers accelerates the clustering. Specifically, the speed of HVF is at least equivalent to KF in the “Breadtoycar” or at most 15 times faster than T-linkage in the “Cubebreadtoychips”.

Some results obtained by HVF on 4 data for two-view based motion segmentation. The top row and the bottom row show the ground truth (i.e., Biscuitbook, Cubechips, Breadtoycar and Cubebreadtoychips) and the fitting results obtained by HVF, respectively. The red dots represent the outliers. Each of the other colors represents a model instance. (Color figure online)

4 Conclusion

We propose a multiple-structure fitting method (HVF) based on a hierarchical voting scheme. The hierarchical voting scheme comprehensively utilizes both the consensus information of data points and the preference information of model hypotheses, to remove a large proportion of “bad” model hypotheses and gross outliers. Based on the hierarchical voting scheme, HVF is able to successfully estimate the number and the parameters of model instances simultaneously for multiple-structure data contaminated with a large number of outliers and noises. Especially, HVF can effectively select the model instances from the model hypotheses of uneven quality generated by random sampling with noticeable acceleration. This is because that the effective removal of “bad” model hypotheses and outliers. The experimental results have shown that HVF has significant superiority in robustness, accuracy and efficiency compared with several state-of-the-art fitting methods.

References

Wang, H., Chin, T.J., Suter, D.: Simultaneously fitting and segmenting multiple-structure data with outliers. IEEE Trans. Pattern Anal. Mach. Intell. 34(6), 1177–1192 (2012)

Magri, L., Fusiello, A.: T-linkage: a continuous relaxation of j-linkage for multi-model fitting. In: IEEE Conference on Computer Vision and Pattern Recognition, pp. 3954–3961 (2014)

Wang, H., Xiao, G., Yan, Y., Suter, D.: Mode-seeking on hypergraphs for robust geometric model fitting. In: IEEE International Conference on Computer Vision, pp. 2902–2910 (2015)

Magri, L., Fusiello, A.: Multiple model fitting as a set coverage problem. In: IEEE Conference on Computer Vision and Pattern Recognition, pp. 3318–3326 (2016)

Xiao, G., Wang, H., Yan, Y., Suter, D.: Superpixel-based two-view deterministic fitting for multiple-structure data. In: European Conference on Computer Vision, pp. 517–533 (2016)

Fischler, M.A., Bolles, R.C.: Random sample consensus: a paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 24(6), 381–395 (1981)

Torr, P.H.: Geometric motion segmentation and model selection. Philos. Trans. R. Soc. London A: Math. Phys. Eng. Sci. 356(1740), 1321–1340 (1998)

Vincent, E., Laganire, R.: Detecting planar homographies in an image pair. In: International Symposium on Image and Signal Processing and Analysis, pp. 182–187 (2001)

Zuliani, M., Kenney, C.S., Manjunath, B.S.: The multi-RANSAC algorithm and its application to detect planar homographies. In: IEEE International Conference on Image Processing, pp. III-153 (2005)

Duda, R.O., Hart, P.E.: Use of the Hough transformation to detect lines and curves in pictures. Commun. ACM 15(1), 11–15 (1972)

Xu, L., Oja, E., Kultanen, P.: A new curve detection method: Randomized Hough Transform (RHT). Pattern Recogn. Lett. 11(5), 331–338 (1990)

Chin, T.J., Wang, H., Suter, D.: Robust fitting of multiple structures: the statistical learning approach. In: IEEE International Conference on Computer Vision, pp. 413–420 (2009)

Toldo, R., Fusiello, A.: Robust multiple structures estimation with J-linkage. In: European Conference on Computer Vision, pp. 537–547 (2008)

Tran, Q.H., Chin, T.J., Chojnacki, W., Suter, D.: Sampling minimal subsets with large spans for robust estimation. Int. J. Comput. Vis. 106(1), 93–112 (2014)

Pham, T.T., Chin, T.J., Yu, J., Suter, D.: The random cluster model for robust geometric fitting. IEEE Trans. Pattern Anal. Mach. Intell. 36(8), 1658–1671 (2014)

Wong, H.S., Chin, T.J., Yu, J., Suter, D.: Dynamic and hierarchical multi-structure geometric model fitting. In: IEEE International Conference on Computer Vision, pp. 1044–1051 (2011)

Chen, T.C., Chung, K.L.: An efficient randomized algorithm for detecting circles. Comput. Vis. Image Underst. 83(2), 172–191 (2001)

Mittal, S., Anand, S., Meer, P.: Generalized projection-based M-estimator. IEEE Trans. Pattern Anal. Mach. Intell. 34(12), 2351–2364 (2012)

Magri, L., Fusiello, A.: Robust multiple model fitting with preference analysis and low-rank approximation. In: British Machine Vision Conference, pp. 1–12 (2015)

Silverman, B.W.: Density Estimation for Statistics and Data Analysis. CRC Press, Boca Raton (1986)

Wand, M.P., Jones, M.C.: Kernel Smoothing. CRC Press, Boca Raton (1994)

Acknowledgments

This work was supported by the National Natural Science Foundation of China under Grants U1605252, 61472334, and 61571379, by the National Key Research and Development Plan (Grant No. 2016YFC0801002), and by the Natural Science Foundation of Fujian Province of China under Grant 2017J01127.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Xiao, F., Xiao, G., Wang, X., Zheng, J., Yan, Y., Wang, H. (2017). A Hierarchical Voting Scheme for Robust Geometric Model Fitting. In: Zhao, Y., Kong, X., Taubman, D. (eds) Image and Graphics. ICIG 2017. Lecture Notes in Computer Science(), vol 10666. Springer, Cham. https://doi.org/10.1007/978-3-319-71607-7_2

Download citation

DOI: https://doi.org/10.1007/978-3-319-71607-7_2

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-71606-0

Online ISBN: 978-3-319-71607-7

eBook Packages: Computer ScienceComputer Science (R0)