Abstract

The current proliferation of 3-d representations of cultural heritage objects presents a new category of challenges for the curation and search methods used to access the growing number of spatial data-sets. Spatial data will soon be a new standard in the representation of object collections as the methods and technologies are quickly improving. The LibraryMachine project investigates how spatial data can be leveraged to improve the search experience and to integrate new qualities of object representation. It is tailored to the needs of Special Collections, a type of collection with particularly heterogeneous holdings ranging from books and archival documents to tangible objects and dynamic objects, making it the ideal testbed to investigate new forms of access and dissemination for cultural heritage resources. We report on the first phase of a research endeavor consisting of three phases, iterating our research questions through various forms of representation and viewing frameworks including public touch-screen installations, mobile tablet and VR deployment.

Keywords

- Haptic interfaces

- Multi-modal interaction

- Object search

- 3d meta-data

- Virtual reality

- Image-based rendering

- Cultural heritage collections

1 Introduction

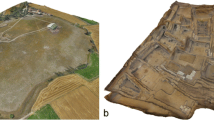

Thanks to digital reproduction techniques the landscape of cultural heritage preservation has changed significantly. With the efforts of mass-scanning of books and digital image reproduction the possibilities of documenting and disseminating cultural heritage resources have massively improved. In the past ten years the technologies of text and image capture have been extended to include 3-dimensional objects through 3-d scanning and photogrammetry. The recent development of scanning technology has made it possible for many museums to explore the scanning of their 3-dimensional artifact collections. What used to be an expensive and time-consuming process, requiring extensive custom-made equipment and lengthy calibration [17], is now done in an assembly-line style for mass-digitization of objects, as demonstrated by the Fraunhofer Institute's Cultlab3D system [7]. The Cultlab3D system has been tested by scanning sculptures from the collections of the Liebieghaus Museum in Frankfurt, Germany, and the Museum of Natural History in Berlin, Germany [11], which indicates that the presentation of objects with 3d data-sets will soon be a common way of viewing this type of resource. Technology use in cultural heritage institutions has constantly increased, as the surveys of the Institute for Museum and Library Services [31, 32] show. Museums in particular are embracing 3d-scanning to showcase the objects they cannot put on display in their normal exhibitions [30]. Several institutions such as the Metropolitan Museum of Art in New York [24] or the British Museum in London [6] even publicly provide 3d data-sets of their objects suitable for 3d-printing. Interested viewers who have access to a 3d printer can print them for their own use at home, without having to go to the museum.
Similar efforts are being made in the capture and representation of excavation sites [8, 37], monuments and in-situ artifacts such as the Million Image Database of the Institute for Digital Archaeology, which aims to preserve cultural heritage sites with a special emphasis on at-risk sites in the Middle East and North Africa [25].

The proliferation of 3d data acquisition redefines the possibilities for preserving and exhibiting objects in different frameworks beyond the classic display situation in the exhibition. It allows institutions to formulate responses to immediate threats to cultural sites, and object holdings become available to a much broader audience as cultural heritage institutions are able to show a higher percentage of their holdings even with limited exhibition space. This shift also presents a new category of challenges for the curation and search methods used to access the growing amount of 3d data. Museums usually follow the traditional exhibition approach based on the “techniques and rhetorics of display.” [3: 73] From the perspective of the viewers this means that they are in an explorative mindset and trust that the objects on display are curated in a way that enables them to learn a meaningful ‘lesson’ about the theme constituted by those objects. Similar rhetorics of display have been translated from the real-world display case to the online displays of virtual objects, a translation that works rather well for the museum context. But not all cultural heritage institutions function according to the principle of the curated show. In libraries, for example, the process of finding relevant objects out of an enormous pool of holdings is solely the responsibility of the patron. Providing search tools and cataloguing techniques that enable patrons to find the resources they are interested in is a necessity, which made the development of appropriate tools a strong focus of research in the library domain. The development of search tools for libraries dates far back to pre-computational times, but for mechanized search we can situate tools like Calvin Mooers’ Zatocode system as early instances in an ongoing line of research [26].
Similar search-oriented tools developed more recently in the museum domain as object access through databases of digital object representations became more important. As an early example we can regard the effort of the French Ministry of Culture to create and link databases of museum objects and digital representations [21]. The development approaches for search tools differ in the amount of expertise and responsibility for the effectiveness of search that is expected from the user. The library tools are more efficiency-driven and assume a certain amount of specialized knowledge [23, 33, 36], while museum search aims to implement the explorative aspect of the viewer's mindset towards the display case [13, 20, 22], acknowledging a gap in expertise between user and curator. We can predict that in the near future both lines of research will converge, facing similar challenges. The effort of museums to make the part of their holdings that is normally hidden in storage accessible through 3d representations will, given the number of objects (the Smithsonian Institution states that 99% of their holdings are not on display [1]), have to rely on search tools rather than curated exhibitions.

As both libraries and museums are increasingly using digital data representations – and in particular 3d data-sets – it is important to develop appropriate search tools that include the spatial aspect of the data in meaningful ways. In the following we present the LibraryMachine research project, which engages with several of the core questions relating to access and representation of spatial objects. Our effort is situated in the context of the special collections of the libraries of the University of Southern California. The LibraryMachine project explores the implications and possibilities of working with spatial data at the intersection between library and museum collections. Special collections generally have a wide range of different object types, ranging from books and archival documents to 3-dimensional objects, to moving, manipulable objects, which we will call dynamic objects in the following. In this sense they are the ideal object of study in the investigation of how spatial data can be leveraged to improve the search experience and results and integrate new qualities of object representation. Looking at the holdings of special collections we are examining the types of objects that are typical for this kind of collection and which forms of representation and search are most appropriate for them.

2 Heterogeneous Collections and Complex Object Types

Our choice to work with special collections was based on the large number of different object types they hold, providing us with a framework to investigate questions of object capture, representation and search that pertain to a wide range of cultural heritage institutions. The LibraryMachine works with the special collections of the University of Southern California, which contain rare books, artist books, images, audio documents, costumes, tools, stereo-slides, archaeological objects and many other objects that cannot easily be represented by one consistent form of value attribution and representation. While library holdings generally are valuable for their textual content, the ways in which special collections items are valuable to scholars vary [28]. Rather than for their content, which may be released in revised, newer editions, rare books are often important for their marginalia, special printing or binding techniques. In these cases scholars need to examine their qualities as objects: the construction of leather hinges and metalwork, the operation of clasps, the materials of treasure bindings and the texture of the pages and the writing or printing on them. Artist books may be important for their art-historic value and their sculptural or dynamic properties, or they may be viewed for how they embody discursive processes like a particular way of unfolding or of recombining their component parts in the process of viewing the item. Ephemera collections are interesting for the variety of materials, purposes, historic handling traces etc.; archaeological objects may be important for their shapes, surface structures etc.

The varied qualities of special collections items make them particularly interesting for humanities research, but they are currently underrepresented in the discourse about 3d-digitizing due to the wide range of challenges they present. Capture methods such as text and image scanning, optical character recognition and 3d-scanning are currently mostly administered separately and exclusive to defined object types. High quality image scanning is reserved for images, while books are treated with lower resolution high contrast scans for optical character recognition. 3-dimensional objects are mostly represented as polygon meshes with color information. Representing the varied qualities of objects like those found in special collections in representation and reference systems structured according to these rather rigid type classifications and representation techniques means that their value as a resource is often not represented and therefore not accessible to patrons and users. While the established techniques give adequate representations for many of the demands brought to cultural heritage objects by researchers and specialists they leave a whole range of questions that can also be asked of these objects uncovered.

In the special collection context this problem comes to the foreground for two reasons. The heterogeneous holdings that largely go across all of the different forms of representations intensify the fact that objects cannot satisfactorily be classified into groups of those objects that are represented through high-quality image scans, those that are 3d-scanned and those that are going through optical character recognition, without omitting some of their aspects that may be important for certain researchers. Just like the special collections holdings, the field of the humanities has a very heterogeneous range of methodologies and inquiries that can be effectively supported by reshaping the established forms of representation and classification. The other reason is that special collections have to solely rely on search tools in order to make their holdings discoverable and accessible. Items do not circulate due to safety and security measures and while other object collections can showcase their objects in exhibitions, special collections are normally hidden in vaults.

In preparation for the LibraryMachine project, surveys were administered post-instruction and on the occasion of special outreach events at the University of Southern California. They indicate that patrons engage quickly with the collection objects once they encounter them and are impressed by their qualities such as age, aesthetic value and rarity. But most patrons are not aware of the special collections holdings and would not seek to find them. This is due to the fact that the objects are locked up in the vault, but it also has to do with the way they are currently referenced in the general catalogue. From the text entries in the database it is very hard to evaluate whether an object has potential value for a specific research question if the patron is not already familiar with the object. For images and archival documents, the digitizing efforts have helped to increase accessibility even though search still has to rely on text entry and keywords. The one-sided accessibility of the digitized items isolates them from the rest of the collection and puts them into the separate group of digital collections.

The LibraryMachine project aims to create a comprehensive search experience across all aspects of the heterogeneous collections through a search interface that allows the user to move fluidly between different object types and forms of representation. The project tackles the problems of search on three different levels: (a) It formulates new methods of item representation that cross the boundaries between the currently established methods and add support for dynamic objects and better rendering of surface and detail characteristics. (b) It develops methods of object capture that can extract information about object properties serving as meta-data. And (c) it creates a new search interface that integrates all types and representation forms of objects and realizes an interaction pattern that caters to the spatial and tactile qualities of objects acquired with the new methods of 3d-scanning.

3 Modes of Representation, Image-Based Rendering

Based on the analysis of different object types in special collections we have determined several capture methods that deliver the specific qualities of a rather wide range of objects. In the current first stage of the project we have realized two main forms of image-based rendering representations, which we will discuss in the following. As the main component of the LibraryMachine project is to develop a search infrastructure that allows patrons of the collections to more efficiently locate the resources they need, our focus is on how to support their efforts through effective object descriptions and representations. We are not aiming to replace the objects, but rather to communicate their core qualities in the search interface to enable the patron to evaluate whether an object is worth paging and investigating in the original. Making query evaluation more efficient in this way reduces the strain on the collection items, because they do not need to be paged in order to determine their value for a certain research question. It also makes the search experience for the user more efficient and satisfactory and alleviates the burden on the collection staff, who do not have to retrieve and file objects that upon retrieval turn out not to have the expected qualities.

Despite the focus on search, our digitizing efforts are designed such that they can support other cultural heritage applications such as curation, display and remote investigation of specific resources. For curation and exhibition purposes it is often desirable to have high-quality data at hand which, within limits, gives an exhaustive account of certain aspects of cultural heritage resources to support exhibitions in digital format online or in other digitally mediated contexts. In particular if we understand the task of digital curation in the sense that has developed in the increasing interdisciplinary collaboration between the sciences and humanities, as a discipline that plays an active role in the creation of interdisciplinary thematic collections, new interpretations, theoretical frameworks, and knowledge [29: 11], we need to support not only the selection and preservation of cultural heritage resources, but also their development and valorization as well as the technical means of interpretation and representation. The development of search methods and new forms of representation becomes an interpretive and curatorial act of knowledge creation. In a similar sense, the creation of high-quality forms of object representation is of great value to support remote investigation of cultural heritage resources in cases where it is easier for researchers to remotely access and investigate an object rather than traveling to the object or making the object travel to the researcher.

To satisfy these different requirements of object representation the LibraryMachine uses an image-based rendering approach that allows us to integrate different forms of object representation tailored to various objects originating from a standard set of images taken of the objects. For the current phase of the LibraryMachine we captured objects with cinema cameras with a resolution of 4K and a dynamic range of 12 stops in uncompressed RAW format in two different set-ups. From these image sets we derived several types of representation as shown in the capture matrix in Table 1.

The established representation methods make a strong separation between high-quality 2d image capture (2d-scan), polygon mesh representations (3d-scan) and content data extraction either through manual meta-data annotation or through OCR. The input data for these three types of representation differ substantially and require very different production pipelines. The LibraryMachine uses an integrated pipeline based on 4K images, which are the basis for four different methods of representation. The 4K-based approach delivers an image quality that satisfies most requirements for 2d representations in search and general display purposes. Nevertheless, it can be assumed that the image quality of cinema cameras will soon increase (8K and 15 stops of dynamic range already exist, albeit in a comparatively high price range) to deliver better, nearly archive-quality representations. 4K resolution is also sufficient for most optical character recognition tasks.

4 Near-Field Capture

The same 4K images, produced in a sequence that surrounds an object in a full 360° circle, are used to generate a 3-dimensional representation of spatial objects. We are using a 3d capture method that has been developed by the MxR lab at the University of Southern California, employing a simplified image-based light-field rendering technique of spatial images [5]. The technology is based on the light-field rendering method developed by Levoy and Hanrahan [18] but introduces several simplifications that make the process work efficiently within our pipeline for cultural heritage object representation. The light-field rendering method replaces the polygon mesh data as the basis for the computation of visual representations of 3d objects with images computed from arrays of light-field samples captured with a digital cinema camera. Based on the samples of light-ray flow patterns, images corresponding to variable viewpoints can be calculated that show correct perspective and surface qualities of the object. The image-based approach results in an efficient computational model that is independent of the complexity of the object. By contrast, polygon-based representations of objects with complex geometry tend to produce very large datasets containing many polygons, which depend on high computation power to calculate the representation. The image-based method can deliver high-quality representations even to systems with less graphics-computation power. While normal light-field capture requires special camera set-ups, the simplified method of the MxR lab can be realized with a rather simple capture setup using one or more off-the-shelf cameras and is computationally light. The simplification uses a reduced number of light-field samples, resulting in a limitation of possible viewpoints, a drawback that is acceptable for our purpose. The method is tailored to capture objects at a close distance and deliver the full shape and surface complexity of the object.
As the samples are acquired photographically, surfaces with complex reflectance patterns or sub-surface scattering can be represented in photo-realistic quality, which generally is a challenge for geometry-based representations as they have to recompute the illumination and reflectance through calculated shading. When we observe objects up close, as we would normally do when examining a collection object or a rare book, all the minute details of surface and material qualities of the object play an important role, and our image-based rendering technique specializes in this near-field zone.

For the first phase of the project we captured several 360° circles of images around each object with one image at every degree step, which allows us to calculate all viewpoints close to the object on a horizontal plane. Users can zoom into the object and rotate it; necessary additional viewpoints are calculated by combining information from the different image samples. The captured data also allows us to calculate stereoscopic representations of the object for use in, for example, virtual reality applications, which we will explore in a later stage of the project (Fig. 1).
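
As a rough illustration of how a requested viewpoint on the horizontal ring might be mapped to the captured samples, the following minimal sketch picks the two neighbouring one-degree images and their blend weights. Linear blending of two neighbours is a deliberate simplification chosen here for illustration and is not the light-field computation of [5]; the function names and the angular eye offset used for the stereo pair are hypothetical.

```python
def blend_weights(view_angle_deg, samples_per_ring=360):
    """Map a requested viewing angle to the two nearest ring samples.

    Returns ((index_a, weight_a), (index_b, weight_b)); the weights sum to 1.
    Assumes samples are evenly spaced, e.g. one image per degree.
    """
    step_deg = 360.0 / samples_per_ring
    position = (view_angle_deg % 360.0) / step_deg
    lower = int(position) % samples_per_ring
    upper = (lower + 1) % samples_per_ring  # wraps from the last sample to 0
    frac = position - int(position)
    return (lower, 1.0 - frac), (upper, frac)


def stereo_pair(view_angle_deg, eye_offset_deg=4.0):
    """Approximate a left/right eye pair by two viewpoints on the same ring.

    The angular offset is an assumed value for illustration only.
    """
    half = eye_offset_deg / 2.0
    return ((view_angle_deg - half) % 360.0, (view_angle_deg + half) % 360.0)
```

For example, a view at 10.25° would draw mostly on sample 10 and, to a lesser extent, on sample 11, while a view at 359.5° wraps around to blend the last and the first image of the ring.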

Capturing several 360° rings for each object results in a matrix of images that we use to represent both the spatial and the dynamic qualities of an object. Each ring of images corresponds to one spatially resolved phase of a stop motion animation sequence that displays select phases of an operation or manipulation sequence of the object. Using moving image sequences allows us to go beyond the static 2d or 3d representations normally employed.
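
The spatio-temporal matrix can be pictured as a grid with one row per manipulation phase and one column per viewing angle. The sketch below shows one possible way to address such a grid; the class name, the row-major layout and the default of 360 images per ring are assumptions made for illustration and do not describe the project's actual storage format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CaptureMatrix:
    """Hypothetical index over rings of images: rows = phases, columns = angles."""
    phases: int
    angles_per_ring: int = 360

    @property
    def total_frames(self):
        return self.phases * self.angles_per_ring

    def frame_index(self, phase, angle_deg):
        """Return the flat (row-major) index of the image for a phase and angle."""
        if not 0 <= phase < self.phases:
            raise IndexError(f"phase {phase} outside 0..{self.phases - 1}")
        # Snap the angle to the nearest captured column and wrap around the ring.
        column = round(angle_deg * self.angles_per_ring / 360.0) % self.angles_per_ring
        return phase * self.angles_per_ring + column
```

Holding the angle fixed and stepping through the phases plays back the manipulation sequence from one viewpoint; holding the phase fixed and varying the angle rotates the object in one state.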

5 Dynamic Objects

The established set of capture methods for the representation of cultural heritage objects focuses on static representations, and dynamic characteristics are omitted. Dynamic objects are objects that have time-based characteristics, for example moveable parts, or objects that need to be manipulated to be perceived in their actual form and full purpose. Examples of dynamic objects are tools, enclosures, or art objects. Defining a category of dynamic objects is useful to capture objects for which the movement aspect is characteristic. The category is close to the notion of intangible cultural heritage (ICH), and extends what is considered as cultural heritage. As the UNESCO states “cultural heritage does not end at monuments and collections of objects. It also includes traditions or living expressions inherited from our ancestors and passed on to our descendants, such as oral traditions, performing arts, social practices, rituals, festive events, knowledge and practices concerning nature and the universe or the knowledge and skills to produce traditional crafts [35].” The ICH category as in this definition is very large and includes many very different forms of cultural heritage practices. Looking only at the dynamic characteristics of collection objects covers, in this sense, a very small subset, but it allows us to extend our understanding of cultural heritage resources and include aspects that are generally omitted, even though the objects are part of a well-established workflow and collecting practice. Our approach is closely connected to existing workflows and thus easily integrated and tested. The rapid development of viewing frameworks for digitized cultural heritage resources promises that in the near future mobile devices or virtual reality systems may be common platforms for the engagement with CH resources, opening up new opportunities to reframe what is preserved and which aspects of objects are represented.
We have designed the LibraryMachine project with the perspective of prototyping such platforms and developing solid workflows and practices for the representation and preservation of CH resources.

Our current approach for the inclusion of dynamic object qualities is focused on the aspects of movement and manipulation of collection objects. The spatio-temporal representation matrix of our near-field capture technique is one of the possibilities explored in this first phase of the project (Fig. 2).

Matching the aesthetic of the near-field representations we have developed another dynamic capture method, which, also in the style of stop motion animation, displays select characteristic states of an object including the manipulation processes connecting them. Since real stop motion animation would be too time-consuming and labor-intensive, we chose to film demonstrations of the objects, in which a curator shows the handling of the object. With a similar 4K cinema camera set-up these sequences are captured and assembled as a linear stream of images with several nodes of characteristic states of the object that can be examined in detail (Fig. 3).
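
One way to think about such a linear stream with nodes is as a list of frame numbers that mark the characteristic states, to which a viewer can snap while scrubbing through the sequence. The following minimal sketch assumes the nodes are stored as a sorted list of frame indices; the function name and this representation are hypothetical and only illustrate the idea.

```python
from bisect import bisect_left


def nearest_node(node_frames, frame):
    """Return the characteristic-state frame closest to the current frame.

    node_frames must be sorted in ascending order; on a tie the earlier
    node is returned.
    """
    if not node_frames:
        raise ValueError("at least one node frame is required")
    i = bisect_left(node_frames, frame)
    # Only the neighbours on either side of the insertion point can be nearest.
    candidates = node_frames[max(0, i - 1):i + 1]
    return min(candidates, key=lambda node: abs(node - frame))
```

With nodes at frames 0, 120 and 300, for instance, a viewer who stops scrubbing at frame 100 would be taken to the state at frame 120.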

The selection of objects to be treated in this way was guided by how central the dynamic aspect is to a given object. One could of course consider books in general as dynamic objects, since they have to be opened and the reading process is a progressive movement through the body of the book. Nevertheless, we decided to keep the definition narrower and limit it, for example, to the items in the artist-book collections, of which many rely on a procedural unfolding and revealing to communicate their content and artistic value. Artist books are a particular example of the category of dynamic objects, but many of the qualities at play in artist books equally apply to other objects. We worked in similar ways with archival objects, objects from a costume collection and others. The dynamic characteristics of many of these object types are important and of great value for scholars. Having the possibility to display these qualities in the digitized representation makes search and evaluation of the object easier, and it helps preservation by reducing the strain that is put on sometimes century-old paper hinges (Fig. 4).

6 Automated Meta-data Extraction

In the current cataloguing practice the creation of meta-data is a time-consuming and resource-intensive process. Every object has to be manually classified according to established meta-data standards. Improvements in computer vision algorithms make it possible to explore methods for the computational extraction of meta-data for object annotation [4]. Among the approaches explored is morphometric analysis, which, besides shape classification of objects, can possibly support, for example, art-historical classification in the sense Carlo Ginzburg described in his essay on the evidential paradigm [12].

The LibraryMachine project is investigating how data-sets acquired for spatial and temporal representation of objects can be used to infer additional qualifiers for objects. Information regarding the shape, volume and surface qualities can potentially be extracted in the course of data acquisition. Even though the first phase of the LibraryMachine project does not present a workflow solution, several options have been explored and will continue to be investigated. In collaboration with the Institute for Creative Technologies of the University of Southern California we are exploring the use of camera-based capture of objects under illumination with spherical harmonics, which allows us to acquire and calculate complex reflectance patterns of object surfaces [34]. This technique is promising in several ways because, besides opening the possibility to infer material and surface qualities of objects, it provides another form of object representation through a relighting interface (Fig. 5). Users can control the incident light of a digitized object, revealing the detail structure of the surface. We have explored this option with relief objects, which are nearly flat objects but have a complex sculpted surface (see Fig. 6).
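
The principle behind such a relighting interface can be sketched using the linearity of light transport: if one image is available per spherical harmonic lighting condition, an image under a new lighting environment is a weighted sum of those basis images, with the spherical harmonic coefficients of the new lighting as weights. The sketch below is an illustration under stated assumptions and not the method of [34]: it uses only the first four real spherical harmonics (orders 0 and 1), represents images as nested lists of grey values, treats the new light as a single direction, and all function names are hypothetical.

```python
import math


def sh_coefficients_order1(direction):
    """Real spherical harmonics of order 0 and 1 evaluated for a light direction.

    Returned in the order Y(0,0), Y(1,-1), Y(1,0), Y(1,1).
    """
    x, y, z = direction
    norm = math.sqrt(x * x + y * y + z * z)
    x, y, z = x / norm, y / norm, z / norm
    return [0.282095, 0.488603 * y, 0.488603 * z, 0.488603 * x]


def relight(basis_images, coefficients):
    """Weighted sum of basis images; negative results are clamped to zero."""
    if len(basis_images) != len(coefficients):
        raise ValueError("need exactly one coefficient per basis image")
    height, width = len(basis_images[0]), len(basis_images[0][0])
    out = [[0.0] * width for _ in range(height)]
    for image, weight in zip(basis_images, coefficients):
        for row in range(height):
            for col in range(width):
                out[row][col] += weight * image[row][col]
    return [[max(0.0, value) for value in row] for row in out]
```

In an interactive setting the light direction would follow the user's input, and only the four weights would need to be recomputed for each new direction.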

7 Haptic Search

As more collection items are digitized and put on display in digital frameworks, accessing these objects will be done through search tools. While the exhibition of real, tangible objects allows the viewer to browse the collection and engage in serendipitous encounters and findings, in collections that make their holdings available through search interfaces this form of serendipity is difficult to establish. In the library context in particular, serendipitous search has mostly been replaced by search-term-driven interfaces that rely on abstract descriptions of the desired items. The importance of serendipity for innovative and creative thinking has been highlighted ever since cataloguing and reference systems began to replace the real physical walk through the rows of shelves [16]. The same desire for accidental findings and surprises motivated information science pioneers like Vannevar Bush, Ted Nelson and Douglas Engelbart, who aimed to foster serendipity in the computational tools they built. The importance of serendipity as a design goal in the interface design for search tools was renewed through more recent studies [9].

Nevertheless, most current search methods for collections rely on the textual processing of meta-data. The search interfaces normally demand the entry of search terms to describe the objects of interest, and an increasing move towards digitized cultural heritage holdings will introduce the same movement away from the pleasures and rewards of working with physical object collections. The LibraryMachine project is therefore dedicated to rethinking the search approaches and to integrating the qualities of abstract search and concrete browsing into its toolset. The use of spatial and temporal object representations provides an opportunity to restore some of the associative and haptic qualities of the encounter with real objects to the abstract search of digital resources. The salient qualities involved in working with real tangible objects in a museum setting are the sensual concreteness of the objects and their real spatial proximity. The concrete presence of the object allows a comprehensive and conclusive grasp of the object and its qualities, which, compared to an abstract description through search terms, is more efficient and intuitive. It activates the same perceptual propensities that are at work in data visualization and are responsible for its cognitive efficiency [10: 30].

Visual perception is to a great extent supported by our haptic sense. Even if we do not touch an object, prior haptic experiences allow us to infer the haptic aspects of an object that is only perceived visually. The visual stimulus is associated with a certain sensory-motor action and evokes a feeling as if the haptic stimulus was perceived. This effect is referred to as pseudo-haptic sensation and is close to a form of sensory illusion [19]. Pseudo-haptic sensation has been found to be effective in the execution of shape identification and interaction tasks [2]. Leveraging these pseudo-haptic effects is one of the strategies of the search interface concept of the LibraryMachine. The information display is predominantly visual and uses a spatial setting based on the metaphor of a library or museum building housing the objects that are displayed. The spatial qualities of the display support the visual processing and pseudo-haptic cues. In addition to the visual focus we are using a large-format touch screen as display device for the search interface, which allows us to implement gestural interactions with the objects. Search hits are displayed as a “whirlwind” containing the objects. The “whirlwind” can be navigated by rotating and scrolling it. In this way the user is able to ‘pseudo-tangibly’ interact with the objects and locate those that attract interest.

The “whirlwind” (WW) can be populated in two ways, either by entering search terms in a classical directed search, or by gradual refinement of its content. When the user approaches the screen, the WW begins to spin and pick up objects from various thematic areas. As the user comes closer, the WW slows down, and it stops once the user is close enough to the touch screen to interact with it. At this stage the user can navigate the WW and refine its content. The approach phase, during which the user is tracked by a Microsoft Kinect depth camera, extends the interaction sequence with the interface to include full body movement. Gestures and body movement are important aspects in parsing the spatial information provided by the 3d object representations and developing a ‘feeling’ for the objects.
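
The approach behaviour can be illustrated with a simple mapping from the tracked user distance to the rotation speed of the WW. This is a minimal sketch under assumptions: the linear slow-down, the distance thresholds, the maximum speed and the state names are hypothetical values chosen for illustration, and the distance is taken as a plain number rather than read from the depth camera.

```python
def interaction_state(distance_m, detect_range_m=4.0, touch_range_m=0.6):
    """Classify the user's position relative to the screen (assumed thresholds)."""
    if distance_m > detect_range_m:
        return "idle"          # nobody within tracking range
    if distance_m <= touch_range_m:
        return "interactive"   # user can reach the touch screen
    return "approach"


def whirlwind_speed(distance_m, detect_range_m=4.0, touch_range_m=0.6,
                    max_speed_dps=90.0):
    """Rotation speed in degrees per second for a given user distance.

    The WW starts spinning at full speed when the user enters tracking range
    and slows down linearly until it stops at touch distance.
    """
    if interaction_state(distance_m, detect_range_m, touch_range_m) != "approach":
        return 0.0
    progress = (distance_m - touch_range_m) / (detect_range_m - touch_range_m)
    return max_speed_dps * progress
```

Under these assumed values a user halfway between the edge of the tracking range and the screen would see the WW turning at roughly half its maximum speed.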

The hypothesis of the LibraryMachine project is that with the inclusion of spatio-temporal data we need to rethink the design and interaction strategies used to search for collection items. The situation is similar to the research that began when large-scale image scanning became available: new interface designs for image search were developed and tested, and new interaction methodologies and meta-information requirements relevant to image search had to be developed [27]. This first phase of the project contributes to formulating and testing methods of object representation and the associated search and retrieval methods.

8 Discussion and Future Perspectives

In this article we discussed the first phase of the LibraryMachine project, which explores methods of 3d and dynamic object capture for cultural heritage resources. The first phase is a proof-of-concept implementation that developed a foundational set of techniques for capture, search and interaction. We have developed a workflow of object capture techniques and a data management and search infrastructure to handle and display the data. These techniques are now in a first stage of user testing and will serve as the basis for the second phase of the project. The second phase will scale the number of represented objects to include a representative set of the USC Libraries' Special Collections that is large enough to assess real-world search tasks. In parallel we are planning to develop an immersive version of the search and display interface using a virtual reality system.

Another research vector of the second phase will be the extension of the search methods developed in the project phase discussed in this article. People often use gestures to describe the shapes or sizes of objects. These non-verbal gestures form an important part of how we communicate, and we are often better at describing an object through gestures than through words. Neuroscientists regard gestures as part of an integrated system of language production and comprehension [15]. Investigating how gestures can be captured, analyzed and used in the search process will be an important part of creating medium-specific search approaches. The computational analysis of gestures [14] and the creation of gestural interfaces are active fields of research.

The third phase of the project will consist of a full-scale implementation of the system to support real-world user traffic in the special collections. The focus of this phase will be on the solid implementation of the methods and techniques developed in the first two phases and on the extensive capture of collection objects.

The LibraryMachine project is a collaboration of members of the Media Arts and Practice Division and the Interactive Media and Games Division of the School of Cinematic Arts, and the Libraries of the University of Southern California.

We would like to thank the MxR lab of the University of Southern California for their support with the near-field capture technique.

References

1. About Smithsonian X 3D | Smithsonian X 3D (2016). http://3d.si.edu/about. Accessed 27 Feb 2018

2. Ban, Y., Narumi, T., Tanikawa, T., Hirose, M.: Modifying an identified position of edged shapes using pseudo-haptic effects. In: Proceedings of the 18th ACM Symposium on Virtual Reality Software and Technology, pp. 93–96. ACM (2012). https://doi.org/10.1145/2407336.2407353

3. Bennett, T.: The Birth of the Museum: History, Theory, Politics. Routledge, London, New York (1995)

4. Bevan, A., Li, X., Martinón-Torres, M., Green, S., Xia, Y., Zhao, K., Zhao, Z., Ma, S., Cao, W., Rehren, T.: Computer vision, archaeological classification and China’s terracotta warriors. J. Archaeol. Sci. 49, 249–254 (2014). https://doi.org/10.1016/j.jas.2014.05.014

5. Bolas, M., Kuruvilla, A., Chintalapudi, S., Rabelo, F., Lympouridis, V., Barron, C., Suma, E., Matamoros, C., Brous, C., Jasina, A., Zheng, Y., Jones, A., Debevec, P., Krum, D.: Creating near-field VR using stop motion characters and a touch of light-field rendering. In: ACM SIGGRAPH 2015 Posters, p. 19:1. ACM (2015). https://doi.org/10.1145/2787626.2787640

6. The British Museum on Sketchfab - Sketchfab. https://sketchfab.com/britishmuseum. Accessed 27 July 2018

7. Cultlab3D - Welcome. http://www.cultlab3d.de/. Accessed 27 Feb 2018

8. Ducke, B., Score, D., Reeves, J.: Multiview 3D reconstruction of the archaeological site at Weymouth from image series. Comput. Graph. 35(2), 375–382 (2011). https://doi.org/10.1016/j.cag.2011.01.006

9. Edward Foster, A., Ellis, D.: Serendipity and its study. J. Documentation 70(6), 1015–1038 (2014). https://doi.org/10.1108/jd-03-2014-0053

10. Few, S.: Now You See It: Simple Visualization Techniques For Quantitative Analysis. Analytics Press, Oakland (2009)

11. Fraunhofer technology thrills Frankfurt museum | Fraunhofer IGD. http://www.igd.fraunhofer.de/en/Institut/Abteilungen/CHD/AktuellesNews/Fraunhofer-technology-thrills-Frankfurt-museum. Accessed 30 Apr 2016

12. Ginzburg, C.: Clues, Myths, and the Historical Method. Johns Hopkins University Press, Baltimore (1992)

13. Green, J., Pridmore, T., Benford, S.: Exploring attractions and exhibits with interactive flashlights. Pers. Ubiquit. Comput. 18(1), 239–251 (2014). https://doi.org/10.1007/s00779-013-0661-3

14. Holz, C., Wilson, A.: Data miming: inferring spatial object descriptions from human gesture. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, pp. 811–820. ACM (2011). https://doi.org/10.1145/1978942.1979060

15. Kelly, S.D., Manning, S.M., Rodak, S.: Gesture gives a hand to language and learning: perspectives from cognitive neuroscience, developmental psychology and education. Lang. Linguist. Compass 2(4), 569–588 (2008). https://doi.org/10.1111/j.1749-818x.2008.00067.x

16. Krajewski, M.: Paper machines about cards & catalogs, 1548–1929. http://search.ebscohost.com/login.aspx?direct=true&scope=site&db=nlebk&db=nlabk&AN=414111. Accessed 30 Apr 2016

17. Levoy, M., Pulli, K., Curless, B., Rusinkiewicz, S., Koller, D., Pereira, L., Ginzton, M., Anderson, S., Davis, J., Ginsberg, J., Shade, J., Fulk, D.: The digital Michelangelo project: 3D scanning of large statues. In: Proceedings of the 27th Annual Conference on Computer Graphics and Interactive Techniques, pp. 131–144. ACM Press/Addison-Wesley Publishing Co. (2000). https://doi.org/10.1145/344779.344849

18. Levoy, M., Hanrahan, P.: Light field rendering. In: Proceedings of the 23rd Annual Conference on Computer Graphics and Interactive Techniques, pp. 31–42. ACM (1996). https://doi.org/10.1145/237170.237199

19. Lécuyer, A.: Simulating haptic feedback using vision: a survey of research and applications of pseudo-haptic feedback. Presence Teleoper. Virtual Environ. 18(1), 39–53 (2009). https://doi.org/10.1162/pres.18.1.39

20. Lin, Y., Ahn, J., Brusilovsky, P., He, D., Real, W.: ImageSieve: exploratory search of museum archives with named entity-based faceted browsing. In: Proceedings of the 73rd ASIS&T Annual Meeting on Navigating Streams in an Information Ecosystem - Volume 47, American Society for Information Science, pp. 38:1–38:10 (2010). http://dl.acm.org/citation.cfm?id=1920331.1920386

21. Mannoni, B.: A virtual museum. Commun. ACM 40(9), 61–62 (1997). https://doi.org/10.1145/260750.260772

22. Mateevitsi, V., Sfakianos, M., Lepouras, G., Vassilakis, C.: A game-engine based virtual museum authoring and presentation system. In: Proceedings of the 3rd International Conference on Digital Interactive Media in Entertainment and Arts, pp. 451–457. ACM (2008). http://doi.org/10.1145/1413634.1413714

23. McKay, D., Buchanan, G.: Boxing clever: how searchers use and adapt to a one-box library search. In: Proceedings of the 25th Australian Computer-Human Interaction Conference: Augmentation, Application, Innovation, Collaboration, pp. 497–506. ACM (2013). https://doi.org/10.1145/2541016.2541031

24. The Metropolitan Museum of Art - Thingiverse. https://www.thingiverse.com/met/collections. Accessed 27 Feb 2018

25. Million Image Database: Goals — The Institute for Digital Archaeology. http://digitalarchaeology.org.uk/new-page/. Accessed 30 Apr 2016

26. Mooers, C.N.: Theory digital handling non-numerical information and its implications to machine economics. Zator Technical Bulletin, 48 (1950)

27. Ren, K., Sarvas, R., Ćalić, J.: Interactive search and browsing interface for large-scale visual repositories. Multimedia Tools Appl. 49(3), 513–528 (2010). https://doi.org/10.1007/s11042-009-0445-y

28. Rinaldo, K.: Evaluating the future: special collections in art libraries. Art Documentation J. Art Libr. Soc. North America 26(2), 38–47 (2007). https://doi.org/10.2307/27949468

29. Sabharwal, A.: Digital Curation in the Digital Humanities. Elsevier, Waltham (2015)

30. Smithsonian X 3D. http://3d.si.edu/. Accessed 27 Feb 2018

31. Status of Technology and Digitization in the Nation’s Museums and Libraries. Institute of Museum and Library Services, Washington D.C. (2002)

32. Status of Technology and Digitization in the Nation’s Museums and Libraries. Institute of Museum and Library Services, Washington D.C. (2006)

33. Toms, E.G., Bartlett, J.C.: An approach to search for the digital library. In: Proceedings of the 1st ACM/IEEE-CS Joint Conference on Digital Libraries, pp. 341–342. ACM (2001). https://doi.org/10.1145/379437.379723

34. Tunwattanapong, B., Ghosh, A., Debevec, P.: Practical image-based relighting and editing with spherical-harmonics and local lights. In: Proceedings of the European Conference on Visual Media and Production (CVMP). IEEE, Washington, D.C. (2011)

35. What is Intangible Cultural Heritage? - intangible heritage - Culture Sector - UNESCO. http://www.unesco.org/culture/ich/en/what-is-intangible-heritage-00003. Accessed 27 Feb 2018

36. Windhouwer, M.A., Schmidt, A.R., Van Zwol, R., Petkovic, M., Blok, H.E.: Flexible and scalable digital library search. In: Proceedings of the 27th VLDB Conference, Rome, Italy (2001)

37. Zollhöfer, M., Siegl, C., Vetter, M., Dreyer, B., Stamminger, M., Aybek, S., Bauer, F.: Low-cost real-time 3d reconstruction of large-scale excavation sites. J. Comput. Cult. Herit. 9(1), 2:1–2:20 (2015). https://doi.org/10.1145/2770877

Copyright information

© 2018 Springer International Publishing AG, part of Springer Nature

About this paper

Cite this paper

Kratky, A. (2018). Acquisition, Representation and Retrieval of 3D Dynamic Objects. In: Antona, M., Stephanidis, C. (eds) Universal Access in Human-Computer Interaction. Virtual, Augmented, and Intelligent Environments. UAHCI 2018. Lecture Notes in Computer Science, vol 10908. Springer, Cham. https://doi.org/10.1007/978-3-319-92052-8_39

DOI: https://doi.org/10.1007/978-3-319-92052-8_39

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-92051-1

Online ISBN: 978-3-319-92052-8

eBook Packages: Computer Science, Computer Science (R0)