Abstract

Microservices are an architectural style that decomposes the functionality of an application system into several small functional units. The services are implemented and managed independently from each other. Breaking up monolithic structures into a microservice architecture increases the number of single components massively. Thus, effective management of the dependencies between them is required. This task can be supported with the creation and evaluation of architectural models. In this work, we propose an evaluation approach for microservice architectures based on identified architecture principles from research and practice like a small size of the services, a domain-driven design or loose coupling. Based on a study showing the challenges in current microservice architectures, we derived principles and metrics for the evaluation of the architectural design. The required architecture data is captured with a reverse engineering approach from traces of communication data. The developed tool is finally evaluated within a case study.

Keywords

1 Introduction

Microservices are an emerging style for designing software architectures to overcome current issues with monoliths, such as the difficulties of maintenance and system evolution, but also their limited scalability. The style is characterized by building an application through the composition of independent functional units, each running in its own process and communicating through message passing [1]. The microservice architectural style enables the creation of scalable, reliable and agile software systems [2]. With automated deployment, microservices enable shorter product development cycles and serve the need for more flexible software systems.

Microservice systems can be composed of hundreds or even thousands of services [3]. Complexity increases also due to the nature of a distributed system and the typically high release frequency [4]. Managing and monitoring those systems is essential [2,3,4]. This includes the detection of failures and anomalies at runtime but also ensuring a suitable architecture design for example regarding consistency and fault tolerance [2].

Organizations employing microservice architectures require a “systematic understanding of maintainability as well as metrics to automatically quantify its degrees” [4]. Analyzing the performance of the system and understanding failures that occur during runtime is essential to understand the system as a whole [5]. Existing measurement approaches for service- or object-oriented systems are difficult to apply within microservice architectures [4]. Since microservice systems can contain up to thousands of single services, the architectural models get very large and complex. If they are manually created, typical problems in practice are inconsistent and incomplete models. Often information is missing or only a part of the architecture is represented. The up-to-dateness of the data is also questionable in many cases. Recovering the architecture from source code or other artifacts is seen as one option to improve the management of microservice systems [3]. However, source code is not always available or a great diversity of different programming languages hinders its analysis.

In the following we present a method and tool for the evaluation of microservice architectures based on a reconstructed architectural model. In a first step, we analyzed current literature (Sect. 2) and five microservice projects (Sect. 3) for microservice architecture principles. From these principles we derived the metrics that are used to evaluate the architecture and to identify hot spots (Sect. 4.1). Hot spots indicate parts of the architecture, where problems may occur and thus require a detailed consideration. The required architectural model is reconstructed based on communication data to ensure its correctness and completeness (Sect. 4.2). Based on these concepts we implemented the Microservice Architecture Analysis Tool MAAT (Sect. 5) and integrated it into an existing project for evaluation purposes (Sect. 6).

2 Foundations

2.1 Characteristics of Microservices Systems

A microservice system is a decentralized system that is composed of several small services that are independent from each other. The communication takes place via light-weight mechanisms, e.g. messaging. Instead of a central orchestration of the services, a decentralized management approach like choreography is applied. Core principles of a microservice architecture are loose coupling and high cohesion [1, 6,7,8]. Microservice architectures follow a domain-driven design, where each microservice is responsible for one bounded context [2, 8,9,10]. This means that a microservice provides only a limited amount of functionality serving specific business capabilities. Microservices are developed independently from each other, i.e. they are independent regarding the utilized technologies and programming languages, the deployment and also the development team [2, 10,11,12]. A team is responsible for the full lifecycle of a microservice, from development to operation [1]. The build and release process is automated and enables continuous delivery [1, 8, 11]. According to [5], scalability, independence, maintainability (including changeability), deployment, health management and modularity (i.e. single responsibility) are the most frequently mentioned attributes of microservice systems in the literature.

During implementation of a microservice system, two major issues have to be considered. The first one addresses challenges due to the nature of distributed systems and the second one the context definition of a microservice. Finding the right granularity of microservices is essential, since badly designed microservices increase the communication complexity of the system [2, 10, 11]. They also reduce the extensibility of the system and impede later refactoring and the overall management.

Since communication is essential in such a system, network communication, performance and complexity are important issues [1, 5, 11, 12]. The system must be able to deal with latency and provide mechanisms for debugging, monitoring, auditing and forensic analysis. The architectural design has to support the openness of the system and has to deal with its heterogeneity [1, 13]. Additionally, fault handling and fault tolerance [5, 13] as well as security issues [1] must be taken into account.

2.2 Service Metrics for Architecture Evaluation

Scenario-based or metric-based evaluation is required to assess the quality attributes of a software system and provide a solid foundation for planning activities [14]. A large number of metrics exist for measuring and evaluating services and service-based systems. [14] provides an overview of the metrics in current literature with a focus on maintainability and analyzes their applicability to microservice systems. With the exception of centralization metrics, the majority of the identified metrics are also applicable to microservice systems. However, specific evaluation approaches for microservice systems are rare, and practicable, automatic evaluation methods in particular are missing [4].

In [4] a maintainability model for service-oriented systems and also microservice systems is presented. For the identified quality attributes the authors determine applicable metrics to measure the system architecture. For example, the coupling degree of a service can be measured considering the number of consumed services, the number of consumers and the number of pairwise dependencies in the system. Metrics for measuring cohesion are the diversity degree of parameter types at the interfaces. A highly cohesive service can also be identified considering the distribution of using services to the provided endpoints. Granularity is for example measured with the number of exposed interface operations. Further quality attributes are complexity and code maturation. A weakness of [4] is the missing tool support and integration into the development process.
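The coupling measures named above can be illustrated with a short sketch. It is not the implementation from [4]; it merely assumes that the architecture is available as a list of (consumer, provider) service pairs, and the function name `coupling_counts` is hypothetical.

```python
from collections import defaultdict


def coupling_counts(dependencies):
    """Count consumed services and consumers per service.

    dependencies: iterable of (consumer, provider) service-name pairs
    (an assumed input format). Returns the per-service counts and the
    number of distinct pairwise dependencies in the system.
    """
    # Ignore duplicates and self-calls so that each pair counts once.
    pairs = {(c, p) for c, p in dependencies if c != p}
    consumed = defaultdict(set)   # service -> services it calls
    consumers = defaultdict(set)  # service -> services calling it
    for consumer, provider in pairs:
        consumed[consumer].add(provider)
        consumers[provider].add(consumer)
    services = set(consumed) | set(consumers)
    per_service = {
        s: {"consumed": len(consumed[s]), "consumers": len(consumers[s])}
        for s in services
    }
    return per_service, len(pairs)
```

For the pairs `("a", "b")`, `("a", "c")` and `("b", "c")`, service `a` consumes two services and has no consumers, while the system contains three pairwise dependencies.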

In [15] constraints and metrics are presented to automatically evaluate microservice decomposition architectures. The authors define metrics to assess pattern conformance regarding decomposition. They focus on two aspects, the independence of the microservices and the existence of shared dependencies or components. The metrics and constraints rely on information about the connector type, i.e. whether it is an ‘in-memory’ connector or a loosely coupled connector. For example, to quantify the degree of independence, the ratio of the number of independent clusters to the number of all non-external components is used. Ideally, each cluster has a size of 1 and thus the resulting ratio is 1. Components are aggregated into a cluster if they have an in-memory connection.
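The independence ratio can be sketched as follows. This is only our reading of the description above, not the original definition from [15]: components joined by in-memory connectors are merged with a union-find structure, and the number of resulting clusters is divided by the number of non-external components. The function name and the input format are assumptions.

```python
def independence_ratio(components, in_memory_links):
    """Ratio of independent clusters to non-external components.

    components: list of non-external component names (must be non-empty).
    in_memory_links: iterable of (a, b) pairs joined by an in-memory connector.
    """
    parent = {c: c for c in components}

    def find(x):
        # Follow parent pointers to the cluster representative,
        # shortening the path on the way (path halving).
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    for a, b in in_memory_links:
        parent[find(a)] = find(b)  # merge the two clusters

    clusters = {find(c) for c in components}
    return len(clusters) / len(components)
```

With four components and one in-memory link, three clusters remain and the ratio is 0.75; without any in-memory link the ratio is the ideal value 1.0.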

3 Challenges Within Microservice Architectures in Practice

Within an exploratory study we analyzed the challenges in five different microservice projects. Table 1 presents the projects with their type, duration, size (in number of microservices, MS) and the utilized technologies. We conducted in-depth interviews with selected experts from the projects.

Each interview consisted of four parts: In the introduction, the purpose of this study and the next steps were presented. Afterwards, the interview partner was asked to describe the project, including her own role in the project. The first main part of the interview was about identifying challenges and problems within the project. This also included the reason for choosing a microservice architecture. Finally, an open part was left to discuss in detail project-specific topics that had not been covered so far. Resulting topics were, for example, the size of microservices, rules for the decomposition into small services, use cases covering multiple microservices and refactorings affecting more than one microservice. For some projects, asynchronous communication, data consistency, deployment and testing were addressed as well.

Especially in larger projects, context definition of microservices was mentioned as a major challenge (Pr1, Pr5). Questions in this context were ‘What is a microservice?’, ‘What size does it have?’ and ‘Which tasks does the microservice implement?’. With these questions as a baseline, the problems arising from an unsuitable service decomposition were elaborated. Discussed consequences are an increasing communication complexity, which leads to performance weaknesses, and the difficult integration of new features, which leads to an increasing time-to-market. An unsuitable breakdown into microservices tends to produce more dependencies, and thus the developer has to understand larger parts of a system in order to implement a new feature.

Another challenge is refactoring that affects more than one microservice (Pr1, Pr5). In contrast to monolithic systems, there is no tool support to perform effective refactorings of microservice systems. Additionally, the complexity increases through the utilization of JSON objects or similar formats at the interfaces.

Closely related to that aspect is the issue of non-local changes (Pr1, Pr3). Experience in the projects has shown that changes made to one microservice may not be limited to this microservice. For example, changing interface parameters affects all microservices using that interface. Another reason why changes in one microservice may lead to changes in other services is a change in the utilized technologies. Experience in the projects has shown that adherence to homogeneity is advised for better maintainability, robustness and understandability of the overall system. Even if independence of technology and programming languages is possible and a huge advantage, technology decisions should be made system-wide, and deviations should be made only if necessary. This is why changes affecting the technology of one microservice may also lead to changes in other microservices.

A challenge that addresses the design of microservice systems is cyclic dependencies (Pr1). These dependencies cannot be identified automatically and cause severe problems when deploying new versions of a service within a cycle. To prevent a system failure, the compatibility of the new version with all services in the cycle has to be ensured.

Keeping an overview of the system is a big challenge in the projects (Pr1, Pr4, Pr5). Large microservice systems in particular are developed using agile development methods within different autonomous teams. Each team is responsible for its own microservices, carries out refactorings and implements new functionality on its own. Experience in the projects shows that it is hard not to lose track of the system. The complexity of this task increases, since the dataflow and service dependencies cannot be statically analyzed as in monolithic systems.

Besides the above challenges, monitoring and logging (Pr1, Pr4), data consistency (Pr4) and testing (Pr5) were also mentioned in the interviews. Monitoring and logging address the challenge of finding, analyzing and solving failures. Testing is aggravated by the nature of a distributed system and by the heavy usage of asynchronous communication, which causes large problems concerning data consistency.

4 Evaluating Microservice Architectures

To address the identified challenges in current microservice projects we propose a tool-supported evaluation and optimization approach. The proposed method contains four steps (Fig. 1). Since we do not make the assumption of having an up-to-date architectural model, the first step is retrieving the required architectural data. Upon this information an architectural model is built, representing the microservice system with the communication dependencies. The model is used to evaluate the architecture design based on identified principles with corresponding metrics. Finally the results are visualized using a dependency graph and coloring.

In the following, the retrieved principles with their metrics are presented (Sect. 4.1) as well as the data retrieval approach (Sect. 4.2). The visualization and the architectural model are shown by way of example for our tool in Sect. 5.

4.1 Evaluation Criteria: Principles and Metrics

Based on the literature (Sect. 2) and the results of the structured interviews (Sect. 3) we identified 10 principles for the design of microservice architectures. Table 2 provides an overview of them with their references to literature and the related projects.

To evaluate the principles, we derive metrics using the Goal Question Metric (GQM) approach [18]. To ensure a tool-based evaluation, the metrics should be evaluable with the information gathered automatically in the first two method steps. For the metric definition we considered the metrics proposed by [4] to measure quality attributes of a service-oriented system like coupling, cohesion and granularity. The metrics proposed in [15] were not practicable for our approach, since the required information cannot be gathered automatically from communication logs. In-memory dependencies in particular cannot be captured this way.

The overall goal is adherence to the principles. A possible question in this context is, for example, ‘Are the microservices of a small size?’ (P1). The derived metric for the small size principle counts the number of synchronous and asynchronous interfaces of a service (M1). A synchronous (or asynchronous) interface indicates a functionality that a service provides via an endpoint and that can be consumed synchronously (or asynchronously). The number of interfaces is an indicator of the amount of implemented functionality and can thus be used to measure the size of a microservice. Due to the relation to the implemented functionality, the metric can also be used for evaluating P2 (one task per microservice). Additionally, the distribution of the synchronous calls (M2) can be used to determine services that fulfill several tasks. For this purpose, the quotient of the minimum and maximum number of synchronous calls at the interfaces of a service is considered. If the quotient is small, the number of interface calls varies widely and the microservice may implement more than one task. A high diversity in the number of interface calls hampers scalability (P3). Executing several instances of a service to deal with the high number of incoming requests also leads to a duplication of the functionality behind the less requested interfaces.
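A minimal sketch of M1 and M2 may help to make the definitions concrete. It assumes that the number of calls per interface of one service is available as a dictionary; the function names are hypothetical and not taken from the tool.

```python
def interface_count(calls_per_interface):
    """M1: number of (a)synchronous interfaces of one service."""
    return len(calls_per_interface)


def call_distribution(sync_calls_per_interface):
    """M2: quotient of the minimum and maximum number of synchronous
    calls over the interfaces of one service.

    Returns None if the service has no synchronous interface or if no
    call was recorded, since the quotient is undefined in that case.
    """
    counts = list(sync_calls_per_interface.values())
    if not counts or max(counts) == 0:
        return None
    return min(counts) / max(counts)
```

For a service with the call counts `{"/search": 1000, "/export": 10}`, M1 is 2 and M2 is 0.01. Such a small quotient hints at a service that may implement more than one task.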

To evaluate the principle of loose coupling and high cohesion (P4), the number of synchronous and asynchronous dependencies (M3) can be used. This metric is calculated at service level and denotes the number of service calls, either via synchronous or asynchronous communication dependencies. This metric is also used to assess the principle of domain-driven design (P5). If a system is built around business capabilities, the services own the majority of the required data themselves. In contrast, a system built around business entities contains more dependencies, since more data has to be exchanged. A further indicator that speaks against a domain-driven design is a long synchronous call trace. M4 provides the longest synchronous call trace identified in the microservice system.
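M3 can be sketched as a count of distinct outgoing dependencies per service, separated by communication type. The sketch assumes that a dependency is a pair of source and target service and that the logged calls are available as (source, target, kind) tuples; both are assumptions made for illustration.

```python
from collections import defaultdict


def dependency_counts(calls):
    """M3: number of distinct synchronous and asynchronous
    dependencies per calling service.

    calls: iterable of (source, target, kind) tuples,
    where kind is "sync" or "async" (assumed input format).
    """
    targets = defaultdict(lambda: {"sync": set(), "async": set()})
    for source, target, kind in calls:
        # Repeated calls to the same target form a single dependency.
        targets[source][kind].add(target)
    return {
        service: {kind: len(found) for kind, found in kinds.items()}
        for service, kinds in targets.items()
    }
```

Many calls between the same two services collapse into one dependency, which mirrors the aggregation of logged calls into communication dependencies.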

The principles P6 and P7, small network complexity and manageability of the system, can be evaluated using the number of (a)synchronous dependencies (M3). A small number of dependencies indicates a low complexity of the network and also supports the clarity of the whole system. The network complexity increases if cyclic communication dependencies exist. M6 provides the number of strongly connected components in the microservice system in order to refine the evaluation of P7. Only the synchronous dependencies are considered here, since they cause blocking calls. This metric is closely related to principle P9, which says that a microservice system should not contain cyclic dependencies.
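The following sketch shows one way to compute M6. It uses Kosaraju's algorithm, which is our choice for illustration and not necessarily the algorithm used in the tool. Since every single service trivially forms a strongly connected component, the sketch reports only components that contain a cycle, i.e. components with more than one service or with a self-call.

```python
from collections import defaultdict


def sync_cycles(sync_edges):
    """M6: strongly connected components of the synchronous
    dependency graph that contain at least one cycle.

    sync_edges: iterable of (caller, callee) pairs (assumed format).
    """
    graph, reverse, nodes = defaultdict(set), defaultdict(set), set()
    for a, b in sync_edges:
        graph[a].add(b)
        reverse[b].add(a)
        nodes.update((a, b))

    # Pass 1: record the finishing order with an iterative DFS.
    order, seen = [], set()
    for start in nodes:
        if start in seen:
            continue
        seen.add(start)
        stack = [(start, iter(graph[start]))]
        while stack:
            node, successors = stack[-1]
            nxt = next((n for n in successors if n not in seen), None)
            if nxt is None:
                order.append(node)
                stack.pop()
            else:
                seen.add(nxt)
                stack.append((nxt, iter(graph[nxt])))

    # Pass 2: collect components on the reversed graph.
    components, assigned = [], set()
    for start in reversed(order):
        if start in assigned:
            continue
        assigned.add(start)
        component, todo = set(), [start]
        while todo:
            node = todo.pop()
            component.add(node)
            for prev in reverse[node]:
                if prev not in assigned:
                    assigned.add(prev)
                    todo.append(prev)
        components.append(component)

    return [c for c in components
            if len(c) > 1 or any(n in graph[n] for n in c)]
```

The length of the returned list is the value of M6, and each returned set can be drawn in its own color to distinguish the cycles.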

To provide indicators for the system performance (P10) the longest synchronous call trace (M4) and the average size of asynchronous messages (M5) are proposed. Large messages may cause performance bottlenecks and the problem of network latency increases with a rising number of services within one call trace. Both factors have negative impact on the overall performance of a microservice system. Finally, P8 describes the independence of the microservice in different aspects like technology, lifecycles, deployment but also the development team. With the concept of utilizing communication and trace data we are currently not able to provide a meaningful metric. Integrating static data e.g. from source code repositories in future work can address this issue.
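Both performance indicators can be sketched in a few lines. The sketch assumes that each synchronous trace is available as a mapping from a span identifier to the identifier of its parent span (None for the root), that the spans form a tree without cycles, and that the trace length is measured in spans. These are illustrative assumptions and not the data format of the tool.

```python
from collections import defaultdict


def longest_sync_trace(parent_of):
    """M4: length of the longest chain of nested synchronous calls.

    parent_of: dict mapping span id -> parent span id (None for a root).
    Assumes the spans form a tree; a cyclic mapping would not terminate.
    """
    def depth(span_id):
        steps = 0
        while span_id is not None:
            steps += 1
            span_id = parent_of.get(span_id)
        return steps

    return max((depth(span) for span in parent_of), default=0)


def average_message_size(messages):
    """M5: average size of asynchronous messages per service.

    messages: iterable of (service, size_in_bytes) pairs.
    """
    totals = defaultdict(lambda: [0, 0])  # service -> [bytes, count]
    for service, size in messages:
        totals[service][0] += size
        totals[service][1] += 1
    return {s: total / count for s, (total, count) in totals.items()}
```

For the spans `{"s1": None, "s2": "s1", "s3": "s2", "s4": "s1"}` the longest chain is s1, s2, s3, so M4 is 3.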

4.2 Data Retrieval

For the application of the metrics, the architectural model must contain the following information: the microservices with their names and provided interfaces, and the number, size and source of incoming requests at each interface. A differentiation between synchronous and asynchronous has to be available for the communication dependencies. For synchronous requests, the respective trace information is required in order to interpret the dependencies correctly. Finally, it is recommended to aggregate the microservices within logical groups (clusters). Clustering enables an aggregation of characteristics to higher abstraction levels and thus supports the understandability of the architectural model.
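The required information can be summarized as a small data model. The following classes are a sketch under our own naming, not the schema of the tool; all class, field and method names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set


@dataclass
class Interface:
    endpoint: str
    synchronous: bool            # synchronous vs. asynchronous interface
    request_count: int = 0       # number of incoming requests
    total_bytes: int = 0         # accumulated size of incoming requests
    sources: Set[str] = field(default_factory=set)    # calling services
    trace_ids: Set[str] = field(default_factory=set)  # sync traces only


@dataclass
class Microservice:
    name: str
    cluster: Optional[str] = None  # logical group, assigned manually
    interfaces: Dict[str, Interface] = field(default_factory=dict)

    def record_request(self, endpoint, synchronous, source,
                       size_bytes, trace_id=None):
        """Add one observed request to the model."""
        iface = self.interfaces.setdefault(
            endpoint, Interface(endpoint, synchronous))
        iface.request_count += 1
        iface.total_bytes += size_bytes
        iface.sources.add(source)
        if synchronous and trace_id is not None:
            iface.trace_ids.add(trace_id)
        return iface
```

Recording each logged request through `record_request` yields the number, size and source of requests per interface, from which the metrics of Sect. 4.1 can be derived.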

To be able to perform the evaluation on an up-to-date architectural model, we decided to employ a reverse engineering approach. Such approaches provide techniques to derive a more abstract representation of the system of interest [3]. The authors of [3] provide a two-step extraction method with a final user refinement of the architectural model. They include a static analysis of the source code as well as a dynamic analysis of the communication logs. The focus of our evaluation is on the communication dependencies between the services. We therefore decided to derive the required information from the communication data. Later extensions can include further information from the source code repository. With this approach, we are able to create an architectural model representing the actual behavior of the system. We do not have to estimate performance measurements like the number of messages or the message size. Furthermore, we do not have to deal with the heterogeneity of the technologies and programming languages regarding communication specification. Another option would be the utilization of manually created models. In this case, the number and size of messages have to be estimated and, additionally, these models are often not up to date.

When implementing a dynamic approach for data retrieval, it is important to set an adequate time span for data retrieval, since only communicating services are captured. Additionally, the data retrieval procedure must be performed in a way, that it has no significant impact on the productive system. Especially the performance must be ensured, when integrating such a logging approach. Due to the independence and heterogeneity of microservices, the data retrieval must also be independent from the used technologies and programming languages. And finally, the necessary extensions have to be performed without changing the source code of the microservice. The last point is important to ensure the practicability and acceptance of the approach.

We retrieve the required data from the communication logs of the microservice system. The communication dependencies are retrieved by logging the synchronous and asynchronous messages. The communication endpoints are identified as interfaces. Sources for the data are application monitoring tools and message brokers for aggregated data, or the Open Tracing API to record service calls. The logical aggregation of microservices into clusters is made manually. After establishing the architectural model, a review has to ensure the quality of the model. The recovered architectural model contains only those services that send or receive a message during the logged time span.

5 Tool for Architecture Evaluation: MAAT

For metric execution we implemented a tool that takes the requirements for data retrieval from the previous section into account. The Microservice Architecture Analysis Tool (MAAT) enables an automatic evaluation of the architectural design. The tool provides a visualization of the system within an interactive dashboard and applies the metrics described in Sect. 4.1. Additionally, it provides interfaces for the retrieval of communication data and an automatic recovery of the architectural model. The main goals are to provide an overview of the system and to support the identification of hot spots in the architecture as well as the analysis of the system. It can therefore be used within the development process to ensure the quality of the implemented system, but also in software and architecture audits. Figure 2 shows the architecture of MAAT.

Data retrieval is done within the Data collector component. This component provides two interfaces, one implementing the Open Tracing Interface to capture synchronous communication data and one for logging the messages from asynchronous communication. The Data collector processes this raw information and writes it into the database. Further information about the data retrieval at the interfaces is provided in the next two sections. The Data processor component utilizes the communication data in the database to generate a dependency graph of the system and to calculate the metrics. The results can be accessed via an implemented Frontend but also via a REST interface. The Frontend is responsible for the visualization of the dependency graph and the presentation of the metric results. The data and metric visualization is described in Sects. 5.3 and 5.4.

5.1 Synchronous Data Retrieval

For synchronous data retrieval, the Open Tracing standard is used. Open Tracing is a widely used, vendor-neutral interface for collecting distributed trace data. The motivation for this standard is that many distributed tracing systems exist that collect more or less the same data but use different APIs. Open Tracing unifies these APIs in order to improve the exchangeability of these distributed tracing systems. Zipkin implements the Open Tracing Interface for all common programming languages like Java, C#, C++, JavaScript, Python and Go, and even for some technologies like Spring Boot. The Data collector of MAAT implements the same interface as Zipkin. Thus, the libraries developed for Zipkin can also be used for MAAT to collect synchronous data.

5.2 Asynchronous Data Retrieval

In contrast to synchronous data retrieval, there is no standard available for collecting asynchronous data. Therefore, we had to implement our own interface for asynchronous data retrieval that is independent of any message broker. The Data collector of MAAT implements an interface for asynchronous data expecting the microservice name, the message size, an identification of the endpoint (e.g. the topic), the name of the message broker, a timestamp and the type indicating whether the message was sent or received. The generic interface is implemented for RabbitMQ using the Firehose Tracer to get the data. If the Firehose Tracer is activated, all sent and received messages are also sent to a tracing exchange and enriched with additional metadata. A specific RabbitMQReporter is implemented as an intermediate service between the MAAT Data collector and the message broker RabbitMQ. The task of the RabbitMQReporter is to connect to the tracing exchange of RabbitMQ and to process all messages. It extracts the required information from the messages, finds the name of the microservice that has sent or received the message, and calls the interface of the MAAT Data collector to forward the information.
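The fields expected by the asynchronous interface can be pictured as a simple record. The sketch below also shows one conceivable way to derive asynchronous dependencies from such records, namely by pairing senders and receivers of the same endpoint on the same broker. The record layout, the values "sent" and "received", and the pairing rule are assumptions made for illustration; the text does not state how the tool derives the dependencies.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class AsyncRecord:
    microservice: str   # name of the sending or receiving service
    message_size: int   # size in bytes
    endpoint: str       # e.g. the topic
    broker: str         # name of the message broker
    timestamp: float
    kind: str           # "sent" or "received" (assumed encoding)


def async_dependencies(records):
    """Derive (sender, receiver, endpoint) triples from logged records."""
    senders, receivers = defaultdict(set), defaultdict(set)
    for record in records:
        key = (record.broker, record.endpoint)
        if record.kind == "sent":
            senders[key].add(record.microservice)
        elif record.kind == "received":
            receivers[key].add(record.microservice)
        else:
            raise ValueError(f"unknown record kind: {record.kind!r}")
    return {
        (sender, receiver, key[1])
        for key in senders
        for sender in senders[key]
        for receiver in receivers.get(key, ())
    }
```

An endpoint with a sender but no logged receiver yields no dependency, which matches the observation that only services communicating during the logged time span appear in the model.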

5.3 Data Visualization

The architecture is visualized as dependency graph. Nodes represent microservices and edges represent (a)synchronous dependencies. A solid edge with arrow at the end indicates a synchronous call and a dashed edge with arrow in the middle an asynchronous message. A microservice cluster is identified with a grey rectangle in the background. Figure 3 shows an example representation of a microservice system.

To support navigation and understandability in large microservice systems, a context-sensitive neighborhood highlighting is implemented. When a specific microservice is selected, all outgoing dependencies as well as the respective target services are highlighted. In Fig. 3 on the left, the service named microservice is selected. All directly dependent services (outgoing communication edges) are highlighted too. In the right part, an edge is selected and its source and target are highlighted accordingly. This functionality also adapts to the respective metrics under consideration, i.e. if only synchronous dependencies are considered, only the synchronous neighbors are emphasized.

Additionally, the tool enables the creation of a more abstract view by aggregating the dependencies between clusters. This view provides a high-level overview of the system. If required the user can step into details by dissolving a single aggregated dependency or all aggregated dependencies for a specific cluster.
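The aggregated view can be sketched as a fold of service-level edges onto cluster-level edges. The function name, the edge format and the choice to drop dependencies inside a cluster are our assumptions; they are not taken from the tool.

```python
from collections import Counter


def cluster_view(edges, cluster_of):
    """Aggregate service dependencies to dependencies between clusters.

    edges: iterable of (source, target, kind) service-level dependencies.
    cluster_of: dict mapping each service name to its cluster name.
    Returns a Counter keyed by (source cluster, target cluster, kind)
    that holds the number of aggregated service dependencies.
    """
    aggregated = Counter()
    for source, target, kind in edges:
        source_cluster = cluster_of[source]
        target_cluster = cluster_of[target]
        if source_cluster != target_cluster:  # hide cluster-internal edges
            aggregated[(source_cluster, target_cluster, kind)] += 1
    return aggregated
```

Dissolving an aggregated dependency then corresponds to listing the service-level edges that were counted under the respective key.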

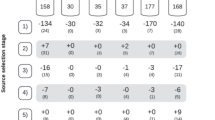

5.4 Metric Evaluation

Besides a textual representation of system and service details, the tool visualizes the metric results within the dependency graph. Except for M6, each metric refers to one microservice, and therefore microservices are marked using the colors green, yellow and red depending on the calculated result. This color coding is intuitive and emphasizes the metric evaluation, so that hot spots can be quickly identified. Figure 4 shows the evaluation of the metric M3, number of asynchronous dependencies, in a fictional system. Since the service distributor has more than 5 asynchronous dependencies, it is colored red, and the service microservice is colored yellow because it has between 4 and 5 asynchronous dependencies. All other microservices have 3 or fewer asynchronous dependencies and are therefore colored green.
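The color coding of this example can be expressed as a simple threshold function. The thresholds below are taken from the fictional example (red above 5, yellow for 4 to 5, green for 3 or fewer); whether the tool uses the same values for other metrics is not stated, so the parameter names and defaults are assumptions.

```python
def color_for(value, yellow_from=4, red_above=5):
    """Map a metric value to a traffic-light color."""
    if value > red_above:
        return "red"
    if value >= yellow_from:
        return "yellow"
    return "green"
```

With these defaults, a service with 6 asynchronous dependencies is colored red, one with 4 or 5 yellow, and one with 3 green.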

Since M6, the number of synchronous cycles, is bound to the whole system the visualization of the metric evaluation differs from the other ones. In this case all microservices and dependencies belonging to a cycle are marked with one color in order to easily spot cycles and distinguish between them.

6 Evaluation and Discussion

For evaluation purposes, we integrated the tool into a microservice project (Pr5) and applied the proposed method. The results were discussed with team members from the project. Additionally, we discuss related work and the strengths and weaknesses of the approach.

The application system of the case study consists of about 50 microservices. Utilized technologies in the project are AWS, Consul, Docker, FeignClient, MongoDB, RabbitMQ and Spring Boot. The project has been under development for two years and is developed by three scrum teams at different locations. To gather the asynchronous communication data, the RabbitMQReporter proposed in Sect. 5 is integrated into the system. Other reporter services are also possible at this point. For synchronous data, Spring Cloud Sleuth, an implementation of the Open Tracing API, is used. An alternative that is independent of Spring Cloud would be brave. In this project, environment variables are used to set the configuration for different target platforms. Thus, the service responsible for the RabbitMQ configuration was extended, as were the microservice variables for Spring Boot. For the integration of MAAT, no changes to the source code were necessary. Small changes have to be made for each microservice regarding the deployment. Altogether, the integration was done in 10 man-days.

After the tool integration, data retrieval was performed during a system test. In the process, 40 microservices were identified, which were clustered into 9 groups. 3,466 synchronous calls could be aggregated to 14 different communication dependencies, and another 49,847 asynchronous calls to 57 dependencies. Gathering the data at runtime can have an impact on performance. According to RabbitMQ, the overhead for asynchronous data retrieval consists only of the additional messages. For synchronous data, it is possible to specify the percentage of traced calls in order to address this issue.

The metrics were executed using the retrieved data and discussed with an architect of the development team. In Fig. 5 the evaluation of the metric ‘Number of asynchronous dependencies’ is presented. According to the result, the services are colored with green, yellow and red. The service marked red in the figure does not fulfill the principle of loosely coupled services. The high number of asynchronous dependencies together with its name (timetable) indicates a violation of the principle domain-driven design, where the service is built around an entity. Further implications from the evaluation were a missing data caching at a microservice and a missing service in the overall system test that was not visualized in the architectural model. The overall architecture was evaluated as good by the architects. This was also represented by the metrics. Nevertheless, several discussion points were identified during the evaluation (see points above).

The tool integration was possible without making changes to the source code. After recovering the architectural model from the communication data, a review was required and only minor corrections had to be made. The evaluation results, i.e. the visualization of the architecture and the metric execution, advanced the discussion about the architectural design. The discussion brought up issues that the team had not been aware of before. Overall, the positive outcome of the metric evaluation confirms the team's perception of having a quite good architecture.

The individual disciplines of architecture recovery and service evaluation are presented in Sect. 2. Approaches that combine both disciplines in some way are distributed tracing systems and application performance management (APM) tools. Distributed tracing systems, e.g. Zipkin, collect data at runtime in order to identify single requests and transactions and to measure performance. Their focus is the runtime behavior of the system. MAAT, in contrast, provides a static view on the communication data with additional concepts for handling the models. APM tools are also analysis tools for microservice systems. Their focus is real-time monitoring to support the operation of those systems, whereas MAAT is primarily used by architects and developers for the design and development of those systems.

7 Conclusion

In an exploratory study of five microservice projects, we identified several challenges. Defining the context of microservices, keeping an overview of the system, and assessing the effects of refactorings and non-local changes are major issues in the projects. Additionally, cyclic dependencies, monitoring and logging, consistency, and testing were topics mentioned by the interviewed experts. To address these challenges, we proposed a tool-supported evaluation method for microservice architectures. Relying on the dynamic communication data, we recovered a static dependency model of the current architecture. Additionally, we determined principles for microservice architecture design from the literature and from the experiences in the considered projects. Using the Goal Question Metric approach, we derived automatically executable metrics to evaluate the recovered dependency model. The visualization of the metric results within the implemented tool MAAT provides quick access to design hot spots of the architecture. The method and the tool were applied within one of the projects. To further evaluate how well the metrics validate a microservice architecture, their application in further use cases is required. Since we were not able to evaluate the independence of the microservices in the different identified aspects, future work has to elaborate the integration of static information, e.g. from the source code repository. This includes combining the dynamically derived data with static data, e.g. source code, as proposed by [3], to improve the significance of the metrics. Additionally, minor improvements such as further support for service clustering and dynamic coloring of the metrics are planned.

References

Dragoni, N., Giallorenzo, S., Lafuente, A.L., Mazzara, M., Montesi, F., Mustafin, R., Safina, L.: Microservices: yesterday, today, and tomorrow. In: Mazzara, M., Meyer, B. (eds.) Present and Ulterior Software Engineering, pp. 195–216. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-67425-4_12

Hasselbring, W., Steinacker, G.: Microservice architectures for scalability, agility and reliability in e-commerce. In: 2017 IEEE International Conference on Software Architecture Workshops (ICSAW), pp. 243–246 (2017)

Granchelli, G., Cardarelli, M., Francesco, P.D., Malavolta, I., Iovino, L., Salle, A.D.: Towards recovering the software architecture of microservice-based systems. In: 2017 IEEE International Conference on Software Architecture Workshops (ICSAW), pp. 46–53 (2017)

Bogner, J., Wagner, S., Zimmermann, A.: Towards a practical maintainability quality model for service-and microservice-based systems. In: Proceedings of the 11th European Conference on Software Architecture: Companion Proceedings, ECSA 2017, pp. 195–198. ACM, New York (2017)

Alshuqayran, N., Ali, N., Evans, R.: A systematic mapping study in microservice architecture. In: 2016 IEEE 9th International Conference on Service-Oriented Computing and Applications (SOCA) (2016)

Newman, S.: Building Microservices. O’Reilly Media Inc., Newton (2015)

Thönes, J.: Microservices. IEEE Softw. 32(1) (2015)

Amundsen, M., McLarty, M., Mitra, R., Nadareishvili, I.: Microservice Architecture: Aligning Principles, Practices, and Culture. O’Reilly Media, Inc., Newton (2016)

Evans, E.J.: Domain-Driven Design. Addison Wesley, Boston (2003)

Fowler, M., Lewis, J.: Microservices - a definition of this new architectural term (2014). https://martinfowler.com/articles/microservices.html. Accessed 29 Sept 2017

Amaral, M., Polo, J., Carrera, D., Mohomed, I., Unuvar, M., Steinder, M.: Performance evaluation of microservices architectures using containers. In: 2015 IEEE 14th International Symposium on Network Computing and Applications (NCA), pp. 27–34 (2015)

Francesco, P.D., Malavolta, I., Lago, P.: Research on architecting microservices: trends, focus, and potential for industrial adoption. In: 2017 IEEE International Conference on Software Architecture (ICSA), pp. 21–30 (2017)

Coulouris, G.F., Dollimore, J., Kindberg, T.: Distributed Systems. Pearson Education Inc., London (2005)

Bogner, J., Wagner, S., Zimmermann, A.: Automatically measuring the maintainability of service- and microservice-based systems - a literature review. In: Proceedings of IWSM/Mensura 17, Gothenburg, Sweden (2017)

Zdun, U., Navarro, E., Leymann, F.: Ensuring and assessing architecture conformance to microservice decomposition patterns. In: Maximilien, M., Vallecillo, A., Wang, J., Oriol, M. (eds.) ICSOC 2017. LNCS, vol. 10601, pp. 411–429. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-69035-3_29

Killalea, T.: The hidden dividends of microservices. Commun. ACM 59(8), 42–45 (2016)

Sharma, S.: Mastering Microservices with Java. Packt Publishing Ltd., Birmingham (2016)

Basili, V.R., Rombach, H.D.: The TAME project: towards improvement-oriented software environments. IEEE Trans. Softw. Eng. 14(6), 758–773 (1988)


Copyright information

© 2018 Springer International Publishing AG, part of Springer Nature

About this paper

Cite this paper

Engel, T., Langermeier, M., Bauer, B., Hofmann, A. (2018). Evaluation of Microservice Architectures: A Metric and Tool-Based Approach. In: Mendling, J., Mouratidis, H. (eds) Information Systems in the Big Data Era. CAiSE 2018. Lecture Notes in Business Information Processing, vol 317. Springer, Cham. https://doi.org/10.1007/978-3-319-92901-9_8

DOI: https://doi.org/10.1007/978-3-319-92901-9_8

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-92900-2

Online ISBN: 978-3-319-92901-9

eBook Packages: Computer Science, Computer Science (R0)