Abstract

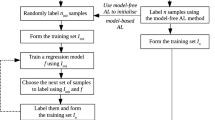

Supervised learning is concerned with building accurate classifiers from a set of labelled examples. However, gathering a large set of labelled examples can be costly and time-consuming. Active learning algorithms try to reduce this labelling cost by issuing only a small number of label queries, chosen from a large set of unlabelled examples, while the classifier is being built. The performance achieved by active learning algorithms, however, does not always meet expectations, and no rigorous performance guarantee, in the form of a risk bound, exists for non-trivial active learning algorithms. In this paper, we propose a novel and easy-to-implement active learning algorithm that has a rigorous performance guarantee (i.e., a valid risk bound) and that performs very well in comparison with some widely used active learning algorithms.
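To make the idea of label queries concrete, the following is a minimal sketch of pool-based active learning on a toy problem. It is not the algorithm proposed in this paper and carries no risk bound. It assumes a noise-free one-dimensional threshold concept, and the names `active_learn_threshold`, `oracle`, `budget`, and the value 0.37 are hypothetical, chosen only for illustration.

```python
import random


def active_learn_threshold(pool, oracle, budget):
    """Learn a 1-D threshold classifier with few label queries.

    pool   -- unlabelled real-valued examples
    oracle -- returns the true label (0 or 1); each call is one label query
    budget -- maximum number of label queries allowed
    Returns (classifier, queries_used).
    """
    points = sorted(pool)
    # Invariant: points[:lo] are known to be 0, points[hi:] are known to be 1.
    lo, hi = 0, len(points)
    queries = 0
    while lo < hi and queries < budget:
        mid = (lo + hi) // 2  # example in the middle of the still-uncertain region
        queries += 1
        if oracle(points[mid]) == 1:
            hi = mid          # everything from mid upward must be 1
        else:
            lo = mid + 1      # everything up to mid must be 0
    boundary = (lo + hi) // 2  # exact if lo == hi, otherwise a best guess
    threshold = points[boundary] if boundary < len(points) else float("inf")
    return (lambda x: 1 if x >= threshold else 0), queries


# Hypothetical setup: 1000 unlabelled points, true threshold 0.37 (noise-free).
rng = random.Random(0)
pool = [rng.random() for _ in range(1000)]
true_label = lambda x: 1 if x >= 0.37 else 0

classifier, queries_used = active_learn_threshold(pool, true_label, budget=20)
errors = sum(classifier(x) != true_label(x) for x in pool)
print(f"label queries: {queries_used}, errors on the pool: {errors}")
```

Under these idealised assumptions the loop reduces to a binary search, so the number of label queries grows only logarithmically with the size of the pool, whereas labelling every example would cost one query per point. Real data are noisy and higher-dimensional, which is where a guarantee such as a risk bound becomes relevant.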

Copyright information

© 2008 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Laviolette, F., Marchand, M., Shanian, S. (2008). Selective Sampling for Classification. In: Bergler, S. (ed.) Advances in Artificial Intelligence. Canadian AI 2008. Lecture Notes in Computer Science, vol 5032. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-540-68825-9_19

DOI: https://doi.org/10.1007/978-3-540-68825-9_19

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-540-68821-1

Online ISBN: 978-3-540-68825-9

eBook Packages: Computer Science, Computer Science (R0)