Abstract

This paper considers the problem of clustering uncertain objects whose locations are described by probability density functions (pdfs). Although K-means has been extended to UK-means to handle uncertain data, most existing work focuses only on improving the efficiency of UK-means, and its clustering quality is rarely considered. Existing work also assumes that all objects have the same weight. However, objects that are far from their cluster representatives should not carry the same weight as objects that are close to them. We therefore propose AdaUK-means, which groups uncertain objects while taking object weights into account. In AdaUK-means, the weights of objects are adjusted by AdaBoost according to the correlation between objects: if a pair of objects is must-link but is grouped into different clusters, the weights of those objects are increased. In our ensemble model, AdaUK-means is run several times, and the objects are then assigned by a voting process. Extensive experiments on both synthetic and real data sets show that AdaUK-means performs better than UK-means.

1 Introduction

Owing to the limitations of instruments, collected data (for example, sensor data) often cannot provide correct or precise information, so data uncertainty has attracted increasing attention. There are three types of data uncertainty: existential uncertainty, value uncertainty and relationship uncertainty. Existential uncertainty [1–4] arises when it is not certain whether a tuple exists in a database. Value uncertainty on a dimension is caused by instrument limitations or delay. Relationship uncertainty [5] arises when the order of values on a dimension is not known and has to be learned. Our clustering problem concerns value uncertainty [6–10].

Several clustering methods have been extended to uncertain data, e.g. Uncertain K-means (UK-means) [6]. Existing methods focus only on improving the efficiency of clustering uncertain data [6–8] and assume that all objects have the same weight. However, objects that are far from their cluster representatives should not carry the same weight as objects that are close to them.

For example, in Fig. 1 the uncertain objects shown in the same color should be grouped into the same cluster. The yellow points mark the locations of the cluster representatives found by UK-means. The objects in the circle, however, are likely to be grouped into another cluster by UK-means. An incorrectly grouped uncertain object should be brought closer to its corresponding cluster representative. Thus, the weights of objects that are must-link but grouped into different clusters should be increased, so that the cluster representative shifts toward the incorrectly grouped objects. In this article, AdaUK-means is proposed to group uncertain objects by considering their weights. AdaUK-means is based on an ensemble model, and the final decision is made by a voting process. The weights of objects are adjusted by AdaBoost [11] according to the correlation between objects: if a pair of objects is must-link but is grouped into different clusters, the weights of those objects are increased. In our ensemble model, AdaUK-means is run several times, and the objects are then assigned by a voting process. The contributions of this work include:

1. Different from UK-means, the weights of objects are considered in our method. The weights of objects will be adjusted during clustering process. The weights of objects which are must-link but grouped to different clusters will be increased in next iteration in AdaUK-means.

2. The ensemble model is used to assign uncertain objects in AdaUK-means. The objects are assigned by a voting process in our method, which is different from existing works assigning the objects directly.

3. The cluster representative is updated by the weighted objects, which is different from UK-means. In UK-means, the cluster representative is the average of the mean vectors of objects assigned to the cluster. The cluster representative considers the weights of objects in AdaUK-means.

The remainder of this paper is organized as follows. Related work on clustering uncertain data is briefly reviewed in Sect. 2. The preliminary knowledge of clustering uncertain objects is introduced in Sect. 3. The ensemble method based on UK-means (AdaUK-means) is introduced in Sect. 4. Section 5 presents the experimental evaluation of AdaUK-means. Finally, our work is concluded in Sect. 6.

2 Related Work

Uncertain K-means (UK-means) was proposed to handle uncertain objects based on K-means. UK-means is computationally expensive because of the large number of expected distance calculations. Some pruning techniques [7, 8] improve the efficiency of UK-means by pruning candidate clusters that are farther away. However, the clustering quality of UK-means is rarely considered. Because UK-means is based on K-means, all objects have equal weights, and the method is limited in handling non-spherical data. In our work, the weights of must-link objects are adjusted according to the clustering results of the previous iteration.

Some density-based clustering methods have also been extended to handle uncertain objects, e.g. FDBSCAN [9] and FOPTICS [10]. FDBSCAN is based on DBSCAN [12], and FOPTICS is based on OPTICS [13]. Both use a fuzzy distance function. Their assumption of independent pairwise distances between objects may lead to wrong results. To produce reliable results, the method in [14] returns a small number of cluster representatives with quality guarantees, and the user then selects among the resulting clusterings. The work of [14] focuses only on generating cluster representatives and does not improve the clustering quality. In all of this previous work, the weights of objects are the same. In contrast, the weights of objects are considered in our ensemble clustering model.

Besides clustering uncertain objects, the problem of classifying uncertain data is considered in [15–20]. Other mining techniques for uncertain data have also been studied, e.g. outlier detection [21–24], frequent pattern mining [25–30] and domain order learning [31–36].

3 Preliminary Knowledge

Since learning models based on similarity metrics are known to perform better when classifying multimedia data [37–40], the expected Euclidean distance is used in UK-means [6] and in our work. The notation used in this paper is listed in Table 1.

In our work, uncertainty is defined in a multi-dimensional space. In this space, the uncertain region of an object is represented by a probability distribution function (PDF) or a probability density function (pdf); the PDF describes the distribution of the probabilities of the possible locations. Each uncertain object \(o_i\) falls within a finite region \(UD(o_i)\). The probability density of the possible locations within \(UD(o_i)\) is represented by a pdf \(f_i\), which satisfies \(\int _{UD(o_i)}f_i(x)\,dx=1\) [41].

The uncertain domain \(UD(o_i)\) is divided into T grid cells, and \(s_{i,t}\) is the location (vector) of the t-th sample of \(o_i\). The expected Euclidean distance (EED) between object \(o_i\) (represented by \(f_i\)) and the cluster representative \(p_{c_j}\) is calculated by Eq. (1).
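Eq. (1) is not reproduced in this text. One plausible form, assuming the usual sample-based approximation and writing \(P(s_{i,t})\) for the probability mass of the t-th sample (this symbol is our assumption, not notation confirmed by the paper), would be:

```latex
\[
EED(o_i, p_{c_j})
  = \int_{UD(o_i)} f_i(x)\, D(x, p_{c_j})\, dx
  \;\approx\; \sum_{t=1}^{T} P(s_{i,t})\, D(s_{i,t}, p_{c_j}),
\qquad \sum_{t=1}^{T} P(s_{i,t}) = 1,
\]
```

where \(D\) denotes the Euclidean distance.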

The mean vector of \(o_i\) (\(\overline{o_i}\)) is the weighted mean of all T samples, calculated by Eq. (2). In UK-means, the mean vector of the cluster representative \(p_{c_j}\) (\(\overline{p}_{c_j}\)) is the average of the mean vectors of the objects assigned to the cluster, Eq. (3). In Eq. (3), \(|c_j|\) is the number of objects assigned to cluster \(c_j\), and \(C(o_i)=c_j\) denotes that \(o_i\) is assigned to cluster \(c_j\).
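The three quantities described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' Matlab code: the function names and the per-sample probabilities `probs` are assumptions introduced here, and each object is assumed to be given as T samples with probabilities that sum to 1.

```python
import math

def mean_vector(samples, probs):
    """Probability-weighted mean of an object's samples (cf. Eq. (2))."""
    dim = len(samples[0])
    return [sum(p * s[d] for s, p in zip(samples, probs)) for d in range(dim)]

def expected_distance(samples, probs, rep):
    """Sample-based expected Euclidean distance to a representative (cf. Eq. (1))."""
    return sum(p * math.dist(s, rep) for s, p in zip(samples, probs))

def uk_means_representative(mean_vectors):
    """Unweighted average of the members' mean vectors (cf. Eq. (3))."""
    n = len(mean_vectors)
    dim = len(mean_vectors[0])
    return [sum(v[d] for v in mean_vectors) / n for d in range(dim)]

# Toy object with T = 2 equally likely samples in a 2-D space (hypothetical data).
samples = [(0.0, 0.0), (2.0, 0.0)]
probs = [0.5, 0.5]
obj_mean = mean_vector(samples, probs)
obj_eed = expected_distance(samples, probs, (1.0, 0.0))
cluster_rep = uk_means_representative([[1.0, 0.0], [3.0, 2.0]])
```

Every object contributes equally to `uk_means_representative`, which is the equal-weight assumption that AdaUK-means relaxes.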

4 Ensemble Clustering Model for Uncertain Data

UK-means is a kind of EM (expectation-maximization) algorithm, and it is likely to converge to a local optimum. In this section, we introduce AdaUK-means, an ensemble clustering method based on AdaBoost. A small number of objects (no more than 10) that should be in the same cluster are given, and the weights of those objects that should be in the same cluster but are grouped into different clusters are increased. The objects are then assigned to clusters by a voting process. We first define the problem of ensemble clustering of uncertain objects and then introduce the ensemble clustering method (AdaUK-means) in detail.

4.1 Problem Definition

In our model, there are N objects and m numerical (real-valued) feature attributes \(V_1,...,V_m\). The value range of the u-th dimension \(V_u(1\le u \le m)\) is denoted as \(dom(V_u)\). Each \(o_i\) is associated with a probability density function (pdf \(f_i(x)\)), where x is a possible location of \(o_i\), and \(UD(o_i)\) is the uncertain domain of \(o_i\). Each tuple x is associated with a feature vector \(x=(\tilde{x}_1,\tilde{x}_2,...,\tilde{x}_{m})\), where \(\tilde{x}_u \in dom(V_u)(1\le u \le m)\). Given N uncertain objects, the goal of ensemble clustering is to minimize the sum of the distances between the uncertain objects and the cluster representatives, Eq. (4). The distance depends on the weight of each UK-means run. During the clustering process, UK-means is executed u times (from here on, u denotes the number of runs rather than a dimension index), and \(u \times K\) cluster representatives are obtained.

In Eq. (4), D is the Euclidean distance between objects, and \(\alpha _u\) is the weight of the u-th UK-means run. The problem of ensemble clustering of uncertain objects is to find the \(u \times K\) cluster representatives that minimize Eq. (4).

4.2 Ensemble Method

UK-means can be regarded as a kind of EM (expectation-maximization) algorithm and is likely to converge to a local optimum. To improve the performance of UK-means, AdaUK-means is proposed for handling uncertain objects based on AdaBoost. Different from [14], our method considers uncertain objects.

AdaBoost, short for adaptive boosting [11], was proposed to improve the performance of learning methods. It is adaptive in the sense that subsequent classifiers are built to focus on the objects misclassified by previous classifiers. AdaBoost calls a weak classifier repeatedly to build a series of classifiers. In each iteration, the weights of the objects are updated to indicate the importance of each example in the data set. The weights of incorrectly classified examples are increased (or, alternatively, the weights of correctly classified examples are decreased), so that the new classifier focuses more on the incorrectly classified examples.
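As an illustration of the reweighting idea, the following sketch performs one round of the common binary AdaBoost update. It is a generic textbook formulation under stated assumptions, not necessarily the exact update used in Algorithm 1; the function name `adaboost_reweight` and the toy inputs are hypothetical.

```python
import math

def adaboost_reweight(weights, misclassified):
    """One AdaBoost round: return (alpha, new_weights).

    weights       -- current example weights, assumed to sum to 1
    misclassified -- parallel list of booleans from the current weak learner
    """
    error = sum(w for w, m in zip(weights, misclassified) if m)
    # Clamp the error so the logarithm below stays finite at the extremes.
    eps = 1e-12
    error = min(max(error, eps), 1.0 - eps)
    alpha = 0.5 * math.log((1.0 - error) / error)
    # Misclassified examples are scaled up, correct ones are scaled down.
    raw = [w * math.exp(alpha if m else -alpha)
           for w, m in zip(weights, misclassified)]
    total = sum(raw)
    return alpha, [r / total for r in raw]

# Four equally weighted examples, the first one misclassified.
alpha, new_w = adaboost_reweight([0.25] * 4, [True, False, False, False])
```

After the update the single misclassified example carries half of the total weight, so the next learner is pushed to handle it correctly.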

4.3 AdaUK-Means

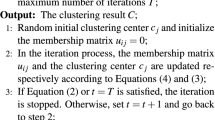

AdaUK-means executes UK-means u times on the weighted uncertain objects. In each UK-means run, an object is assigned to the cluster whose representative is at minimum distance from it. Algorithm 1 returns \(u \times K\) cluster representatives. AdaUK-means has three steps, as shown in Fig. 2. First, the weights of the uncertain objects are calculated by AdaBoost. Second, the cluster representatives are updated using the weighted objects. Steps 1 and 2 are repeated u times to obtain an ensemble model. Third, all objects are assigned based on the ensemble of UK-means runs.

Weights of Uncertain Objects. The weights of uncertain objects are computed in Line 9 of Algorithm 1. In Algorithm 1, \(h_u(o_i)\ne C(o_i)\) means that \(o_i\) is assigned incorrectly by \(h_u\) (\(C(o_i)\) is the cluster to which object \(o_i\) should be assigned, and \(h_u(o_i)\) is the cluster to which \(o_i\) is assigned by \(h_u\)). As in AdaBoost [11], subsequent cluster representatives are obtained in favor of the objects that were misclassified in the previous iteration. For example, if \(o_l\) and \(o_l^{'}\) belong to the same cluster and are assigned to the same cluster (\(h_u(o_l)=h_u(o_l^{'})\)), the value of \(M(o_l)\) is \(-1\). Otherwise, the value of \(M(o_l)\) is 1. If objects are clustered correctly, their weights are decreased. n is the number of objects that are labeled by their correlation with the other \(n-1\) objects. n is very small in our method, no more than 10.
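The value of \(M(o_l)\) can be illustrated with a short sketch. It assumes that the labeled objects are supplied as a list of must-link pairs and that an object counts as misassigned as soon as any of its pairs is split; both choices, and the name `must_link_margins`, are assumptions made for illustration rather than details confirmed by Algorithm 1.

```python
def must_link_margins(pairs, assignment):
    """Return {object: M}, with M = 1 if any must-link pair of the object
    is split across clusters and M = -1 otherwise."""
    margins = {}
    for a, b in pairs:
        split = assignment[a] != assignment[b]
        for obj in (a, b):
            if split:
                margins[obj] = 1            # violated constraint: weight goes up
            else:
                margins.setdefault(obj, -1)  # satisfied so far: weight goes down
    return margins

# Hypothetical must-link pairs and the cluster ids produced by one run h_u.
pairs = [("o1", "o2"), ("o2", "o3")]
assignment = {"o1": 0, "o2": 0, "o3": 1}
margins = must_link_margins(pairs, assignment)
```

Here `o2` and `o3` are separated although they are must-link, so both receive M = 1, while `o1` receives M = -1.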

Update of Cluster Representative. The cluster representatives are updated using the weighted objects (Algorithm 1, Line 28). The weights of objects that are must-link but assigned to different clusters are increased. Algorithm 2 describes the calculation of the cluster representatives, which are updated from the weighted uncertain objects by Eq. (5). Each cluster representative is the weighted average of the mean vectors of the objects assigned to the cluster. Unlike UK-means, the update of the cluster representative therefore takes the correlation between uncertain objects into account.
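A weighted average of mean vectors can be sketched as follows. The normalization by the sum of the weights is an assumption (Eq. (5) is not reproduced in this text), and `weighted_representative` is a hypothetical name.

```python
def weighted_representative(mean_vectors, weights):
    """Weighted average of the mean vectors of the objects in one cluster."""
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must have a positive sum")
    dim = len(mean_vectors[0])
    return [sum(w * v[d] for v, w in zip(mean_vectors, weights)) / total
            for d in range(dim)]

# Two cluster members; raising the weight of the second pulls the representative toward it.
members = [[0.0, 0.0], [4.0, 0.0]]
rep_equal = weighted_representative(members, [1.0, 1.0])
rep_shifted = weighted_representative(members, [1.0, 3.0])
```

With equal weights the result coincides with the plain UK-means average; with a larger weight on the second object the representative moves from x = 2 to x = 3.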

Clustering Uncertain Objects. In Algorithm 1, \(o_i\) is assigned by minimizing the objective function of Eq. (4). Algorithm 3 shows the clustering process of AdaUK-means. In each UK-means run, the uncertain objects are first assigned to clusters by Eq. (6); the final assignment is then made by a voting process, Eq. (7).
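The two-stage assignment can be sketched as a weighted vote over the runs. Several simplifying assumptions are made here and are not confirmed by the paper: cluster indices are assumed to refer to the same cluster in every run, each run's vote is weighted by its \(\alpha_u\), and the distance is taken to the object's mean vector rather than the full expected distance. The function names are hypothetical.

```python
import math
from collections import defaultdict

def nearest_cluster(mean_vec, reps):
    """Index of the closest representative within one run."""
    return min(range(len(reps)), key=lambda j: math.dist(mean_vec, reps[j]))

def vote(mean_vec, runs):
    """runs is a list of (alpha, representatives) pairs, one per UK-means run."""
    tally = defaultdict(float)
    for alpha, reps in runs:
        tally[nearest_cluster(mean_vec, reps)] += alpha
    return max(tally, key=tally.get)

# Three hypothetical runs with K = 2 representatives each.
runs = [
    (0.6, [(0.0, 0.0), (10.0, 0.0)]),
    (0.3, [(6.0, 0.0), (4.0, 0.0)]),
    (0.3, [(1.0, 0.0), (9.0, 0.0)]),
]
label = vote((4.5, 0.0), runs)
```

In this toy case the first and third runs vote for cluster 0 and the second run votes for cluster 1, so the object is assigned to cluster 0.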

5 Experimental Evaluation

In this section, AdaUK-means is evaluated by comparison with UK-means. All code was written in Matlab and run on a Windows machine with an Intel 2.66 GHz Pentium(R) Dual-Core processor and 4 GB of main memory. The clustering quality of our method is evaluated on both synthetic and real data sets.

Clustering quality is measured by precision. \(\mathbb {G}\) denotes the ground truth of the clustering results, and \(\mathbb {R}\) denotes the clustering results obtained by a clustering algorithm. If two objects appear in the same cluster in a clustering, they form a pair. True positive (TP) is the set of pairs of objects common to both \(\mathbb {G}\) and \(\mathbb {R}\); false positive (FP) is the set of pairs of objects in \(\mathbb {R}\) but not in \(\mathbb {G}\); false negative (FN) is the set of pairs of objects in \(\mathbb {G}\) but not in \(\mathbb {R}\). The precision of a clustering \(\mathbb {R}\) is calculated as follows:

\[ precision(\mathbb {R}) = \frac{|TP|}{|TP|+|FP|} \]

where |.| is the number of elements (object pairs) in the set. 5-fold cross-validation is used for each data set to evaluate the effectiveness of the algorithms.
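The pair-based measure can be computed directly from two label vectors. The sketch below assumes the standard definition of precision, |TP| / (|TP| + |FP|), over co-clustered pairs; the function names and the toy labels are illustrative.

```python
from itertools import combinations

def co_clustered_pairs(labels):
    """Set of index pairs (i, j), i < j, that share a cluster label."""
    return {(i, j) for i, j in combinations(range(len(labels)), 2)
            if labels[i] == labels[j]}

def pairwise_precision(truth, result):
    """|TP| / (|TP| + |FP|) over co-clustered pairs."""
    g = co_clustered_pairs(truth)
    r = co_clustered_pairs(result)
    tp = len(g & r)   # pairs grouped together in both clusterings
    fp = len(r - g)   # pairs grouped together only in the result
    return tp / (tp + fp) if (tp + fp) else 0.0

truth = [0, 0, 0, 1, 1]
result = [0, 0, 1, 1, 1]
precision = pairwise_precision(truth, result)
```

In this example the result contains four co-clustered pairs, two of which also appear in the ground truth, so the precision is 0.5.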

5.1 Synthetic Data Sets

To demonstrate the performance of AdaUK-means, 110 data sets with Gaussian distribution were generated. The N uncertain objects in a data set were divided equally into K clusters. For each cluster, the centers of the \(\frac{N}{K}\) uncertain objects were generated from a Gaussian distribution. The baseline parameter values used for the experiments on the Gaussian data sets are summarized in Table 2. In each data set, T samples are used to represent an uncertain object in a D-dimensional space. The uncertain domain of an object on each dimension is \(un \times un\).

The precision of AdaUK-means compared with UK-means is shown in Tables 3, 4, 5 and 6. In Table 3, u is the number of UK-means runs. Tables 3, 4, 5 and 6 show that our ensemble method (AdaUK-means) performs better than UK-means on average. The precision of AdaUK-means does not change significantly with different values of u.

In the experiments, the performance of UK-means decreases dramatically as K increases. With varying K, AdaUK-means improves the precision of UK-means by 11.9% on average. AdaUK-means improves the clustering quality of UK-means by 4.5%–5% on average. The experimental results demonstrate that AdaUK-means can improve the precision of UK-means when clustering uncertain objects.

5.2 Real Data Sets

Experiments were also conducted on real data sets. The parameters of the chosen data sets are summarized in Table 7. The attributes of all the data sets are numerical and were obtained from measurements. We follow the common practice in this research area [6–8, 18–20] to generate the uncertainty of the real data sets. For object \(o_i\) on the u-th dimension (i.e. the attribute \(A_u\)), the point value \(v_{i,u}\) reported in a data set is used as the mean of a pdf \(f_{i,u}\), defined over an interval \([a_{i,u},b_{i,u}]\). The range of values for \(A_u\) (over the whole data set) is noted, and the width of \([a_{i,u},b_{i,u}]\) is set to \(un\times |A_u|\), where \(|A_u|\) denotes the width of the range for \(A_u\) and un is a parameter that controls the uncertainty of the data set. A Gaussian distribution is used to generate the pdf \(f_{i,u}\), whose standard deviation is set to \(\frac{1}{4} \times (b_{i,u}-a_{i,u})\) (the same as in [19]). T samples are used to represent the pdf over the interval. In this way, each point value is transformed into T uncertain samples drawn from a Gaussian distribution controlled by the parameter un. For comparison with UK-means, T is set to 100 and un ranges from 5% to 25%. The point-valued data thus become uncertain once the error model (un and pdf) is applied to them.
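The error model can be sketched for a single attribute value as follows. Two details are assumptions made for illustration and are not stated in the text: the interval is centered on the reported value, and samples that fall outside the interval are redrawn. The function name `make_uncertain` and the example numbers are hypothetical.

```python
import random

def make_uncertain(value, attr_range, un, T, rng):
    """Return T samples for one attribute value under the error model."""
    width = un * attr_range                      # width of [a, b] = un * |A_u|
    a, b = value - width / 2.0, value + width / 2.0
    sigma = width / 4.0                          # standard deviation = (b - a) / 4
    samples = []
    while len(samples) < T:
        x = rng.gauss(value, sigma)
        if a <= x <= b:                          # keep only draws inside the interval
            samples.append(x)
    return samples

rng = random.Random(0)                           # fixed seed for repeatability
samples = make_uncertain(value=5.0, attr_range=10.0, un=0.10, T=100, rng=rng)
```

With un = 10% and an attribute range of width 10, the interval is [4.5, 5.5] and the standard deviation is 0.25, so roughly 95% of the draws are accepted on the first attempt.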

Figure 3 shows the precision of our ensemble method compared with UK-means on the selected UCI data sets. The precision may be affected by varying un. Nevertheless, the precision of our method is higher than that of UK-means on the five selected data sets. The experimental results show that the error model (un) affects the clustering results only slightly.

Our ensemble method has been evaluated on both synthetic and real data sets, and the experimental results demonstrate that it improves the clustering performance of UK-means on both.

6 Conclusions

In this paper, the problem of clustering uncertain objects whose locations are described by probability density functions (pdfs) is studied. To improve clustering quality, an ensemble clustering method is proposed that assigns the objects by minimizing the distance between objects and cluster representatives. To overcome the limitations of UK-means, AdaBoost is incorporated to enhance the learning strength of the proposed model. The experimental evaluation demonstrates that our ensemble semi-supervised UK-means outperforms UK-means on both synthetic and real data sets. We will consider clustering uncertain heterogeneous data in future work.

References

1. Dalvi, N.N., Suciu, D.: Efficient query evaluation on probabilistic databases. VLDB J. 16(4), 523–544 (2007)

2. Barbará, D., Garcia-Molina, H., Porter, D.: The management of probabilistic data. IEEE Trans. Knowl. Data Eng. 4(5), 487–502 (1992)

3. Cheng, R., Kalashnikov, D.V., Prabhakar, S.: Querying imprecise data in moving object environments. IEEE Trans. Knowl. Data Eng. 16(9), 1112–1127 (2004)

4. Ruspini, E.H.: A new approach to clustering. Inf. Control 15(1), 22–32 (1969)

5. Jiang, B., Pei, J., Lin, X., Cheung, D.W., Han, J.: Mining preferences from superior, inferior examples. In: Proceedings of the 14th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp. 390–398 (2008)

6. Chau, M., Cheng, R., Kao, B., Ng, J.: Uncertain data mining: an example in clustering location data. In: Ng, W.-K., Kitsuregawa, M., Li, J., Chang, K. (eds.) PAKDD 2006. LNCS (LNAI), vol. 3918, pp. 199–204. Springer, Heidelberg (2006)

7. Ngai, W.K., Kao, B., Chui, C.K., Cheng, R., Chau, M., Yip, K.Y.: Efficient clustering of uncertain data. In: Proceedings of the 6th IEEE International Conference on Data Mining, pp. 436–445 (2006)

8. Kao, B., Lee, S.D., Cheung, D.W., Ho, W.-S., Chan, K.F.: Clustering uncertain data using voronoi diagrams. In: Proceedings of the 8th IEEE International Conference on Data Mining, pp. 333–342 (2008)

9. Kriegel, H.-P., Pfeifle, M.: Density-based clustering of uncertain data. In: Proceedings of the 11th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp. 672–677 (2005)

10. Kriegel, H.-P., Pfeifle, M.: Hierarchical density-based clustering of uncertain data. In: Proceedings of the 5th IEEE International Conference on Data Mining, pp. 689–692 (2005)

11. Freund, Y., Schapire, R.E.: A decision-theoretic generalization of on-line learning and an application to boosting. In: Vitányi, P. (ed.) Computational Learning Theory. LNCS, vol. 904, pp. 23–37. Springer, Heidelberg (1995)

12. Ester, M., Kriegel, H.-P., Sander, J., Xu, X.: A density-based algorithm for discovering clusters in large spatial databases with noise. In: Proceedings of the 2nd International Conference on Knowledge Discovery and Data Mining, pp. 226–231 (1996)

13. Ankerst, M., Breunig, M.M., Kriegel, H.-P., Sander, J.: Optics: ordering points to identify the clustering structure. In: Proceedings ACM SIGMOD International Conference on Management of Data, pp. 49–60 (1999)

14. Züfle, A., Emrich, T., Schmid, K.A., Mamoulis, N., Zimek, A., Renz, M.: Representative clustering of uncertain data. In: The 20th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, KDD 2014, New York, NY, USA, 24–27 August 2014, pp. 243–252 (2014)

15. Bi, J., Zhang, T.: Support vector classification with input data uncertainty. In: Advances in Neural Information Processing Systems 17 [Neural Information Processing Systems] (2004)

16. Qin, B., Xia, Y., Prabhakar, S., Tu, Y.-C.: A rule-based classification algorithm for uncertain data. In: Proceedings of the 25th International Conference on Data Engineering, pp. 1633–1640 (2009)

17. Qin, B., Xia, Y., Prabhakar, S.: Rule induction for uncertain data. Knowl. Inf. Syst. 29(1), 103–130 (2011)

18. Tsang, S., Kao, B., Yip, K.Y., Ho, W.-S., Lee, S.D.: Decision trees for uncertain data. In: Proceedings of the 25th International Conference on Data Engineering, pp. 441–444 (2009)

19. Tsang, S., Kao, B., Yip, K.Y., Ho, W.-S., Lee, S.D.: Decision trees for uncertain data. IEEE Trans. Knowl. Data Eng. 23(1), 64–78 (2011)

20. Ren, J., Lee, S.D., Chen, X., Kao, B., Cheng, R., Cheung, D.W.-L.: Naive bayes classification of uncertain data. In: The 9th IEEE International Conference on Data Mining, pp. 944–949 (2009)

21. Jiang, B., Pei, J.: Outlier detection on uncertain data: objects, instances, and inferences. In: Proceedings of the 27th International Conference on Data Engineering, pp. 422–433 (2011)

22. Aggarwal, C.C., Yu, P.S.: Outlier detection with uncertain data. In: Proceedings of the SIAM International Conference on Data Mining, pp. 483–493 (2008)

23. Wang, B., Xiao, G., Yu, H., Yang, X.: Distance-based outlier detection on uncertain data. In: 12th IEEE International Conference on Computer and Information Technology, pp. 293–298 (2009)

24. Matsumoto, T., Hung, E.: Accelerating outlier detection with uncertain data using graphics processors. In: Tan, P.-N., Chawla, S., Ho, C.K., Bailey, J. (eds.) PAKDD 2012, Part II. LNCS, vol. 7302, pp. 169–180. Springer, Heidelberg (2012)

25. Aggarwal, C.C., Li, Y., Wang, J., Wang, J.: Frequent pattern mining with uncertain data. In: Proceedings of the 15th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp. 29–38 (2009)

26. Chui, C.-K., Kao, B.: A decremental approach for mining frequent itemsets from uncertain data. In: Washio, T., Suzuki, E., Ting, K.M., Inokuchi, A. (eds.) PAKDD 2008. LNCS (LNAI), vol. 5012, pp. 64–75. Springer, Heidelberg (2008)

27. Chui, C.-K., Kao, B., Hung, E.: Mining frequent itemsets from uncertain data. In: Zhou, Z.-H., Li, H., Yang, Q. (eds.) PAKDD 2007. LNCS (LNAI), vol. 4426, pp. 47–58. Springer, Heidelberg (2007)

28. Leung, C.K.-S., Brajczuk, D.A.: Mining uncertain data for constrained frequent sets. In: International Database Engineering and Applications Symposium, pp. 109–120 (2009)

29. Zhang, Q., Li, F., Yi, K.: Finding frequent items in probabilistic data. In: Proceedings of the ACM SIGMOD International Conference on Management of Data, pp. 819–832 (2008)

30. Bernecker, T., Kriegel, H.-P., Renz, M., Verhein, F., Züfle, A.: Probabilistic frequent itemset mining in uncertain databases. In: Proceedings of the 15th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp. 119–128 (2009)

31. Lawrence, R.D., Almasi, G.S., Kotlyar, V., Viveros, M.S., Duri, S.: Personalization of supermarket product recommendations. Data Min. Knowl. Disc. 5(1/2), 11–32 (2001)

32. Govindarajan, K., Jayaraman, B., Mantha, S.: Preference queries in deductive databases. New Gener. Comput. 19(1), 57–86 (2000)

33. Chomicki, J.: Querying with intrinsic preferences. In: Jensen, C.S., Šaltenis, S., Jeffery, K.G., Pokorny, J., Bertino, E., Böhm, K., Jarke, M. (eds.) EDBT 2002. LNCS, vol. 2287, pp. 34–51. Springer, Heidelberg (2002). doi:10.1007/3-540-45876-X_5

34. Chomicki, J.: Database querying under changing preferences. Ann. Math. Artif. Intell. 50(1–2), 79–109 (2007)

35. Kießling, W., Köstler, G.: Preference SQL - design, implementation, experiences. In: Proceedings of 28th International Conference on Very Large Data Bases, pp. 990–1001 (2002)

36. Lacroix, M., Lavency, P.: Preferences: putting more knowledge into queries. In: Proceedings of 13th International Conference on Very Large Data Bases, pp. 217–225 (1987)

37. Wang, D., Kim, Y.-S., Park, S.C., Lee, C.S., Han, Y.K.: Learning based neural similarity metrics for multimedia data mining. Soft. Comput. 11(4), 335–340 (2007)

38. Wang, D., Ma, X.: Learning pseudo metric for multimedia data classification and retrieval. In: Negoita, M.G., Howlett, R.J., Jain, L.C. (eds.) KES 2004. LNCS (LNAI), vol. 3213, pp. 1051–1057. Springer, Heidelberg (2004)

39. Cong, Y., Wang, S., Fan, B., Yang, Y., Yu, H.: UDSFS: unsupervised deep sparse feature selection. Neurocomputing

40. Cong, Y., Yuan, J., Luo, J.: Towards scalable summarization of consumer videos via sparse dictionary selection. IEEE Trans. Multimedia 14(1), 66–75 (2012)

41. Hung, E., Xiao, L., Hung, R.Y.S.: An efficient representation model of distance distribution between uncertain objects. Comput. Intell. 28(3), 373–397 (2012)

Acknowledgments

The work described in this paper was supported by grants from the National Natural Science Foundation of China (Nos. 61308027, 61575128), the Guangdong Provincial Department of Science and Technology (2015A030313541), and the Science and Technology Innovation Commission of Shenzhen (KQCX20140512172532195).

© 2016 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Xu, L., Hu, Q., Zhang, X., Chen, Y., Liao, C. (2016). AdaUK-Means: An Ensemble Boosting Clustering Algorithm on Uncertain Objects. In: Tan, T., Li, X., Chen, X., Zhou, J., Yang, J., Cheng, H. (eds) Pattern Recognition. CCPR 2016. Communications in Computer and Information Science, vol 662. Springer, Singapore. https://doi.org/10.1007/978-981-10-3002-4_3

DOI: https://doi.org/10.1007/978-981-10-3002-4_3

Publisher Name: Springer, Singapore

Print ISBN: 978-981-10-3001-7

Online ISBN: 978-981-10-3002-4

eBook Packages: Computer Science, Computer Science (R0)