Abstract

Hashing is a typical approximate nearest neighbor search approach for large-scale data sets because of its low storage space and high computational ability. The higher the variance on each projected dimension is, the more information the binary codes express. However, most existing hashing methods have neglected the variance on the projected dimensions. In this paper, a novel hashing method called balanced and maximum variance deep hashing (BMDH) is proposed to simultaneously learn the feature representation and hash functions. In this work, pairwise labels are used as the supervised information for the training images, which are fed into a convolutional neural network (CNN) architecture to obtain rich semantic features. To acquire effective and discriminative hash codes from the extracted features, an objective function with three restrictions is elaborately designed: (1) similarity-preserving mapping, (2) maximum variance on all projected dimensions, (3) balanced variance on each projected dimension. The competitive performance is acquired using the simple back-propagation algorithm with stochastic gradient descent (SGD) method despite the sophisticated objective function. Extensive experiments on two benchmarks CIFAR-10 and NUS-WIDE validate the superiority of the proposed method over the state-of-the-art methods.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

In the era of big data, Internet has been inundated with millions of images possessing sophisticated appearance variations. Traditional linear search is obviously not a satisfying choice for inquiring the relevant images from large data sets as its high-computational cost. Due to the high efficiencies in computation and storage, hashing methods [2, 9, 13] have become a powerful approach of approximate nearest neighbor (ANN) search in above fields. They learn stacks of projection functions to map original high-dimensional features to lower dimensional compact binary descriptors on the conditions that similar samples are mapped into nearby binary codes. By computing the Hamming distance between two binary descriptors via XOR bitwise operations, one can find the similar images of the query one.

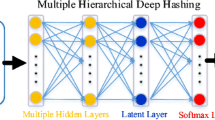

Pipeline of the proposed BMDH method. When an image is fed into the pre-trained deep network, it will finally be encoded into one binary vector. When a batch of m images pass through the network, the output of the full-connected layer is a \(n\times m \) real-valued matrix where n is the length of generated binary codes. To learn effective yet discriminative binary codes, three restrictions are used at the top layer of the network: (1) similarity-preserving mapping, (2) maximum variance on all projected dimensions, (3) balanced variance on each projected dimension.

Generally speaking, hashing methods are divided into two categories: data dependent and data independent. Without adopting the data distribution, data independent methods generate hash functions through random projections. Nevertheless, they need relatively longer binary codes to obtain the comparable accuracy [2]. Data dependent methods utilize the data distribution and thus having more accurate results. These methods can be further divided into three streams. The first stream are the unsupervised methods which only use the unlabeled data to learn hash functions. The representative methods include [2, 13]. The other two streams are supervised methods and semi-supervised methods which both incorporate label information in the process of generating hash codes. [6, 9] are the typical methods of them. Compared to the unsupervised methods, the supervised methods usually need fewer bits to achieve competitive performance as a result from the using of supervised information. Here, we focus on the supervised methods.

Conventional hashing methods exploit hand-crafted features to learn hash codes which can not necessarily preserve the semantic similarities of images in the learning process. By light of the outstanding performance of convolutional neural networks (CNNs) in vision tasks, some existing hashing methods use the CNNs to capture the rich semantic information beneath images. However, these methods even some deep hashing methods [8, 14] merely view the CNN architectures as feature extractors which are followed by the procedure of generating binary codes. That is to say, the image representation and hash functions learning are mutual independent two stages. It results in the feature representation may not be tailored to the hashing learning procedure and thus undermining the retrieval performance. Consequently, other hashing methods based deep learning [4, 5, 7, 16, 17] have been proposed to simultaneously learn the semantic features and binary codes thus outperforming the previous hashing methods.

It is known that variance is often analysed for statistics. Besides, the amount of information included in each projected dimension is directly proportional to the variance of it. Thus, there can be two considerations. On one hand, maximizing the total variance on all dimensions to amplify the information capacity of the binary codes. On the other hand, balancing the variance on each dimension so that it can be encoded into the same number of bits. Whereas, most existing hashing methods including the current state-of-the-art method DPSH [5] have not taken these aspects into consideration. In this paper, a novel deep hashing method dubbed balanced and maximum variance deep hashing (BMDH) is proposed to perform simultaneous feature learning and hash-code learning. The pipeline of our method is revealed in Fig. 1. Images whose supervised information is given by pairwise labels are used as the training inputs. The similarity matrix constructed from label information indicates whether two images are similar or not. To acquire more effective hash codes, we exert three criteria on the top layer of the deep network to form a joint objective function. Stochastic gradient descent (SGD) method is utilized to update all the parameters once time. To solve the discrete optimization problem, we relax the discrete outputs to continuous real-values. Meanwhile, a regularizer is used to approach the desired discrete values. Given a query image, we propagate it through the network and quantize the deep features to desired binary codes.

The main contributions of the proposed method are as follows:

-

A joint objective function is formed. We exert three restrictions on the top layer of the network to constitute a joint objective function. The first is that the learned binary codes should preserve the local data structure in the original space namely two similar samples should be encoded into nearby binary codes. The second is that the total variance on all projected dimensions is maximized so as to capture more information which is beneficial to the retrieval performance. The last is that the variance on each dimension should be as equal as possible so that each dimension can be encoded into the same number of bits.

-

An end-to-end model is constructed to simultaneously perform the feature representation and hash functions learning. In the framework, the above two stages can give feedback to each other. The optimal parameters are acquired using the simple back-propagation algorithm with stochastic gradient desent (SGD) method despite the sophisticated objective function.

-

The important variance information on the projected dimensions is focused on in our method. To the best of our knowledge, it is the first time that maximizing the total variance on all projected dimensions and balancing the variance on each dimension is simultaneously considered in the existing deep hashing methods.

The rest of the paper is organized as follows. In Sect. 2, we discuss the related works briefly. The proposed BMDH method is described in Sect. 3. The experimental results are presented in Sect. 4. Finally, Sect. 5 concludes the whole paper.

2 Related Work

Conventional Hashing: The earliest hashing research concentrates on data-independent methods in which the LSH methods [1] are the representatives. The basic idea of LSH methods is that two adjacent data points in the original space are projected to nearby binary codes in a large probability, while the probability of projecting two dissimilar data points to the same hash bucket is small. The hash functions are produced via random projections. However, LSH methods usually demand relatively longer codes to achieve comparable performance thus occupying larger storage space. Data-dependent methods learn more effective binary codes using training data. Among them, unsupervised methods learn hash functions using unlabeled data. The typical methods include Spectral Hashing (SH) [13], Iterative Quantization (ITQ) [2]. Semi-supervised and supervised hashing methods generate binary codes employing the label information. The representative methods include, Supervised Discrete Hashing (SDH) [10], Supervised Hashing with Kernels (KSH) [9], Fast Supervised Hashing (FastH) [6], Latent Factor Hashing (LFH) [15] and Sequential Projection Learning for Hashing (SPLH) [11].

Deep Hashing: Hashing methods based on CNN architectures are earning more and more attention especially when the outstanding performance of deep learning is demonstrated by Krizhevsky et al. on the Imagenet. Among the recent study, CNNH [14] learns the hash functions and feature representation based on binary codes obtained from the pairwise labels. However, it has not simultaneously learned the feature representation and hash functions. DLBH [7] learns binary codes by employing a hidden layer as features in a point-wise manner. [4, 16, 17] learn compact binary codes using triplet samples. [5, 8] learn binary codes with elaborately designed objective functions.

Balance and Maximize Variance: Variance is a significant statistic value which stands for the amount of information to some degree. So, it is quite necessary for us to think about the variance on the projected dimensions. Liong et al. [8] uses the criterion of maximizing the variance of learned binary vectors at the top layer of the network. But it is not an end-to-end model. Isotropic Hashing (IsoHash) [3] learns projection functions which can produce projected dimensions with isotropic variances. Iterative Quantization (ITQ) [2] seeks an optimized rotation to the PCA-projected data to minimize the quantization error which also effectively balances the variance on each dimension.

Totally, most of the existing hashing methods try to preserve the data structure in the original space. But they seldom consider maximizing the total variance on all projected dimensions and balancing the variance on each dimension or just take one aspect into account. In this paper, we propose a novel deep architecture to learn similarity-preserving binary codes with the consideration of simultaneously maximizing the total variance on all dimensions and balancing the variance on each dimension.

3 Approach

The purpose of hashing methods is to learn a series of projection functions h(x) which map u-dimensional real-valued features to v-dimensional binary codes \(b\in \{-1,1\}^v\)(\(u\gg v\)) while the distance relationship of data points in the original space is preserved. However, most existing hashing methods have not considered the variance on the projected dimensions which is an important statistic value. To learn compact and discriminative codes, we propose a novel hashing method called BMDH. Three criteria are exerted on the top layer so that a joint loss function is formed: (1) the distance relationship between data points in the Euclidean space is effectively preserved in the corresponding Hamming space, (2) the total variance on all projected dimensions is maximized, (3) the variance on each dimension is as equal as possible. In the following, we first give the proposed model and then describe how to optimize it.

3.1 Notations

Suppose there are M training data points. \(\varvec{x_i}\in \mathbb {R}^d(1\le i\le M)\) is the ith data point, and the matrix form is \(\varvec{X}\in \mathbb {R}^{M\times d}\). \(\varvec{B}^{M\times n}=\{\varvec{b}_i\}_{i=1}^M\) denotes the binary codes matrix, where \(\varvec{b}_i=sgn(h(\varvec{x_i}))\in \{-1,1\}^n\) denotes the n-bits binary vector of \(\varvec{x}_i\). \(sgn(v)=1\) if \(v>0\) and \(-1\) otherwise. \(\varvec{S}=\{s_{ij}\}\) is the similarity matrix where \(s_{ij}\in \{0,1\}\) is denoted as the similarity label between pairs of points. \(s_{ij}=1\) means the two data points \(\varvec{x}_i\) and \(\varvec{x}_j\) are similar and the corresponding Hamming distance is low. \(s_{ij}=0\) means the two data points are dissimilar and the corresponding Hamming distance is high. \(\varvec{\varTheta }\) denotes all the parameters of the feature learning part.

3.2 Proposed Model

Corresponding to the three restrictions, the overall objective function is composed of three parts. The first part aims to preserve the similarities between data pairs. That is to say, the Hamming diatance is minimized on similar pairs and maximized on dissimilar pairs simultaneously. The second part is used to maximize the total variance on all projected dimensions to boost the information capacity of the binary codes. The last part seeks to balance the variance on each dimension so that each dimension is allocated with the same number of bits.

where \(\rho \) is the regularization term, \(\lambda _1\) and \(\lambda _2\) are used to balance different objectives. \(\Vert \cdot \Vert _2\) is the L2-norm of vector.

To construct an end-to-end model, we set:

where \(\varvec{H}=\{\varvec{h}_i\}_{i=1}^m\in \mathbb {R}^{4096\times m} \) denotes the output matrix of the full7 layer associated with the batch of m data points, where \(\varvec{h}_i\in \mathbb {R}^{4096\times 1} \) denotes the output vector associated with the data point \(\varvec{x}_i\). \(\varvec{W}=\{\varvec{w}_i\}_{i=1}^n\in \mathbb {R}^{4096\times n}\) is the projection matrix of the full8 layer, where \(\varvec{w}_i\) is the ith projection vector. \(\varvec{Q}\in \mathbb {R}^{n\times m}\) is the bias matrix. It means that we incorporate the hash-code learning part with the feature learning part by a fully-connected layer. As a result, the weight \(\varvec{W}\) and bias \(\varvec{Q}\) of the hash-code layer are simultaneously updated with all the other parameters of the feature learning layers.

In order to make a better understanding of the joint objective function, we describe it in details as below.

3.3 Preserving the Similarities

The proposed model learns non-linear projections that map the input data points into binary codes and simultaneously preserve the distance relationship of points in the original space.

We define the likelihood of the similarity matrix as that of [15]:

while

where

Taking the negative log-likelihood of the observed similarity labels \(\varvec{S}\) as that of [5], we get a primary model:

It is a reasonable model which makes the similar pairs have low Hamming distance and dissimilar pairs have high Hamming distance. To solve the discrete optimization problem, we set:

where \(\varvec{q}\in \mathbb {R}^{n\times 1}\) is the bias vector.

Then, we relax the discrete values \(\{\varvec{b}_i\}_{i=1}^m\) to continuous real-values \(\{\varvec{g}_i\}_{i=1}^m\) as that of [5]. The ultimate model can be reformulated as:

where \(\varPhi _{ij}=\frac{1}{2}\varvec{g}_i^T\varvec{g}_j\), \(\rho \) is the regularization term to approach the desired discrete values.

3.4 Maximizing the Variance

The variances on different projected dimensions are not identical. The bigger the variance on each projected dimension is, the richer the information of the binary codes convey. To expand the information capacity of all binary codes, it is a good idea to maximize the total variance on all dimensions. First, we work out the variances of different dimensions. Next, we sum the computed variances. Last, we maximize the sum so that the information contained in the binary codes is maximized.

The maximum variance theory is also the basic idea of Principal Component Analysis which can minimize the reconstruction error. Here, we set:

where \(\varvec{p}_i\in \mathbb {R}^{1\times m}\) is a bias vector.

Then, we get the following formulation:

3.5 Balancing the Variance

Typically, different projected dimensions have different variances and dimensions with larger variances will carry more information. So it is unreasonable to utilize the same number of bits for different dimensions. To solve the problem, we balance the variance on each projected dimension namely making them as equal as possible so that the same number of bits can be employed.

Define \(u_i={\text {var}}(\varvec{z}_i)\) as the variance on each dimension and the variance vector is denoted as \(\varvec{u}=(u_1,u_2,\ldots ,u_n)\). To make \(u_i (i=1,2,\ldots ,n)\) as equal as possible, we minimize \({\text {var}}(\varvec{u})\), which denotes the variance of \(\varvec{u}\). We can get the following formulation:

Once the variance of \(\varvec{u}\) is minimized, the variance on each projected dimension is balanced.

3.6 Optimization

The strategy of back-propagation algorithm with stochastic gradient descent (SGD) method is leveraged to obtain the optimal parameters. First we calculate the outputs of the network with the given parameters and quantize the outputs:

Then, we work out the derivative of the joint objective function (1) with respect to \(\varvec{G}\). Since the objective function is composed of three parts, we compute the derivatives of the three parts respectively.

-

The vector form of Eq. (3) can be formulated as:

$$\begin{aligned} J_1(\varvec{W})=-(\varvec{S}\varvec{\varPhi }-\log (1+e^{\varvec{\varPhi }})+\rho \Vert \varvec{B}-\varvec{G}\Vert _2^2 \end{aligned}$$

where \(\varvec{\varPhi }=\frac{1}{2}\varvec{G}^T\varvec{G}\).

The derivative of \(J_1(\varvec{W})\) with respect to \(\varvec{G}\) is computed as:

-

The Eq. (4) is rewritten as:

$$\begin{aligned} J_2(\varvec{W})&\!=\!\frac{1}{m}\sum _{i=1}^n\sum _{j=1}^m\Vert \varvec{w}_i^T\varvec{h}_j\!+\!p'_{ij}\Vert _2^2\!-\!\sum \limits _{i=1}^n\Vert {\text {E}}(\varvec{w}_i^T\varvec{H}\!+\!\varvec{p}_i)\Vert _2^2\nonumber \\&\!=\!\frac{1}{m}tr((\varvec{W}^T\varvec{H}\!+\!\varvec{Q})^T(\varvec{W}^T\varvec{H}\!+\!\varvec{Q}))\!-\!\sum \limits _{i=1}^n\Vert {\text {E}}(\varvec{z}_i)\Vert _2^2 \end{aligned}$$

where \(\varvec{h}_j\in \mathbb {R}^{4096\times 1}\) denotes the outputs of the full7 layer associated with the jth data point, \(p'_{ij}\) is the bias term related to the ith weight and the jth data point.

The derivative of \(J_2(\varvec{W})\) with respect to \(\varvec{G}\) is computed as:

-

The Eq. (5) is reformulated as:

$$\begin{aligned} J_3(\varvec{W})=\frac{1}{n}\sum _{i=1}^n[{\text {var}}(\varvec{z}_i)]^2-[\frac{1}{n}\sum _{i=1}^n{\text {var}}(\varvec{z}_i)]^2 \end{aligned}$$

The derivative of \(J_3(\varvec{W})\) with respect to \(\varvec{G}\) is computed as:

In this way, the derivative of the joint loss function (1) with respect to \(\varvec{G}\) is the sum of the three parts:

Last, the derivative of the parameters \(\varvec{W}\), \(\varvec{Q}\) and \(\varvec{H}\) can be computed as follows:

All the parameters are alternatively learned. That is to say, we update one parameter with other parameters fixed.

The proposed algorithm of BMDH is summarized as follows.

4 Experiment

We implement our experiments on two universally used data sets: CIFAR-10 and NUS-WIDE. The mean Average Precision (mAP) as the metric is applied to evaluate the retrieval performance. Several conventional hashing methods and deep hashing methods are utilized to make comparison with the proposed method.

4.1 Datasets

-

CIFAR-10 Data set consists of the total number of 60, 000 images each of which is a \(32\times 32\) color image. These images are categorized into 10 classes with 6000 images per class.

-

NUS-WIDE Data set consists of nearly 27, 0000 color images collected from the web. It is a multi-label data set each image of which is annotated with one or more labels from 81 semantic concepts. Following [4, 5, 14], we only exploit images whose labels are from the 21 most frequent concept tags. At least 5, 000 images are associated with each label.

4.2 Evaluation Metric

We use the mean Average Precision (mAP) as the metric for evaluation which means the mean precision of all query samples. It is defined as:

where \(q_i\in Q\) denotes the query sample, \(n_i\) is the number of samples similar to the query sample \(q_i\) in the dataset. The relevant samples are ordered as \(\{x_1,x_2,\ldots ,x_{n_i}\}\), \(R_{ij}\) is the set of ranked retrieval results from the top result until getting to point \(x_j\).

The definition of Precision is as follows:

4.3 Experimental Setting

For CIFAR-10, following [5], we randomly sample 1000 images (100 images per class) as the query set. The rest images are used as database images. For the unsupervised methods, the database images are utilized as the training images. For the supervised methods, the training set consists of 5000 images (500 images per class) which are randomly sampled from the rest images. We construct the pairwise similarity matrix \(\varvec{S}\) based on the images class labels where two images sharing the same label are considered similar. For methods using hand-crafted features, we represent each image with a 512-dimension GIST vector [9].

For NUS-WIDE, we randomly sample 2, 100 images (100 images per class) from the selected 21 classes as the query set. For the unsupervised methods, all the remaining images from the 21 classes constitute the training data set. For the supervised methods, 500 images per class are randomly sampled from the 21 classes to form the training set. The pairwise similarity matrix \(\varvec{S}\) is constructed based on the images class labels where two images sharing at least one common label are considered similar. When calculating the mAP values, only the top 5, 000 returned neighbors are used for evaluation. For methods using hand-crafted features, we represent each image with a 1134-dimensional feature vector, which is composed of 64-D color histogram, 144-D color correlogram, 73-D edge direction histogram, 128-D wavelet texture, 225-D block-wise color moments and 500-D SIFT features.

The first seven layers of our network are initialized with the CNN-F network that has been pre-trained on ImageNet. The size of the batch is determined as 128 which means that 128 images are fed into the network in each iteration. The hyper-parameters \(\rho \), \(\lambda _1\) and \(\lambda _2\) are set to 10, 0.5 and 0.1 unless otherwise stated.

The experiments are carried out on Intel Core i5-4660 3.2 GHZ desktop computer of 8 G Memory with MATLAB2014a and MatConvNet. They are also implemented on a NVIDIA GTX 1070 GPU server.

4.4 Retrieval Results on CIFAR-10 and NUS-WIDE

Performance Improved Step by Step: Since the loss function is composed of three parts, we validate how the performance is gradually improved with another restriction added to the objective function every time. First, we acquire the performance with the criterion of similarity-preserving. Next, the criterion of maximum variance on all projected dimensions is added. Last, we add the criterion of balanced variance on each projected dimension to get the final performance.

When the number of iterations is set to 10, the performance of different number of bits with respect to different criteria on CIFAR-10 is revealed in Fig. 2. It can be seen that the performance with the restriction of similarity-preserving is outperformed by the performance with another restriction of maximum variance added. When the last restriction of balanced variance is added, the performance is enhanced again. This can be attributed to more information captured by the binary codes and equal information contained in each dimension.

Performance Compared with Conventional Methods with Hand-crafted Features: To validate the superiority of deep features over hand-crafted features, we compare the proposed method with those conventional methods having hand-crafted features including FastH [6], SDH [10], KSH [9], LFH [15], SPLH [11], ITQ [2], SH [13]. The results are shown in Table 1. It can be found that the BMGH method exceeds the contrastive algorithms dramatically no matter it is unsupervised or supervised. It is because that deep architectures provide richer semantic features despite the tremendous appearance variations. The results of CNNH, KSH and ITQ are copied from [4, 14] and the results of other contrastive methods are from [5]. Because the experimental setting and evaluation metric are all the same, so the above practice is reasonable.

Performance Compared with Conventional Methods with Deep Features: To verify the improvement in performance originates from our method instead of the deep network, we select some conventional methods with deep features extracted by the CNN-F network to compare with the proposed method. Table 2 shows the final results. We can see that the performance of our method outperforms that of contrastive methods on two datasets which demonstrates the progressiveness of the proposed method. In additional, the results also validate the effectiveness of the end-to-end framework. The results of the compared methods are from [5, 12] which is reasonable as the experimental setting and evaluation metric are all the same.

Performance Compared with Deep Hashing Methods: We compare the proposed method with some deep hashing methods including DPSH [5], NINH [4], CNNH [14], DSRH [17], DSCH [16] and DRSCH [16]. These methods have not considered maximizing the total variance on all projected dimensions and balancing the variance on each dimension.

When comparing with DSRH, DSCH and DRSCH, we leverage another experimental setting the same as [16] for fair comparison. Specifically, in CIFAR-10, we randomly sample 10, 000 images (1, 000 images per class) as the query set. The remaining images are used to form the database set. And the database set is used as the training set. In NUS-WIDE, we randomly sample 2, 100 images (100 images per class) from the 21 classes as the query set. Similarly, the remaining images are used as the database images and the training images simultaneously. The top 50,000 returned neighbors are used for evaluation when calculating the mAP values. DPSH# in Table 3 denotes the DPSH method under the new experimental setting. The results of the compared methods are directly from [5, 16] which is reasonable as the experimental setting and evaluation metric are all the same. From Tables 1 and 3 we can see that the performance of our method is superior to the compared deep hashing methods including the current state-of-the-art method DPSH. It verifies the effectiveness of maximizing the total variance on all dimensions and balancing the variance on each dimension. Additionally, the superiority of our method to CNNH can also demonstrate the advantage of simultaneous feature learning and hash-code learning.

5 Conclusion

In this paper, we present a novel deep hashing algorithm, dubbed BMDH. To the best of our knowledge, it is the first time that maximizing the total variance on all projected dimensions and balancing the variance on each dimension is simultaneously considered in the existing deep hashing methods. Back-propagation algorithm with stochastic gradient desent (SGD) method and the end-to-end way ensure the optimal parameters despite the complex objective function. Experimental results on two widely used data sets demonstrate that the proposed method can outperform other state-of-the-art algorithms in image retrieval applications.

References

Andoni, A., Indyk, P.: Near-optimal hashing algorithms for approximate nearest neighbor in high dimensions. In: Foundations of Computer Science Annual Symposium, vol. 51, no. 1, pp. 459–468 (2006)

Gong, Y., Lazebnik, S., Gordo, A., Perronnin, F.: Iterative quantization: a procrustean approach to learning binary codes for large-scale image retrieval. IEEE Trans. Pattern Anal. Mach. Intell. 35(12), 2916–2929 (2013)

Kong, W., Li, W.J.: Isotropic hashing. In: Advances in Neural Information Processing Systems, vol. 2, pp. 1646–1654 (2012)

Lai, H., Pan, Y., Liu, Y., Yan, S.: Simultaneous feature learning and hash coding with deep neural networks. In: Computer Vision and Pattern Recognition, pp. 3270–3278 (2015)

Li, W.J., Wang, S., Kang, W.C.: Feature learning based deep supervised hashing with pairwise labels. Computer Science (2015)

Lin, G., Shen, C., Shi, Q., van der Hengel, A., Suter, D.: Fast supervised hashing with decision trees for high-dimensional data. In: Computer Vision and Pattern Recognition, pp. 1971–1978 (2014)

Lin, K., Yang, H.F., Hsiao, J.H., Chen, C.S.: Deep learning of binary hash codes for fast image retrieval. In: Computer Vision and Pattern Recognition Workshops, pp. 27–35 (2015)

Liong, V.E., Lu, J., Wang, G., Moulin, P., Zhou, J.: Deep hashing for compact binary codes learning. In: Computer Vision and Pattern Recognition, pp. 2475–2483 (2015)

Liu, W., Wang, J., Ji, R., Jiang, Y.G.: Supervised hashing with kernels. In: Computer Vision and Pattern Recognition, pp. 2074–2081 (2012)

Shen, F., Shen, C., Liu, W., Shen, H.T.: Supervised discrete hashing. In: Computer Vision and Pattern Recognition, pp. 37–45 (2015)

Wang, J., Kumar, S., Chang, S.F.: Sequential projection learning for hashing with compact codes. In: International Conference on Machine Learning, pp. 1127–1134 (2010)

Wang, X., Shi, Y., Kitani, K.M.: Deep supervised hashing with triplet labels. In: Lai, S.-H., Lepetit, V., Nishino, K., Sato, Y. (eds.) ACCV 2016. LNCS, vol. 10111, pp. 70–84. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-54181-5_5

Weiss, Y., Torralba, A., Fergus, R.: Spectral hashing. In: Conference on Neural Information Processing Systems, Vancouver, British Columbia, Canada, December, pp. 1753–1760 (2008)

Xia, R., Pan, Y., Lai, H., Liu, C., Yan, S.: Supervised hashing for image retrieval via image representation learning. In: AAAI Conference on Artificial Intelligence (2012)

Zhang, P., Zhang, W., Li, W.J., Guo, M.: Supervised hashing with latent factor models. In: International ACM SIGIR Conference on Research and Development in Information Retrieval, pp. 173–182 (2014)

Zhang, R., Lin, L., Zhang, R., Zuo, W., Zhang, L.: Bit-scalable deep hashing with regularized similarity learning for image retrieval and person re-identification. IEEE Trans. Image Process. 24(12), 4766–4779 (2015)

Zhao, F., Huang, Y., Wang, L., Tan, T.: Deep semantic ranking based hashing for multi-label image retrieval. In: IEEE Conference on Computer Vision and Pattern Recognition, pp. 1556–1564 (2015)

Acknowledgments

This work is supported by the National Natural Science Foundation of China (NSFC) (No. 61472442, No. 61773397, No. 61703423) and the funding (No. 2015kjxx-46).

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2017 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Zhang, S., Zha, Y., Li, Y., Li, H., Chen, B. (2017). Image Retrieval via Balanced and Maximum Variance Deep Hashing. In: Yang, J., et al. Computer Vision. CCCV 2017. Communications in Computer and Information Science, vol 771. Springer, Singapore. https://doi.org/10.1007/978-981-10-7299-4_52

Download citation

DOI: https://doi.org/10.1007/978-981-10-7299-4_52

Published:

Publisher Name: Springer, Singapore

Print ISBN: 978-981-10-7298-7

Online ISBN: 978-981-10-7299-4

eBook Packages: Computer ScienceComputer Science (R0)