Abstract

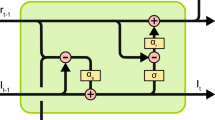

We describe a technique for automatically adapting to the rate of an incoming signal. We first build a model of the signal using a recurrent network trained to predict the input at some delay, for a ‘typical’ rate of the signal. Then, holding the weights of this network fixed, we adapt its time constant τ by gradient descent, adjusting the delay accordingly. On simple signals, the network adapts rapidly to new inputs whose rate ranges from twice as fast as the original signal to ten times slower. Our results so far are limited to linear rate changes. We discuss the possible application of this idea to speech.
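The core idea of adapting a single time constant by gradient descent while every other parameter stays fixed can be illustrated with a toy sketch. This is not the recurrent network described above: it assumes a two-tap linear predictor for a pure sinusoid as a stand-in model, predicts only one sample ahead, and omits the delay adaptation. The names `adapt_time_constant`, `omega0`, and `lr` are hypothetical, and the learning rate is hand-tuned for this one example.

```python
import math

def adapt_time_constant(signal, omega0, tau=1.0, lr=2.0):
    """Online gradient descent on tau alone (toy stand-in, not the paper's network).

    The fixed model predicts a sinusoid one sample ahead:
        pred[t+1] = 2*cos(omega0/tau)*s[t] - s[t-1]
    omega0 is the angular step per sample at the 'typical' rate and is never
    updated; only tau changes.
    """
    for t in range(1, len(signal) - 1):
        w = omega0 / tau                       # effective angular step per sample
        pred = 2.0 * math.cos(w) * signal[t] - signal[t - 1]
        err = pred - signal[t + 1]             # one-step prediction error
        # d(pred)/d(tau) = 2*sin(w) * (omega0/tau**2) * s[t]
        dpred_dtau = 2.0 * math.sin(w) * (omega0 / tau ** 2) * signal[t]
        tau -= lr * err * dpred_dtau           # gradient step on 0.5*err**2
    return tau

omega0 = 0.5       # assumed 'typical' rate (radians per sample)
true_tau = 2.0     # the incoming signal runs at half the typical rate
signal = [math.sin(omega0 / true_tau * t) for t in range(5000)]

tau_hat = adapt_time_constant(signal, omega0)
print(f"estimated tau: {tau_hat:.3f} (true value {true_tau})")
```

In this sketch the gradient with respect to τ grows quickly as the signal speeds up, so a faster input would need a smaller `lr` than the default used here.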

Cottrell, G.W., Nguyen, M. & Tsung, FS. Dynamic rate adaptation. Artif Intell Rev 7, 271–283 (1993). https://doi.org/10.1007/BF00849055