Abstract

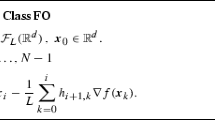

Convergence properties of descent methods are investigated for the case where the usual requirement that an exact line search be performed at each iteration is relaxed. The error at each iteration is measured by the relative decrease in the directional derivative in the search direction. The objective function is assumed to have continuous second derivatives and the eigenvalues of the Hessian are assumed to be bounded above and below by positive constants. Sufficient conditions are given for establishing that a method converges, or that a method converges at a linear rate.

These results are used to prove that the order of convergence for a specific conjugate gradient method is linear, provided the error at each iteration is suitably restricted.
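The line-search accuracy measure described above can be illustrated with a small sketch. The abstract does not state the acceptance test explicitly, so the form used here, $|\nabla f(x + \alpha d)^T d| \le \sigma\, |\nabla f(x)^T d|$ with $0 < \sigma < 1$, is an assumption about what "relative decrease in the directional derivative" means. The names `inexact_step`, `descend`, and `sigma` are hypothetical, and plain steepest descent stands in for a generic descent method. The test function is a diagonal quadratic, whose Hessian eigenvalues are bounded above and below by positive constants as the abstract requires.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def f(x, diag):
    # Quadratic f(x) = 0.5 * sum(diag[i] * x[i]**2); the Hessian is diag(diag).
    return 0.5 * sum(a * xi * xi for a, xi in zip(diag, x))


def grad(x, diag):
    return [a * xi for a, xi in zip(diag, x)]


def inexact_step(x, d, diag, sigma):
    """Find alpha with |phi'(alpha)| <= sigma * |phi'(0)|, phi(a) = f(x + a*d).

    Assumed acceptance test (not taken verbatim from the paper).
    """
    def dphi(alpha):
        xa = [xi + alpha * di for xi, di in zip(x, d)]
        return dot(grad(xa, diag), d)

    d0 = dphi(0.0)  # negative when d is a descent direction
    lo, hi = 0.0, 1.0
    # Expand the bracket until the directional derivative is non-negative.
    while dphi(hi) < 0.0:
        lo, hi = hi, 2.0 * hi
    alpha = hi
    # Bisect on phi' until the relative-decrease test is met.
    while abs(dphi(alpha)) > sigma * abs(d0):
        alpha = 0.5 * (lo + hi)
        if dphi(alpha) < 0.0:
            lo = alpha
        else:
            hi = alpha
    return alpha


def descend(x0, diag, sigma, iters):
    """Steepest descent with the inexact line search; returns f at each iterate."""
    x = list(x0)
    values = [f(x, diag)]
    for _ in range(iters):
        d = [-gi for gi in grad(x, diag)]
        alpha = inexact_step(x, d, diag, sigma)
        x = [xi + alpha * di for xi, di in zip(x, d)]
        values.append(f(x, diag))
    return values


values = descend([10.0, 1.0], [1.0, 10.0], sigma=0.5, iters=60)
print(values[0], values[-1])
```

In this sketch, smaller values of `sigma` force the step closer to the exact minimizer along the search direction, while values near 1 accept cruder steps. On this quadratic, any `sigma` below 1 still gives a decrease in the objective at every iteration.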

Cite this article

Lenard, M.L. Practical convergence conditions for unconstrained optimization. Mathematical Programming 4, 309–323 (1973). https://doi.org/10.1007/BF01584673
