Abstract

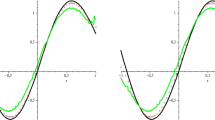

In general, there is a great difference between the usual three-layer feedforward neural networks with local basis functions in the hidden processing elements and those with standard sigmoidal transfer functions (in the following often called global basis functions). The reason for this difference in nature can be seen in the ridge-type arguments which are commonly used. The aim of this paper is to show that the situation changes completely when so-called hyperbolic-type arguments are used instead of ridge-type arguments. In detail, we show that the usual sigmoidal transfer functions evaluated at hyperbolic-type arguments (usually called sigma-pi units) can be used to construct local basis functions which vanish at infinity and, moreover, are integrable and give rise to a partition of unity, both in Cauchy's principal value sense. Consequently, standard strategies for approximation with local basis functions can be used without giving up the concept of non-local sigmoidal transfer functions.
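
The abstract does not spell out the construction, so the following is only a minimal sketch of one natural way to realize the idea, under stated assumptions: the logistic function stands in for the sigmoidal transfer function, the hyperbolic-type argument is taken to be the product x_1 x_2 ... x_d, and the local basis function is formed as a normalized d-th order mixed difference (unit step, corners at offsets of plus or minus 1/2) of the resulting sigma-pi unit. All function names are hypothetical, and the normalization factor 2^(d-1) is derived for this sketch rather than taken from the paper. The symmetric partial sums over shifts k in {-n, ..., n}^d are meant to mirror the principal-value sense in which the partition of unity is claimed.

```python
import itertools
import math
from typing import Callable, Sequence


def logistic(t: float) -> float:
    """Logistic sigmoid 1 / (1 + exp(-t)), evaluated without overflow."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    z = math.exp(t)
    return z / (1.0 + z)


def sigma_pi_unit(x: Sequence[float],
                  sigma: Callable[[float], float] = logistic) -> float:
    """Sigmoid evaluated at the hyperbolic-type (product) argument x_1 * ... * x_d."""
    return sigma(math.prod(x))


def local_basis(x: Sequence[float],
                sigma: Callable[[float], float] = logistic) -> float:
    """Hypothetical local basis function: d-th order mixed difference of the
    sigma-pi unit over the corners x + 1/2 - e, e in {0, 1}^d, divided by 2^(d-1)."""
    d = len(x)
    total = 0.0
    for e in itertools.product((0, 1), repeat=d):
        corner = [xi + 0.5 - ei for xi, ei in zip(x, e)]
        # Corners with an odd number of -1/2 shifts enter with a minus sign.
        total += (-1) ** sum(e) * sigma_pi_unit(corner, sigma)
    return total / 2 ** (d - 1)


def symmetric_shift_sum(x: Sequence[float], n: int,
                        sigma: Callable[[float], float] = logistic) -> float:
    """Symmetric partial sum of local_basis(x - k) over all k in {-n, ..., n}^d."""
    d = len(x)
    return sum(
        local_basis([xi - ki for xi, ki in zip(x, k)], sigma)
        for k in itertools.product(range(-n, n + 1), repeat=d)
    )
```

For example, symmetric_shift_sum([0.3, -0.2], 20) returns a value within rounding error of 1, while local_basis([50.0, 0.0]) is numerically negligible. The symmetric sums telescope to a signed sum of sigmoid values at the 2^d corners of a large cube; only the 2^(d-1) corners whose coordinates have a positive product contribute about 1 each (with a plus sign), which is why this sketch divides by 2^(d-1).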

Cite this article

Lenze, B. On local and global sigma-pi neural networks a common link. Adv Comput Math 2, 479–491 (1994). https://doi.org/10.1007/BF02521610
