Abstract

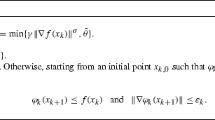

We propose a proximal Newton method for solving nondifferentiable convex optimization problems. The method combines the generalized Newton method with Rockafellar’s proximal point algorithm. At each step, the proximal point is computed approximately, and the regularization matrix is preconditioned to compensate for the inexactness of this approximation. We show that such preconditioning is possible within some accuracy and that the second-order differentiability properties of the Moreau-Yosida regularization are invariant under this preconditioning. Based on these results, superlinear convergence is established under a semismoothness condition.
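The ingredients named above (the Moreau-Yosida regularization F, Rockafellar’s proximal point iteration, and a generalized Newton step on F) can be illustrated on a toy problem. The Python sketch below is not the authors’ algorithm. It assumes a hypothetical one-dimensional function f(y) = |y - a| + ½y² whose proximal point p(x) is available in closed form, so neither the approximate proximal computation nor the preconditioning described in the abstract appears. It relies on the standard facts that the gradient of F is (x - p(x))/λ and that (1 - p′(x))/λ is an element of the generalized second derivative of F. The names `prox`, `moreau_yosida`, `newton_moreau_yosida` and `proximal_point` are illustrative only.

```python
"""Toy illustration: generalized Newton vs. proximal point on a
Moreau-Yosida regularization. Hypothetical example, not the paper's method."""


def prox(x, a, lam):
    """Exact proximal point of the hypothetical f(y) = |y - a| + 0.5*y**2.

    Solves min_y f(y) + (y - x)**2 / (2*lam) in closed form and returns
    (p, dp): the proximal point and one element of the generalized
    derivative of the piecewise-linear map x -> p(x).
    """
    upper = a * (1.0 + lam) + lam  # above this, the minimizer satisfies y > a
    lower = a * (1.0 + lam) - lam  # below this, the minimizer satisfies y < a
    if x > upper:
        return (x - lam) / (1.0 + lam), 1.0 / (1.0 + lam)
    if x < lower:
        return (x + lam) / (1.0 + lam), 1.0 / (1.0 + lam)
    return a, 0.0  # proximal point sits at the kink y = a


def moreau_yosida(x, a, lam):
    """Value F(x) = f(p(x)) + (p(x) - x)**2 / (2*lam)."""
    p, _ = prox(x, a, lam)
    return abs(p - a) + 0.5 * p * p + (p - x) ** 2 / (2.0 * lam)


def newton_moreau_yosida(x, a, lam, tol=1e-12, max_iter=50):
    """Purely local generalized Newton iteration on F (no line search)."""
    for k in range(max_iter):
        p, dp = prox(x, a, lam)
        grad = (x - p) / lam  # gradient of F
        if abs(grad) <= tol:
            return x, k
        hess = (1.0 - dp) / lam  # element of the generalized 2nd derivative, > 0 here
        x = x - grad / hess
    return x, max_iter


def proximal_point(x, a, lam, tol=1e-12, max_iter=10_000):
    """Proximal point iteration x_{k+1} = p(x_k), i.e. a gradient step of length lam on F."""
    for k in range(max_iter):
        p, _ = prox(x, a, lam)
        if abs(x - p) <= tol:
            return p, k
        x = p
    return x, max_iter


if __name__ == "__main__":
    # For a = 3 the minimizer of f is x* = 1; start both methods at x0 = 0.
    print(newton_moreau_yosida(0.0, 3.0, 1.0))
    print(proximal_point(0.0, 3.0, 1.0))
```

With a = 3 and λ = 1 the minimizer is x* = 1 and f is smooth there; from x0 = 0 the Newton loop reaches it after one step, while each proximal point step only halves the error, because p(x) = (x + 1)/2 on that branch. When the minimizer sits at the kink (for example a = 0.5, so x* = 0.5), the same local Newton loop started at x0 = 5 overshoots to -1 and then 1 before settling at 0.5 after three steps.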

References

X. Chen, L. Qi and R. Womersley, Newton’s method for quadratic stochastic programs with recourse, Journal of Computational and Applied Mathematics 60 (1995) 29–46.

F.H. Clarke, Optimization and Nonsmooth Analysis (John Wiley, New York, 1983).

J.E. Dennis, Jr. and R.B. Schnabel, Numerical Methods for Unconstrained Optimization and Nonlinear Equations (Prentice-Hall, Englewood Cliffs, NJ, 1983).

F. Facchinei, Minimization of SC¹ functions and the Maratos effect, Operations Research Letters 17 (1995) 131–137.

M. Fukushima, A descent algorithm for nonsmooth convex optimization, Mathematical Programming 30 (1984) 163–175.

M. Fukushima and L. Qi, A globally and superlinearly convergent algorithm for nonsmooth convex minimization, SIAM Journal on Optimization 6 (1996) 1106–1120.

J. Hiriart-Urruty and C. Lemaréchal, Convex Analysis and Minimization Algorithms (Springer, Berlin, 1993).

C. Lemaréchal and C. Sagastizábal, Practical aspects of the Moreau-Yosida regularization: theoretical preliminaries, SIAM Journal on Optimization, to appear.

R. Mifflin, A quasi-second-order proximal bundle algorithm, Mathematical Programming 73 (1996) 51–72.

J.S. Pang and L. Qi, A globally convergent Newton method for convex SC¹ minimization problems, Journal of Optimization Theory and Applications 85 (1995) 633–648.

R. Poliquin and R.T. Rockafellar, Second-order nonsmooth analysis, in: D. Du, L. Qi and R. Womersley, eds., Recent Advances in Nonsmooth Optimization (World Scientific, Singapore, 1995) 322–350.

R. Poliquin and R.T. Rockafellar, Generalized Hessian properties of regularized nonsmooth functions, SIAM Journal on Optimization, to appear.

L. Qi, Convergence analysis of some algorithms for solving nonsmooth equations, Mathematics of Operations Research 18 (1993) 227–244.

L. Qi, Superlinearly convergent approximate Newton methods for LC¹ optimization problems, Mathematical Programming 64 (1994) 277–294.

L. Qi, Second-order analysis of the Moreau-Yosida regularization of a convex function, Revised version, Applied Mathematics Report, Department of Applied Mathematics, The University of New South Wales (Sydney, 1995).

L. Qi and J. Sun, A nonsmooth version of Newton’s method, Mathematical Programming 58 (1993) 353–368.

L. Qi and R. Womersley, An SQP algorithm for extended linear-quadratic problems in stochastic programming, Annals of Operations Research 56 (1995) 251–285.

R.T. Rockafellar, Convex Analysis (Princeton University Press, Princeton, NJ, 1970).

R.T. Rockafellar, Augmented Lagrangians and applications of the proximal point algorithm in convex programming, Mathematics of Operations Research 1 (1976) 97–116.

R.T. Rockafellar, Maximal monotone relations and the second derivatives of nonsmooth functions, Ann. Inst. H. Poincaré: Analyse Non Linéaire 2 (1985) 167–184.

Additional information

This work is supported by the Australian Research Council.

About this article

Cite this article

Qi, L., Chen, X. A preconditioning proximal Newton method for nondifferentiable convex optimization. Mathematical Programming 76, 411–429 (1997). https://doi.org/10.1007/BF02614391

DOI: https://doi.org/10.1007/BF02614391