Abstract

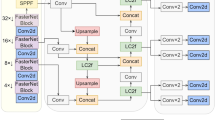

To address the challenges of detecting small targets in complex underwater environments, an efficient and lightweight model, SFESI-YOLOv8n, is proposed. The model improves small-target recognition by adding a dedicated small-target detection layer and reduces the parameter count by removing the large-target detection layer. Furthermore, the C2f-F module eliminates redundant information produced by consecutive convolution operations in the bottleneck, further simplifying the model. A lightweight mixed local channel attention (MLCA) mechanism integrated into the small-target fusion layer increases sensitivity to small targets. The dynamic upsampler (DySample) uses point sampling to preserve edge and detail information in the feature maps, yielding clearer feature representations. In addition, a novel In-NWD loss function, which combines the normalized Wasserstein distance with auxiliary bounding boxes, improves small-target detection performance. On the public URPC2020 dataset, SFESI-YOLOv8n achieved an mAP@0.5 of 83.7%, a 1.1% improvement over the baseline model, while the parameter count and model size were reduced by 49.2% and 45.7%, respectively. The frame rate reached 227 FPS, 9 FPS higher than the baseline. On an NVIDIA Jetson TX2 edge device, TensorRT acceleration reduced inference latency from 65 ms to 24 ms, meeting real-time detection requirements. SFESI-YOLOv8n thus offers a viable and efficient solution for the autonomous detection of small underwater targets, with significant practical value.
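The abstract names the In-NWD loss but does not give its formula. As a rough illustration of its two ingredients, the sketch below computes the normalized Gaussian Wasserstein distance (NWD) between two boxes and an Inner-IoU-style overlap on auxiliary boxes scaled about each box center. How the two terms are combined (the hypothetical `in_nwd_loss` with weight `alpha`), the auxiliary-box scale `ratio = 0.8` and the normalizing constant `c = 12.8` are assumptions chosen for illustration, not the authors' settings.

```python
import math
from typing import Tuple

# A box is (cx, cy, w, h): center coordinates, width and height in pixels.
Box = Tuple[float, float, float, float]


def nwd(a: Box, b: Box, c: float = 12.8) -> float:
    """Normalized Gaussian Wasserstein distance between two boxes.

    Each box is modelled as a 2-D Gaussian with mean (cx, cy) and
    covariance diag(w^2/4, h^2/4). The squared 2-Wasserstein distance
    between two such Gaussians reduces to a squared Euclidean distance
    over (cx, cy, w/2, h/2). `c` is a dataset-dependent constant
    (the default here is an assumption).
    """
    w2_sq = ((a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2
             + ((a[2] - b[2]) / 2) ** 2 + ((a[3] - b[3]) / 2) ** 2)
    return math.exp(-math.sqrt(w2_sq) / c)


def inner_iou(a: Box, b: Box, ratio: float = 0.8) -> float:
    """IoU of auxiliary boxes obtained by scaling w and h by `ratio` about each center."""

    def corners(box: Box) -> Tuple[float, float, float, float]:
        cx, cy, w, h = box
        hw, hh = w * ratio / 2, h * ratio / 2
        return cx - hw, cy - hh, cx + hw, cy + hh

    ax1, ay1, ax2, ay2 = corners(a)
    bx1, by1, bx2, by2 = corners(b)
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    # Each auxiliary box has area w * h * ratio^2.
    union = (a[2] * a[3] + b[2] * b[3]) * ratio ** 2 - inter
    return inter / union if union > 0 else 0.0


def in_nwd_loss(pred: Box, target: Box, alpha: float = 0.5,
                ratio: float = 0.8, c: float = 12.8) -> float:
    """HYPOTHETICAL combination: weighted sum of an Inner-IoU loss and an NWD loss."""
    return (alpha * (1.0 - inner_iou(pred, target, ratio))
            + (1.0 - alpha) * (1.0 - nwd(pred, target, c)))


if __name__ == "__main__":
    gt = (50.0, 50.0, 8.0, 8.0)      # an 8 x 8 pixel target
    near = (54.0, 50.0, 8.0, 8.0)    # shifted 4 px
    far = (60.0, 50.0, 8.0, 8.0)     # shifted 10 px, auxiliary boxes disjoint
    for name, box in [("near", near), ("far", far)]:
        print(name, inner_iou(gt, box), nwd(gt, box), in_nwd_loss(box, gt))
```

For the 8 × 8 pixel box shifted by 10 pixels, the auxiliary boxes no longer overlap, so the Inner-IoU term is 0 while NWD is still about 0.46. That smooth decay for tiny, non-overlapping boxes is the usual motivation for Wasserstein-based terms in small-target detection.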

Data Availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Acknowledgements

This work was supported by the Natural Science Foundation of Shandong Province (Grant No. ZR2019MEE054) and the Shandong Qingchuang Science and Technology Program of Universities (Grant No. 2019KJN036). Yuhuan Fei thanks HZWTECH for help with the algorithm and for discussions regarding this study.

Author information

Contributions

All authors contributed to the study conception and design. Material preparation, data collection, and analysis were performed by YH, FH, SM, GC, XF, and ZR. The first draft of the manuscript was written by FH. YH revised the manuscript and commented on previous versions. All authors read and approved the final manuscript.

Ethics declarations

Conflict of interest

The authors declare that there is no conflict of interest regarding the publication of this article. They have no financial interests or personal relationships that could have influenced the research outcomes.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Fei, Y., Liu, F., Su, M. et al. Real-time detection of small underwater organisms with a novel lightweight SFESI-YOLOv8n model. J Real-Time Image Proc 22, 23 (2025). https://doi.org/10.1007/s11554-024-01610-w

DOI: https://doi.org/10.1007/s11554-024-01610-w