Abstract

This paper presents a new approach to image deblurring, on the basis of total variation (TV) and wavelet frame. The Rudin–Osher–Fatemi model, which is based on TV minimization, has been proven effective for image restoration. The explicit exploitation of sparse approximations of natural images has led to the success of wavelet frame approach in solving image restoration problems. However, TV introduces staircase effects. Thus, we propose a new objective functional that combines the tight wavelet frame and TV to reconstruct images from blurry and noisy observations while mitigating staircase effects. The minimization of the new objective functional presents a computational challenge. We propose a fast minimization algorithm by employing the augmented Lagrangian technique. The experiments on a set of image deblurring benchmark problems show that the proposed method outperforms the previous state-of-the-art methods for image restoration.

1 Introduction

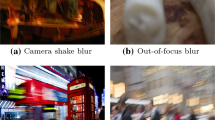

Image reconstruction from blurry and noisy observations is an important procedure in various fields, including remote sensing, astronomy, medical imaging, and video cameras. Many image deblurring methods, such as statistics [11, 20], Fourier or wavelet transforms [29, 31], and variational analysis [13, 14, 39], have been proposed based on the assumption that blur is caused by linear operators. The conventional observation model is expressed as

\[ y = Hu + \eta , \tag{1} \]

where \(y,u\in R^{N}\) are the observed and original \(\sqrt{N}\times \sqrt{N}\) images, respectively; \(H\in R^{N\times N}\) is the blur operator, and \(\eta \) is zero-mean white Gaussian noise with variance \(\sigma ^2\). The deblurring problem involves the restoration of \(u\) from the observed image \(y\).
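As an illustration of the observation model (1), the following minimal sketch simulates a blurred, noisy observation. It assumes that \(H\) acts as a circular (periodic) convolution with a small kernel, which is a common choice but is not stated at this point in the text; the function names `blur_periodic` and `observe` and the random stand-in image are hypothetical.

```python
import numpy as np

def blur_periodic(u, kernel):
    """Apply H as a circular convolution of image u with a centred kernel."""
    # Embed the kernel in an image-sized array and move its centre to (0, 0).
    psf = np.zeros_like(u, dtype=float)
    kh, kw = kernel.shape
    psf[:kh, :kw] = kernel
    psf = np.roll(psf, (-(kh // 2), -(kw // 2)), axis=(0, 1))
    return np.real(np.fft.ifft2(np.fft.fft2(psf) * np.fft.fft2(u)))

def observe(u, kernel, sigma, rng):
    """Simulate y = H u + eta with i.i.d. zero-mean Gaussian noise of SD sigma."""
    return blur_periodic(u, kernel) + sigma * rng.standard_normal(u.shape)

rng = np.random.default_rng(0)
u = rng.uniform(0.0, 255.0, size=(32, 32))  # stand-in for a clean image
kernel = np.full((3, 3), 1.0 / 9.0)         # 3 x 3 average blur
y = observe(u, kernel, sigma=0.255, rng=rng)
```

Because the blur is diagonalized by the FFT under this assumption, applying \(H\) costs only a few FFTs regardless of the kernel size.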

However, recovering \(u\) from \(y\) is an ill-posed problem. A natural way to solve this problem is to add a regularization term. By utilizing the stability regularization scheme, we can obtain \(\hat{u}\) by solving

\[ \hat{u}=\arg \min _{u}\left\{ \frac{1}{2}\left\| Hu-y \right\| _2^2+\mu \,\mathrm{pen}(u)\right\} , \tag{2} \]

where \(\left\| \cdot \right\| _2\) stands for the Frobenius norm, \(\mathrm{pen}(u)\) is a penalty function, and \(\mu \) is a positive regularization parameter. Tikhonov regularization and total variation regularization are two well-known classes of regularizers in the literature.

- Tikhonov regularization [45]: \(\mathrm{pen}(u)=\left\| L u \right\| _2^2\), where \(L\) stands for a finite difference operator. In this case, the objective function (2) is quadratic, so its minimizer satisfies a system of linear equations and an efficient algorithm is easy to develop. However, Tikhonov regularization often fails to preserve sharp image edges, because it tends to make images overly smooth.

- Total variation (TV) regularization [39, 40]: \(\mathrm{pen}(u)=\left\| \nabla u \right\| _2\) or \(\left\| \nabla u \right\| _1\), where \(\nabla \) stands for the discrete gradient. The TV regularization is also known as the Rudin–Osher–Fatemi (ROF) model, which was first used by Rudin, Osher, and Fatemi in [40] for image denoising and then extended to image deblurring in [39]. Later, Yuan et al. [48] proposed a spatially weighted TV method for multiframe image super-resolution and obtained a significant improvement. Compared with the Tikhonov regularizer, TV-based methods perform well in preserving important image features, such as points and edges. However, the main known drawback of TV regularization is that it usually creates staircasing artifacts; i.e., the TV regularizer tends to create large homogeneous zones, which results in the loss of some textures.
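To make the remark about the quadratic Tikhonov case concrete, the sketch below solves the normal equations \((H^\mathrm{T}H+\mu \nabla ^\mathrm{T}\nabla )u=H^\mathrm{T}y\) in the Fourier domain. It assumes an objective of the form \(\left\| Hu-y \right\| _2^2+\mu \left\| \nabla u \right\| _2^2\) (equivalent to other scalings up to a rescaling of \(\mu \)), periodic boundary conditions, and \(L=\nabla \); the names `psf_to_otf` and `tikhonov_deblur` are hypothetical.

```python
import numpy as np

def psf_to_otf(kernel, shape):
    """Fourier multiplier of circular convolution with a centred kernel."""
    psf = np.zeros(shape)
    kh, kw = kernel.shape
    psf[:kh, :kw] = kernel
    psf = np.roll(psf, (-(kh // 2), -(kw // 2)), axis=(0, 1))
    return np.fft.fft2(psf)

def tikhonov_deblur(y, kernel, mu):
    """Solve (H^T H + mu * grad^T grad) u = H^T y with FFTs."""
    otf = psf_to_otf(kernel, y.shape)
    n1, n2 = y.shape
    w1 = 2.0 * np.pi * np.fft.fftfreq(n1)[:, None]
    w2 = 2.0 * np.pi * np.fft.fftfreq(n2)[None, :]
    # Eigenvalues of grad^T grad for periodic first-order differences.
    lap = (2.0 - 2.0 * np.cos(w1)) + (2.0 - 2.0 * np.cos(w2))
    u_hat = np.conj(otf) * np.fft.fft2(y) / (np.abs(otf) ** 2 + mu * lap)
    return np.real(np.fft.ifft2(u_hat))
```

The same diagonalization idea applies to any linear system whose operators are circular convolutions, which is why quadratic subproblems of this type are cheap to solve.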

To suppress the staircasing artifacts created by TV-based models, detail-preserving methods such as sparse representations have been introduced in image deblurring approaches. A wide variety of dictionaries have been proposed, e.g., Fourier, wavelet, contourlet, curvelet, and wavelet frame, under the assumption that natural images are usually sparse in an appropriate transform domain. An optimal dictionary should be rich enough to obtain the sparsest representation. Recent publications have shown that wavelet frame based approaches for image representation can significantly improve image restoration [4–10, 12, 16–18, 30], because the wavelet frame can explicitly exploit the sparse approximations of natural images and can effectively preserve image details. The basic idea of wavelet frame based approaches is that images can be sparsely approximated by properly designed wavelet frames; that is, we can solve (1) in the proper wavelet frame domain and obtain a sparse solution of (1) in that domain. The regularization used for the majority of wavelet frame based models is the \(l_1\)-norm of the wavelet frame coefficients.

Inspired by the edge-preserving advantage of TV and the ability of wavelet frame based methods to sparsely approximate piecewise smooth images, we combine wavelet frame and TV regularization in a new model for image deblurring. This model is expected to inherit the advantages of both regularizations. The redundancy and translation invariance of the wavelet frame enable the proposed model to separate noise from image information. Therefore, the proposed model can efficiently suppress the staircasing artifacts caused by TV regularization. Experimental results show that the proposed model produces restored images with higher visual quality, peak signal-to-noise ratio (PSNR), and improvement in signal-to-noise ratio (ISNR) than TV and wavelet frame restoration methods. It should be mentioned that Pustelnik et al. [35] recently considered a functional with dual-tree wavelet and TV terms and proposed a parallel proximal algorithm (PPXA) for image restoration. We also conduct experiments to compare our proposed method with the hybrid regularization method PPXA; in these experiments, the proposed method attains higher PSNR and ISNR values.

To solve the new image deblurring model, the augmented Lagrangian (AL) technique is adopted to develop a reconstruction algorithm. The AL method, which was first introduced in [27, 34], has notable stability and fast convergence and is now widely used for the minimization of convex functionals under linear equality constraints. Similar to [43], we utilize the AL method as a single, efficient tool for deriving explicit reconstruction algorithms. First, we transform the proposed model into a new constrained problem by using auxiliary variables. Afterward, we transform the resulting constrained problem into a different unconstrained problem by using a variable splitting operator. Finally, the obtained unconstrained problem is solved by the AL technique. Simulation experiments show that the algorithm is effective and efficient.

The rest of this paper is organized as follows. In Sect. 2, we review the wavelet frame system and image representation. The framelet-based image reconstruction and the new model, along with the corresponding optimization algorithm, are introduced in Sect. 3. In Sect. 4, we conduct experiments to demonstrate the effectiveness of the proposed model and the corresponding algorithm. Concluding remarks and future work are given in Sect. 5.

2 The Wavelet Frame and Image Representation

In this section, we briefly review the concept of tight wavelet frames. Details on constructing wavelet frames and the corresponding framelet theory can be found in [4–9, 12, 16–18, 22, 41].

A countable set \(X\subset L^2(R)\) is a tight frame if

\[ f=\sum _{g\in X}\langle f,g\rangle g,\quad \forall f\in L^2(R), \tag{3} \]

where \(\langle \cdot ,\cdot \rangle \) is the inner product of \(L^2(R)\). For a given \(\varPsi :=\left\{ \psi _1,\psi _2,\ldots ,\psi _n\right\} \subset L^2(R)\), the wavelet system is defined by the collection of the dilations and the shifts of \(\varPsi \) as

\[ X(\varPsi ):=\left\{ \psi _{l,j,k}=2^{j/2}\psi _l(2^j\cdot -k):\; 1\le l\le n;\; j,k\in Z\right\} . \tag{4} \]

If \(X(\varPsi )\) forms a tight frame of \(L^2(R)\), then \(X(\varPsi )\) is a tight wavelet frame and each \(\psi \in \varPsi \) is a framelet.

To construct compactly supported wavelet tight frames, a compactly supported scaling function \(\phi \) with refinement mask \(h_0\) should satisfy

\[ \hat{\phi }(2\omega )=\hat{h}_0(\omega )\hat{\phi }(\omega ), \tag{5} \]

where \(\hat{\phi }\) is the Fourier transform of \(\phi \), \(\hat{h}_0\) is the Fourier transform of \(h_0\), and \(\hat{h}_0\) is \(2\pi \)-periodic. For a given compactly supported scaling function \(\phi \), the construction of a wavelet tight frame requires an appropriate set of framelets \(\psi \in \varPsi \), which are defined in the Fourier domain by

\[ \hat{\psi }_i(2\omega )=\hat{h}_i(\omega )\hat{\phi }(\omega ),\quad i=1,\ldots ,n. \tag{6} \]

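Here, \(\hat{h}_i(i=1,\ldots ,n)\) is \(2\pi \)-periodic. The unitary extension principle [38] implies that the system \(X(\varPsi )\) in (4) forms a tight frame in \(L^2(R)\) provided that

\[ \sum _{i=0}^{n}\left| \hat{h}_i(\omega )\right| ^2=1\quad \text{and}\quad \sum _{i=0}^{n}\hat{h}_i(\omega )\overline{\hat{h}_i(\omega +\pi )}=0 \tag{7} \]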

for almost all \(\omega \) in \(R\). From (7), we can see that \(h_0\) corresponds to a lowpass filter, whereas \(\left\{ h_i,i=1,\ldots ,n\right\} \) correspond to highpass filters. The sequences of the Fourier coefficients of \(\left\{ \hat{h}_i,i=1,\ldots ,n\right\} \) are called framelet masks. In our implementation, we adopt the piecewise linear B-spline framelets [38]. The refinement mask is \(\hat{h}_0(\omega )=\cos ^2(\frac{\omega }{2})\), which has a corresponding lowpass filter of \(h_0=\frac{1}{4}\left[ 1,2,1\right] \). The two framelet masks are \(\hat{h}_1=-\frac{\sqrt{2}i}{2}\sin (\omega )\) and \(\hat{h}_2=\sin ^2(\frac{\omega }{2})\), which have corresponding highpass filters of \(h_1=\frac{\sqrt{2}}{4}\left[ 1,0,-1\right] \) and \(h_2=\frac{1}{4}\left[ -1,2,-1\right] \), respectively. The associated refinable function and framelets are given in Fig. 1.

The wavelet frame transform is calculated by using the wavelet frame decomposition algorithm given in [19]. We conduct the decomposition algorithm without downsampling by means of the refinement and framelet masks. We use \(W\) to denote the wavelet frame decomposition and \(W^\mathrm{T}\) to denote the wavelet frame reconstruction. Thus, \(W^\mathrm{T}W=I\), where \(I\) is the identity matrix. The rows of \(W\) form a tight frame in \(R^n\). Thus, for every vector \(u\in R^n\), the frame coefficients of \(u\) can be computed by \(x=Wu\). The reconstruction result is given by \(u=W^\mathrm{T} x\), i.e., \(u=W^\mathrm{T}(Wu)\). It should be mentioned that, in practical computation, we proceed in the s-dimensional setting. The s-dimensional framelet system can be easily constructed by the tensor product of the 1D framelet system. Details on generating the wavelet frame transform are given in [12].
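The perfect reconstruction property \(W^\mathrm{T}W=I\) can be checked numerically for the piecewise linear B-spline filters quoted above. The following is a minimal one-level, one-dimensional sketch without downsampling; it assumes periodic boundary handling, which may differ from the boundary treatment used in the actual implementation, and the function names are hypothetical.

```python
import numpy as np

# Piecewise linear B-spline framelet filters (lowpass h0, highpass h1 and h2).
H0 = np.array([1.0, 2.0, 1.0]) / 4.0
H1 = np.array([1.0, 0.0, -1.0]) * np.sqrt(2.0) / 4.0
H2 = np.array([-1.0, 2.0, -1.0]) / 4.0
FILTERS = (H0, H1, H2)

def freq_response(h, n):
    """DFT of a centred 3-tap filter embedded in a length-n periodic signal."""
    padded = np.zeros(n)
    padded[[n - 1, 0, 1]] = h  # taps at offsets -1, 0, +1
    return np.fft.fft(padded)

def analysis(u):
    """W u: one-level undecimated framelet coefficients, one band per filter."""
    U = np.fft.fft(u)
    return [np.real(np.fft.ifft(freq_response(h, u.size) * U)) for h in FILTERS]

def synthesis(coeffs):
    """W^T x: apply the adjoint filters and sum the bands."""
    n = coeffs[0].size
    total = np.zeros(n, dtype=complex)
    for h, c in zip(FILTERS, coeffs):
        total += np.conj(freq_response(h, n)) * np.fft.fft(c)
    return np.real(np.fft.ifft(total))
```

Reconstruction is exact here because the squared magnitudes of the three masks sum to one at every frequency, which is the first condition of the unitary extension principle when no downsampling is applied.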

3 The New Model for Image Deblurring and Its Optimization Algorithm

3.1 The Proposed Model for Image Deblurring

We first briefly describe the TV and framelet variational methods for clarity.

We use \(\nabla _1\) to denote the difference operator given by \(\nabla _1u(i,j)=u(i+1,j)-u(i,j)\) for \(i=1,\ldots ,N-1;\; j=1,\ldots ,N\) and, under the periodic boundary condition, \(\nabla _1u(N,j)=u(1,j)-u(N,j)\) for \(j=1,\ldots ,N\). Thus, \(\nabla _1\) is a linear mapping from \(R^{N\times N}\) to \(R^{N\times N}\). Similarly, \(\nabla _2\) is the difference operator from \(R^{N\times N}\) to \(R^{N\times N}\) given by \(\nabla _2u(i,j)=u(i,j+1)-u(i,j)\) for \(i=1,\ldots ,N;\; j=1,\ldots ,N-1\) and \(\nabla _2u(i,N)=u(i,1)-u(i,N)\) for \(i=1,\ldots ,N\). The discrete gradient operator is defined as \(\nabla u=(\nabla _1 u,\nabla _2 u)\). The TV of \(u\) is represented by \(\left\| \sqrt{(\nabla _1u)^2+(\nabla _2u)^2} \right\| _1\) (isotropic) or by \(\left\| \nabla _1u \right\| _1+\left\| \nabla _2u \right\| _1\) (anisotropic). The isotropic TV model for deblurring is to solve the following optimization problem:

\[ \min _{u}\; \frac{1}{2}\left\| Hu-y \right\| _2^2+\mu \left\| \sqrt{(\nabla _1u)^2+(\nabla _2u)^2} \right\| _1, \]

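where \(\mu \) is the positive regularization parameter used to balance the two minimization terms. Correspondingly, the anisotropic TV variational approach for deblurring is given by:

\[ \min _{u}\; \frac{1}{2}\left\| Hu-y \right\| _2^2+\mu \left( \left\| \nabla _1u \right\| _1+\left\| \nabla _2u \right\| _1\right) . \]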

The TV-based method works well in terms of preserving edges while suppressing noise. However, both TV discretizations lead to the so-called staircasing effects [21, 32]. Many algorithms have been proposed to solve this TV model, such as the time marching strategy [39], the primal-dual variable strategy [15], the more recent MM method [33], the FTVd method [46], the ADM method [44], the split Bregman scheme [26], and ALM [43].
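The two TV discretizations can be sketched in a few lines. The code below assumes forward differences with periodic wrap-around at the last row and column, and the helper names (`grad`, `grad_adjoint`, `tv_isotropic`, `tv_anisotropic`) are hypothetical.

```python
import numpy as np

def grad(u):
    """Forward differences with periodic boundary: (grad_1 u, grad_2 u)."""
    return np.roll(u, -1, axis=0) - u, np.roll(u, -1, axis=1) - u

def grad_adjoint(g1, g2):
    """Adjoint of grad: <grad u, (g1, g2)> equals <u, grad_adjoint(g1, g2)>."""
    return (np.roll(g1, 1, axis=0) - g1) + (np.roll(g2, 1, axis=1) - g2)

def tv_isotropic(u):
    """Sum over pixels of the Euclidean length of the discrete gradient."""
    g1, g2 = grad(u)
    return np.sum(np.sqrt(g1**2 + g2**2))

def tv_anisotropic(u):
    """Sum over pixels of |grad_1 u| + |grad_2 u|."""
    g1, g2 = grad(u)
    return np.sum(np.abs(g1)) + np.sum(np.abs(g2))
```

For a vertical step edge the two values coincide, whereas for diagonal structures the isotropic value is smaller, which is one reason the two variants can produce slightly different restorations.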

In the wavelet frame-based framework, Eq. (1) can be written as

\[ y=HW^\mathrm{T}x+\eta , \]

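which is obtained by substituting \(u=W^\mathrm{T}x\), where \(W^\mathrm{T}\) is the adjoint of the framelet transform \(W\) and \(x\) is the vector of representation coefficients. Then, the estimation can be performed by solving the following minimization problem:

\[ \hat{x}=\arg \min _{x}\left\{ \frac{1}{2}\left\| HW^\mathrm{T}x-y \right\| _2^2+\mu \left\| x \right\| _p\right\} , \]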

where \(\left\| \cdot \right\| _2^2\) stands for the square of the Frobenius norm, ensuring that the solution agrees with the observation; \(\left\| \cdot \right\| _p\) is the \(l_p\)-norm, \(0\le p\le 1\), and \(\mu \) is the regularization parameter. The restored image is given by \(\hat{u}=W^\mathrm{T} \hat{x}\). Figueiredo and Nowak [24, 25] use orthogonal wavelets and redundant wavelets (also called wavelet frames) for image deconvolution. In [23], Elad utilizes the Haar wavelet frame for image deblurring and obtains good results. Li et al. [30] handle blurred images corrupted by mixed Gaussian-impulse noise by minimizing a new functional that includes a content-dependent fidelity term and a novel regularizer defined as the \(l_1\) norm of geometric tight framelet coefficients. The advantage of their algorithm is that it is parameter free, which makes it more practical. More recently, Cai et al. [10] proposed a regularization-based approach to remove motion blur from an image by regularizing the sparsity of both the original image and the motion blur kernel under tight wavelet frame systems. This method performs well when the motion blur is uniform over the image. However, it cannot deal with nonuniform motion blur.

In this paper, we have combined wavelet frame and TV regularizations to create the following model for image deblurring and denoising, which is given by

where \(y\in R^{N\times N}\) is the observed image, \(H\in R^{N\times N}\) is the blurring operator, \(W^\mathrm{T}\) is the discrete inverse wavelet frame transform, \(x\) is the wavelet frame coefficient, and \(\alpha \) and \(\beta \) are positive regularization parameters that balance the three minimization terms. The deblurred image is given by \(\hat{u}=W^\mathrm{T}\hat{x}\). Here, \(\left\| \cdot \right\| _\mathrm{TV}\) denotes the total variation. We emphasize that our approach applies to both isotropic and anisotropic TV deconvolution problems. For simplicity, we will treat only the isotropic case in detail because the treatment for the anisotropic case is completely analogous. The proposed model, which will be called TVframe henceforth, uses TV and framelet approaches.

We adopt the augmented Lagrangian (AL) technique to solve the inverse problem (10). The AL technique was recently applied in [43] to TV-regularized minimization. It has notable stability and a fast convergence rate compared with traditional methods. The AL method is discussed in the next subsection.

3.2 The Proposed Algorithm for this TVframe Model

We now apply the AL method to the basic problem (10).

By using two auxiliary variables \(z\) and \(v\), we first transform model (10) into the following constrained problem:

and then using a variable splitting operator, we transform the resulting constrained problem (11) into a different unconstrained problem:

The obtained unconstrained problem (12) is solved by the AL method. The AL function of (10) has the following form:

where \(p_1^k\) and \(p_2^k\) are vectors in \(R^{N\times N}\) to avoid \(\lambda _1\) and \(\lambda _2\) going to infinity. The classical AL method with Lagrangian \({\mathcal L}_{{\mathcal A}}(x,z,v,p_1,p_2)\) gives

as the solution to the unconstrained optimization problem (10). Finding the saddle point requires the minimization of \({\mathcal L}_{{\mathcal A}}\) with respect to variables \(x\), \(z\), and \(v\). A common practical approach is to obtain the saddle point by performing alternating optimization. By (14), we have obtained the following optimization algorithm:

Problems (18) and (19) can be solved efficiently. We are now left with the minimization problems (15), (16), and (17) to address.

3.2.1 Calculate \(v\) Subproblem with \(z\) and \(x\)

For problem (15),

The minimizer of the subproblem can be obtained by applying the two-dimensional shrinkage [28] with

When the TV is anisotropic, \(v\) is given by the simpler one-dimensional shrinkage

where \(\circ \) represents the point-wise product and \(\mathrm{sgn}\) stands for the signum function defined by

\[ \mathrm{sgn}(t)=\begin{cases} 1, & t>0,\\ 0, & t=0,\\ -1, & t<0. \end{cases} \]
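Both shrinkage operations have simple closed forms. The sketch below uses a generic threshold `tau`, since the exact argument and threshold of the \(v\) update depend on the multipliers and penalty parameters; the function names are hypothetical.

```python
import numpy as np

def shrink_1d(t, tau):
    """One-dimensional shrinkage: sgn(t) * max(|t| - tau, 0), point-wise."""
    return np.sign(t) * np.maximum(np.abs(t) - tau, 0.0)

def shrink_2d(t1, t2, tau):
    """Two-dimensional (isotropic) shrinkage of the field (t1, t2), per pixel."""
    norm = np.sqrt(t1**2 + t2**2)
    # Guard against division by zero where the field vanishes.
    scale = np.maximum(norm - tau, 0.0) / np.maximum(norm, 1e-12)
    return scale * t1, scale * t2
```

The one-dimensional version thresholds each gradient component separately (anisotropic TV), whereas the two-dimensional version shortens the gradient vector at each pixel without changing its direction (isotropic TV).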

3.2.2 Calculate \(z\) Subproblem with \(v\) and \(x\)

For problem (16),

Since the above function is differentiable, the optimality condition gives the following linear equation:

where \(\nabla ^\mathrm{T}\) represents the adjoint operator of \(\nabla \). Denoting by \({\mathcal F}(u)\) the fast Fourier transform of \(u\), we can obtain the solution of \(z\) as follows:

where

3.2.3 Calculate \(x\) Subproblem with \(z\) and \(v\)

For minimization of (17),

The subproblem can be minimized by using the fast iterative shrinkage-thresholding algorithm (FISTA) [2]. The outline of the algorithm is as follows: we choose \(r_1=x^0,\; t_1=1\). For each \(l\ge 1\), we let

where \(s_\delta \) is the shrinkage operator defined by

\[ s_\delta (x)=\mathrm{sgn}(x)\circ \max \left( \left| x\right| -\delta ,\,0\right) . \]
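The structure of a FISTA iteration can be illustrated on a generic problem. The following minimal sketch minimizes \(\frac{1}{2}\left\| Ax-b \right\| _2^2+\delta \left\| x \right\| _1\) for an explicit matrix \(A\); this generic objective, the matrix `A`, and the function names are assumptions for illustration and do not reproduce the exact \(x\) subproblem, which involves \(W^\mathrm{T}\) and the multipliers.

```python
import numpy as np

def soft(x, delta):
    """Shrinkage operator s_delta applied point-wise."""
    return np.sign(x) * np.maximum(np.abs(x) - delta, 0.0)

def fista(A, b, delta, n_iter=200):
    """Minimise 0.5 * ||A x - b||_2^2 + delta * ||x||_1 with FISTA."""
    L = np.linalg.norm(A, 2) ** 2  # Lipschitz constant of the smooth gradient
    x = np.zeros(A.shape[1])
    r, t = x.copy(), 1.0
    for _ in range(n_iter):
        # Gradient step at the extrapolated point r, followed by shrinkage.
        x_new = soft(r - A.T @ (A @ r - b) / L, delta / L)
        t_new = (1.0 + np.sqrt(1.0 + 4.0 * t * t)) / 2.0
        # Momentum (extrapolation) step.
        r = x_new + ((t - 1.0) / t_new) * (x_new - x)
        x, t = x_new, t_new
    return x
```

When \(A\) is the identity, the very first iterate already equals \(s_\delta (b)\), which is a quick sanity check on the update.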

Having shown how to solve the subproblems (15)–(17), we present the following alternating minimization procedure to solve (13).

For simplicity, we terminate Algorithm 2 based on the relative change in \(u\) in all of our experiments; i.e.,

where \(\epsilon > 0\) is a given tolerance.
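A relative-change stopping test can be sketched as follows. The normalization by the norm of the previous iterate is an assumption (other normalizations are also common), and the function names are hypothetical; the default tolerance is the value 0.0008 used in the experiments.

```python
import numpy as np

def relative_change(u_new, u_old):
    """||u_new - u_old|| / ||u_old|| (Frobenius norm for 2-D arrays)."""
    return np.linalg.norm(u_new - u_old) / np.linalg.norm(u_old)

def converged(u_new, u_old, eps=8e-4):
    """True once the relative change drops below the tolerance eps."""
    return relative_change(u_new, u_old) < eps
```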

4 Experiment Results and Analysis

In this section, we perform some experiments to demonstrate the effectiveness and efficiency of the proposed approach and compare the proposed approach with the TV-based, wavelet frame-based and the hybrid regularization approaches. All experiments are conducted with MATLAB 2011b running on a desktop machine with a Windows 7 operating system and an Intel Core i3 Duo central processing unit at 3.07 GHz and 6 GB memory.

We consider the original clean Barbara (\(512 \times 512\)), Lena (\(512 \times 512\)), and Cameraman (\(256 \times 256\)) images as test images (see Fig. 2).

For all experiments, the test images are generated by applying a blur kernel to the clean images and then adding Gaussian white noise with various standard deviations. The initial iterate is set to the observed image \(y\). The stopping criterion \(\epsilon = 0.0008\) is used to terminate the iteration, and the quality of the deblurred image is measured by PSNR and ISNR, which are defined as

and

respectively, where MSE is the mean-squared-error per pixel.
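The two quality measures can be computed as in the sketch below, which uses the standard definitions: PSNR compares a peak value with the MSE between the clean and restored images, and ISNR compares the error of the observation with the error of the restoration. The peak value of 255 is an assumption (8-bit gray levels), and the function names are hypothetical.

```python
import numpy as np

def mse(a, b):
    """Mean-squared error per pixel between two images."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return np.mean((a - b) ** 2)

def psnr(u, u_hat, peak=255.0):
    """PSNR in dB of the restored image u_hat against the clean image u."""
    return 10.0 * np.log10(peak**2 / mse(u, u_hat))

def isnr(u, y, u_hat):
    """ISNR in dB: error of the observation y relative to that of u_hat."""
    return 10.0 * np.log10(mse(u, y) / mse(u, u_hat))
```

A positive ISNR means that the restored image is closer to the clean image than the blurred, noisy observation is.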

4.1 Comparisons with the TV-Based Approach

In this subsection, we conduct some experiments to compare our method with LD [37], FTVd [44, 46], and SALSA [1] methods, which are based on the TV model. These methods for image deblurring are based on the ROF model and are more efficient compared with the other existing methods. The efficient Matlab code NUMIPAD [36] can be used for LD. The Matlab code for FTVd can be downloaded from Rice University and is available at http://www.caam.rice.edu/~optimization/L1/ftvd. The FTVd algorithm used for comparison is FTVd_v4.1, which is the latest version of FTVd. The Matlab code for SALSA is available at http://cascais.lx.it.pt/~mafonso/salsa.html.

We test three types of blurring kernels, i.e., Gaussian, average, and uniform. For simplicity, we denote Gaussian blur with a blurring size hsize and a SD sigma as G(hsize, sigma), whereas the average blur with a blurring size hsize is denoted by A(hsize). We generate these blur kernels by using the Matlab “fspecial” command.
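For readers working outside MATLAB, the kernels G(hsize, sigma) and A(hsize) can be approximated as follows. This sketch mirrors the usual construction (a sampled Gaussian normalized to unit sum, and a constant kernel) and is an assumption about what "fspecial" returns rather than a port of it; the function names are hypothetical.

```python
import numpy as np

def gaussian_kernel(hsize, sigma):
    """G(hsize, sigma): sampled Gaussian on an hsize x hsize grid, sum 1."""
    ax = np.arange(hsize) - (hsize - 1) / 2.0
    xx, yy = np.meshgrid(ax, ax)
    k = np.exp(-(xx**2 + yy**2) / (2.0 * sigma**2))
    return k / k.sum()

def average_kernel(hsize):
    """A(hsize): constant hsize x hsize kernel with entries 1 / hsize^2."""
    return np.full((hsize, hsize), 1.0 / hsize**2)

g = gaussian_kernel(11, 9)  # G(11, 9), as used for the Barbara experiment
a = average_kernel(17)      # A(17), as used for the Lena experiment
```

With a standard deviation as large as 9 on an 11 × 11 support, G(11, 9) is nearly flat, so it behaves much like an average blur of the same size.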

In the first experiment, we use the Barbara image. The blur kernel and the corresponding Gaussian noise are the same as those in [44]. The clean Barbara image is blurred by the Gaussian blur G(11,9). Then, Gaussian noise with SD of 0.255 is added to the blurred image. The regularization parameter \(\lambda =0.0002\) and the iteration count pars.loops=9 are selected in LD. The parameter \(\mu =10^5\) is selected in FTVd_v4.1, whereas the regularization parameters \(\lambda =0.002,\; \mu =0.00001\) are selected in SALSA. The regularization parameters \(\alpha =0.0000065, \, \beta =0.0000009\) are selected in TVframe. Figure 3 shows the experiment results. As shown in Fig. 3, the deblurred image estimated by TVframe is better than that estimated by LD, FTVd_v4.1, and SALSA. Specifically, more details are recovered by the proposed method. Consistently, the PSNR and ISNR values obtained by TVframe are higher than those obtained by LD, FTVd_v4.1, and SALSA.

Fig. 3 Deblurring of the Barbara image. From left to right and from top to bottom, zoomed fragments of the following images are presented: original, blurred noisy, reconstructed by LD (PSNR = 26.67 dB, ISNR = 3.87 dB), FTVd_v4.1 (PSNR = 26.14 dB, ISNR = 3.84 dB), SALSA (PSNR = 26.99 dB, ISNR = 4.04 dB) and by the proposed TVframe method (PSNR = 27.44 dB, ISNR = 4.21 dB)

Figure 4 shows the experimental results with the Lena image. In this experiment, we replicate the experimental condition of [44]. The blur point spread function is the average blur A(17), and the Gaussian noise has an SD of 0.255. The regularization parameter \(\lambda = 0.000009\) and the iteration count pars.loops=9 are selected in LD. The parameter \(\mu =10^5\) is selected in FTVd_v4.1. The regularization parameters \(\lambda =0.003,\; \mu =0.0005\) are chosen in SALSA. The regularization parameters \(\alpha =0.000006,\; \beta =0.00004\) are selected in TVframe. Figure 4 shows that the proposed algorithm can suppress staircasing effects and provide sharp image edges.

Fig. 4 Deblurring of the Lena image with average blur kernel A(17) and Gaussian noise \(\sigma =0.255\). From left to right and from top to bottom, zoomed fragments of the following images are presented: original, blurred noisy, reconstructed by LD (PSNR = 31.39 dB, ISNR = 8.52 dB), FTVd_v4.1 (PSNR = 31.21 dB, ISNR = 8.94 dB), SALSA (PSNR = 31.45 dB, ISNR = 8.91 dB) and by the proposed TVframe method (PSNR = 31.89 dB, ISNR = 9.16 dB)

Figure 5 shows the experimental results with the Cameraman image. In this test, we use a \(9 \times 9\) uniform blur kernel and Gaussian noise with SD of 0.56, which is the same setting as that in [1]. The regularization parameter \(\lambda = 0.00003\) and the iteration count pars.loops=6 are chosen in LD. The regularization parameter \(\mu =1.5\times 10^4\) is chosen in FTVd_v4.1. The regularization parameters \(\lambda =0.025,\; \mu =0.0025\) are chosen in SALSA. The regularization parameters \(\alpha =0.00005,\, \beta =0.0000009\) are selected in TVframe. It can be seen from Fig. 5 that TVframe recovers more details than the other methods. The PSNR and ISNR values obtained by TVframe are higher than those obtained by LD, FTVd_v4.1, and SALSA.

Fig. 5 Deblurring of the Cameraman image with uniform blur kernel and Gaussian noise \(\sigma =0.56\). From left to right and from top to bottom, zoomed fragments of the following images are presented: original, blurred noisy, reconstructed by LD (PSNR = 30.15 dB, ISNR = 8.02 dB), FTVd_v4.1 (PSNR = 29.93 dB, ISNR = 8.36 dB), SALSA (PSNR = 30.24 dB, ISNR = 8.22 dB) and by the proposed TVframe method (PSNR = 30.64 dB, ISNR = 8.70 dB)

4.2 Comparisons with the Framelet-Based Approach

On the basis of the above subsection, we conclude that the TVframe model is more suitable for image deblurring and denoising than TV-based methods. In this subsection, we show that the proposed approach is also more suitable for image deblurring and denoising than wavelet frame-based methods. We provide several examples to compare the TVframe method with wavelet frame-based methods. Wavelet frame-based methods, including TwIST [3], SpaRSA [47], SALSA [1], and APG [42], are the current state of the art. We compare TVframe with SALSA and APG in both the synthesis and analysis cases. For our numerical experiments, we replicate the experimental condition of [1]. The blur point spread function and the variance of the noise \(\sigma ^2\) for each scenario are summarized in Table 1. Each of the scenarios is tested with the well-known Cameraman image.

For a fair comparison, we have optimized the regularization parameters of the TwIST, SpaRSA, SALSA, and APG algorithms by using ground truth images in the same way as for TVframe. Figure 6 shows the recovered results of APG synthesis, APG analysis, and TVframe in scenarios 1 and 4. Only close-ups of the image regions are shown for better comparison. As shown in Fig. 6, the restored images estimated by TVframe are better than those estimated by APG in both the synthesis and analysis cases. Specifically, more details are recovered by the proposed method.

Fig. 6 Comparisons between TVframe, APG synthesis and APG analysis for image deblurring. The first row is with uniform blur and Gaussian noise with \(\sigma =0.56\). From left to right, zoomed fragments of the following images are presented: blurred noisy, reconstructed by APG synthesis (PSNR = 28.53 dB, ISNR = 6.98 dB), APG analysis (PSNR = 30.42 dB, ISNR = 8.69 dB) and by the proposed TVframe (PSNR = 30.64 dB, ISNR = 8.70 dB). The second row is with blur kernel \(h_{ij}=1/(1+i^2+j^2)\) and Gaussian noise with \(\sigma =\sqrt{2}\). From left to right, zoomed fragments of the following images are presented: blurred noisy, reconstructed by APG synthesis (PSNR = 28.55 dB, ISNR = 3.18 dB), APG analysis (PSNR = 30.30 dB, ISNR = 3.62 dB) and by the proposed TVframe method (PSNR = 30.45 dB, ISNR = 3.76 dB)

It is clearly seen from Table 2 that the proposed method has the highest PSNR and ISNR values (bold values), which show that the proposed method can better maintain the gray levels of the clean image.

4.3 Comparisons with the Hybrid Regularization Approach

In this subsection, we compare the proposed method with another hybrid regularization method, PPXA [35]. In this test, we use the Gaussian blur G(15,8) plus Gaussian noise with SD of 0.255. We run the two methods many times to obtain the best results. In PPXA, the selected parameters are \(\theta =0.8,\) \(\mu =0.006\), and in the proposed method, the selected parameters are \(\alpha =0.000009,\) \(\beta =0.000009\). Figure 7 shows the recovered results of the two methods. As shown in Fig. 7, the images restored by the proposed method are better than those restored by PPXA: more details are recovered, and in particular the face of Lena looks smoother. Meanwhile, the PSNR and ISNR values obtained by the proposed method are higher than those obtained by the PPXA method.

Fig. 7 Deblurring of the Lena image with Gaussian blur kernel G(15,8) and Gaussian noise \(\sigma =0.255\). From left to right and from top to bottom, zoomed fragments of the following images are presented: blurred noisy, reconstructed by PPXA (PSNR = 30.35 dB, ISNR = 6.87 dB) and by the proposed TVframe method (PSNR = 31.46 dB, ISNR = 7.97 dB)

5 Conclusion

In this paper, we propose a new model for image deblurring by combining the wavelet frame and TV approaches. Experimental results demonstrate the superiority of the new model over TV-based, wavelet frame-based, and hybrid regularization methods. The proposed model exploits the ability of the wavelet frame to preserve image details and suppress staircasing artifacts, thereby alleviating the loss of texture caused by TV-based methods. In this paper, we mainly consider the synthesis model for image deblurring. The analysis model and the balanced model will be considered in future studies.

References

[1] M.V. Afonso, J.M. Bioucas-Dias, M.A.T. Figueiredo, Fast image recovery using variable splitting and constrained optimization. IEEE Trans. Image Process. 19(9), 2345–2356 (2010)

[2] A. Beck, M. Teboulle, A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2(1), 183–202 (2009)

[3] J.M. Bioucas-Dias, M.A.T. Figueiredo, A new TwIST: two-step iterative shrinkage/thresholding algorithms for image restoration. IEEE Trans. Image Process. 16(12), 2992–3004 (2007)

[4] J.F. Cai, R.H. Chan, Z. Shen, A framelet-based image inpainting algorithm. Appl. Comput. Harm. Anal. 24(2), 131–149 (2008)

[5] J.F. Cai, R. Chan, L. Shen, Z. Shen, Restoration of chopped and nodded images by framelets. SIAM J. Sci. Comput. 30(3), 1205–1227 (2008)

[6] J.F. Cai, S. Osher, Z. Shen, Split Bregman methods and frame based image restoration. Multisc. Model. Simul. 8(2), 337–369 (2009)

[7] J.F. Cai, R.H. Chan, L. Shen, Z. Shen, Convergence analysis of tight framelet approach for missing data recovery. Adv. Comput. Math. 31(1), 87–113 (2009)

[8] J.F. Cai, R.H. Chan, Z. Shen, Simultaneous cartoon and texture inpainting. Inverse Probl. Imaging 4(3), 379–395 (2010)

[9] J.F. Cai, Z. Shen, Framelet based deconvolution. J. Comput. Math. 28(3), 289–308 (2010)

[10] J.-F. Cai, H. Ji, C. Liu, Z. Shen, Framelet-based blind motion deblurring from a single image. IEEE Trans. Image Process. 21(2), 562–572 (2012)

[11] A.S. Carasso, Linear and nonlinear image deblurring: a documented study. SIAM J. Numer. Anal. 36(6), 1659–1689 (1999)

[12] A. Chai, Z. Shen, Deconvolution: a wavelet frame approach. Numer. Math. 106(4), 529–587 (2007)

[13] A. Chambolle, P.L. Lions, Image recovery via total variation minimization and related problems. Numer. Math. 76(2), 167–188 (1997)

[14] T.F. Chan, J. Shen, Theory and computation of variational image deblurring. Math. Comput. Imaging Sci. Inf. Process. 11, 93 (2007)

[15] T.F. Chan, G.H. Golub, P. Mulet, A nonlinear primal-dual method for total variation-based image restoration. SIAM J. Sci. Comput. 20(6), 1964–1977 (1999)

[16] R.H. Chan, T.F. Chan, L. Shen, Z. Shen, Wavelet algorithms for high-resolution image reconstruction. SIAM J. Sci. Comput. 24(4), 1408–1432 (2003)

[17] R.H. Chan, S.D. Riemenschneider, L. Shen, Z. Shen, Tight frame: an efficient way for high-resolution image reconstruction. Appl. Comput. Harm. Anal. 17(1), 91–115 (2004)

[18] R.H. Chan, Z. Shen, T. Xia, A framelet algorithm for enhancing video stills. Appl. Comput. Harm. Anal. 23(2), 153–170 (2007)

[19] I. Daubechies, B. Han, A. Ron, Z. Shen, Framelets: MRA-based constructions of wavelet frames. Appl. Comput. Harm. Anal. 14(1), 1–46 (2003)

[20] G. Demoment, Image reconstruction and restoration: overview of common estimation structures and problems. IEEE Trans. Acoust. Speech Signal Process. 37(12), 2024–2036 (1989)

[21] D.C. Dobson, F. Santosa, Recovery of blocky images from noisy and blurred data. SIAM J. Appl. Math. 56(4), 1181–1198 (1996)

[22] B. Dong, Z. Shen, in MRA Based Wavelet Frames and Applications. IAS Lecture Notes Series, Summer Program on the Mathematics of Image Processing (Park City Mathematics Institute, Salt Lake City, 2010)

[23] M. Elad, Sparse and Redundant Representations: From Theory to Applications in Signal and Image Processing (Springer, New York, 2010)

[24] M.A.T. Figueiredo, R.D. Nowak, An EM algorithm for wavelet-based image restoration. IEEE Trans. Image Process. 12(8), 906–916 (2003)

[25] M.A.T. Figueiredo, R.D. Nowak, A Bound Optimization Approach to Wavelet-Based Image Deconvolution (IEEE, New York, 2005), pp. II-782-5

[26] T. Goldstein, S. Osher, The split Bregman method for L1-regularized problems. SIAM J. Imaging Sci. 2(2), 323–343 (2009)

[27] M.R. Hestenes, Multiplier and gradient methods. J. Optim. Theory Appl. 4(5), 303–320 (1969)

[28] R.Q. Jia, H. Zhao, W. Zhao, Convergence analysis of the Bregman method for the variational model of image denoising. Appl. Comput. Harm. Anal. 27(3), 367–379 (2009)

[29] A.K. Katsaggelos, M. Schroeder, T. Kohonen, T. Huang, Digital Image Restoration (Springer, New York, 1991)

[30] Y.-R. Li, L. Shen, D.-Q. Dai, B.W. Suter, Framelet algorithms for de-blurring images corrupted by impulse plus Gaussian noise. IEEE Trans. Image Process. 20(7), 1822–1837 (2011)

[31] R. Neelamani, H. Choi, R. Baraniuk, Wavelet-Based Deconvolution for Ill-Conditioned Systems (IEEE, New York, 1999), pp. 3241–3244

[32] M. Nikolova, Local strong homogeneity of a regularized estimator. SIAM J. Appl. Math. 61(2), 633–658 (2000)

[33] J.P. Oliveira, J.M. Bioucas-Dias, M.A.T. Figueiredo, Adaptive total variation image deblurring: a majorization–minimization approach. Signal Process. 89(9), 1683–1693 (2009)

[34] M.J.D. Powell, A Method for Non-linear Constraints in Minimization Problems (UKAEA, Abingdon, 1967)

[35] N. Pustelnik, C. Chaux, J. Pesquet, Parallel proximal algorithm for image restoration using hybrid regularization. IEEE Trans. Image Process. 20(9), 2450–2462 (2011)

[36] P. Rodríguez, B. Wohlberg, Numerical methods for inverse problems and adaptive decomposition (NUMIPAD). Software library. http://numipad.sourceforge.net/ (2007)

[37] P. Rodríguez, B. Wohlberg, Efficient minimization method for a generalized total variation functional. IEEE Trans. Image Process. 18(2), 322–332 (2009)

[38] A. Ron, Z. Shen, Affine systems in \(L_2(R^d)\): the analysis of the analysis operator. J. Funct. Anal. 148(2), 408–447 (1997)

[39] L.I. Rudin, S. Osher, Total Variation Based Image Restoration with Free Local Constraints. in Proceedings of IEEE International Conference on Image Processing, II (IEEE, New York, 1994), pp. 31–35

[40] L.I. Rudin, S. Osher, E. Fatemi, Nonlinear total variation based noise removal algorithms. Phys. D 60(1), 259–268 (1992)

[41] Z. Shen, Wavelet frames and image restorations. in Proceedings of the International Congress of Mathematicians (IEEE, New York, 2010), pp. 2834–2863

[42] Z. Shen, K.C. Toh, S. Yun, An accelerated proximal gradient algorithm for frame-based image restoration via the balanced approach. SIAM J. Imaging Sci. 4(2), 573–596 (2011)

[43] X.C. Tai, C. Wu, in Augmented Lagrangian Method, Dual Methods and Split Bregman Iteration for ROF Model. Scale Space and Variational Methods in Computer Vision (Springer, Heidelberg, 2009), pp. 502–513

[44] M. Tao, J. Yang, B. He, Alternating direction algorithms for total variation deconvolution in image reconstruction. TR0918 (Department of Mathematics, Nanjing University, Nanjing, 2009)

[45] A. Tikhonov, V.Y. Arsenin, Methods for Solving Ill-Posed Problems. Scripta Series in Mathematics (Scripta Mathematics, New York, 1977) (1979)

[46] Y. Wang, W. Yin, Y. Zhang, A Fast Algorithm for Image Deblurring with Total Variation Regularization. CAAM Technical Report TR07–10 (2007)

[47] S.J. Wright, R.D. Nowak, M.A.T. Figueiredo, Sparse reconstruction by separable approximation. IEEE Trans. Signal Process. 57(7), 2479–2493 (2009)

[48] Q. Yuan, L. Zhang, H. Shen, Multiframe super-resolution employing a spatially weighted total variation model. IEEE Trans. Circuits Syst. Video Technol. 22(3), 379–392 (2012)

Acknowledgments

The authors are very grateful to the Editor and the two referees for their helpful comments and suggestions on the original version of the manuscript, which led to the improved version of the manuscript. This research was supported by the National Natural Science Foundation of China (No. 61001187, No. 41001256, No. 41101425, and No. 41301470).

Cite this article

Chen, F., Jiao, Y., Lin, L. et al. Image Deblurring Via Combined Total Variation and Framelet. Circuits Syst Signal Process 33, 1899–1916 (2014). https://doi.org/10.1007/s00034-013-9725-x

DOI: https://doi.org/10.1007/s00034-013-9725-x