Abstract

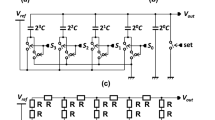

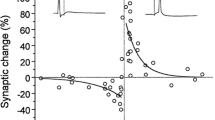

We propose a CMOS synapse circuit using a time-domain digital-to-analog converter (TDAC) for realizing spiking neural network hardware with on-chip learning. A TDAC has two advantages: (i) it can reproduce a post-synaptic potential, and (ii) the number of analog components it needs for DA conversion, such as current sources and capacitors, is independent of the bit width. We designed the synapse circuit in TSMC 40 nm technology, and its synaptic weight can be updated by either the remote supervised method (ReSuMe) or spike timing-dependent plasticity (STDP). Circuit simulations of the designed circuit show that it can execute ReSuMe and generate the STDP time-window function with high energy efficiency.
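The two learning rules named in the abstract can be illustrated with a short behavioral model. The following is a minimal sketch, assuming the common pair-based exponential STDP window and the spike-time form of the ReSuMe rule of Ponulak and Kasiński. It models the rules only, not the TDAC circuit that implements them in hardware. All amplitudes and time constants (`A_PLUS`, `A_MINUS`, `TAU_PLUS`, `TAU_MINUS`, `a`, `A`, `tau`) are hypothetical values chosen for illustration and are not taken from the paper.

```python
import math

# Hypothetical parameters: the abstract gives no amplitudes or time
# constants, so these values are illustrative only.
A_PLUS = 0.01     # STDP potentiation amplitude
A_MINUS = 0.012   # STDP depression amplitude
TAU_PLUS = 20.0   # STDP potentiation time constant (ms)
TAU_MINUS = 20.0  # STDP depression time constant (ms)


def stdp_window(dt: float) -> float:
    """Pair-based exponential STDP time window.

    dt = t_post - t_pre in ms. A positive dt (pre fires before post)
    potentiates the synapse; a negative dt (post fires before pre)
    depresses it.
    """
    if dt > 0:
        return A_PLUS * math.exp(-dt / TAU_PLUS)
    if dt < 0:
        return -A_MINUS * math.exp(dt / TAU_MINUS)
    return 0.0


def resume_delta_w(t_in, t_desired, t_out, a=0.001, A=0.01, tau=10.0):
    """ReSuMe weight change for one synapse, written in spike-time form.

    Every desired (teacher) spike adds a non-Hebbian term `a` plus an
    exponentially decaying contribution from each input spike at or
    before it; every actual output spike subtracts the same quantity.
    The change is therefore zero when the output train matches the
    desired train.
    """
    def learning_term(t):
        # a + sum over input spikes t_i <= t of A * exp(-(t - t_i) / tau)
        return a + sum(A * math.exp(-(t - ti) / tau) for ti in t_in if ti <= t)

    return (sum(learning_term(td) for td in t_desired)
            - sum(learning_term(to) for to in t_out))
```

For example, `resume_delta_w([5.0], [10.0], [10.0])` returns `0.0` because an output spike that coincides with the desired spike cancels its contribution, whereas a missing output spike (`t_out=[]`) yields a positive weight change and a spurious one (`t_desired=[]`) a negative change.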



Acknowledgements

This work was supported by the VLSI Design and Education Center (VDEC), the University of Tokyo, in collaboration with Cadence Design Systems, Inc., AMED under Grant Number JP20dm0307009, the UTokyo Center for Integrative Science of Human Behavior (CiSHuB), the International Research Center for Neurointelligence (WPI-IRCN) at The University of Tokyo Institutes for Advanced Study (UTIAS), and the BMAI (BrainMorphic AI) project at the Institute of Industrial Science, the University of Tokyo, in collaboration with NEC Corporation.


Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Uenohara, S., Aihara, K. A Trainable Synapse Circuit Using a Time-Domain Digital-to-Analog Converter. Circuits Syst Signal Process 42, 1312–1326 (2023). https://doi.org/10.1007/s00034-022-02168-3
