Abstract

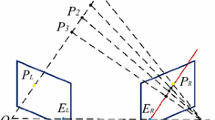

In this paper, we propose a high-speed, digitally processed microscopic observation system for telemicrooperation. It consists of a dynamic focusing system and a high-speed digital-processing system that uses the "depth from focus" criterion. In our previous work [10], we proposed a system that could simultaneously obtain an "all-in-focus image" and the "depth" of an object. In practice, a microscope's shallow depth of field makes it difficult to keep objects clearly visible during microoperation, especially in microsurgery and DNA studies. The all-in-focus image, which keeps the texture of the entire object in focus, is therefore useful for observing microenvironments with a microscope. However, the all-in-focus image carries no information about the objects' depth. To manipulate microobjects, it is also important to obtain a depth map and display the 3D microenvironment from any viewing angle in real time. Our earlier system, which combined a dynamic focusing lens with a smart sensor, needed 2 s to obtain the all-in-focus image and the depth. Real-time microoperation requires at least 30 frames per second, so the new system must be 60 times faster than the previous one (2 s per frame versus 1/30 s per frame). This paper briefly reviews the depth from focus criterion for simultaneously obtaining the all-in-focus image and reconstructing 3D microenvironments. After discussing the problems inherent in our earlier system, we describe a frame-rate system built with a high-speed video camera and FPGA (field programmable gate array) hardware. To adapt this system for use with a microscope, we propose new criteria that solve the "ghost problem" in reconstructing the all-in-focus image. Finally, microobservation experiments demonstrate the validity of this system.
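The depth from focus idea summarized above can be illustrated with a minimal sketch. It assumes a grayscale focal stack (one image per focus position of the lens, in order) and uses the sum-modified-Laplacian focus measure of Nayar and Nakagawa [8] as a stand-in; it is not the authors' FPGA criterion and does not address the ghost problem. For each pixel, the slice with the highest local focus measure supplies both the all-in-focus intensity and the depth index. All function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Image = List[List[float]]


def modified_laplacian(img: Image) -> Image:
    """Per-pixel modified Laplacian |d2I/dx2| + |d2I/dy2| with replicated borders."""
    h, w = len(img), len(img[0])

    def px(y: int, x: int) -> float:
        # Clamp coordinates so border pixels reuse their nearest valid neighbour.
        return img[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]

    return [
        [
            abs(2 * px(y, x) - px(y, x - 1) - px(y, x + 1))
            + abs(2 * px(y, x) - px(y - 1, x) - px(y + 1, x))
            for x in range(w)
        ]
        for y in range(h)
    ]


def window_sum(m: Image, radius: int) -> Image:
    """Sum values over a (2r+1) x (2r+1) window, truncated at the image borders."""
    h, w = len(m), len(m[0])
    return [
        [
            sum(
                m[yy][xx]
                for yy in range(max(0, y - radius), min(h, y + radius + 1))
                for xx in range(max(0, x - radius), min(w, x + radius + 1))
            )
            for x in range(w)
        ]
        for y in range(h)
    ]


def depth_from_focus(
    stack: Sequence[Image], radius: int = 1
) -> Tuple[Image, List[List[int]]]:
    """Return (all_in_focus, depth_index) for a focal stack.

    depth_index[y][x] is the index of the slice whose windowed focus
    measure is largest at (y, x); the all-in-focus pixel is copied from it.
    """
    focus = [window_sum(modified_laplacian(img), radius) for img in stack]
    h, w = len(stack[0]), len(stack[0][0])
    depth = [[0] * w for _ in range(h)]
    aif = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            best = max(range(len(stack)), key=lambda k: focus[k][y][x])
            depth[y][x] = best
            aif[y][x] = stack[best][y][x]
    return aif, depth


if __name__ == "__main__":
    # Slice 0 is textured on the left, slice 1 on the right (a toy focal stack).
    left = [[float((x + y) % 2) if x < 3 else 0.5 for x in range(6)] for y in range(4)]
    right = [[0.5 if x < 3 else float((x + y) % 2) for x in range(6)] for y in range(4)]
    _, depth = depth_from_focus([left, right])
    for row in depth:
        print(row)
```

In a real system, each depth index would be mapped to the lens focus position at which that slice was captured. Because the per-pixel computation is independent of every other pixel, this kind of criterion is a natural candidate for parallel hardware such as an FPGA.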


References

Brajovic V, Kanade T (1997) Sensory attention: computational sensor paradigm for low-latency adaptive vision. In: Proceedings of the DARPA image understanding workshop, New Orleans, May 1997

Kaneko T, Ohmi T, Ohya N, Kawahara N, Hattori T (1997) A new, compact, and quick-response dynamic focusing lens. In: Proceedings of the 9th international conference on solid-state sensors and actuators (Transducers’97), Chicago, 16-19 June 1997, pp 63-66

Kodama K, Aizawa K, Hatori M (1997) Acquisition of an all-focused image by the use of multiple differently focused images. Trans Inst Electron Inform Commun Eng D-II J80-D-II(9):2298-2307

Kundur SR, Raviv D (1996) Novel active-vision-based visual-threat-cue for autonomous navigation tasks. In: Proceedings of CVPR’96, San Francisco, June 1996, pp 606-612

MAPP (1997) User documentation MAPP2200 PCI system. IVP

Moini A (1997) Vision chips or seeing silicon. http://www.iee.et.tu-dresden.de/iee/eb/analog/papers/mirror/visionchips/vision+_+chips/vision+_+chips.html

Namiki A, Nakabo Y, Ishii I, Ishikawa M (2000) 1-ms sensory-motor fusion system. IEEE/ASME Trans Mechatron 5(3):244-252

Nayar SK, Nakagawa Y (1994) Shape from focus. IEEE Trans PAMI 16(8):824-831

Nayar SK, Watanabe M, Noguchi M (1995) Real-time focus range sensor. In: Proceedings of the international conference on computer vision (ICCV'95), Cambridge, MA, 20-23 June 1995, pp 995-1001

Pedraza Ortega JC, Ohba K, Tanie K, Rin G, Dangi R, Takei Y, Kaneko T, Kawahara N (2000) Real-time VR camera system. In: Proceedings of the 4th Asian conference on computer vision, Taipei, Taiwan, 8-11 January 2000, pp 503-513

Watanabe M, Nayar SK (1996) Minimal operator set for passive depth from defocus. In: Proceedings of CVPR'96, San Francisco, 18-20 June 1996, pp 431-438


Additional information

Received: 12 August 2001, Accepted: 17 July 2002, Published online: 12 November 2003

Correspondence to: Kohtaro Ohba


About this article

Cite this article

Ohba, K., Ortega, J.C.P., Tanie, K. et al. Microscopic vision system with all-in-focus and depth images. Machine Vision and Applications 15, 55–62 (2003). https://doi.org/10.1007/s00138-003-0125-2
