Abstract

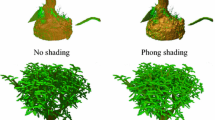

Immersive virtual environments with life-like interaction capabilities can give the user a high-fidelity view of the virtual world and seamless ways to interact with it. These demanding requirements, however, raise many challenges in the development of sensing technologies and display systems. This study focuses on improving human–computer interaction through rendering optimizations guided by head-pose estimates and their uncertainties. The work is part of a larger study currently under investigation at NASA Ames, called “Virtual GloveboX” (VGX). VGX is a virtual simulator that aims to provide advanced training and simulation capabilities for astronauts who perform precise biological experiments in a glovebox aboard the International Space Station (ISS). Our objective is to enhance the virtual experience by incorporating information about the user’s viewing direction into the rendering process. In our system, viewing direction is approximated by estimating head orientation from markers placed on a pair of polarized eyeglasses. Wearing eyeglasses imposes no additional constraint in our operational environment, since they are an integral part of the stereo display used in VGX. During rendering, perceptual level-of-detail methods are coupled with head-pose estimation to improve the visual experience. A key contribution of our work is incorporating head-pose estimation uncertainty into the level-of-detail computations to account for head-pose estimation errors. Subject tests designed to quantify user satisfaction under different modes of operation indicate that incorporating uncertainty information during rendering improves the visual experience of the user.
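To make the idea of uncertainty-aware level of detail concrete, the following is a minimal sketch and not the method used in the paper. It assumes the head-pose uncertainty is summarized as a single angular standard deviation `sigma_deg`, and that the level of detail is chosen from an object's angular distance to the estimated viewing direction. The function names, the safety factor `k`, and the threshold values are all hypothetical and chosen for illustration only.

```python
import math


def angle_deg(u, v):
    """Angle in degrees between two non-zero 3D vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    # Clamp to guard against rounding slightly outside [-1, 1].
    cos_angle = max(-1.0, min(1.0, dot / (norm_u * norm_v)))
    return math.degrees(math.acos(cos_angle))


def conservative_eccentricity(view_dir, object_dir, sigma_deg, k=2.0):
    """Eccentricity of an object relative to the estimated viewing direction,
    reduced by k standard deviations of the pose error (assumed model).

    A larger uncertainty pulls the object toward the center of the view,
    so it is less likely to be rendered too coarsely by mistake.
    """
    return max(0.0, angle_deg(view_dir, object_dir) - k * sigma_deg)


def select_lod(ecc_deg, thresholds_deg=(5.0, 15.0, 30.0)):
    """Return 0 for the finest level; higher numbers are coarser.

    The thresholds are illustrative values, not taken from the paper.
    """
    for level, limit in enumerate(thresholds_deg):
        if ecc_deg <= limit:
            return level
    return len(thresholds_deg)


if __name__ == "__main__":
    view = (0.0, 0.0, -1.0)  # estimated viewing direction
    a = math.radians(20.0)
    obj = (math.sin(a), 0.0, -math.cos(a))  # object 20 degrees off-axis

    for sigma in (0.0, 3.0):
        ecc = conservative_eccentricity(view, obj, sigma)
        print(f"sigma={sigma} deg -> LOD {select_lod(ecc)}")
```

In this sketch, an object 20 degrees away from the estimated viewing direction is drawn one level finer when the pose estimate carries a 3 degree standard deviation than when it is treated as exact. That is the qualitative behavior the abstract describes: a less certain estimate leads to more conservative simplification.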

Cite this article

Martinez, J.E., Erol, A., Bebis, G. et al. Integrating perceptual level of detail with head-pose estimation and its uncertainty. Machine Vision and Applications 21, 69 (2009). https://doi.org/10.1007/s00138-008-0142-2

DOI: https://doi.org/10.1007/s00138-008-0142-2