Abstract

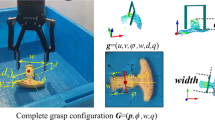

Novel object grasping is an important technology for robot manipulation in unstructured environments. Most current approaches rely on a grasp sampling process to obtain grasp candidates, combined with a deep-learning-based local feature extractor. However, this pipeline is time-consuming, especially when graspable points are sparse, such as at the edge of a bowl. To tackle this problem, our algorithm takes the whole sparse point cloud as input and requires no sampling or search process. Our method consists of two steps. The first step predicts grasp poses, categories and scores (qualities) using an SPH3D-GCN network. The second step is an iterative grasp pose refinement, which refines the best grasp generated in the first step. The weight sizes for these two steps are only about 0.81M and 0.52M, respectively, and a whole prediction, including the iterative grasp pose refinement, takes about 73 ms on a GeForce 840M GPU. Moreover, to generate training data for multi-object scenes, we build a single-object dataset (79 objects from the YCB object set, 23.7k grasps) and a multi-object dataset (20k point clouds with annotations and masks), combined with grasp planning for thin structures. Our experiments show that our method achieves a 76.67% success rate and a 94.44% completion rate, outperforming current state-of-the-art methods.
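The two-step structure described in the abstract (pick the best predicted grasp, then refine it iteratively) can be illustrated with a minimal sketch. This is not the authors' implementation: the function names, the binary "graspable" label convention, the 4x4 homogeneous pose representation, the stopping rule, and the toy correction function standing in for the refinement network are all assumptions made for illustration.

```python
import numpy as np

# Hypothetical target position used only by the toy correction below.
TARGET = np.array([0.10, 0.00, 0.05])


def select_best_grasp(poses, categories, scores, graspable_label=1):
    """Return the highest-scoring pose among candidates labelled graspable.

    poses: (N, 4, 4) homogeneous transforms, categories: (N,) ints,
    scores: (N,) floats. The binary label convention is an assumption.
    """
    poses = np.asarray(poses, dtype=float)
    categories = np.asarray(categories)
    scores = np.asarray(scores, dtype=float)
    mask = categories == graspable_label
    if not mask.any():
        raise ValueError("no candidate is labelled graspable")
    # Exclude non-graspable candidates from the argmax.
    masked_scores = np.where(mask, scores, -np.inf)
    return poses[int(np.argmax(masked_scores))]


def refine_grasp(pose, predict_correction, max_iters=5, tol=1e-4):
    """Compose predicted corrections onto the pose until they are negligible.

    predict_correction is a hypothetical stand-in for a refinement network:
    it maps a (4, 4) pose to a (4, 4) correction in the gripper frame.
    """
    pose = np.asarray(pose, dtype=float)
    for _ in range(max_iters):
        delta = predict_correction(pose)
        pose = pose @ delta
        # Stop once the correction is close to the identity transform.
        if np.linalg.norm(delta - np.eye(4)) < tol:
            break
    return pose


def toy_correction(pose):
    """Toy stand-in: move the gripper halfway towards TARGET."""
    delta = np.eye(4)
    # Express the world-frame offset in the gripper frame (R^T @ offset).
    delta[:3, 3] = pose[:3, :3].T @ (0.5 * (TARGET - pose[:3, 3]))
    return delta
```

In a real system the candidate poses, categories and scores would come from the first-stage network's forward pass over the whole point cloud, and `toy_correction` would be replaced by the learned refinement model; the sketch only shows how the two stages hand off a single best grasp.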

Acknowledgements

This research was supported in part by the National Natural Science Foundation of China under Grants 61673261 and 61703273, and by the National Key Research and Development Project of China (2018YFB1307702). We would like to thank Professor Cewu Lu for his generous help and insightful advice.

Cite this article

Ni, P., Zhang, W., Zhu, X. et al. Learning an end-to-end spatial grasp generation and refinement algorithm from simulation. Machine Vision and Applications 32, 10 (2021). https://doi.org/10.1007/s00138-020-01127-9

DOI: https://doi.org/10.1007/s00138-020-01127-9