Abstract

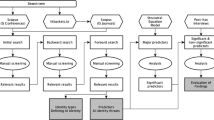

Because some AI algorithms with high predictive power have impacted human integrity, safety has become a crucial challenge in adopting and deploying AI. Although it is impossible to prevent an algorithm from ever failing in complex tasks, it is crucial to ensure that it fails safely, especially when it is part of a critical system. Moreover, given AI’s unbridled development, it is imperative to minimize the methodological gaps in the engineering of these systems. This paper uses the well-known Box-Jenkins method for statistical modeling as a framework to identify engineering pitfalls in the adjustment and validation of AI models. Step by step, we point out state-of-the-art strategies and good practices to tackle these engineering drawbacks. In the final step, we integrate an internal and external validation scheme that might support an iterative evaluation of the normative, perceived, substantive, social, and environmental safety of all AI systems.

Acknowledgements

This research is supported by the Consortium for Research and Innovation in Aerospace in Québec (CRIAQ) and funded by the Mitacs Accelerate program. The findings and conclusions in this report are those of the authors.

Ethics declarations

Conflict of interest

The authors declare that there is no conflict of interest.

About this article

Cite this article

Morales-Forero, A., Bassetto, S. & Coatanea, E. Toward safe AI. AI & Soc 38, 685–696 (2023). https://doi.org/10.1007/s00146-022-01591-z

DOI: https://doi.org/10.1007/s00146-022-01591-z