Abstract

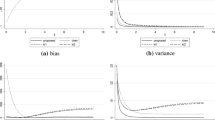

In this paper we propose a bias-reducing technique for kernel distribution function estimation. The technique uses a convex combination of three kernel estimators and reduces the bias to the order of the fourth power of the bandwidth, whereas the bias of the standard kernel distribution function estimator is of the order of the second power of the bandwidth. The variance of the proposed estimator remains of the same order as that of the kernel distribution function estimator. Numerical results from simulation studies confirm these properties.

References

Altman N, Leger C (1995) Bandwidth selection for kernel distribution function estimation. J Stat Plan Inference 46:195–214

Azzalini A (1981) A note on the estimation of a distribution function and quantiles by a kernel method. Biometrika 68:326–328

Bowman A, Hall P, Prvan T (1998) Bandwidth selection for the smoothing of distribution functions. Biometrika 85:799–808

Cheng M-Y, Choi E, Fan J, Hall P (2000) Skewing methods for two-parameter locally parametric density estimation. Bernoulli 6:169–182

Choi E, Hall P (1998) On bias reduction in local linear smoothing. Biometrika 85:333–345

Kaplan EL, Meier P (1958) Nonparametric estimation from incomplete observations. J Am Stat Assoc 53:457–481

Kim C, Kim W, Park B-U (2003) Skewing and generalized jackknifing in kernel density estimation. Commun Stat Theory Methods 32:2153–2162

Nadaraya EA (1964) Some new estimates for distribution functions. Theory Prob Appl 15:497–500

Reiss R-D (1981) Nonparametric estimation of smooth distribution functions. Scand J Stat 8:116–119

Sarda P (1993) Smoothing parameter selection for smooth distribution functions. J Stat Plan Inference 35:65–75

Silverman BW (1986) Density estimation for statistics and data analysis. Chapman and Hall, London

Schucany WR, Sommers JP (1977) Improvement of kernel type estimators. J Am Stat Assoc 72:420–423

Wand MP, Jones MC (1995) Kernel smoothing. Chapman and Hall, London

Acknowledgements

This research was supported by Korea Science and Engineering Foundation grant (R14-2003-002-01000-0). The authors thank the editor and referees for their helpful comments, which greatly improved the paper.

Appendix

Proof of Theorem 1

By a Taylor expansion

and by using the following facts:

we have

Also, it is easy to show that

Hence,

Therefore, \(E \tilde{F}(x)\) can be computed by letting \(l = 0\), \(l_1\) and \(l_2\), and the terms in \(h^2\) and \(h^3\) vanish if and only if

and

By letting \(-l_1 = l_2 = l\), the two equations imply

Finally, if we substitute \(\lambda_1 = \lambda_2 = \lambda\) and \(-l_1 = l_2 = l = \{\mu_2(2\lambda + 1)/(2\lambda)\}^{1/2}\) into \(E \tilde{F}(x)\), then we get the desired result for the bias part. Similar computations, though quite tedious, give the variance part. First, note that

Now, we will compute each term on the right-hand side of \(\operatorname{Var}[\tilde{F}]\) except \(\operatorname{Var}(\widehat{F})\), which is given in Sect. 2. Let

then, for the first term, we have

Now,

because

and

Also, it can be easily shown that

and

Therefore,

Similar computations give

since

Now, we have

and

By adding up all these terms we have the desired result for the variance.
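The bias part can be checked with a short worked expansion. This is a sketch under stated assumptions, not a reproduction of the proof's own displays: the estimator's definition is not restated in this appendix, so the block assumes a symmetric kernel with second moment \(\mu_2\), a sufficiently smooth density \(f\), and building blocks of the form \(\widehat{F}_l(x) = \widehat{F}(x + lh) - lh\,\widehat{f}(x + lh)\), where \(\widehat{f}\) is the kernel density estimator. The notation \(\widehat{F}_l\) is introduced here for illustration only.

```latex
% Assumed building block: \widehat{F}_l(x) = \widehat{F}(x + lh) - lh\,\widehat{f}(x + lh)
\begin{align*}
E\widehat{F}(y) &= F(y) + \tfrac{h^2}{2}\mu_2 f'(y) + O(h^4), \\
E\widehat{f}(y) &= f(y) + \tfrac{h^2}{2}\mu_2 f''(y) + O(h^4), \\
E\widehat{F}_l(x) &= F(x) + \tfrac{h^2}{2}\,(\mu_2 - l^2)\, f'(x)
                    - \tfrac{h^3}{3}\, l^3 f''(x) + O(h^4).
\end{align*}
% Assumed combination:
% \tilde{F} = (\lambda_1 \widehat{F}_{l_1} + \widehat{F} + \lambda_2 \widehat{F}_{l_2})
%             / (\lambda_1 + \lambda_2 + 1)
\begin{align*}
\text{terms in } h^2 \text{ vanish} &\iff
  (\lambda_1 + \lambda_2 + 1)\,\mu_2 = \lambda_1 l_1^2 + \lambda_2 l_2^2, \\
\text{terms in } h^3 \text{ vanish} &\iff
  \lambda_1 l_1^3 + \lambda_2 l_2^3 = 0.
\end{align*}
% With -l_1 = l_2 = l the second condition gives \lambda_1 = \lambda_2 = \lambda,
% and the first becomes (2\lambda + 1)\mu_2 = 2\lambda l^2, that is
\[
  l = \left\{ \frac{\mu_2 (2\lambda + 1)}{2\lambda} \right\}^{1/2}.
\]
```

Under these assumptions the resulting value of \(l\) agrees with the one substituted in the proof, and the leading bias term that remains is of order \(h^4\).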

Cite this article

Kim, C., Kim, S., Park, M. et al. A bias reducing technique in kernel distribution function estimation. Computational Statistics 21, 589–601 (2006). https://doi.org/10.1007/s00180-006-0016-x
